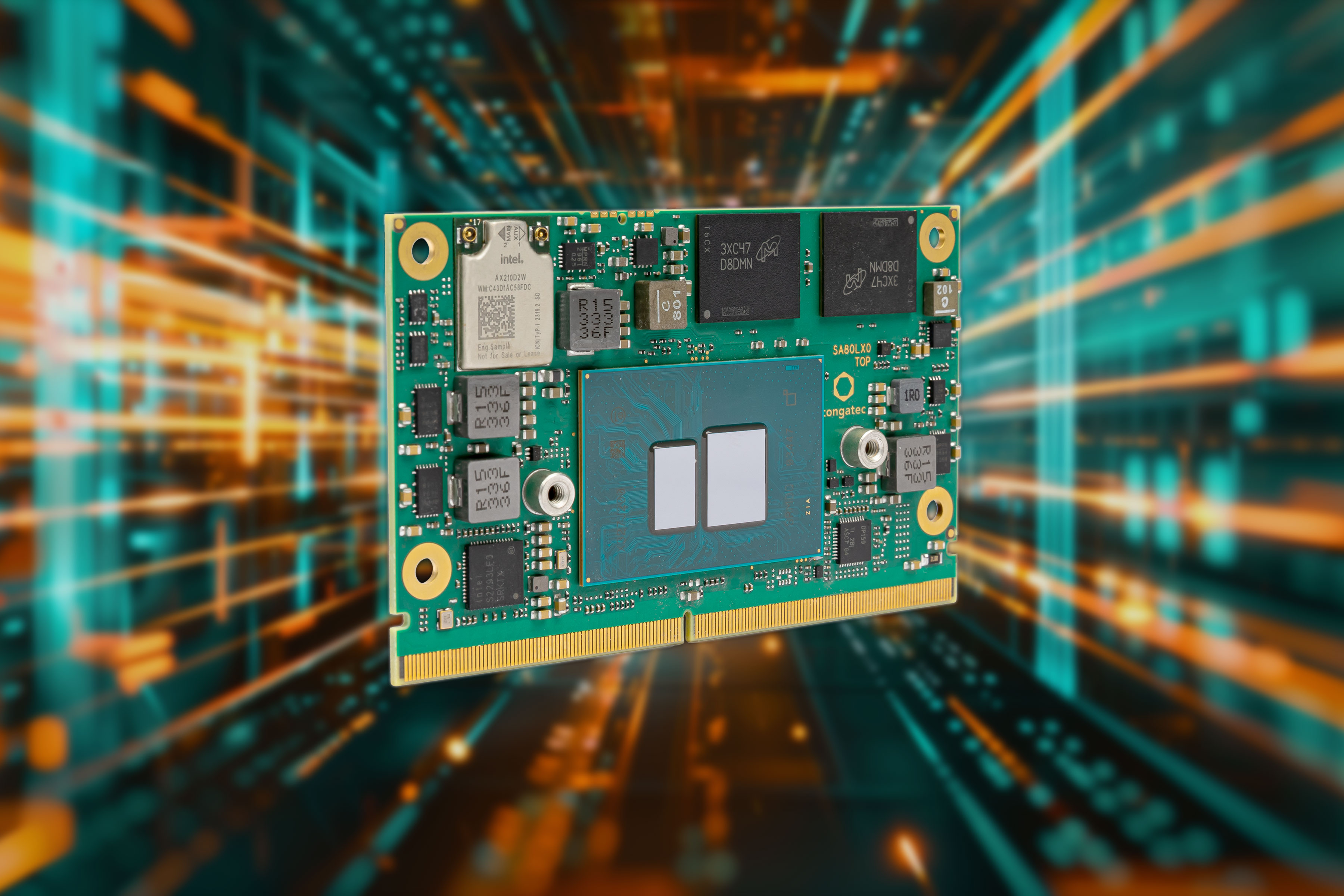

Congatec’s new conga-SA8 SMARC modules are powered by Intel Atom x7000RE “Amston Lake” processors. With twice as many processing cores as the previous generation at similar power consumption, congatec’s new credit-card-sized modules are “intended for future-facing industrial edge computing and powerful virtualization.” An Intel Core i3-N305 Alder Lake-N processor is also offered as an alternative to the Intel Atom x7000RE series for high-performance IoT edge applications. The conga-SA8 modules support up to 16GB of onboard LPDDR5 memory and 256GB of onboard eMMC 5.1 flash, and offer several high-bandwidth interfaces such as USB 3.2 Gen 2, PCIe Gen 3, and SATA Gen 3. The integrated Intel UHD Gen 12 GPU has up to 32 execution units and can drive three independent 4K displays. The conga-SA8 is described as virtualization-ready, with a hypervisor (virtual machine monitor) integrated into the firmware. The RTS hypervisor takes full advantage of the eight processing cores […]

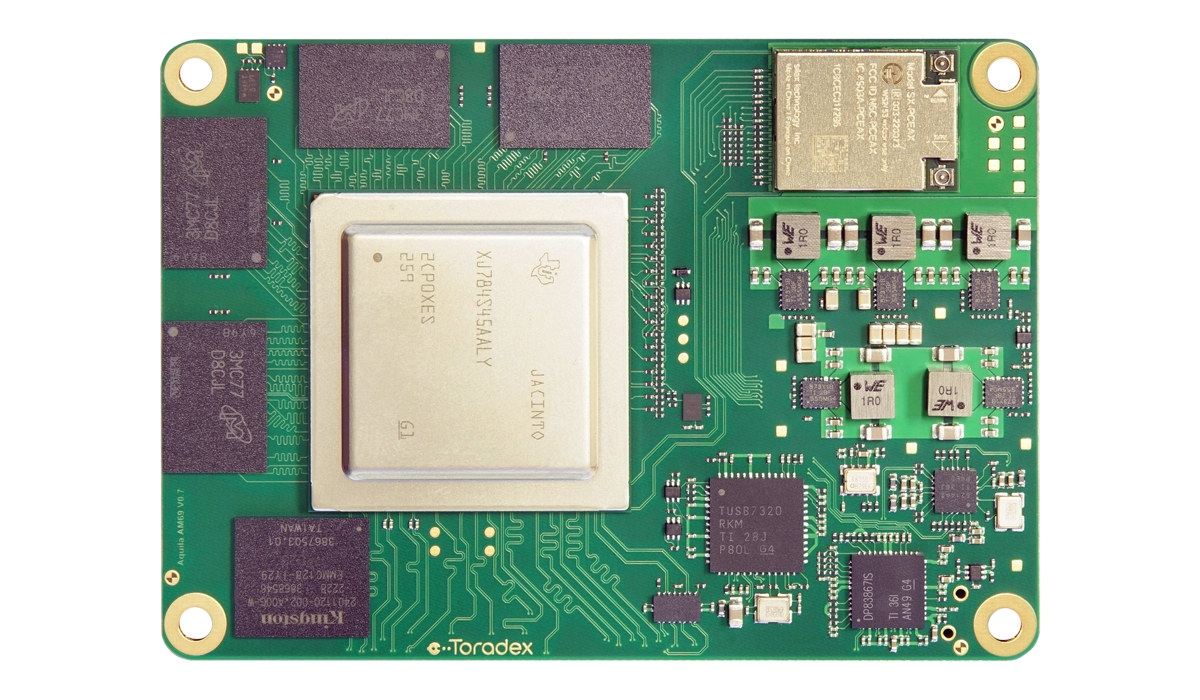

Toradex Aquila AM69 SoM features TI AM69A octa-core Cortex-A72 AI SoC, rugged 400-pin board-to-board connector

Toradex Aquila AM69 is the first system-on-module (SoM) in the company’s Aquila family, which combines a small form factor with a rugged ~400-pin board-to-board connector. The Aquila family targets demanding edge AI applications in the medical, industrial, and robotics fields with Arm platforms that deliver x86-level performance at low power. The Aquila AM69 SoM is powered by a Texas Instruments AM69A octa-core Arm Cortex-A72 SoC with four accelerators delivering 32 TOPS of AI performance, and comes with up to 32GB LPDDR4, 128GB eMMC flash, a built-in WiFi 6E and Bluetooth 5.3 module, and a board-to-board connector exposing display, camera, and audio interfaces, as well as dual gigabit Ethernet and multiple PCIe Gen3 and SerDes interfaces. All that fits in a form factor (86×60mm) that’s only slightly bigger than a business card or a Raspberry Pi 5.

Toradex Aquila AM69 specifications:

- SoC – Texas Instruments AM69A
  - Application processor – Up to 8x Arm Cortex-A72 cores at up to 2.0 GHz […]

Orange Pi Developer Conference 2024, upcoming Orange Pi SBCs and products

Orange Pi held a Developer Conference on March 24, 2024, in Shenzhen, China. While I could not make it, the company provided photos of the event, where people discussed upcoming boards and products, as well as software support for Orange Pi SBCs. So I’ll go through some of the photos to check out what was discussed and what’s coming. While Orange Pi is mostly known for its development boards, the company has also been working on consumer products, including the Orange Health Watch D Pro and the OrangePi Neo handheld console. The Orange Pi Watch D Pro is said to implement non-invasive blood glucose monitoring, blood pressure monitoring, one-click “micro-physical examination”, and other functions to help users monitor their health. The Watch D Pro uses a technique that emits a green light to measure glucose levels in the blood, and we’re told it’s accurate enough to […]

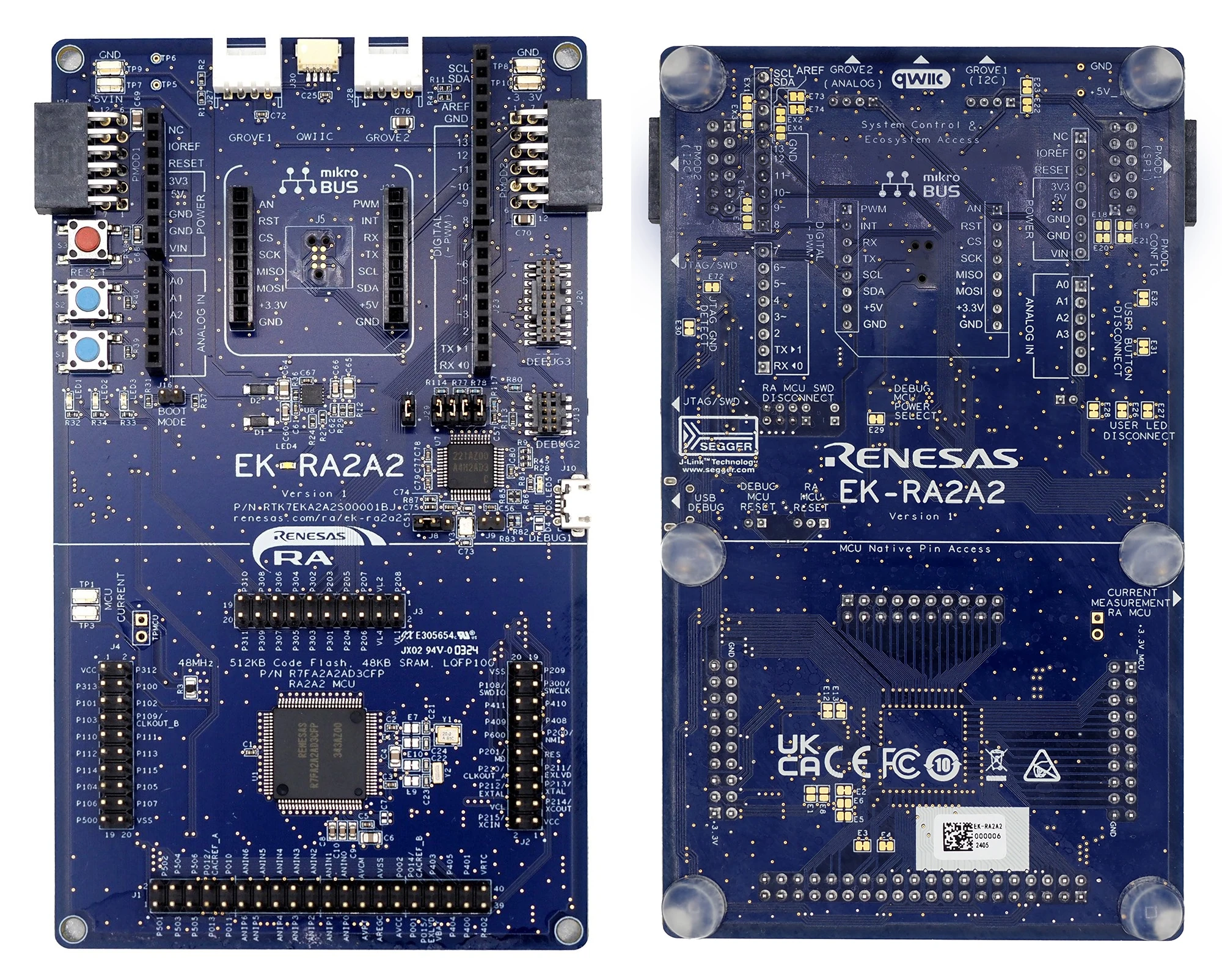

Renesas RA2A2 Arm Cortex-M23 microcontroller offers high-resolution 24-bit ADC, up to 512KB dual-bank flash

The Renesas Electronics RA2A2 Arm Cortex-M23 microcontroller (MCU) group offers a 7-channel high-resolution 24-bit Sigma-Delta ADC, as well as dual-bank flash with a bank swap function that makes firmware over-the-air (FOTA) updates easier to implement. The 48MHz MCU also comes with 48KB SRAM, up to 512KB code flash, various interfaces, and safety and security features that make it suitable for smart energy management, building automation, medical devices, consumer electronics, and other IoT applications that can benefit from high-resolution analog inputs and firmware updates.

Renesas RA2A2 specifications:

- MCU core – Arm Cortex-M23 Armv8-M core clocked at up to 48 MHz
  - Arm Memory Protection Unit (Arm MPU) with 8 regions
- Memory
  - 48 KB SRAM
  - Memory Protection Units (MPU)
  - Memory Mirror Function (MMF)
- Storage
  - Up to 512 KB code flash memory in dual bank (256 KB × 2 banks); bank swap support
  - 8 KB data flash memory (100,000 program/erase (P/E) cycles)
- Peripheral interfaces
  - Segment LCD […]
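A dual-bank layout lets the MCU keep executing from one bank while an update is written to the other, and only switch banks once the new image has been verified. The Python sketch below models that flow under stated assumptions: `DualBankFlash`, `fota_update`, the CRC-32 check, and the 0xFF erased state are hypothetical illustrations, not the Renesas FSP flash API or the RA2A2 bank swap registers, and the 2 × 256 KB banks are simulated with byte arrays.

```python
import zlib

# RA2A2: up to 512 KB code flash, split into 2 x 256 KB banks
BANK_SIZE = 256 * 1024
ERASED = b"\xff" * BANK_SIZE  # modeled erased state (assumption)


class DualBankFlash:
    """Toy model of dual-bank code flash with a bank swap flag.

    Hypothetical illustration only: not the Renesas FSP API or RA2A2 registers.
    """

    def __init__(self, initial_image: bytes) -> None:
        self.banks = [bytearray(ERASED), bytearray(ERASED)]
        self.active = 0  # bank the MCU boots and executes from
        self._program(0, initial_image)

    def _program(self, bank: int, image: bytes) -> None:
        """Erase a bank, then write the image at its start."""
        if len(image) > BANK_SIZE:
            raise ValueError("image does not fit in one bank")
        self.banks[bank][:] = ERASED
        self.banks[bank][: len(image)] = image

    def running_image(self, length: int) -> bytes:
        """Return the first `length` bytes of the currently active bank."""
        return bytes(self.banks[self.active][:length])

    def fota_update(self, image: bytes, expected_crc: int) -> bool:
        """Write `image` to the inactive bank; swap banks only if the CRC matches."""
        inactive = 1 - self.active
        self._program(inactive, image)  # the active bank keeps running meanwhile
        written = bytes(self.banks[inactive][: len(image)])
        if zlib.crc32(written) != expected_crc:
            return False  # verification failed: keep booting the old firmware
        self.active = inactive  # "bank swap": next boot uses the new image
        return True


if __name__ == "__main__":
    flash = DualBankFlash(b"firmware v1")
    new = b"firmware v2"
    print(flash.fota_update(new, zlib.crc32(new)), flash.active)  # True 1
```

If verification fails, the active bank is never switched, so the device keeps booting the previous firmware. That built-in fallback is the main reason a dual-bank layout simplifies FOTA compared to overwriting a single bank in place.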

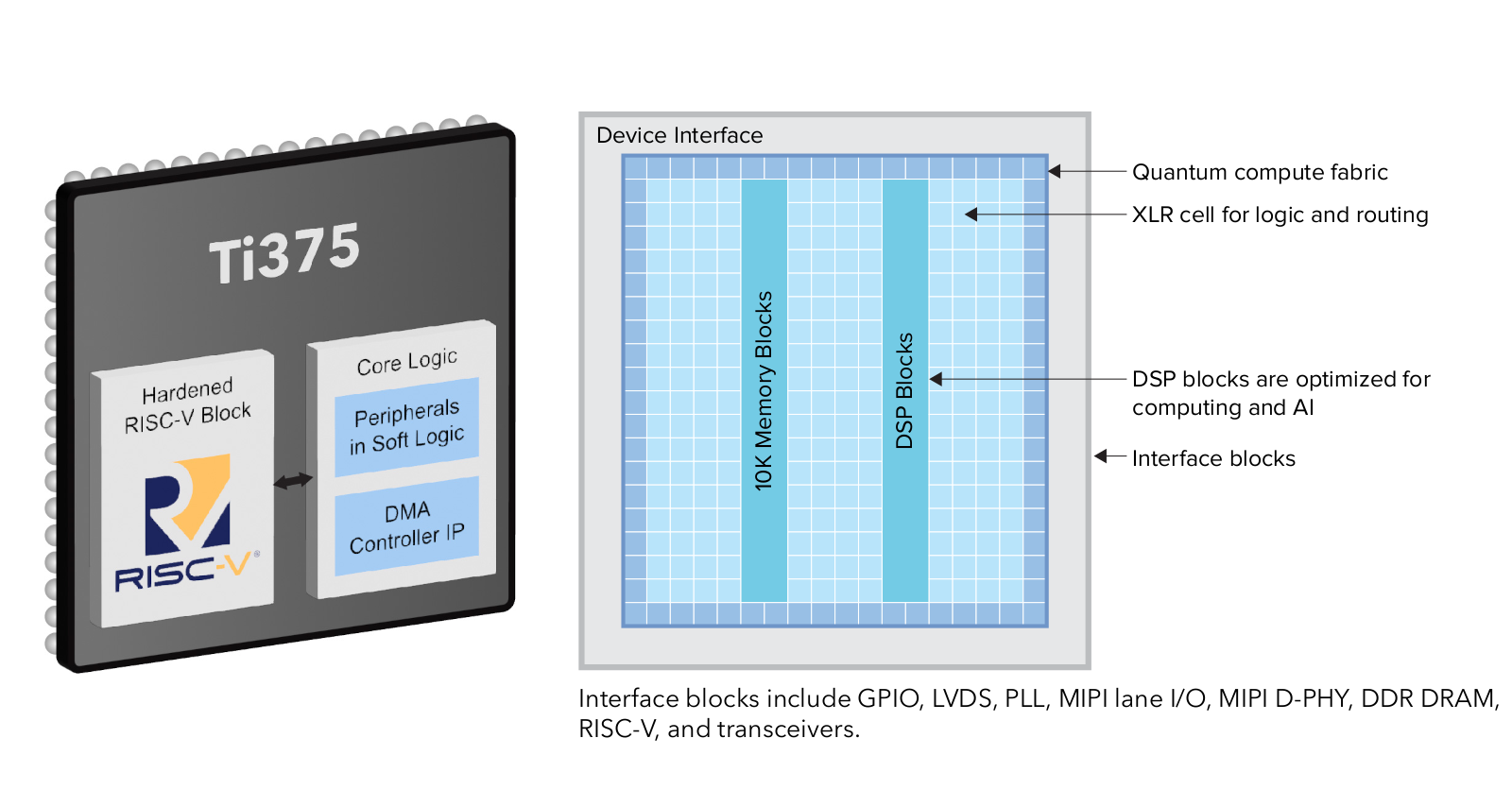

Efinix Titanium Ti375 FPGA offers quad-core hardened RISC-V block, PCIe Gen 4, 10GbE

Efinix Titanium Ti375 SoC combines a high-density, low-power Quantum compute fabric with a quad-core hardened 32-bit RISC-V block, and features an LPDDR4 DRAM controller, a MIPI D-PHY for displays or cameras, and 16 Gbps transceivers enabling PCIe Gen 4 and 10GbE interfaces. The Titanium Ti375 also comes with 370K logic elements, 1,344 DSP blocks optimized for compute and AI workloads, 2,688 10-Kbit SRAM blocks, and 27.53 Mbits of embedded memory, as well as XLR (eXchangeable Logic and Routing) cells for logic and routing.

Efinix Titanium Ti375 specifications:

- FPGA compute fabric
  - 370,137 logic elements (LEs)
  - 362,880 eXchangeable Logic and Routing (XLR) cells
  - 27.53 Mbits embedded memory
  - 2,688 10-Kbit SRAM blocks
  - 1,344 embedded DSP blocks for multiplication, addition, subtraction, accumulation, and up to 15-bit variable-right-shifting
  - Memory – 10-Kbit high-speed embedded SRAM, configurable as single-port RAM, simple dual-port RAM, true dual-port RAM, or ROM
- FPGA interface blocks
  - 32-bit quad-core hardened RISC-V block […]
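The 27.53 Mbit embedded memory figure appears to be accounted for by the SRAM blocks alone. This check assumes each "10-Kbit" block holds 10 × 1,024 bits and that the total is quoted in decimal megabits (10^6 bits); those unit conventions are an assumption, not something stated in the excerpt:

$$
2{,}688 \times 10 \times 1{,}024\ \text{bits} = 27{,}525{,}120\ \text{bits} \approx 27.53\ \text{Mbit}
$$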

STMicro announces ultra-low-power STM32U0 MCU, unveils 18nm FD-SOI process for STM32 microcontrollers

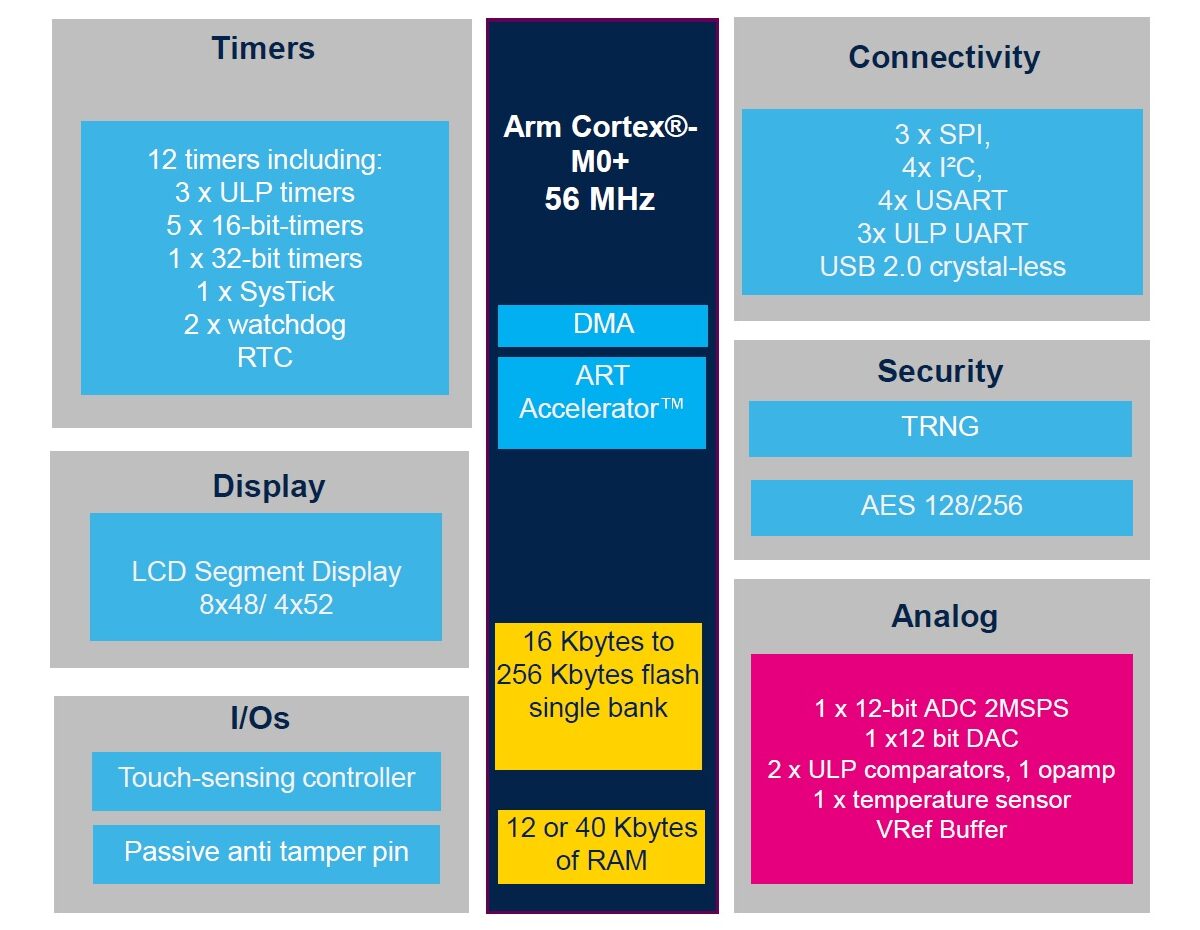

STMicro has announced the ultra-low-power STM32U0 Arm Cortex-M0+ microcontroller family, running at up to 56 MHz, which reduces energy consumption by up to 50% compared to previous product generations such as STM32C0 or STM32L0, and targets SESIP Level 3, PSA Certified Level 1, and NIST certifications. Separately, the company also introduced a new 18nm FD-SOI manufacturing process for STM32 microcontrollers that will replace the 40nm process currently used.

STMicro STM32U0 key features and specifications:

- MCU Core – Cortex-M0+ up to 56 MHz with ART accelerator
- Memory / Storage
  - STM32U031x – 12 KB SRAM, 16 to 64 KB flash
  - STM32U073x – 40 KB SRAM, 16 to 256 KB flash
  - STM32U083x – 40 KB SRAM, 256 KB flash
- Display – LCD controller for 8×48 or 4×52 segment displays (STM32U073, STM32U083)
- Peripherals
  - 3x I2C, 2x SPI, 4x USART, 2x low-power UART
  - Up to 21x capacitive sensing channels
- USB – 1x USB 2.0 device […]

Remi Pi is a compact, low-cost SBC powered by a Renesas RZ/G2L Cortex-A55/M33 SoC

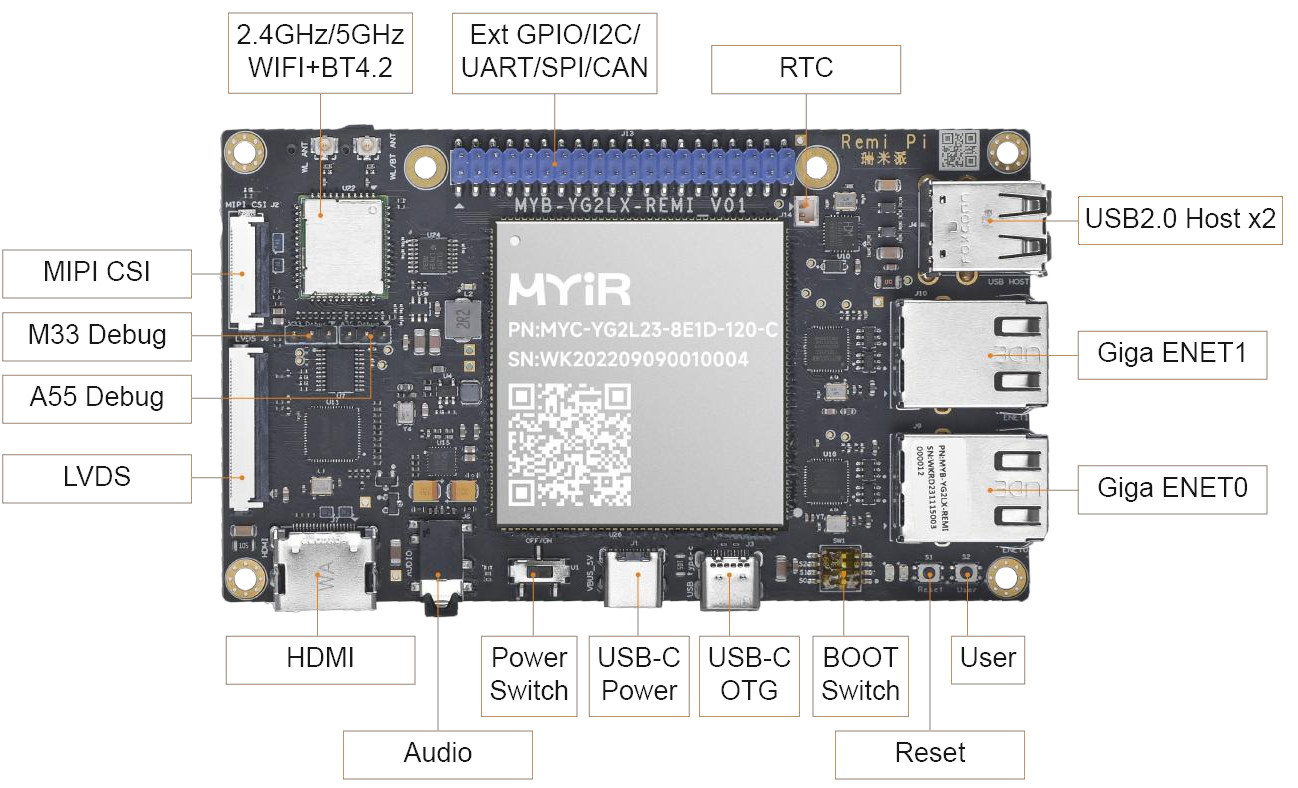

MYiR Tech Remi Pi is a low-cost SBC based on the company’s MYC-YG2LX CPU module, featuring a Renesas RZ/G2L Arm Cortex-A55/M33 processor, 1GB RAM, 8GB eMMC flash, and plenty of ports and interfaces. Those include two gigabit Ethernet ports, a wireless module with dual-band WiFi 4 and Bluetooth 4.2 connectivity, HDMI and LVDS display interfaces, a MIPI CSI camera input, a 3.5mm audio jack, a few USB ports, and a 40-pin GPIO header compatible with the one on the popular Raspberry Pi SBCs.

Remi Pi specifications:

- System-on-Module – MYiR MYC-YG2L23 module with
  - SoC – Renesas RZ/G2L processor (R9A07G044L23GBG)
    - CPU
      - 1.2 GHz dual-core Arm Cortex-A55 processor
      - 200 MHz Arm Cortex-M33 real-time core
    - GPU – Arm Mali-G31 3D GPU
    - VPU – H.264 decoding/encoding
  - System Memory – 1GB DDR4
  - Storage – 8GB eMMC flash, 32KB EEPROM
  - PMIC – Renesas RAA215300 power management IC
- Storage – MicroSD card slot
- Display interfaces
  - HDMI video output
  - LVDS […]

Allwinner T527 System-on-Module features octa-core Cortex-A55 CPU, 2 TOPS AI accelerator

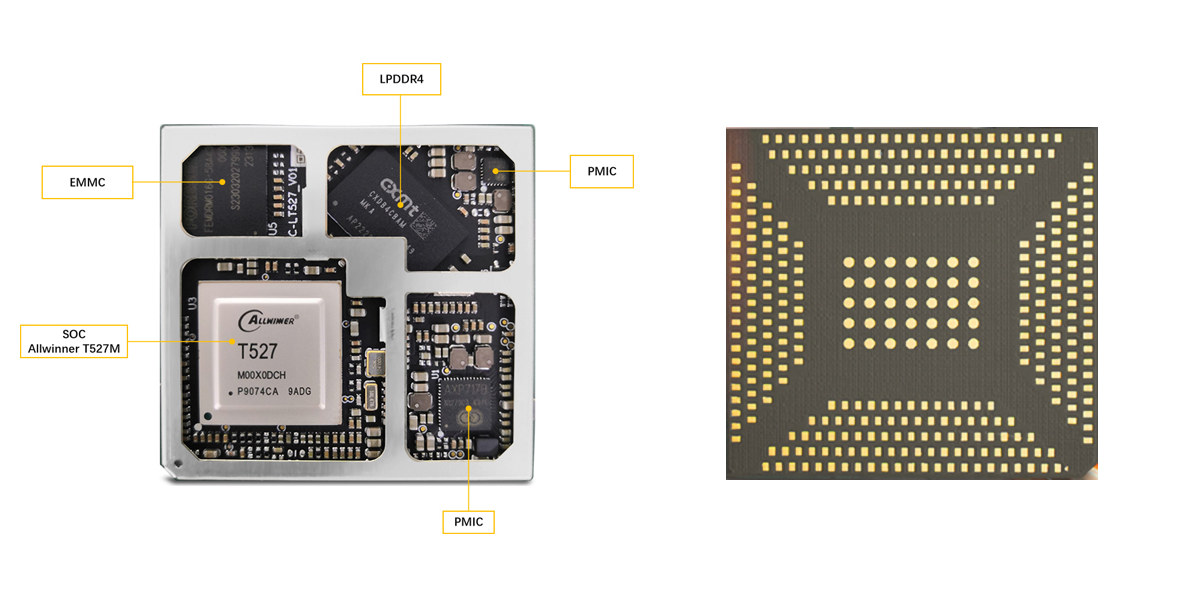

MYiR MYC-LT527 is a compact System-on-Module (SoM) based on the Allwinner T527 octa-core Arm Cortex-A55 SoC with a 2 TOPS AI accelerator. It comes with up to 4GB RAM, 32GB flash, and a land grid array (LGA) of 381 pads exposing a range of interfaces for displays and cameras, networking, USB, PCIe, and more. The company also introduced the MYD-LT527 development board to showcase the capabilities of the Allwinner T527 CPU module, which is suitable for applications such as industrial robots, energy and power management, medical equipment, display and controller machines, edge AI boxes and boards, automotive dashboards, and embedded devices that require media and AI functionalities.

MYC-LT527 specifications:

- SoC – Allwinner T527
  - CPU
    - Octa-core Arm Cortex-A55 processor with four cores @ 1.80 GHz and four cores @ 1.42 GHz
    - E906 RISC-V core up to 200 MHz
  - DSP – 600MHz HiFi4 audio DSP
  - GPU – Arm Mali-G57 MC1 […]