AAEON BOXER-8645AI is an embedded AI system powered by NVIDIA Jetson AGX Orin. It features eight GMSL2 connectors that work with e-con Systems’ NileCAM25 Full HD global shutter GMSL2 color cameras over cables up to 15 meters long. The BOXER-8645AI is fitted with the Jetson AGX Orin 32GB module with 32GB LPDDR5 and 64GB flash, delivering up to 200 TOPS of AI performance. Other features include M.2 NVMe and 2.5-inch SATA storage, 10GbE and GbE networking ports, HDMI video output, and a few DB9 connectors for RS232, RS485, DIO, and CAN Bus interfaces. The embedded system takes a wide 9V to 36V DC input from a 3-pin terminal block. AAEON BOXER-8645AI specifications: AI accelerator module – NVIDIA Jetson AGX Orin 32GB CPU – 8-core Arm Cortex-A78AE v8.2 64-bit processor with 2MB L2 + 4MB L3 cache GPU / AI accelerators – NVIDIA Ampere architecture with 1792 NVIDIA CUDA cores and 56 Tensor Cores @ 1 GHz […]
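To get a feel for how much video data eight Full HD cameras push into the Jetson AGX Orin, here is a back-of-the-envelope sketch. The frame rate (60 fps) and pixel format (16 bits per pixel, as with uncompressed UYVY) are assumptions for illustration, not figures from AAEON or e-con Systems, and blanking and link overhead are ignored.

```python
# Rough raw-bandwidth estimate for the BOXER-8645AI's eight GMSL2 camera inputs.
# Assumptions (not from the spec sheet): 1920x1080 frames, 60 fps,
# 16 bits per pixel (e.g. uncompressed UYVY), no blanking or protocol overhead.

WIDTH = 1920
HEIGHT = 1080
FPS = 60
BITS_PER_PIXEL = 16
NUM_CAMERAS = 8  # the system exposes eight GMSL2 connectors


def camera_bandwidth_gbps(width: int, height: int, fps: int, bpp: int) -> float:
    """Return the raw pixel data rate of a single camera in Gbit/s."""
    return width * height * fps * bpp / 1e9


per_camera = camera_bandwidth_gbps(WIDTH, HEIGHT, FPS, BITS_PER_PIXEL)
total = per_camera * NUM_CAMERAS

print(f"Per camera: {per_camera:.2f} Gbit/s")
print(f"All {NUM_CAMERAS} cameras: {total:.2f} Gbit/s")
```

Under these assumptions, each camera needs roughly 2 Gbit/s, which should fit on its own GMSL2 link (commonly specified with a 6 Gbit/s forward channel). The aggregate of about 16 Gbit/s is what the Orin's camera interfaces would have to absorb.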

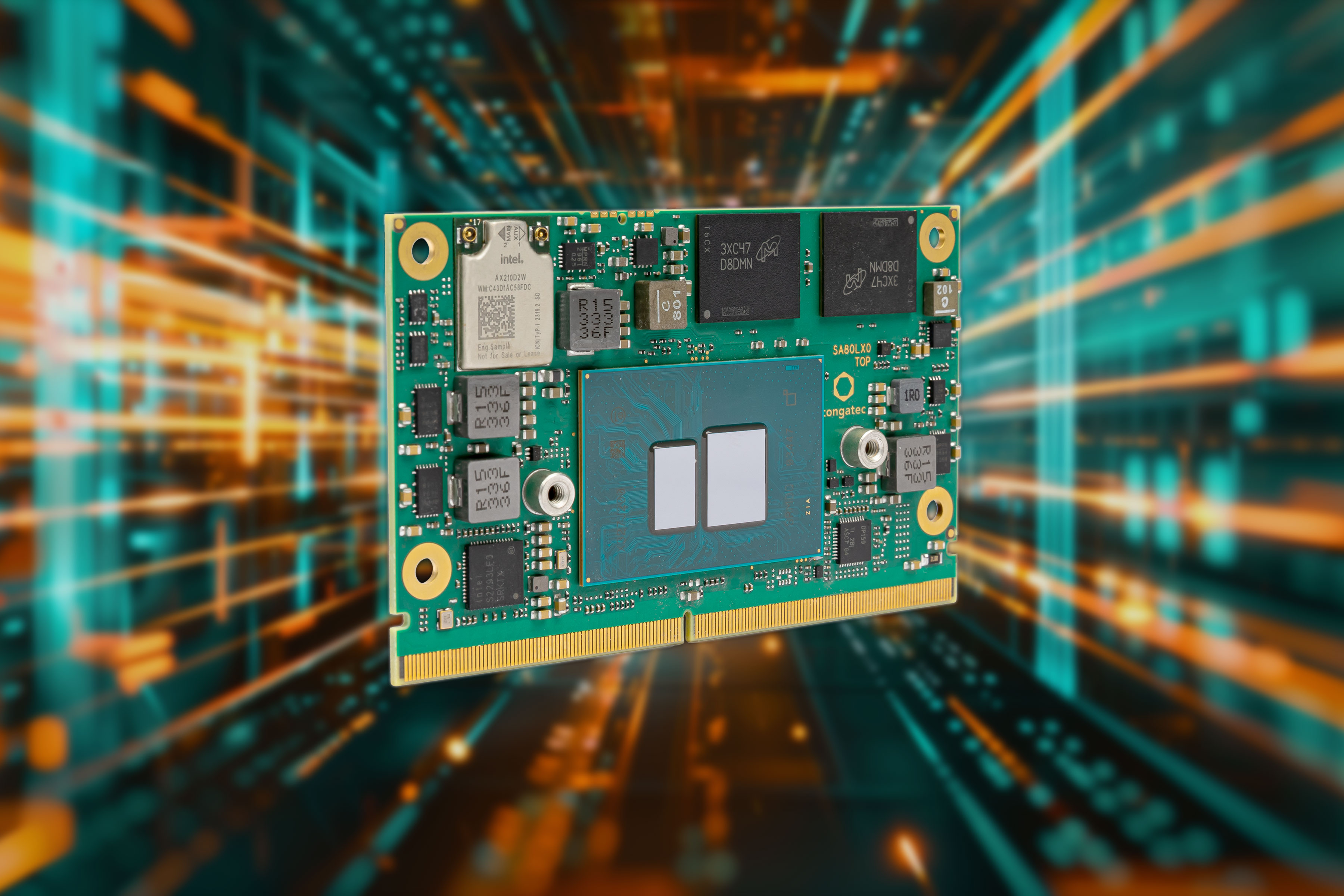

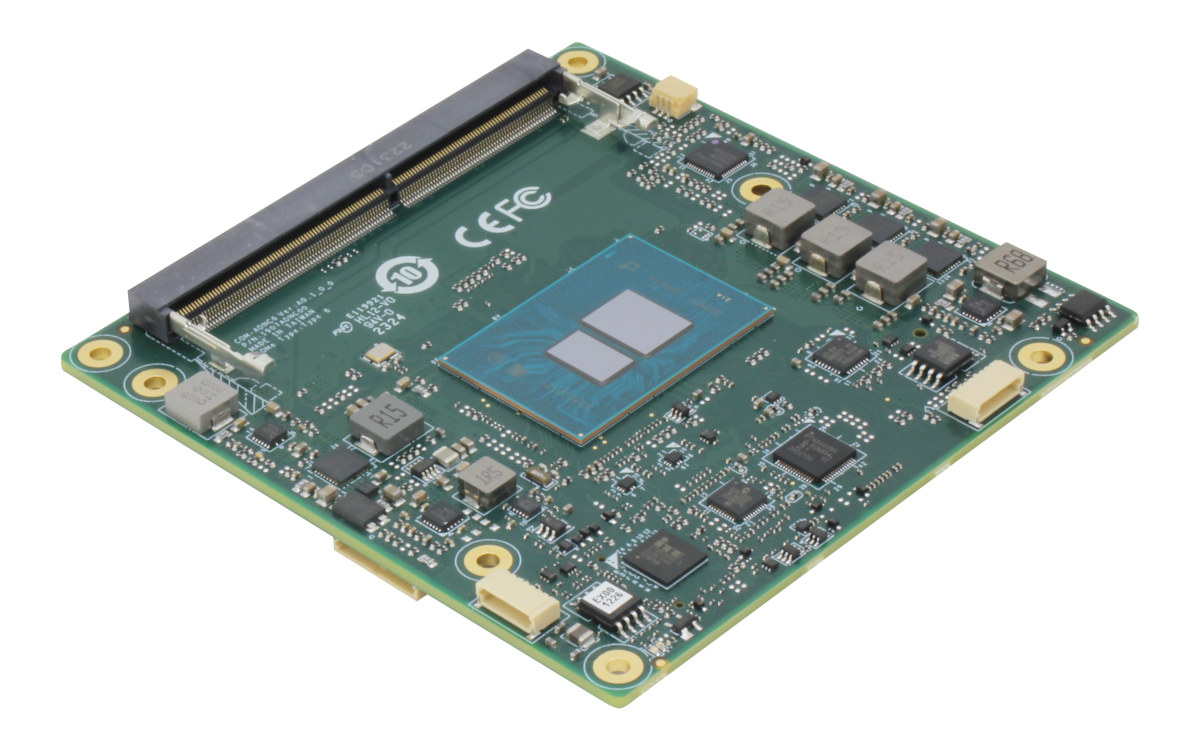

congatec conga-SA8 Amston Lake SMARC modules are targeted at industrial edge applications

Congatec’s new conga-SA8 SMARC modules are powered by Intel Atom x7000RE “Amston Lake” processors. With twice as many processing cores as the previous generation at similar power consumption, congatec’s new credit-card-sized modules are “intended for future-facing industrial edge computing and powerful virtualization.” An Intel Core i3‑N305 Alder Lake-N processor is also offered as an alternative to the Intel Atom x7000RE series for high-performance IoT edge applications. The conga-SA8 modules support up to 16GB LPDDR5 onboard memory and 256GB eMMC 5.1 onboard flash, and offer several high-bandwidth interfaces such as USB 3.2 Gen 2, PCIe Gen 3, and SATA Gen 3. The integrated Intel UHD Gen 12 graphics processing unit has up to 32 execution units and can drive three independent 4K displays. The conga-SA8 is described as virtualization-ready, with a hypervisor (virtual machine monitor) integrated into the firmware. The RTS hypervisor takes full advantage of the eight processing cores […]

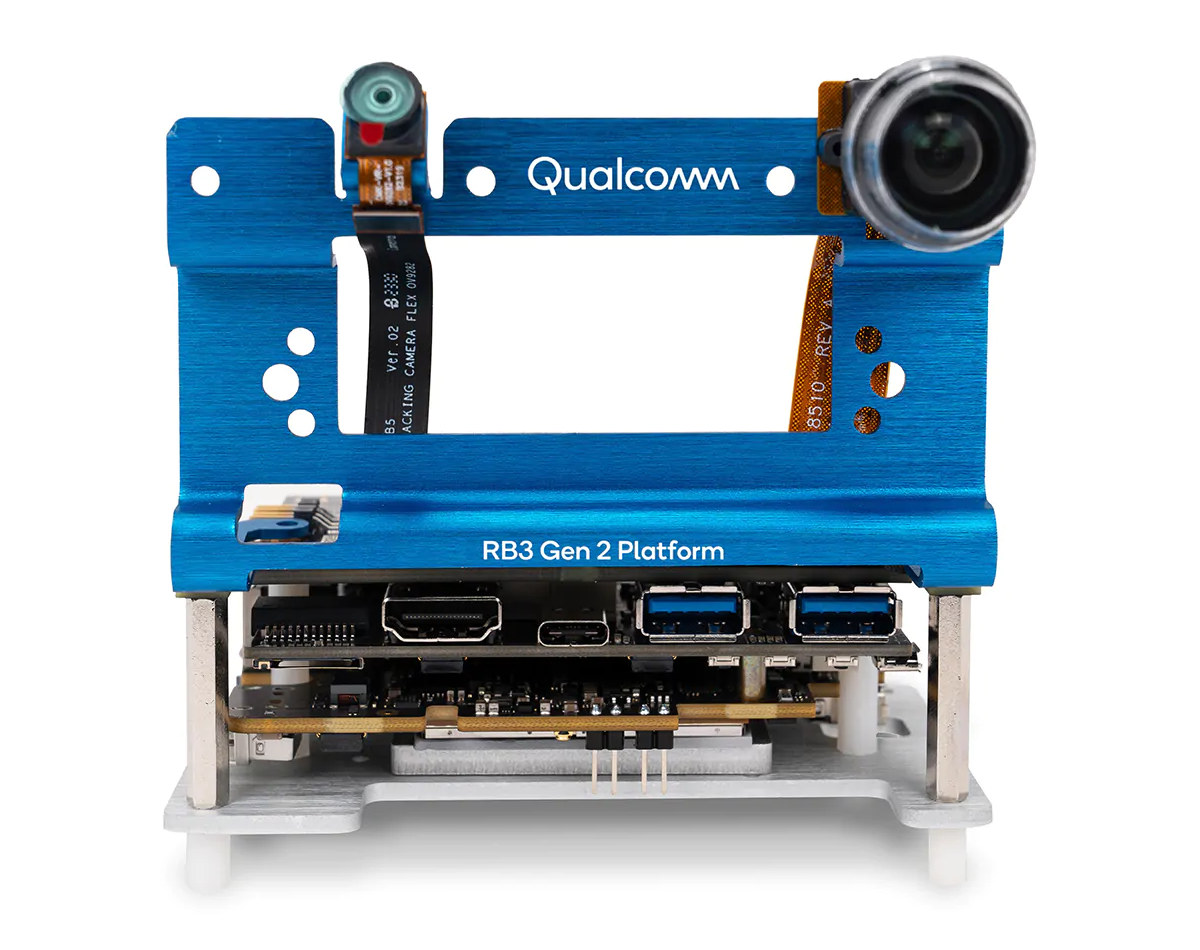

Qualcomm RB3 Gen 2 Platform with Qualcomm QCS6490 AI SoC targets robotics, IoT and embedded applications

Qualcomm had two main announcements at Embedded World 2024: the ultra-low-power Qualcomm QCC730 WiFi microcontroller for battery-powered IoT devices, and the Qualcomm RB3 Gen 2 Platform, a hardware and software solution for IoT and embedded applications based on the Qualcomm QCS6490 processor, which we’re going to cover today. The kit comprises a QCS6490 octa-core Cortex-A78/A55 system-on-module with 12 TOPS of AI performance, 6GB RAM, and 128GB UFS flash, connected to the 96Boards-compliant Qualcomm RBx development mainboard through an interposer, as well as optional cameras, a microphone array, and sensors. Qualcomm QCS6490/QCM6490 IoT processor specifications: CPU – Octa-core Kryo 670 with 1x Gold Plus core (Cortex-A78) @ 2.7 GHz, 3x Gold cores (Cortex-A78) @ 2.4 GHz, 4x Silver cores (Cortex-A55) @ up to 1.9 GHz GPU – Adreno 643L GPU @ 812 MHz with support for OpenGL ES 3.2, OpenCL 2.0, Vulkan 1.x, DX FL 12 DSP – Hexagon […]

8devices TobuFi SoM is designed for drones, robotics, and advanced audio systems

8devices has recently introduced TobuFi, a Qualcomm QCS405-powered System-on-Module (SoM) featuring dual-band Wi-Fi 6 and Wi-Fi 5 connectivity. The module also comes with 1GB LPDDR3 and 8GB of eMMC storage, supports multiple display resolutions, and offers various interfaces, including USB 3.0, HDMI, I2S, DMIC, SDC, UART, SPI, I2C, and GPIO. In previous posts, we covered 8devices product launches like the Noni M.2 WiFi 7 module, Rambutan and Rambutan-I modules, Habanero IPQ4019 SoM, Mango-DVK OpenWrt Devkit, and many more innovative products. If you’re interested in 8devices, feel free to check those out for more details. 8devices TobuFi SoM specifications: SoC – Qualcomm QCS405 CPU – Quad-core Arm Cortex-A53 at 1.4GHz; 64-bit GPU – Qualcomm Adreno 306 GPU at 600MHz; supports 64-bit addressing DSP – Qualcomm Hexagon QDSP6 v66 with Low Power Island and Voice accelerators Memory – 1GB LPDDR3 + 8GB eMMC Storage – 8GB eMMC flash SD card – One 8-bit (SDC1, 1.8V) and one […]

AMD Ryzen Embedded 8000 Series processors target industrial AI with 16 TOPS NPU

AMD has recently “announced” the Ryzen Embedded 8000 Series processors in a community post. The latest AMD embedded devices combine a 16 TOPS NPU based on the AMD XDNA architecture with CPU and GPU elements for a total of 39 TOPS, and are designed for industrial artificial intelligence. The Ryzen Embedded 8000 CPUs will be found in machine vision, robotics, and industrial automation applications, where they are meant to enhance quality control and inspection processes, enable real-time on-device route-planning decisions with minimal latency, and support predictive maintenance and autonomous control of industrial processes. AMD Ryzen Embedded 8000 key features and shared specifications: CPU – Up to 8 “Zen 4” cores, 16 threads Cache – L1 Instruction Cache – 32 KB, L1 Data Cache – 32 KB (per core) L2 Cache – Up to 8 MB (total) L3 Cache – Up to 16 MB unified Graphics – RDNA 3 graphics with up to 6 WGPs (Work Group Processors) […]

M5Stack BugC2 programmable robot base has an STM32 control chip and a four-way motor driver

Modular IoT hardware developer M5Stack has released a new programmable robot base built around the STM32F030F4 microcontroller, with LEGO and Arduino compatibility. The M5Stack BugC2 is “compatible with the M5StickC series controllers,” and the package includes the ESP32-powered M5StickC Plus2 development kit. It features an L9110S four-way motor driver for all-directional movement, two programmable RGB LEDs, an infrared encoder, and a 16340 rechargeable Li-ion battery holder. It also comes with a USB Type-C port for charging the battery, along with onboard reverse-charging protection and voltage detection. Listed applications for the M5Stack BugC2 programmable robot base include remote motor control, robot control, and intelligent toys. M5Stack BugC2 specifications: Microcontroller – STMicroelectronics STM32F030F4 microcontroller with Arm 32-bit Cortex-M0 CPU @ 48 MHz and 16KB of flash memory (the STM32F030 family goes up to 256KB) Motor driver – L9110S Infrared receiver – SL0038GD IR detection distance (StickC Plus2) Infrared emission distance (linear distance) at […]

Vecow SPC-9000 fanless embedded system is powered by an Intel Core Ultra 7 165U or Core Ultra 5 135U SoC

Vecow SPC-9000 fanless embedded system is powered by a 14th Gen Intel Core Ultra 7 165U or Core Ultra 5 135U 12-core Meteor Lake processor and targets edge AI applications such as factory management, data acquisition and monitoring, autonomous mobile robots (AMR), smart retail, and more. The device supports up to 32GB DDR5 RAM, offers M.2 PCIe sockets and a 2.5-inch SATA bay for storage, can drive up to three 4K displays through HDMI, DP, and USB-C ports, and features two 2.5GbE ports and a few USB ports. The SPC-9000 can also be fitted with a 4G LTE module, comes with two RS232/422/485 serial ports, and takes a wide 9V-55V DC input, and some models can operate in the -40°C to 75°C temperature range. Vecow SPC-9000 specifications: Meteor Lake SoC (one or the other) – Intel Core Ultra 7 165U 12-core (2P+8E+2LPE) processor @ 1.2 / 1.7 / 4.9 […]
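The “2P+8E+2LPE” notation describes Meteor Lake’s hybrid layout of performance cores, efficient cores, and low-power efficient cores. The small sketch below turns that notation into core and thread counts, assuming (as on Meteor Lake) that only the P-cores support Hyper-Threading. The `HybridCpu` class is a hypothetical helper for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridCpu:
    """Hypothetical helper: core mix of a hybrid Intel CPU."""
    p_cores: int        # performance cores
    e_cores: int        # efficient cores
    lpe_cores: int = 0  # low-power efficient cores (Meteor Lake SoC tile)

    @property
    def cores(self) -> int:
        return self.p_cores + self.e_cores + self.lpe_cores

    @property
    def threads(self) -> int:
        # Assumption: P-cores run two threads each (Hyper-Threading),
        # while E-cores and LP E-cores run a single thread each.
        return 2 * self.p_cores + self.e_cores + self.lpe_cores


# Core Ultra 7 165U as listed in the specifications: 2P+8E+2LPE
core_ultra_7_165u = HybridCpu(p_cores=2, e_cores=8, lpe_cores=2)
print(f"{core_ultra_7_165u.cores} cores / {core_ultra_7_165u.threads} threads")
```

This prints “12 cores / 14 threads”, matching the 12-core figure quoted for the SPC-9000’s processors.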

AAEON COM-RAPC6 and COM-ADNC6 COM Express modules feature Raptor Lake and Alder Lake-N CPUs

AAEON has unveiled two COM Express Type 6 Compact Computer-on-Module families: the COM-RAPC6, designed for high performance with 13th generation Raptor Lake processors ranging from the 15W Intel Processor U300E up to 45W Intel Core i7 SKUs, and the COM-ADNC6, optimized for efficiency with Alder Lake-N processors such as the Intel Core i3-N305 or Atom x7425E CPUs. COM-RAPC6 Raptor Lake COM Express CPU module specifications: 13th gen Raptor Lake SoC (one or the other; frequencies shown are base frequencies) – Intel Core i7-13800HRE 14C/20T processor @ 2.5GHz with Intel Iris Xe Graphics; PBP: 45W Intel Core i7-13800HE 14C/20T processor @ 2.5GHz with Intel Iris Xe Graphics; PBP: 45W Intel Core i5-13600HE 12C/16T processor @ 2.7GHz with Intel UHD Graphics; PBP: 45W Intel Core i3-13300HE 8C/12T processor @ 2.1GHz with Intel UHD Graphics; PBP: 45W Intel Core i7-1370PE 14C/20T processor @ 1.9GHz with Intel UHD Graphics; PBP: 28W Intel Core […]