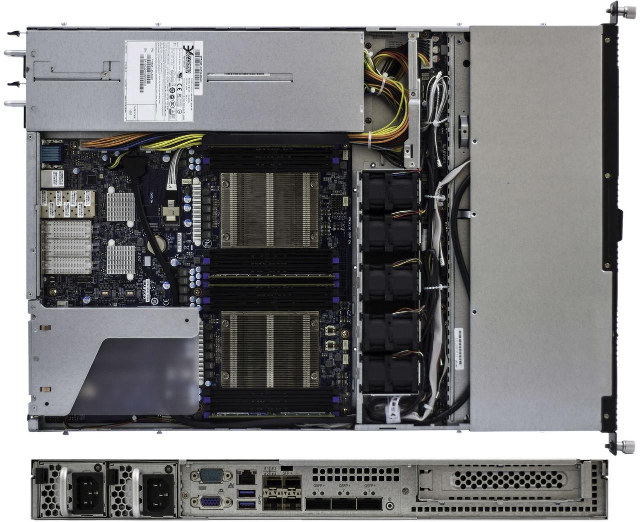

64-bit ARM servers are increasingly showing up for sale. After servers such as the Softiron Overdrive 1000, Avantek H270-T70, and Gigabyte MP30-AR0, System76, a company selling only Ubuntu-powered computers and servers, has launched the Starling Pro ARM server, equipped with two Cavium ThunderX_CP 48-core processors and a choice of two operating systems: Ubuntu 16.04.2 LTS 64-bit or Ubuntu 16.10 64-bit.

System76 Starling Pro ARM “stap1” server specifications:

- Processor – 2× Cavium ThunderX_CP 48 core 64-bit ARMv8 processor @ up to 2.5 GHz (96 cores in total)

- System Memory – Up to 1024 GB quad-channel registered ECC DDR4 @ 2400MHz

- Storage – Up to 4x 3.5″ drives, 32 TB in total

- Video Output – VGA port

- Virtualization – ARM Virtualization Host Extensions

- Networking – 3x 40-Gigabit QSFP+ (Quad Small Form-factor Pluggable+), 4x 10 Gigabit SFP+, 1x Gigabit Ethernet (RJ45)

- Expansion – 1x PCI Express x16 (Gen3 x8)

- USB – 4x USB 3.0 (2x front/ 2x back)

- Serial – 1x COM port

- Misc – Power on/off button, reset button, ID switch button, LEDs (Power, ID, HDD Activity, System status, LAN Activity)

- Power Supply – 2x 650 Watts with redundancy

- Dimensions – 43.0 × 4.4 × 62.5 cm

- Weight – 13 kg (base weight, varies with configuration)

Cavium has four versions of its ThunderX processor, optimized for compute, storage, secure compute, and networking. The ThunderX_CP model is optimized for compute workloads such as cloud web servers, content delivery, web caching, search, and social media.

You’ll find more details on the System76 Starling Pro product page, and if you click on the Design + Buy button at the top of that page, you’ll be able to build your server with a combination of memory, storage, rail kit, accessories, and support (Ubuntu Advantage) options. The price starts at $6,399 with 16 GB + 16 GB of memory for the two processors and a 250 GB SSD, but you can go up to $27,528 by maxing out memory to 1 TB (512 GB per processor, with eight 64 GB RAM modules each) and 16 TB of SSD storage (4x 4 TB).
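As a quick sanity check on the maxed-out configuration, the arithmetic can be sketched in a few lines of Python. The figures come from the specifications and pricing paragraph above; the variable names are purely illustrative, and the 4 TB per SSD figure is an assumption derived from the quoted 16 TB total across four drives.

```python
# Figures from the article; names are illustrative, not from System76.
SOCKETS = 2
CORES_PER_SOCKET = 48
DIMMS_PER_SOCKET = 8
DIMM_SIZE_GB = 64
SSD_COUNT = 4
SSD_SIZE_TB = 4  # assumption: implied by 16 TB total over four drives

total_cores = SOCKETS * CORES_PER_SOCKET
memory_per_socket_gb = DIMMS_PER_SOCKET * DIMM_SIZE_GB
total_memory_gb = SOCKETS * memory_per_socket_gb
total_ssd_tb = SSD_COUNT * SSD_SIZE_TB

print(f"Cores: {total_cores}")
print(f"Memory: {memory_per_socket_gb} GB per processor, {total_memory_gb} GB total")
print(f"SSD storage: {total_ssd_tb} TB")
```

The totals line up with the spec list: 96 cores and 1024 GB of memory across both processors.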

Via Olof Johansson

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

You can see all 96 cores under load (stress-ng) @ https://plus.google.com/photos/photo/114471118004229223857/6392972265877171010?icm=false

That stress-ng picture is very cool!

I wonder how many servers will be sold. Is this a niche product, or a mainstream success?

Gigabyte, too, carry Caviums. I think this is their top model (384 cores): http://b2b.gigabyte.com/Density-Optimized/H270-T70-rev-100#ov

@blu

That’s the same as the Avantek model I linked to in the introduction. So I guess the Avantek server is a rebranded Gigabyte server.

@Sander

I guess it will stay niche, unless we all start to have private data centers at home.

SOHO businesses need their own CRM, web, and mail servers, and want to have their own cloud. They made it because there is demand, and others are already selling these products.

And the best ARM64 CPUs, at least going by benchmarks such as Geekbench, deliver about 2/3 of the performance of the best Intel parts while being cheaper. That is good enough for long-term service servers, and they are cheaper on the electricity bill, quieter, and do not need fans (noise is important in some environments), so not so niche.

What we do not know is how ARM GPUs benchmark against current Intel, Nvidia, and AMD ones.

@Sander

Regarding ‘niche’ or not: in data centers it’s mostly about rack density (performance/space ratio) and energy efficiency, but this time not at idle, as is usual in ARM land, but at medium to full load. If those ARM64 machines are better here, they will be chosen if customers have full control over the software stack (unfortunately not possible everywhere).

Some years ago I ran a real workload on 3 different Sun/Oracle servers using different CPU architectures. The workload was image/PDF conversion, including ICC-compliant color space conversions, and creating many downscaled/sharpened versions that also contain invisible watermarks to track image usage worldwide (using the Digimarc SDK). We tested these different architectures:

– Westmere-EX (Intel Xeon with 10 cores each à 2 threads)

– SPARC64-VII (classical SPARC architecture with 4 CPU cores à 2 threads)

– Netra SPARC T4 (not that great single thread performance but 8 CPU cores à 8 threads)

Results of an image conversion benchmark that decouples the IO-intensive work (reading in source material and interpreting/parsing all sorts of image/PDF metadata) from the CPU-intensive work:

– Oracle M5000, 6 SPARC64-VII+ @ 2.66 GHz, 48 logical CPU cores: 26,350 conversions per hour

– Oracle T4-2, 2 SPARC-T4 @ 2848 MHz, 128 logical CPU cores: 29,350 conversions per hour

– Oracle X2-4 (X4470 M2), 4 Xeon E7-4870 @ 2.4-2.8 GHz, 80 logical CPU cores: 57,100 conversions per hour

With these asynchronous job types the T4 could slightly outperform the SPARC64-VII+, but as soon as responsiveness would’ve been a requirement it would’ve looked totally different, since the T4 was only able to do 230 conversions per hour/thread while the SPARC64-VII+ did 549 and the E7 714. Fortunately I was assisted by a former Sun performance specialist from Oracle who shared a lot of insights, and we also ran stupid ‘standard benchmarks’ that showed totally different/irrelevant results.
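The per-thread figures quoted above can be reproduced by dividing each system’s total throughput by its logical core count. Here is a minimal sketch in Python, assuming the totals and thread counts listed in the results; the dictionary layout and names are illustrative only. Straight division gives roughly 229 for the T4, so the 230 quoted above appears to be rounded slightly differently.

```python
# (conversions per hour, logical CPU cores) as listed in the results above.
results = {
    "Oracle M5000 (SPARC64-VII+)": (26_350, 48),
    "Oracle T4-2 (SPARC-T4)": (29_350, 128),
    "Oracle X2-4 (Xeon E7-4870)": (57_100, 80),
}

# Throughput per logical core: a rough proxy for single-job responsiveness.
per_thread = {
    name: total / threads for name, (total, threads) in results.items()
}

for name, value in per_thread.items():
    print(f"{name}: {value:.1f} conversions per hour per thread")
```

This makes the trade-off visible: the T4 wins on aggregate throughput against the SPARC64-VII+ only by throwing far more, much slower, threads at the job.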

Lesson learned: ‘Use case first’. Always. It was also interesting how power consumption differed depending on a bunch of things (e.g. larger DIMMs were more expensive but consumed less power, so in a ‘per rack’ calculation the more expensive DIMMs could pay for themselves within 48 months).

ARM wasn’t an option back then, and I doubt that it is now for any new in-house server installation. At least not at our customers, where some heterogeneity is still a must and x86 is simply a given (using two fat vSphere hosts for Linux/macOS/Solaris/Windows VM guests combined with a performant storage cluster and the cheap vMotion license, so no downtime ever again).

Still waiting for many cores, e.g. 10k cores !?

http://www.bitkistl.com/p/64bit.html

IIRC price of Gigabyte was much lower when I checked some time ago. Anyway, if there is no hurry, I would rather wait for ThunderX2 which should provide much better single-threaded performance…

Hardware like this, with so much concentrated processing power in one box, is a very bad idea: an epic single point of failure just waiting to happen.

@Drone

Maybe you should consult something like Wikipedia about so called ‘data centers’ (that’s where most of tomorrow’s processing power moves to). 😉

And to be honest: even in-house I would today prefer cramming as much processing power as possible into a single enclosure and creating redundancy between racks (preferably in a different fire zone with some monitoring set up). It’s pretty easy to avoid any SPoF with 2 fat vSphere hosts combined with a 2-node storage cluster, the cheap vMotion license, and a few 10 GbE cables between compartments/rooms. You can move server instances within seconds, even while they are running, so there are no planned downtimes any more, and recovering from a failed virtualization host is done within minutes.

But for me and our customers I see no use for ARM here since virtualized servers mostly depend on x86 architecture anyway.