Linux EXT-4 File System Corruption & Attempted Recovery

There’s a file system corruption bug related to EXT-4 in Linux, and it happened to me a few times in Ubuntu 18.04. You are using your computer normally, then suddenly you can’t write anything to the drive, as the root partition has switched to read-only. Why? Here are some error messages:

    [15882.773747] EXT4-fs (dm-4): re-mounted. Opts: (null)
    [15898.557605] EXT4-fs error (device dm-4): ext4_iget:4831: inode #2113041: comm rm: bad extra_isize 20100 (inode size 256)
    [15898.568305] EXT4-fs error (device dm-4): ext4_iget:4831: inode #2113042: comm rm: bad extra_isize 35148 (inode size 256)
    [15898.569774] EXT4-fs error (device dm-4): ext4_lookup:1577: inode #2557277: comm rm: deleted inode referenced: 2113043
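To confirm from a shell that the root partition really has switched to read-only, one option is to look for ext4 file systems whose mount options include `ro`. This is only a minimal sketch: it assumes the usual `/proc/mounts` layout (device, mount point, type, options), and the function name `readonly_ext4_mounts` is made up for this example.

```shell
#!/bin/sh
# Print the mount point of every ext4 file system that is mounted read-only.
# Reads /proc/mounts by default; another file in the same format can be passed.
readonly_ext4_mounts() {
    awk '$3 == "ext4" && $4 ~ /(^|,)ro(,|$)/ { print $2 }' "${1:-/proc/mounts}"
}
```

Running `readonly_ext4_mounts` with no argument should list `/` when the root file system has been remounted read-only, and `dmesg | grep 'EXT4-fs error'` (possibly with sudo) shows kernel messages like the ones above.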

What then happens is that you restart your PC and get dropped to a prompt where you are asked to run:

    e2fsck /dev/sda2

Change /dev/sda2 to whatever your drive is, and manually review the errors. Take note of the files that get modified, as you’ll likely have to fix your Ubuntu installation later on. Usually the fix consists of reinstalling various packages:

    sudo apt install --reinstall <package-name>
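If you noted file paths rather than package names while reviewing the errors, `dpkg -S <path>` reports which package owns a file. The sketch below only post-processes that output; it assumes the usual `package[:arch]: /path` format, and the helper name `packages_from_dpkg_output` is hypothetical.

```shell
#!/bin/sh
# Reduce `dpkg -S` output ("pkg: /path", "pkg:arch: /path", or
# "pkg1, pkg2: /path") to a sorted list of unique package names.
# Lines describing diversions are skipped.
packages_from_dpkg_output() {
    awk -F': ' '$2 ~ /^\// && $1 !~ /^(local )?diversion / {
        n = split($1, owners, ", ")
        for (i = 1; i <= n; i++) {
            sub(/:.*/, "", owners[i])   # drop the ":arch" suffix
            print owners[i]
        }
    }' | sort -u
}

# Example use (not run here; the paths are placeholders):
#   sudo apt install --reinstall $(dpkg -S /bin/ls /bin/cat | packages_from_dpkg_output)
```

Paths containing `: ` would confuse the field splitting, so treat the result as a starting list to review rather than something to run blindly.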

It happened to me two or three times in the past, and it’s a pain, but I eventually recovered. This time, I was not so lucky. The system would not boot, but I could still SSH to it. I fixed a few issues, but there was a problem with the graphics drivers, and gdm3 would refuse to start because it was unable to open “EGL Display”. Three hours had passed with no solution in sight, so I decided to reinstall Ubuntu 18.04.

Backup Sluggishness

Which brings me to the main topic of this post: backups. I normally back up the files on my laptop once a week. Why only once a week? Because it considerably slows down my computer, especially during full backups as opposed to incremental ones. So I normally leave my laptop on on Wednesday night so that the backup starts at midnight and hopefully ends before I start working in the morning, but that’s not always the case. I’m using the default Duplicity via the Deja Dup graphical interface to back up files to a USB 3.0 drive directly connected to my laptop.

Nevertheless, since I only do a backup once a week on Thursday, and I got the file system bug on Tuesday evening, I decided to manually back up my full home directory to the USB 3.0 drive using tar with bzip2 compression as an extra precaution before reinstalling Ubuntu. I started at 22:00, and figured it would be done when I’d wake up in the morning. How naive of me! I had to wait until 13:00 the next day for the ~300GB tarball to be ready.
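As a rough sanity check, those times work out to a very low average write rate. This is a back-of-the-envelope sketch, assuming the ~300GB figure is the size of the compressed tarball (the data read from the home directory was larger) and decimal units; `throughput_mb_s` is just a helper name for this example.

```shell
#!/bin/sh
# Average throughput in MB/s for SIZE_GB gigabytes written in HOURS hours
# (decimal units: 1 GB = 1000 MB).
throughput_mb_s() {
    LC_ALL=C awk -v gb="$1" -v h="$2" 'BEGIN { printf "%.1f\n", gb * 1000 / (h * 3600) }'
}

throughput_mb_s 300 15   # 22:00 to 13:00 the next day is 15 hours; prints 5.6
```

Roughly 5.6 MB/s is well below what a USB 3.0 hard drive can usually sustain, which suggests the compression step rather than the drive was the limiting factor.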

One of my mistakes was to use the single-threaded version of bzip2 with a command like:

    tar cjvf backup.tar.bz2 /home/user

Instead of going with the faster multi-threaded bzip2 (lbzip2):

    tar cvf linux2.tar.bz2 linux --use-compress-program=lbzip2

It would probably have finished before the morning that way, but it still would have taken many hours.
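Since lbzip2 is not installed by default everywhere, a small wrapper can pick a multi-threaded compressor when one is available and fall back to plain bzip2 otherwise. This is a minimal sketch, not a tested backup tool: the function names `pick_compressor` and `backup_dir` are made up for this example, and it assumes GNU tar.

```shell
#!/bin/sh
# Print the first command from the argument list that is installed.
pick_compressor() {
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            return 0
        fi
    done
    return 1
}

# Archive directory $1 into file $2 (a .tar.bz2), preferring a
# multi-threaded bzip2 implementation over the single-threaded one.
backup_dir() {
    compressor=$(pick_compressor lbzip2 pbzip2 bzip2) || return 1
    tar -cf "$2" --use-compress-program="$compressor" "$1"
}
```

For example, `backup_dir /home/user backup.tar.bz2` produces the same kind of archive as the commands above, and the result can still be extracted with ordinary bzip2.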

(Failing to) Reinstall Ubuntu 18.04

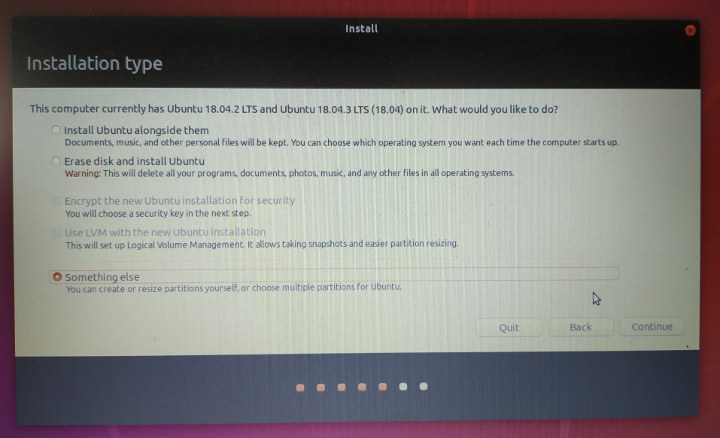

Time to reinstall Ubuntu 18.04. I did not search on the Internet the first time, as I assumed it would be straightforward, but the installer does not have a nice-and-easy way to reinstall Ubuntu 18.04. Instead you need to select “Something Else”

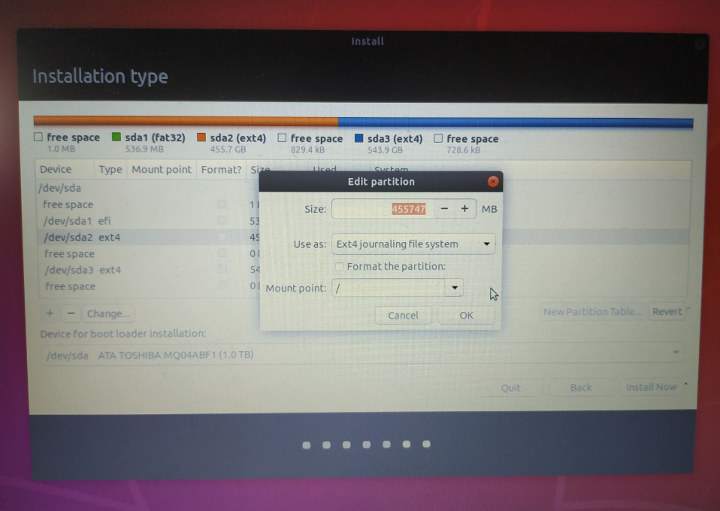

and do the configuration manually by selecting the partition you want to reinstall on, selecting the mount point, and making sure “Format the partition” is NOT ticked.

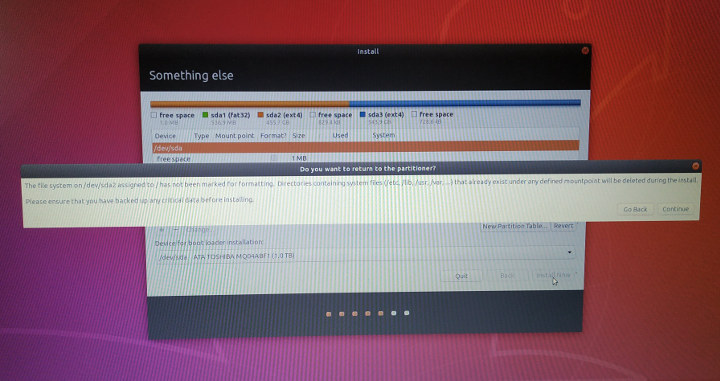

Click OK, and you’ll get a first warning that the partition has not been marked for formatting, and that you should back up any critical data before installation.

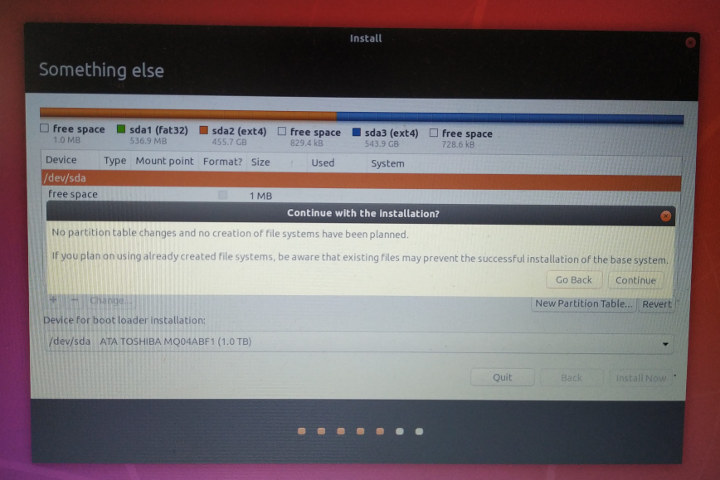

That’s followed by another warning that reinstalling Ubuntu may completely fail due to existing files.

Installation completed in my case, but the system would not boot. So I reinstalled Ubuntu 18.04 on another partition on my hard drive. With hindsight, I wonder if the Ubuntu 18.04.3 LTS release may have been the cause of the re-installation failure, since I had the Ubuntu 18.04.2 ISO, and my system may have already been updated to Ubuntu 18.04.3.

Fresh Ubuntu 18.04 Install and Restoring Backup – A Time-Consuming Endeavor

Installation on the other partition worked well, but I decided to restore the Duplicity backup in case I forgot about some important files in my manual tar backup. I was sort of hoping restoring a backup would be faster, but I was wrong. I started at 13:00, and it completed the next day around 10:00 in the morning.

It looks like duplicity does not support multi-threaded compression. The good news is that I can still have my previous home folder, so I’ll be able to update the recently modified files accordingly, before deleting the old Ubuntu 18.04 installation and recovering space.

Lessons Learned, Potential Improvements, and Feedback

Anyway I learned a few important lessons:

- Backups are very important as you never know when a problem may happen.

- Restoring a backup may be a time-consuming process.

- Having backup hardware like another PC or laptop is critical if your work depends on you having access to a computer. I also learned this the day the power supply of my PC blew up, and since it was under warranty I would get a free replacement. I just had to wait for three weeks since they had to send the old one to a shop in the capital city, and get a new PSU back.

- The Ubuntu re-installation procedure is not optimal.

I got back to normal use after three days due to the slow backup creation and restoration. I still have to reinstall some of the apps I used, but the good thing is that they’ll still have their customizations and things like the list of recent files.

I’d like to improve my backup situation. To summarize, I’m using Duplicity / Deja Dup in Ubuntu 18.04 backing up to a USB 3.0 drive directly attached to my laptop weekly.

One of the software improvements I can think of is making sure the backup software uses multi-threaded compression and decompression. I may also be more careful when selecting which files I backup to decrease the backup time & size, but this is a time consuming process that I’d like to avoid.

Hardware-wise, I’m considering saving the files to an external NAS, as Gigabit Ethernet should be faster than USB 3.0, and it may also lower I/O to my laptop. Using a USB 3.0 SSD instead of a hard drive may also help, but it does not seem the most cost-effective solution. I’m not a big fan of backing up personal files to the cloud, but at the same time, I’d like this option to restore programs and configuration files. I just don’t know if that’s currently feasible.

So how do you handle backups in Linux on your side? What software & hardware combination do you use, and how often do you back up files from your personal computer / laptop?

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


I use burp ( https://burp.grke.org/ ) it works quite well, you tell the server how many backups you want for your computers, and the client connects regularly to the server ( crontab) to ask if the backup needs to be run. Security is done through ssl and certificates, and there’s a quite good web UI on top of that.

Very reliable, handles big backups very well, i recommend it ( over BackupPC which i used before, worked well, but.. easy to break)

Did you have a look at https://restic.net already?

I’ve only ever used Simple Backup or Deja Dup. Have you used Restic?

> Have you used Restic?

Nope, but the people I know who use Linux on their desktop all do. On my client machines the bundled built-in backup solution (TimeMachine) backs up to Linux hosts (mostly SBC).

And on servers we utilize filesystem features to ensure data safety, data integrity and data consistency (the built-in features of modern storage attempts like ZFS or btrfs are perfectly fine for this).

Have a look at timeshift: https://github.com/teejee2008/timeshift

I think this is what I’m looking for!

Looks really nice. Thanks for the tip.

And what about using a self-hosted Nextcloud, where you back up continuously?

Back when making an install usable was a week long grind of messing with config files backing up and restoring everything sort of made sense but I don’t think it does anymore. Especially if the result is 300GB files that will cause automated backups to stop working pretty soon and make manually doing them a massive pain. Backing up home should really be enough if you are careful enough not to have loads of totally legal BD rips etc in there. That said I don’t back up anything. My work stuff is all done in git so as long as…

Make a gitlab.com account too and push to both of them.

Hey,

Try Veeam Agent for Linux FREE, fast system restore.

What was disk usage (df -h)?

What’s the reason for using ~0.5TB for os?

Data should have own partition aside from os.

I just used a single partition for everything. I just resized the partition to install Ubuntu on another partition since i was unable to reinstall Ubuntu on the existing partition. Using a separate partition for /home looks like a good idea though.

When I partition disks for Linux I make three 40GB partitions and then put the rest into a single partition. I install the OS into the 40GB partition and make the big partition /home. So when I want to try out a new OS I install it into an unused 40GB partition and point fstab to mount /home. Now it is trivial to switch between operating systems. And if you mess up an install just reformat the 40GB partition. When Windows is involved the setup is more complicated. I try very hard not to have to boot Windows natively but…

I have a backup server that has a cron job to run my own script that does rsync over ssh on selected folders from multiple computers (windows and Linux). Backup server is Ubuntu + ZFS, and after rsync on a zfs filesystem, it does a snapshot, so I can easily mount backups from different days in the past (time machine). Also backup server has a dock where I can put an external hdd, and update the zfs snapshots on it e.g. weekly, then take hdd offsite – I rotate a couple of hdd so that at any point in time…

I use ccollect, a big shell script over rsync+hardlinks to handle all my backups of my servers and laptops. I did not like rsnapshot’s way of naming the backups (I prefer the ccollect way of naming them with date strings)

https://www.nico.schottelius.org/software/ccollect/

Over SSH on low cpu devices, I used arcfour cipher to lower the encryption load, or even went straight over rsync:// protocol without SSH. SSH is still not using low cpu cipher based on elliptic curves.

My Linux laptop is not backed up, but I am usually creating a separate partition for the home directory. My Windows has a Macrium Reflect backup without any drivers installed and one where all needed drivers + minimal programs are installed (Total Commander, Office, Firefox, Chrome). On my XU4 running as a NAS I used to have a cronjob running once a month where the boot and the system partitions were dumped with dd to an external drive. It used to be ok to backup partitions like that since XU4 used a microSD card and partitions were small by today’s…

Would not shrink to below 25GB for 18.04 server version.

https://askubuntu.com/questions/1030839/minimum-requirements

https://askubuntu.com/questions/2596/comparison-of-backup-tools

rather than dd, you could try fsarchiver (http://www.fsarchiver.org/). It’ll compress just the selected partition(s) content. Say, you have 40GB sda1 with just 10GB used space. Fsarchiver will just compress that 10GB to a file.

I used to back up the first 446 bytes of my drive, then use fsarchiver on the partitions that need to be backed up.
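A sketch of that two-step approach, for illustration only. On an MBR-partitioned disk the first 446 bytes hold the boot code, with the partition table in the 64 bytes that follow. The helper name `save_boot_code`, the device names, and the output paths are all placeholders, and the `fsarchiver` call is left as a comment because it needs root and a real partition.

```shell
#!/bin/sh
# Copy the first 446 bytes (MBR boot code, without the partition table)
# of disk or image $1 into file $2.
save_boot_code() {
    dd if="$1" of="$2" bs=446 count=1 2>/dev/null
}

# Example (as root; /dev/sda and the paths are placeholders):
#   save_boot_code /dev/sda /mnt/backup/sda-bootcode.bin
#   fsarchiver savefs /mnt/backup/root.fsa /dev/sda1
```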

I stopped backing up a long time ago. I daily copy (manually or automatically) from my workstation to a NAS (currently an HC2 plus two others that sync from it). I also have a 2nd workstation which I start using when the first one starts misbehaving. If the issue was hardware, I replaced it. Then I simply reinstall. I found it simpler and faster than to deal with a backup architecture. I have not lost data in the last 25 years (except my phone which recently committed seppuku for an unknown reason, lost my contact list, totally forgot that my…

>I found it simpler and faster than to deal with a backup architecture

This. I suspect a lot of the people suggesting their favourite system wouldn’t realise if it stopped working months ago until they actually needed to restore something and the backups don’t exist or are totally useless. Just make logging your changes and pushing them somewhere part of your workflow.

>lost my contact list,

Allowing your phone to use google or apples sync service does make sense for this at least.

> Just make logging your changes and pushing them somewhere part of your workflow.

Exactly. For coding, as you wrote, git. For other stuff like docs you received or wrote and sent by email, just copy them to the NAS once you’re done with them.

> Google sync

I have very little trust in cloud storage that ends up being data mined…

Just export your contacts as files.

Id get myself a big enough storage media and use simple rsync. No compression (or maximum something light like i.e. gzip) and easy incremental backup. Of course you should do a full snapshot every now and then, or you backup to zfs or similar (with rsync, as long as your desktop uses ext4) and snapshot with the fs’ on board utilities. Should be considerably faster.
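One common way to get cheap incremental snapshots out of plain rsync, also mentioned elsewhere in this thread, is its `--link-dest` option: each run produces a complete-looking directory, but unchanged files are hard links to the previous snapshot. A minimal sketch, assuming rsync is installed and the destination file system supports hard links; the function name `snapshot` and the `latest` symlink convention are choices made for this example, not something the commenter described.

```shell
#!/bin/sh
# snapshot SRC DEST_ROOT
# Copies SRC into DEST_ROOT/<timestamp>. Files unchanged since the previous
# snapshot are hard-linked to it instead of being copied again.
snapshot() {
    src=$1
    mkdir -p "$2" || return 1
    # Use an absolute path: a relative --link-dest is resolved against
    # the destination directory, which is easy to get wrong.
    root=$(cd "$2" && pwd) || return 1
    stamp=$(date +%Y-%m-%d_%H%M%S)
    if [ -e "$root/latest" ]; then
        rsync -a --link-dest="$root/latest" "$src/" "$root/$stamp/" || return 1
    else
        rsync -a "$src/" "$root/$stamp/" || return 1
    fi
    ln -sfn "$stamp" "$root/latest"   # remember the newest snapshot
}
```

Something like `snapshot /home/user /mnt/backup/home` (both paths are placeholders) could then be run from cron; old snapshot directories can be deleted individually without affecting the others.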

Currently im rather lazy and don’t backup the OS at all. The data is copied between my current and previous pc manually. Which has the added benefit that my previous pc is hardly ever running.

I use rsync for home and data directory/filesystems, and fsarchiver ( https://en.wikipedia.org/wiki/FSArchiver ) for the OS. With it I made a weekly “hot backup” compressed image. I used it because its compression also uses threads, so you can maximize the usage of HyperThreading CPUs during the compression, resulting in a lower time to create the entire image. To recover a single file/directory you’ve to restore the image in another equal or greater partition than the source, but all we hope that this never happens; in the meanwhile, I can confirm that each time I had issues with the OS, it…

I don’t back up anything either, I have everything in the cloud. I can whack my Linux box and not lose anything except a few configuration settings which are easy to set back in. In fact, every time I upgrade my major Ubuntu revision I use a clean partition. Gitlab.com gives you unlimited, private projects for free. Plus I have a second copy of most stuff in my Amazon account. All of my mail is in gmail. I do work on everything locally and then commit and push the changes up to gitlab. Gitlab works for all files, not just…

Sneaky. Google Compute has 50GB of free git as well… Not “unlimited” but that’s more than enough for config and most personal/business documents that anyone will have. If you’re a video content creator then cloud storage is probably not in the cards, at least not for raw footage.

Have you tried out https://www.borgbackup.org/ ?

What ext4 software bug caused this issue? Are you sure it is not your hardware problem?

I think exactly like you! It looks like hardware corruption!

I can’t see any hardware problems on my side. It has been happening for six months, I’ve looked into hardware error but no clue in the log at all. I’ve linked to the potential EXT-4 bug in the very first link in the post.

(care for secure options selection in the last line)

(If disk should skip bad fs sectors e2fsck -c option can be one possibility, but summary of file system errors is hidden then, AFAIK at least for e2fsck. Important note: man badblocks)

Edit: copy/paste changes single quotation mark possibly into acute (accent), that has to be re-edited into (single) quotation mark(s) again !

I’ve put your commands into a “preformatted block”. This should have solved the quotation marks.

/etc/nixos and /home rsynced to a separate harddrive every once in a while. I should probably create another backup on a separate machine but I’m too lazy.

redeployment takes an hour more or less.

Just verified; It’s this snippet on a cron job twice a week:

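    #!/usr/bin/env sh
    # Mirror the home directory and the NixOS configuration to the second drive;
    # -c compares file checksums instead of size and modification time.
    rsync -avc /mnt/sdb1/home/rk /mnt/sdc1/home
    rsync -avc /etc/nixos /mnt/sdc1/nixos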

Additionally I have syncthing dumping some stuff from my phone over here on my home but yeah… If both hard drives fail I’m screwed so I should probably do something about this at some point… Bah. Still too lazy.

Hi,

I use backintime on Kubuntu 18.04. It is well configurable. Cron + Autoexec when the external drive is plugged in.

I use usb3 nvme external ssd drive: 10Gbit/s.

I’m happy with that.

I use duplicity with Backblaze and I simply renamed pigz to gz and pbzip2 to bzip2 to overcome duplicity’s silly lack of switches… and tbh I don’t understand why installing these parallel versions doesn’t use the alternatives system to replace the single-threaded ones by default… SMP/SMT has been a standard feature of even cheap systems for a decade.

Sorry you are having data problems, I know how frustrating that can be. I’ve lost important data before, so now I overcompensate with triple redundancy. I rotate between 3 identical external hard drives that are kept in 3 different locations so that even a catastrophic building collapse or fire will not destroy my data. I rsync my important data frequently to the external drive currently on-site, then swap it with one of the other drives when I visit one of the other locations where the drives are stored. All drives are LUKS encrypted so I don’t have to worry about…

Thanks for all your answers. Interesting discussion and so many options 🙂 It looks like the easiest option for me is to rename pigz to gzip and pbzip2 to bzip2, so I can keep using duplicity and get much faster backups and “restores”. I’ll also create three partitions: 2x 64GB (or 128GB) for OS, and the remaining large partition for home in case I get into trouble next time. I may also use timeshift to back up the OS partition less frequently, so I can restore my settings and programs more easily. I plan to…

“The system would not boot, but I could still SSH to it. ” If the system had not booted you would not be able to SSH in to it. For the SSH service to be running the network device is activated. CTRL-ALT-F1 or CTRL-ALT-F2 etc up to maybe CTRL-ALT-F6 should get you to a virtual console where you could login directly to a shell prompt as root or su to root (exec sudo su ) to then fix the problem. For corrupted file system recovery or other major disasters, it is always a TOP priority to have a boot DVD…

I should have said the system would not boot to the command line. Ctrl+Alt+F2 did not work for some reason.

I am using timeshift for backing up the system automatically, keeping different snapshots for three weeks each. As they are done incrementally, they are very fast and just need some minutes to be created (depending how fast the storage is attached, of course). Additionally I created a lot of scripts using rsync, run automatically by a cronjob, backing up several other aspects (work data, e-mail-profiles, etc…). And every month I am creating a whole image by using clonezilla. Just on my personal computer, not on the servers as there has to be no downtime, of course. Maybe these are a…

For servers, I’m testing restic with S3 cloud storage. Easy differential backups.

I’m using rsync through my “dailybak” script (http://git.1wt.eu/web?p=dailybak.git). It performs daily snapshots via the network (when the laptop is connected) to my NAS (DS116J, reinstalled on Linux to get rid of bugs^Wvendor-specific features), and it manages to keep a configurable number of snapshots per week, month, year and 5yr so that I can easily go back in time just by SSHing into the NAS without having to untar gigs of files. rsync is particularly efficient at doing this because it performs hardlinks to the previous backup and uses little extra space. However it uses gigs of RAM to keep track…

“Gigabit Ethernet should be faster than USB 3.0” – wait, what? Why?

Gigabit Ethernet is, well, 1 Gb/s, whereas USB 3.0 is 5 Gb/s.

Am I missing something here?

True. But my mechanical drive is limited to around 90 MB/s sequential read/write, and during backups it drops much lower, especially when copying a large number of small files.
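To put rough numbers on this exchange, the nominal link rates can be converted to MB/s. This is a simple sketch using raw signalling rates in decimal units, ignoring encoding and protocol overhead, so real-world figures will be lower; `gbps_to_mb_s` is a helper name invented for the example.

```shell
#!/bin/sh
# Convert a nominal link rate in Gb/s to MB/s (8 bits per byte, decimal units).
gbps_to_mb_s() {
    echo $(( $1 * 1000 / 8 ))
}

echo "Gigabit Ethernet: $(gbps_to_mb_s 1) MB/s"   # 125 MB/s
echo "USB 3.0:          $(gbps_to_mb_s 5) MB/s"   # 625 MB/s
```

Both figures are above the roughly 90 MB/s sequential speed quoted for the mechanical drive, so on paper the drive, not the link, is the bottleneck in either setup.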

I wouldn’t be surprised if the very same disk now used in a NAS shows even inferior backup performance (since bottlenecks add up).

I’d be using SATA drives in the NAS, so maybe it would be better.

I also like having my backup drive connected to another machine, as I’ve just remembered I had a backup on a USB drive in the past connected to a machine that would often turn off. Eventually, the main drive was dead, but the backup was corrupted as well.

I had the exact same problem for a couple of months – ssd goes off line, and comes back mounted read-only. No useful error messages.

Turned out to be a flaky power connector. Very difficult to troubleshoot.

Didn’t you see a message about the drive when it came back online?

My problem may be different since it’s in a laptop, so no cables are involved. I didn’t see any message about the drive coming back online, nor I/O errors, just suddenly appearing file system errors. It will take a while to debug because it maybe happens once every 2 to 3 months.

My drive stayed online as well, it was just remounted ro. It was a remote system, and every couple of days it would be inaccessible, but when I went to visit it, I could login to the console – the system was still running. There were no messages in syslog, and I couldn’t run dmesg. Bash builtins worked, “echo * for ls, etc”, but it wouldn’t load anything from the disk. Very weird error.

But, since you were talking about a laptop, it’s probably not a power cable anyhow…

Borg Backup, which someone mentioned here, has deduplication (as well as compression etc) that can be invaluable to some users.

Why has no one suggested checking for bios or driver updates for the laptop?

Just use ZFS 🙂

( with replication / mirrors + backup to a ZFS remote pool using zfs send )

http://www.nongnu.org/rdiff-backup/ is what I use with an external USB drive.