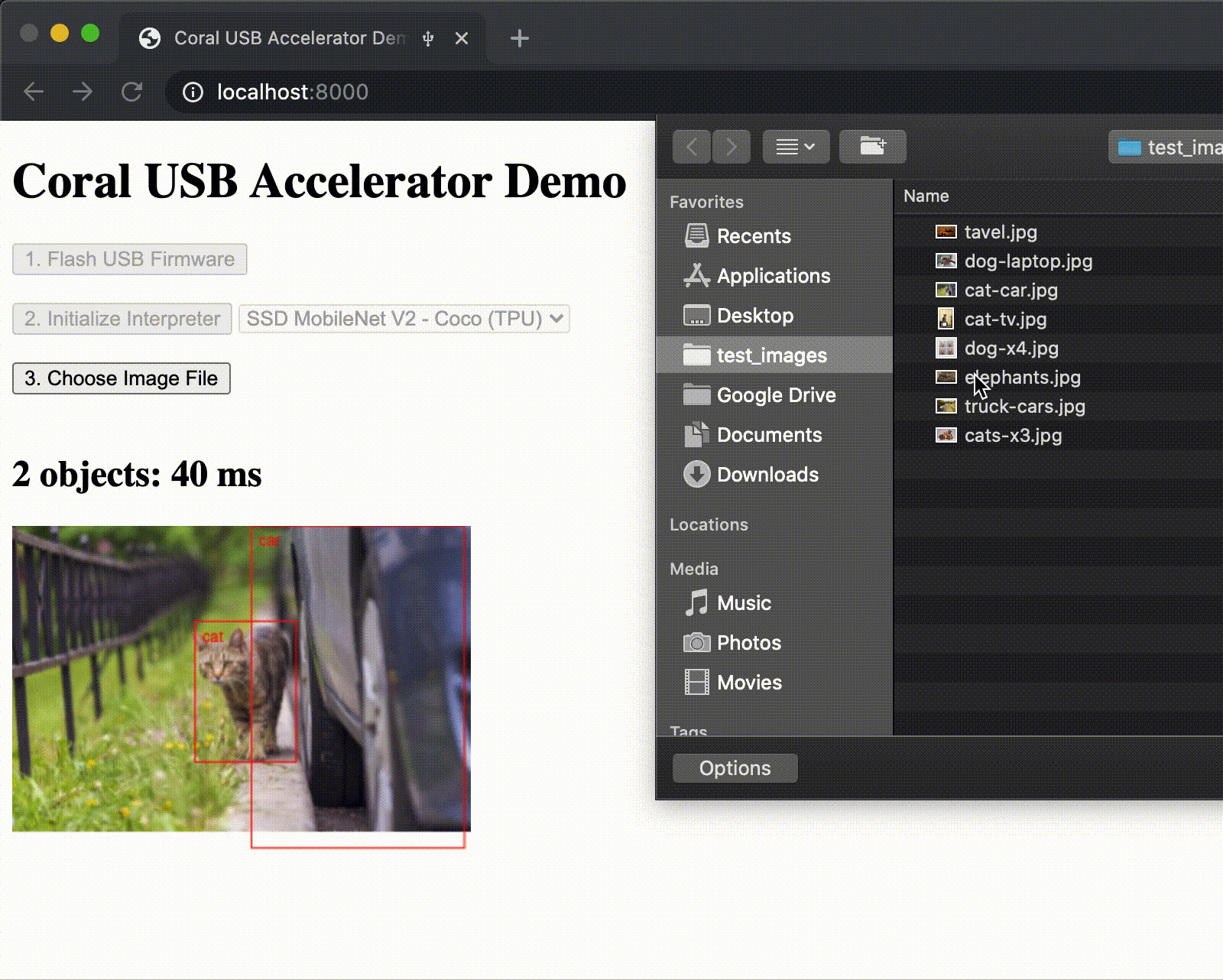

Google Coral is a family of development boards, modules, M.2/mPCIe cards, and USB sticks that support local AI (also known as on-device or offline AI) based on the Google Edge TPU. The company has just published several updates: an important firmware update, a manufacturing demo for worker safety and visual inspection, and the ability to use the Coral USB Accelerator in Chrome.

Coral firmware update prevents board’s excessive wear and tear

If you own the original Coral development board or system-on-module based on the NXP i.MX 8M processor, you may want to update your Mendel Linux installation with:

```
sudo apt update
sudo apt dist-upgrade
```

The update includes a patch from NXP with a critical fix to part of the SoC power configuration. Without this patch, the SoC might be overstressed, and the lifetime of your board could be reduced. Note that this only affects NXP-based boards, so other Coral products such as the Coral Dev Board Mini powered by the MediaTek MT8167S […]