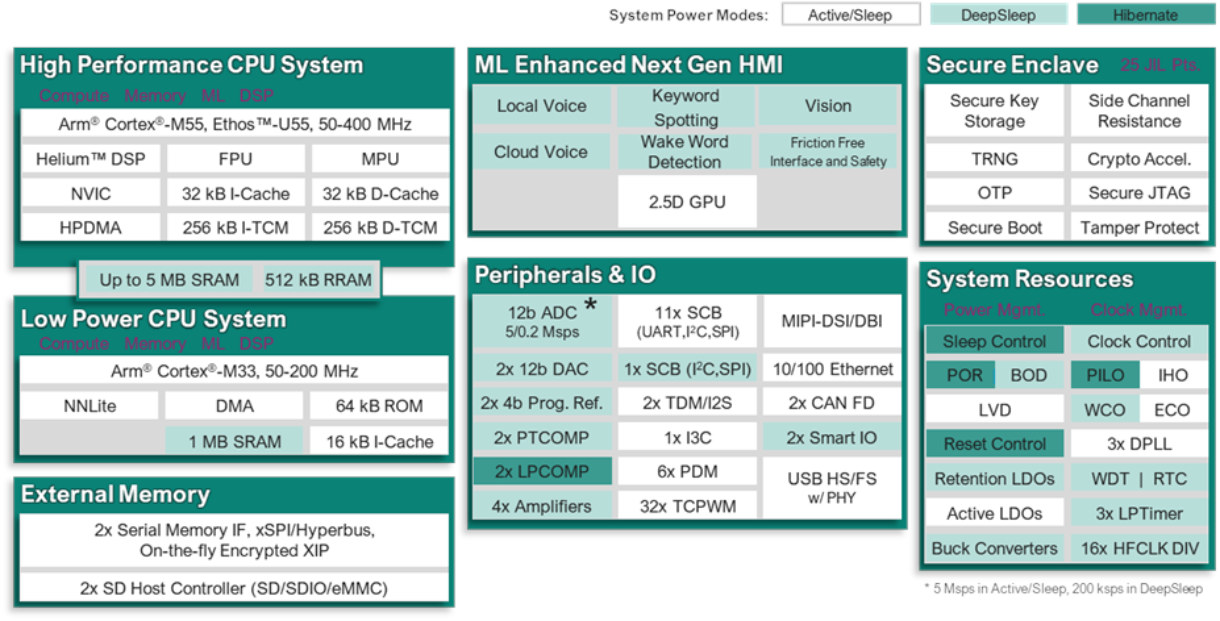

Infineon PSOC Edge E81, E83, and E84 MCU series are dual-core Cortex-M55/M33 microcontrollers with an optional Arm Ethos-U55 microNPU and 2.5D GPU, designed for IoT, consumer, and industrial applications that could benefit from machine learning acceleration. This follows the utterly useless announcement Infineon made about PSoC Edge Cortex-M55/M33 microcontrollers in December 2023, since the new announcement introduces actual parts that people may use in their designs. The PSOC Edge E81 series is an entry-level ML microcontroller, the PSOC Edge E83 series adds more advanced machine learning with the Ethos-U55 microNPU, and the PSOC Edge E84 series further adds a 2.5D GPU for HMI applications. Infineon PSOC Edge E81, E83, E84-series specifications: MCU cores – Arm Cortex-M55 high-performance CPU system up to 400 MHz with FPU, MPU, Arm Helium support, 256KB I-TCM, 256KB D-TCM, 4MB SRAM (Edge E81/E83) or 5MB SRAM (Edge E84); Arm Cortex-M33 low-power CPU system up […]

AAEON BOXER-8645AI Jetson AGX Orin-powered embedded AI system supports up to 8 GMSL2 cameras

AAEON BOXER-8645AI is an embedded AI system powered by NVIDIA Jetson AGX Orin that features eight GMSL2 connectors for e-con Systems’ NileCAM25 Full HD global shutter GMSL2 color cameras with cables up to 15 meters long. The BOXER-8645AI is fitted with the Jetson AGX Orin 32GB module with 32GB LPDDR5 and 64GB flash, delivering up to 200 TOPS of AI performance. Other features include M.2 NVMe and 2.5-inch SATA storage, 10GbE and GbE networking ports, HDMI video, and a few DB9 connectors for RS232, RS485, DIO, and CAN bus interfaces. The embedded system takes a wide 9V to 36V DC input through a 3-pin terminal block. AAEON BOXER-8645AI specifications: AI accelerator module – NVIDIA Jetson AGX Orin 32GB; CPU – 8-core Arm Cortex-A78AE v8.2 64-bit processor with 2MB L2 + 4MB L3 cache; GPU / AI accelerators – NVIDIA Ampere architecture with 1792 NVIDIA CUDA cores and 56 Tensor Cores @ 1 GHz […]

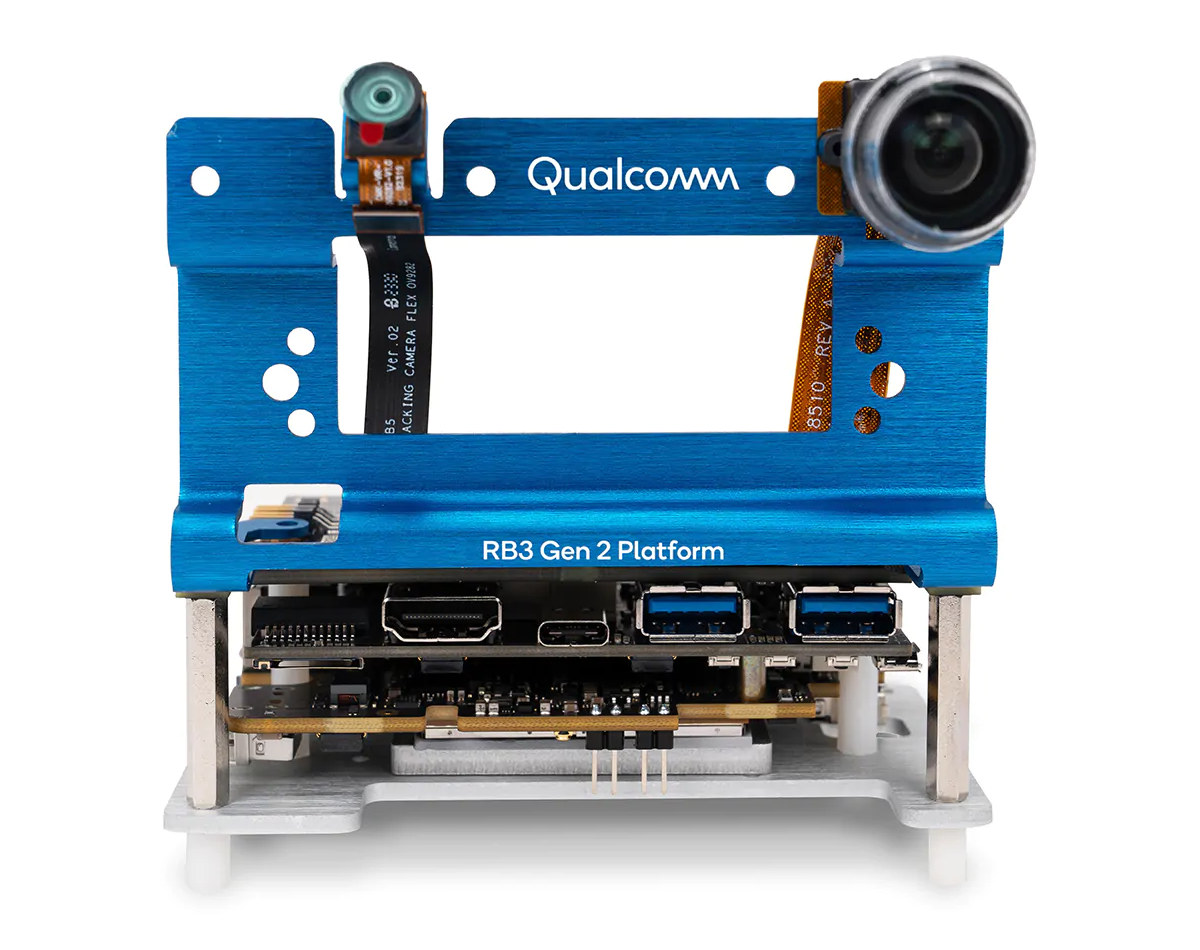

Qualcomm RB3 Gen 2 Platform with Qualcomm QCS6490 AI SoC targets robotics, IoT and embedded applications

Qualcomm had two main announcements at Embedded World 2024: the ultra-low-power Qualcomm QCC730 WiFi microcontroller for battery-powered IoT devices, and the Qualcomm RB3 Gen 2 Platform, a hardware and software solution for IoT and embedded applications based on the Qualcomm QCS6490 processor, which we’re going to cover today. The kit consists of a QCS6490 octa-core Cortex-A78/A55 system-on-module with 12 TOPS of AI performance, 6GB RAM, and 128GB UFS flash, connected to the 96Boards-compliant Qualcomm RBx development mainboard through an interposer, as well as optional cameras, a microphone array, and sensors. Qualcomm QCS6490/QCM6490 IoT processor specifications: CPU – Octa-core Kryo 670 with 1x Gold Plus core (Cortex-A78) @ 2.7 GHz, 3x Gold cores (Cortex-A78) @ 2.4 GHz, 4x Silver cores (Cortex-A55) @ up to 1.9 GHz; GPU – Adreno 643L GPU @ 812 MHz with support for OpenGL ES 3.2, OpenCL 2.0, Vulkan 1.x, DX FL 12; DSP – Hexagon […]

SolidRun Bedrock R8000 is the first industrial PC to feature the AMD Ryzen Embedded 8000 series

Israeli embedded systems manufacturer SolidRun has recently introduced the Bedrock R8000, a new fanless industrial PC targeted at edge AI applications. The Bedrock R8000 integrates the newly announced AMD Ryzen Embedded 8000 series processors with 8 Zen 4 cores and 16 threads clocked at up to 5.1 GHz. The Ryzen Embedded 8000 series has a 16 TOPS NPU for AI workloads and offers up to 10 years of guaranteed availability. Additionally, up to three AI accelerators (either Hailo-10 or Hailo-8) can be combined with the onboard NPU to achieve over 100 TOPS for generative AI or inference workloads. Apart from the Ryzen Embedded 8000 series, the Bedrock R8000 series also supports other Accelerated Processing Units (APUs) in the “Hawk Point” family. The CPU power limit can be adjusted in the BIOS within a range of 8W to 54W. Memory goes up to 96GB DDR5 ECC/non-ECC, and three NVMe PCIe Gen4 […]

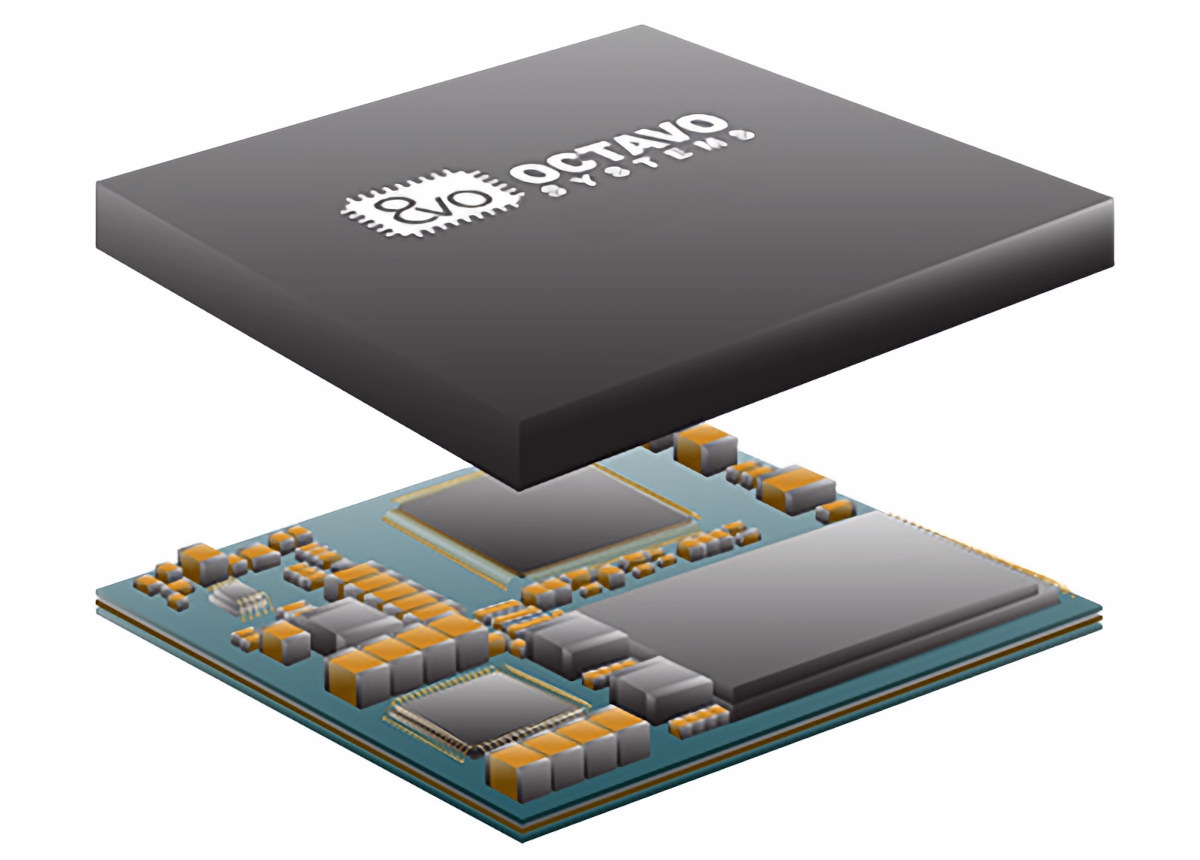

Octavo OSD32MP2 System-in-Package (SiP) packs an STM32MP25 SoC, DDR4, EEPROM, and passive components into a single chip

Octavo Systems OSD32MP2 is a family of two System-in-Package (SiP) modules, the OSD32MP2 and OSD32MP2-PM, that combine the STMicro STM32MP25 Arm Cortex-A35/M33 AI processor with DDR4 memory and various other components to reduce the complexity, size, and total cost of ownership of solutions based on STM32MP2 chips. The OSD32MP2 is a larger, yet still compact, 21x21mm package with the STM32MP25, DDR4, EEPROM, oscillators, PMIC, passive components, and an optional RTC, while the OSD32MP2-PM is even smaller at 14x9mm and combines the STM32MP25, DDR4, and passive components in a single chip. OSD32MP2 specifications: SoC – STMicro STM32MP25; CPU – Up to 2x 64-bit Arm Cortex-A35 @ 1.5 GHz; MCU – 1x Cortex-M33 @ 400 MHz with FPU/MPU, 1x Cortex-M0+ @ 200 MHz in SmartRun domain; GPU – VeriSilicon 3D GPU @ 900 MHz with OpenGL ES 3.2 and Vulkan 1.2 API support; VPU – 1080p60 H.264, VP8 video decoder/encoder; Neural […]

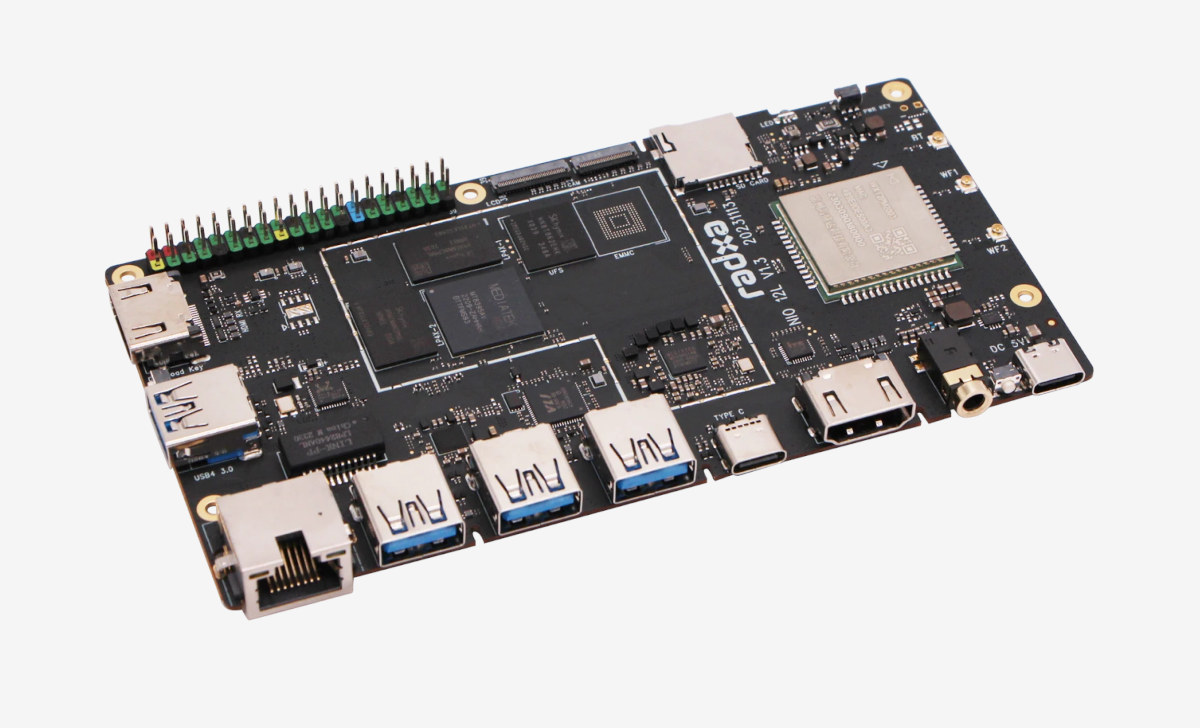

Radxa NIO 12L – A low-profile MediaTek Genio 1200 SBC with Ubuntu certification for at least 5 years of updates

Radxa NIO 12L is a low-profile single board computer (SBC) based on the MediaTek Genio 1200 octa-core Cortex-A78/A55 SoC with a 4 TOPS NPU. It has received Ubuntu certification with at least 5 years of software updates, or up to 10 years for an extra fee. The board comes with up to 16GB RAM, 512GB UFS storage, HDMI, USB-C (DisplayPort), and MIPI DSI video interfaces, a 4K-capable HDMI input port, two MIPI CSI camera interfaces, gigabit Ethernet and WiFi 6 connectivity, five USB ports, and a 40-pin GPIO header for expansion. Radxa NIO 12L specifications: SoC – MediaTek Genio 1200 (MT8395); CPU – Quad-core Arm Cortex-A78 @ 2.2 to 2.4 GHz, quad-core Arm Cortex-A55 @ up to 2.0 GHz; GPU – Arm Mali-G57 MC5 GPU with support for OpenGL ES 1.1, 2.0, and 3.2, OpenCL 1.1, 1.2, and 2.2, Vulkan 1.1 and 1.2; 2D image acceleration module; APU – Dual-core AI Processor Unit (APU) Cadence […]

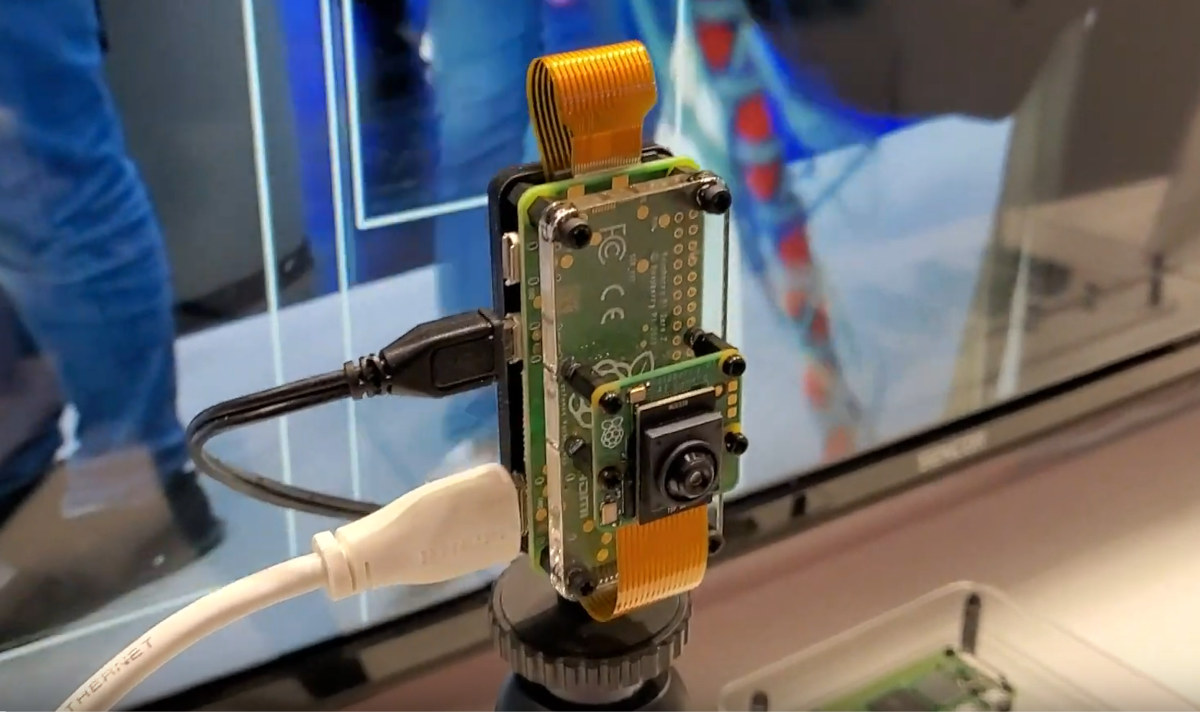

Raspberry Pi at Embedded World 2024: AI camera, M.2 HAT+ M Key board, and 15.6-inch monitor

While Raspberry Pi has not officially announced anything new for Embedded World 2024 so far, the company is currently showcasing some new products there, including an AI camera built around a Raspberry Pi Zero 2 W and a Sony IMX500 AI sensor, the long-awaited M.2 HAT+ M Key board, and a 15.6-inch monitor. Raspberry Pi AI Camera kit content and basic specs: SBC – Raspberry Pi Zero 2 W with Broadcom BCM2710A1 quad-core Arm Cortex-A53 @ 1GHz (overclockable to 1.2 GHz), 512MB LPDDR2; AI camera – Sony IMX500 intelligent vision sensor, 76-degree field of view, manually adjustable focus, 20cm cables. The kit comes preloaded with a MobileNet machine vision model, but users can also import custom TensorFlow models. This new kit is not really a surprise, as we mentioned that Sony and Raspberry Pi were working on exactly this when we covered the Sony IMX500 sensor. I got the information from […]

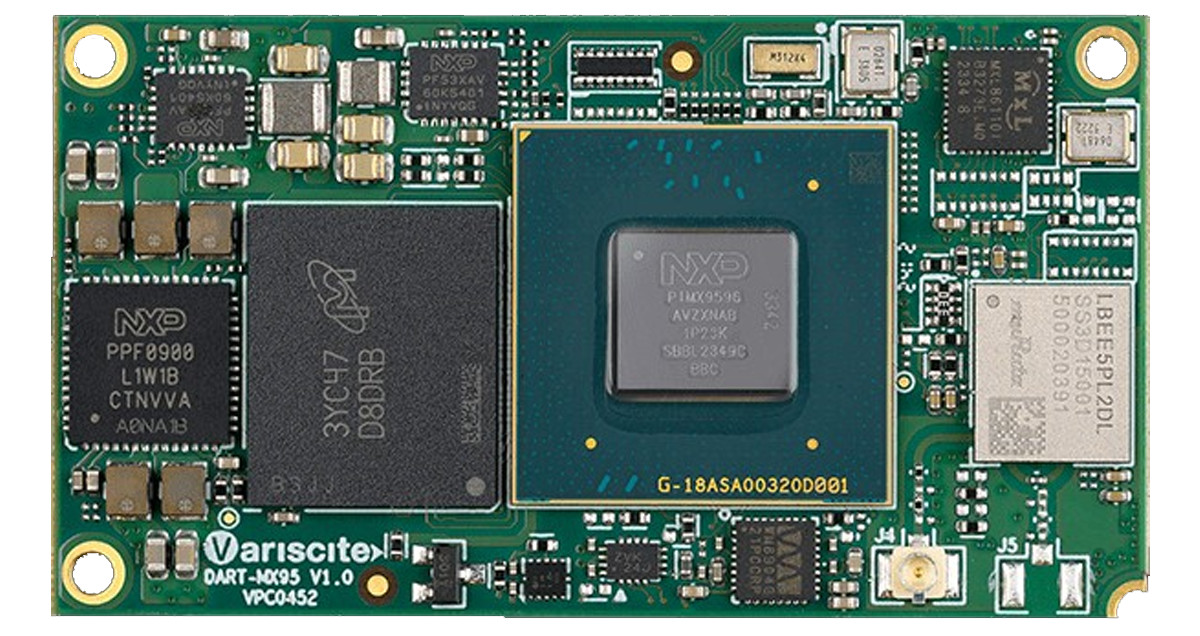

Variscite DART-MX95 SoM – Edge Computing with dual GbE, 10GbE, Wi-Fi 6, and AI/ML capabilities

Introduced at Embedded World 2024, the Variscite DART-MX95 SoM is powered by NXP’s i.MX 95 SoC and features an array of high-speed peripherals including dual GbE, 10GbE, and dual PCIe interfaces. Additionally, this SoM supports up to 16GB LPDDR5 RAM and up to 128GB of eMMC storage. It also provides a MIPI DSI display interface, multiple audio interfaces, MIPI CSI-2 for camera connectivity, USB ports, and a wide range of other I/Os, making it suitable for a variety of applications. We have previously covered many different SoMs designed by Variscite, including the Variscite VAR-SOM-6UL, Variscite DART-MX8M, and Variscite DART-6UL SoM, among others. Feel free to check those out if you are looking for products with NXP SoCs. Variscite DART-MX95 SoM specifications: SoC – NXP i.MX 95; CPU – Up to 6x Arm Cortex-A55 cores @ 2.0GHz; Real-time co-processors – 800MHz Cortex-M7 and 250MHz Cortex-M33; 2D/3D graphics acceleration – 3D GPU with OpenGL ES 3.2, […]