ADLINK “AI Camera Dev Kit” is a pocket-sized NVIDIA Jetson Nano devkit with an 8MP image sensor and industrial digital inputs & outputs, designed for rapid AI vision prototyping. The kit also features a Gigabit Ethernet port, a USB-C port for power, data, and video output up to 1080p30, a microSD card with Linux (Ubuntu 18.04), and a micro USB port to flash the firmware. As we’ll see further below, it also comes with drivers and software to quickly get started with AI-accelerated computer vision applications. AI Camera Dev Kit specifications: System-on-Module – NVIDIA Jetson Nano with CPU – Quad-core Arm Cortex-A57 processor GPU – NVIDIA Maxwell architecture with 128 NVIDIA CUDA cores System Memory – 4 GB 64-bit LPDDR4 Storage – 16 GB eMMC Storage – MicroSD card socket ADLINK NEON-series camera module Sony IMX179 color sensor with rolling shutter Resolution – 8MP (3280 x 2464) Frame Rate (fps) – […]

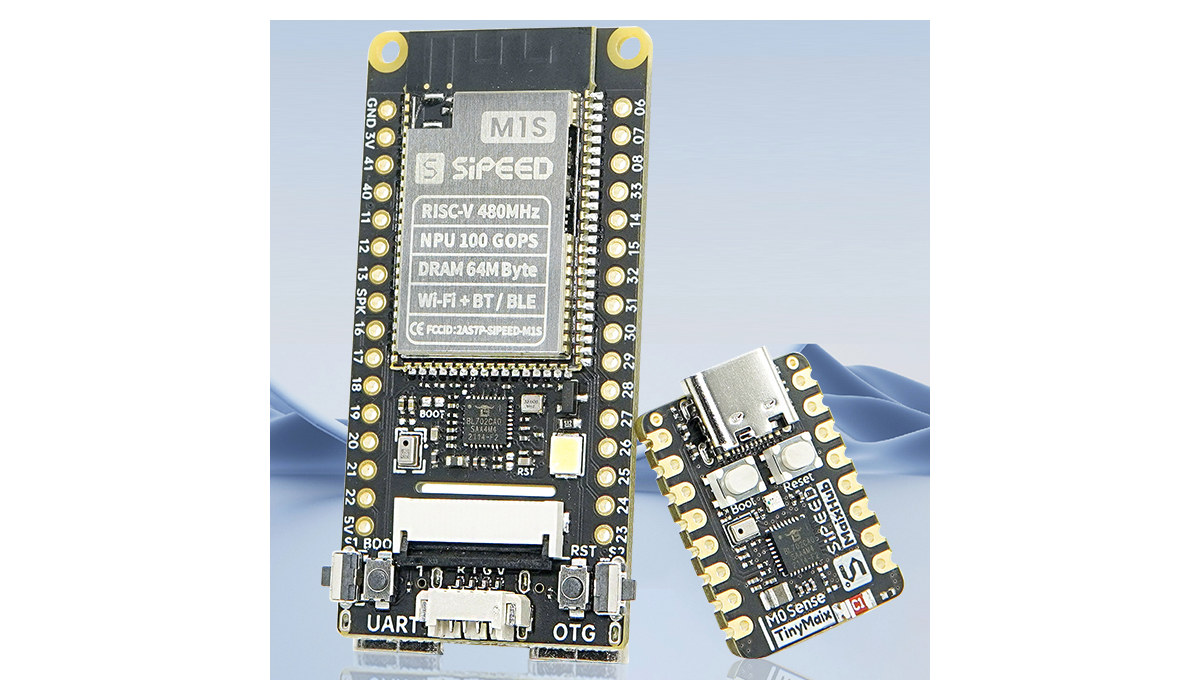

Sipeed M1s & M0sense – Low-cost BL808 & BL702 based AI modules (Crowdfunding)

Sipeed has launched the M1s and M0sense AI modules. Designed for AIoT applications, the Sipeed M1s is based on the Bouffalo Lab BL808 32-bit/64-bit RISC-V wireless SoC with WiFi, Bluetooth, and an 802.15.4 radio for Zigbee support, as well as the BLAI-100 (Bouffalo Lab AI engine) NPU for video/audio detection and/or recognition. The Sipeed M0sense targets TinyML applications with the Bouffalo Lab BL702 32-bit microcontroller also offering WiFi, BLE, and Zigbee connectivity. Sipeed M1s AIoT module The Sipeed M1s is an update to the Kendryte K210-powered Sipeed M1 introduced several years ago. Sipeed M1s module specifications: SoC – Bouffalo Lab BL808 with CPU Alibaba T-head C906 64-bit RISC-V (RV64GCV+) core @ 480MHz Alibaba T-head E907 32-bit RISC-V (RV32GCP+) core @ 320MHz 32-bit RISC-V (RV32EMC) core @ 160 MHz Memory – 768KB SRAM and 64MB embedded PSRAM AI accelerator – NPU BLAI-100 (Bouffalo Lab AI engine) for video/audio detection/recognition delivering up […]

MediaTek unveils Dimensity 9200 Octa-core Cortex-X3/A710/A510 5G mobile processor

MediaTek has just launched the Dimensity 9200 octa-core flagship 5G mobile processor with one Cortex-X3 core, three Cortex-A710 cores, and four Cortex-A510 cores, as well as the latest Arm Immortalis-G715 GPU. Manufactured on a TSMC 4nm process for efficiency, the new flagship processor supports mmWave 5G and sub-6GHz cellular connectivity, LPDDR5x 8,533 Mbps memory, UFS 4.0 storage, and embeds a faster MediaTek APU 690 AI processor. MediaTek Dimensity 9200 specifications: Octa-core CPU subsystem 1x Arm Cortex-X3 core at up to 3.05 GHz 3x Arm Cortex-A710 cores at up to 2.85 GHz 4x Arm Cortex-A510 cores at up to 1.80 GHz 8MB L3 cache 6MB system cache GPU – Arm Immortalis-G715 with support for Vulkan 1.3, hardware-based ray tracing engine AI Accelerator – MediaTek APU 690 AI processor with MDLA (MediaTek Deep Learning Accelerator), MVPU (MediaTek Vision Processing Unit), SME (I don’t know what that is), and DMA Memory I/F – LPDDR5x 8,533 […]

AXERA AX620A 4K AI SoC delivers up to 14.4 TOPS for computer vision applications

AXERA AX620A is a high-performance, low-power AI SoC with a quad-core Arm Cortex-A7 processor and an NPU rated at 14.4 TOPS @ INT4 or 3.6 TOPS @ INT8, which is slightly inferior to the Amlogic A311D. It is mainly used for AI vision applications. With high computing power and built-in image processing capabilities, the AX620A can support a wide range of AI workloads. It also offers low power consumption with low standby power and fast wake-up, so the chip can be integrated into battery-powered products. AXERA AX620A specifications: CPU – Quad-core Arm Cortex-A7 @ 1.0 GHz with 32KB L1 I-cache + 32KB L1 D-cache per core, 256KB L2 cache, FPU and NEON NPU – 14.4 TOPS @ INT4, 3.6 TOPS @ INT8 with support for Imagenet, AlexNet, VGG, ResNet, GoogLeNet, Faster R-CNN, SSD, FPN, Yolo V3, and other neural networks. ISP Proton AI-ISP up to 4Kp30 4 channels of camera support up to 4x 1080p30 Support […]
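As a quick sanity check on the NPU figures quoted above, the short sketch below computes how the INT4 and INT8 throughput numbers relate. Only the two TOPS values come from the excerpt; the function and variable names are illustrative.

```python
# NPU throughput figures quoted in the excerpt (tera-operations per second).
INT4_TOPS = 14.4
INT8_TOPS = 3.6


def throughput_ratio(high: float, low: float) -> float:
    """Return how many times larger `high` is than `low`."""
    return high / low


ratio = throughput_ratio(INT4_TOPS, INT8_TOPS)
print(f"INT4 throughput is {ratio:.1f}x the INT8 throughput")
```

In other words, the quoted INT4 rate is four times the INT8 rate, so comparisons with other NPUs should use figures at the same precision.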

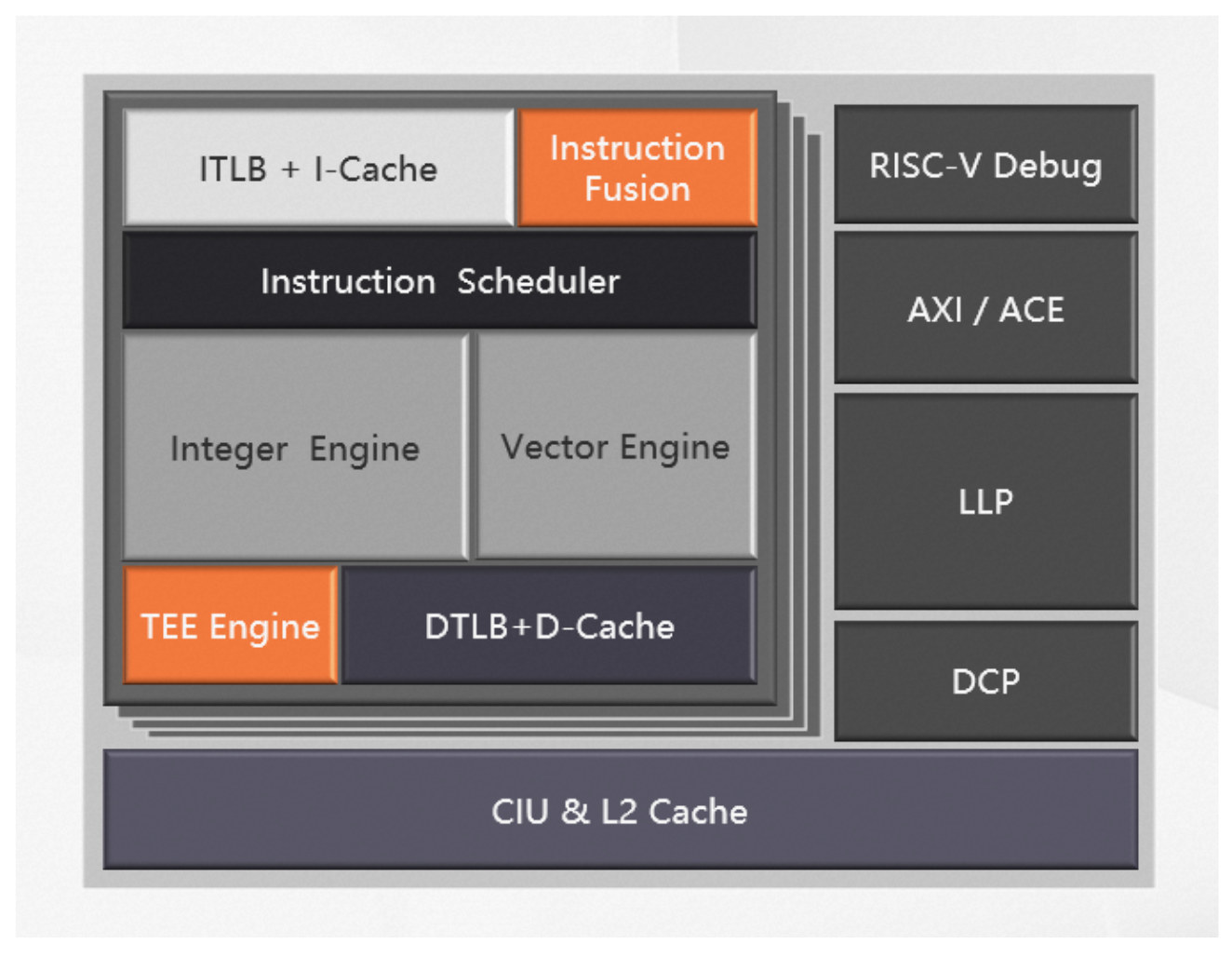

T-Head XuanTie C908 RISC-V core targets AIoT applications

We’ve seen two announcements of high-end RISC-V cores this week with the SiFive P670 and Andes AX65 processors, each with a 4-way out-of-order pipeline, but Alibaba’s T-Head Semiconductor XuanTie C908 is a little different with a dual-issue, 9-stage in-order pipeline and support for RISC-V Vector extension acceleration targeting mid-range AIoT applications. The C908 64-bit RISC-V core adopts the RV64GCB[V] instruction set and complies with the RVA22 profile for better compatibility with Android and other “rich” operating systems. The company says its performance is between the C906 and C910 cores introduced in 2020 and 2019 respectively. XuanTie C908 highlights: RV32GCB[V] 32-bit and RV64GCB[V] 64-bit RISC-V architectures with Bit manipulation and (optional) Vector operations extensions Support for RV32 COMPAT mode which allows for 64-bit RISC-V CPUs to run 32-bit binary code, and was merged into Linux 5.19. XuanTie extensions, including Instruction, Memory Attributes Extension (XMAE). RVA22 profile compatibility Cluster of 1 to […]

Andes unveils AndesCore AX65 Out-of-Order RISC-V core for compute intensive applications

Andes Technology has unveiled the high-end AndesCore AX60 series out-of-order 64-bit RISC-V processors at the Linley Fall Processor Conference 2022, with the new cores designed for compute-intensive applications such as advanced driver-assistance systems (ADAS), artificial intelligence, augmented/virtual reality, datacenter accelerators, 5G infrastructure, high-speed networking, and enterprise storage. AndesCore AX65 is the first member of the family and supports the RISC-V scalar cryptography and bit manipulation extensions. It is a 4-way superscalar core with Out-of-Order (OoO) execution in a 13-stage pipeline and can fetch 4 to 8 instructions per cycle. The company further explains that the AX65 core then decodes, renames, and dispatches up to 4 instructions into 8 execution units, including 4 integer units, 2 full load/store units, and 2 floating-point units. The AX65’s memory subsystem also includes split 2-level TLBs (translation lookaside buffers) with up to 64 outstanding load/store instructions. Up to eight AX65 cores (or should that then be […]

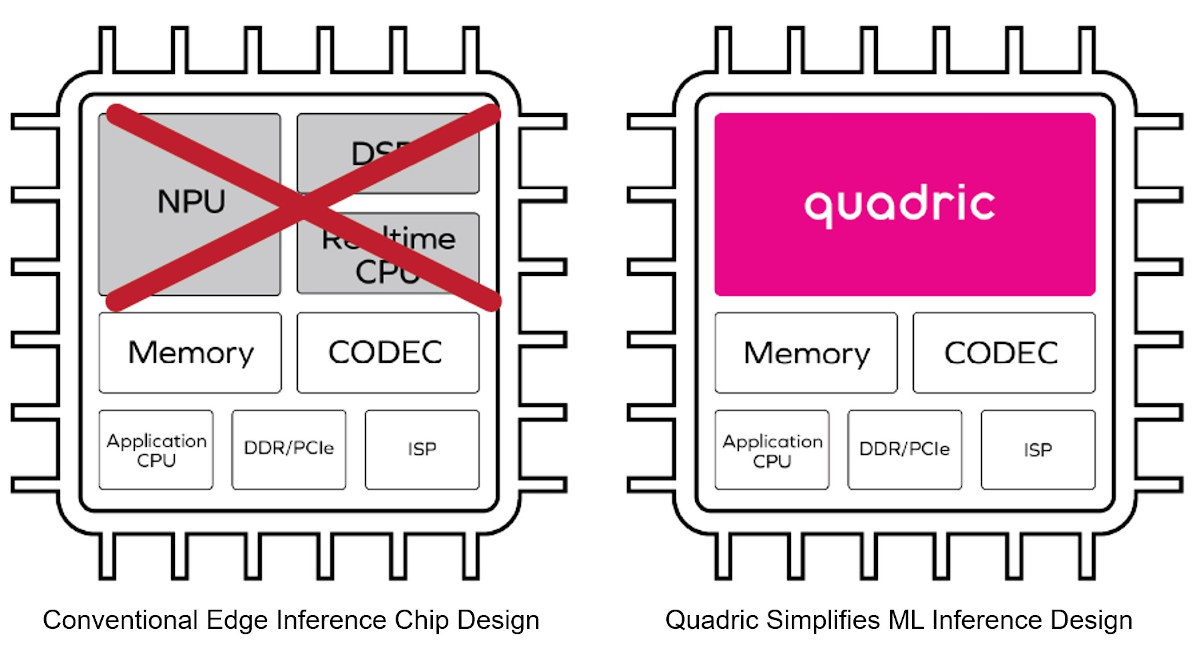

Quadric Chimera GPNPU IP combines NPU, DSP, and real-time CPU into one single programmable core

A typical chip for AI or ML inference would include an NPU, a DSP, a real-time CPU, plus some memory, an application processor, an ISP, and a few more IP blocks. Quadric Chimera GPNPU (general purpose neural processor unit) IP combines the NPU, DSP, and real-time CPU into one single programmable core. According to Quadric, the main benefit of such a design is simplifying system-on-chip (SoC) hardware design and subsequent software programming once the chip is available, thanks to a unified architecture for machine learning inference as well as pre- and post-processing. Since the core is programmable, it should also be future-proof. Three “QB series” Chimera GPNPU cores are available: Chimera QB1 – 1 TOPS machine learning, 64 GOPS DSP capability Chimera QB4 – 4 TOPS ML, 256 GOPS DSP Chimera QB16 – 16 TOPS ML, 1 TOPS DSP Quadric says the Chimera cores can be used with any (modern) manufacturing process […]
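To see how the three QB series cores scale, the sketch below computes the DSP throughput available per TOPS of machine learning performance. The figures are the ones listed above. Treating 1 TOPS as 1,000 GOPS for the QB16 is an assumption made here for the comparison, and the names are illustrative.

```python
# (ML TOPS, DSP GOPS) per core, as listed in the excerpt.
# Assumption: the QB16's "1 TOPS DSP" is taken as 1,000 GOPS.
CHIMERA_CORES = {
    "QB1": (1, 64),
    "QB4": (4, 256),
    "QB16": (16, 1000),
}


def dsp_gops_per_ml_tops(ml_tops: float, dsp_gops: float) -> float:
    """DSP throughput (GOPS) available for each TOPS of ML throughput."""
    return dsp_gops / ml_tops


ratios = {
    name: dsp_gops_per_ml_tops(ml, dsp)
    for name, (ml, dsp) in CHIMERA_CORES.items()
}

for name, value in ratios.items():
    print(f"{name}: {value} GOPS of DSP per ML TOPS")
```

Under that assumption the ratio stays at roughly 64 GOPS of DSP per ML TOPS across the range, which suggests the DSP capability scales in step with the machine learning throughput.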

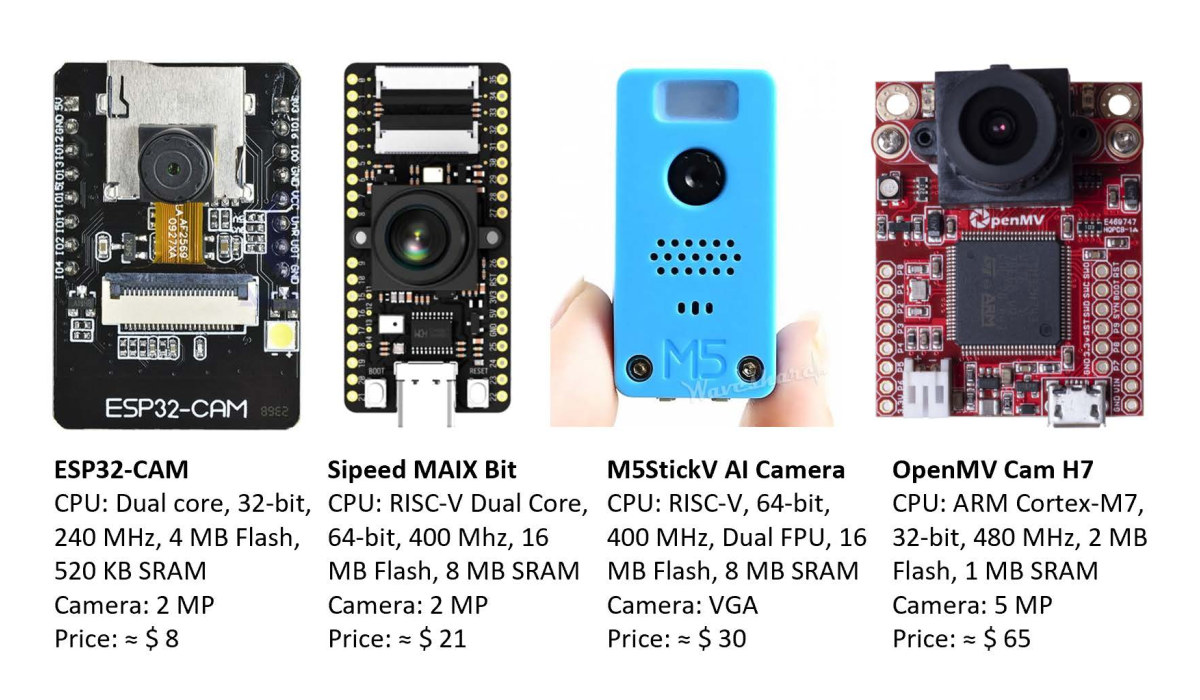

TinyML-CAM pipeline enables 80 FPS image recognition on ESP32 using just 1 KB RAM

The challenge with TinyML is to extract the maximum performance/efficiency at the lowest footprint for AI workloads on microcontroller-class hardware. The TinyML-CAM pipeline, developed by a team of machine learning researchers in Europe, demonstrates what’s possible to achieve on relatively low-end hardware with a camera. More specifically, they managed to reach over 80 FPS image recognition on the sub-$10 ESP32-CAM board with the open-source TinyML-CAM pipeline taking just about 1 KB of RAM. It should work on other MCU boards with a camera, and training does not seem complex since we are told it takes around 30 minutes to implement a customized task. The researchers note that solutions like TensorFlow Lite for Microcontrollers and Edge Impulse already enable the execution of ML workloads on MCU boards using Neural Networks (NNs). However, those usually take quite a lot of memory, between 50 and 500 KB of RAM, and take 100 to 600 ms […]
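To put the 80 FPS figure in perspective, the sketch below converts it into a per-frame time and compares it with the 100 to 600 ms range quoted for NN-based solutions. Since the excerpt is cut off at that point, treating the 100 to 600 ms range as a per-inference time is an assumption, and the names used are illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate into the time budget per frame, in milliseconds."""
    return 1000.0 / fps


# Figure quoted in the excerpt: "over 80 FPS", so 80 is used as a lower bound.
TINYML_CAM_FPS = 80

# Assumption: the truncated "100 to 600 ms" range is a per-inference time.
NN_INFERENCE_MS = (100, 600)

tinyml_cam_ms = frame_time_ms(TINYML_CAM_FPS)
speedups = tuple(t / tinyml_cam_ms for t in NN_INFERENCE_MS)

print(f"TinyML-CAM: about {tinyml_cam_ms} ms per frame")
print(f"Roughly {speedups[0]:.0f}x to {speedups[1]:.0f}x faster per frame")
```

At 80 FPS each frame takes at most 12.5 ms, which under the assumption above works out to roughly 8 to 48 times less time per frame than the NN-based figures.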