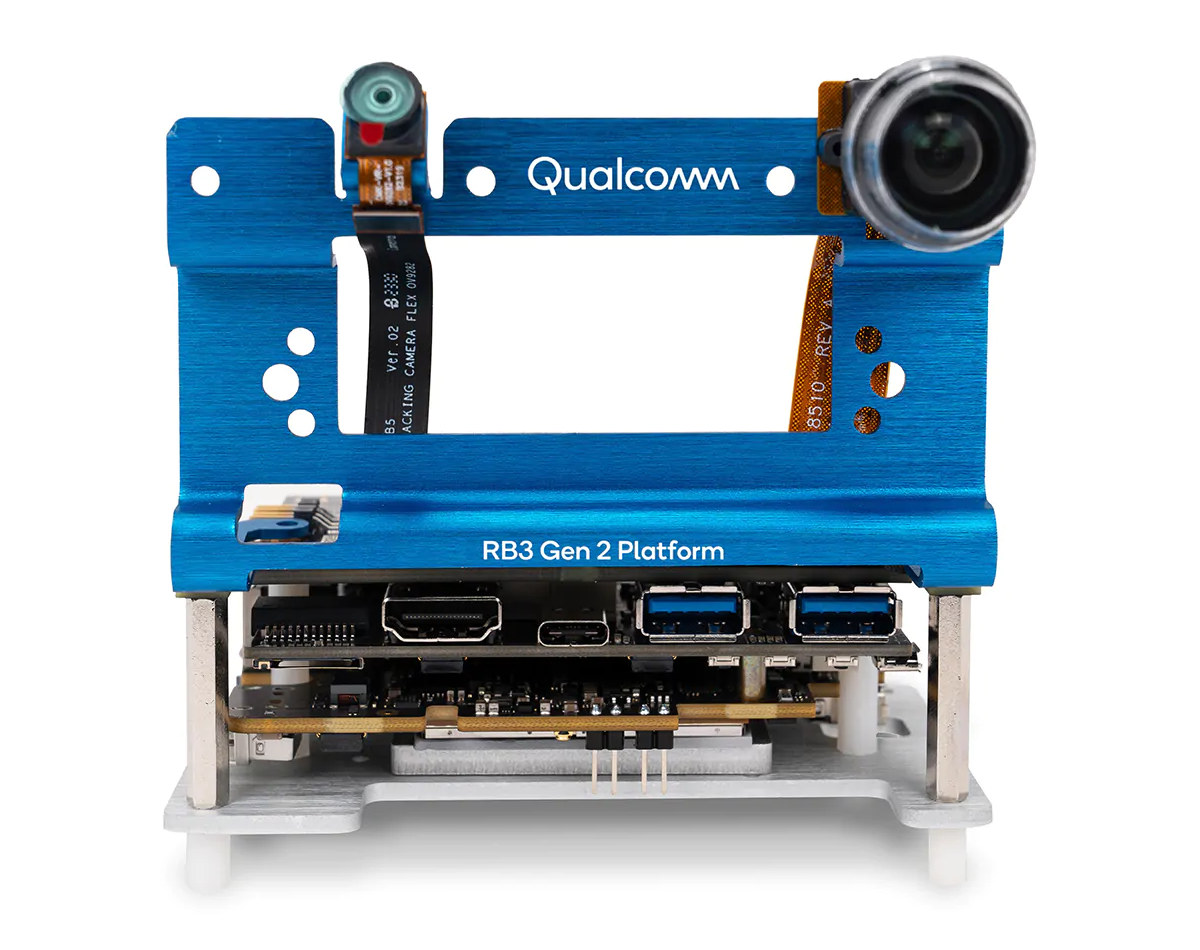

Qualcomm had two main announcements at Embedded World 2024: the ultra-low-power Qualcomm QCC730 WiFi microcontroller for battery-powered IoT devices, and the Qualcomm RB3 Gen 2 Platform, a hardware and software solution for IoT and embedded applications based on the Qualcomm QCS6490 processor, which we’re going to cover today. The kit comprises a QCS6490 octa-core Cortex-A78/A55 system-on-module with 12 TOPS of AI performance, 6GB RAM, and 128GB UFS flash connected to the 96Boards-compliant Qualcomm RBx development mainboard through an interposer, as well as optional cameras, a microphone array, and sensors.

Qualcomm QCS6490/QCM6490 IoT processor specifications:

- CPU – Octa-core Kryo 670 with 1x Gold Plus core (Cortex-A78) @ 2.7 GHz, 3x Gold cores (Cortex-A78) @ 2.4 GHz, 4x Silver cores (Cortex-A55) @ up to 1.9 GHz
- GPU – Adreno 643L GPU @ 812 MHz with support for OpenGL ES 3.2, OpenCL 2.0, Vulkan 1.x, DX FL 12
- DSP – Hexagon […]

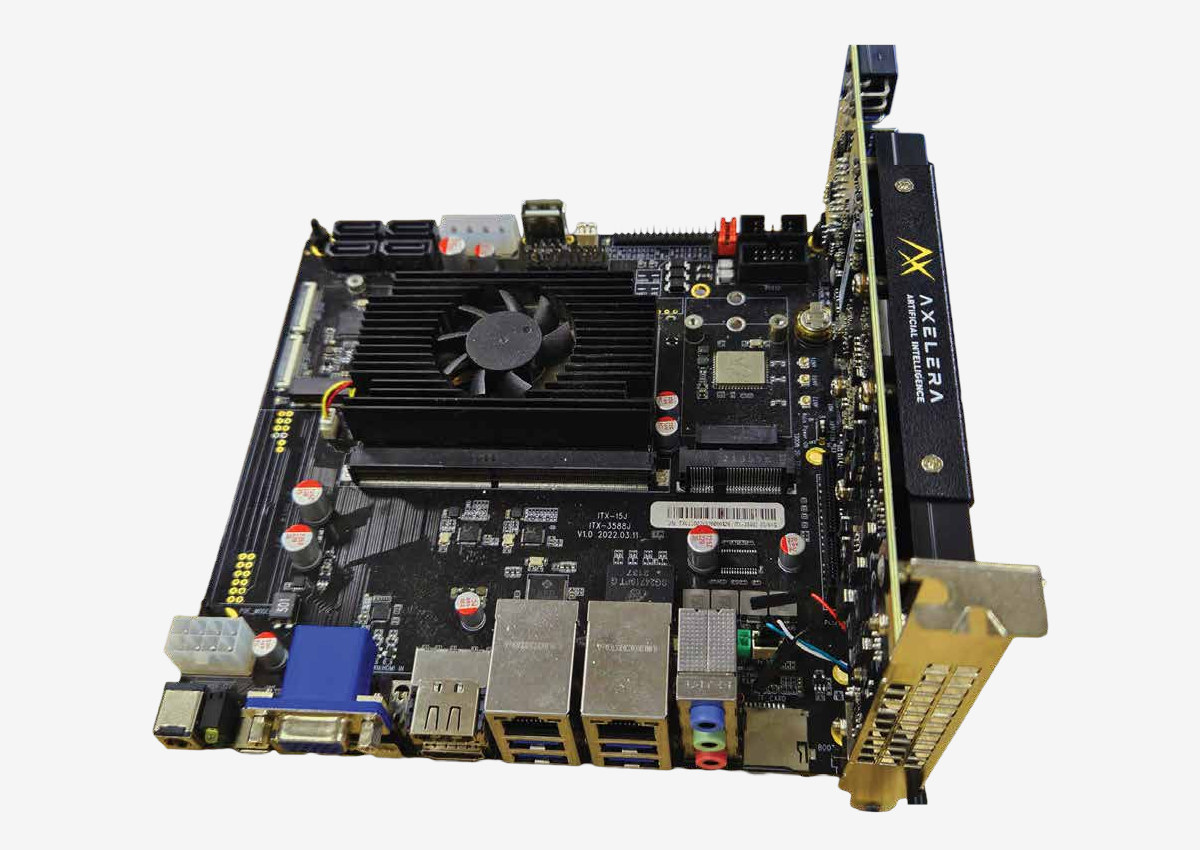

Axelera Metis PCIe Arm AI evaluation kit combines Firefly ITX-3588J mini-ITX motherboard with 214 TOPS Metis AIPU PCIe card

Axelera has announced the general availability of several Metis PCIe AI Evaluation Kits that combine the company’s 214 TOPS Metis AIPU PCIe card with x86 platforms such as Dell 3460XE workstation and Lenovo ThinkStation P360 Ultra computers, Advantech MIC-770v3 or ARC-3534 industrial PCs, or the Firefly ITX-3588J mini-ITX motherboard powered by a Rockchip RK3588 octa-core Cortex-A76/A55 SoC. We’ll look at the latter in detail in this post. When Axelera introduced the Metis M.2 AI accelerator module in January 2023, I was both impressed by and doubtful of the company’s performance claims, since packing a 214 TOPS Metis AIPU in a power-limited M.2 module seemed like a challenge. But it was hard to check independently since the devkits were not available yet, and the company only started its early-access program in August last year. Now, anybody with a budget of 899 Euros and up can try out their larger Metis […]

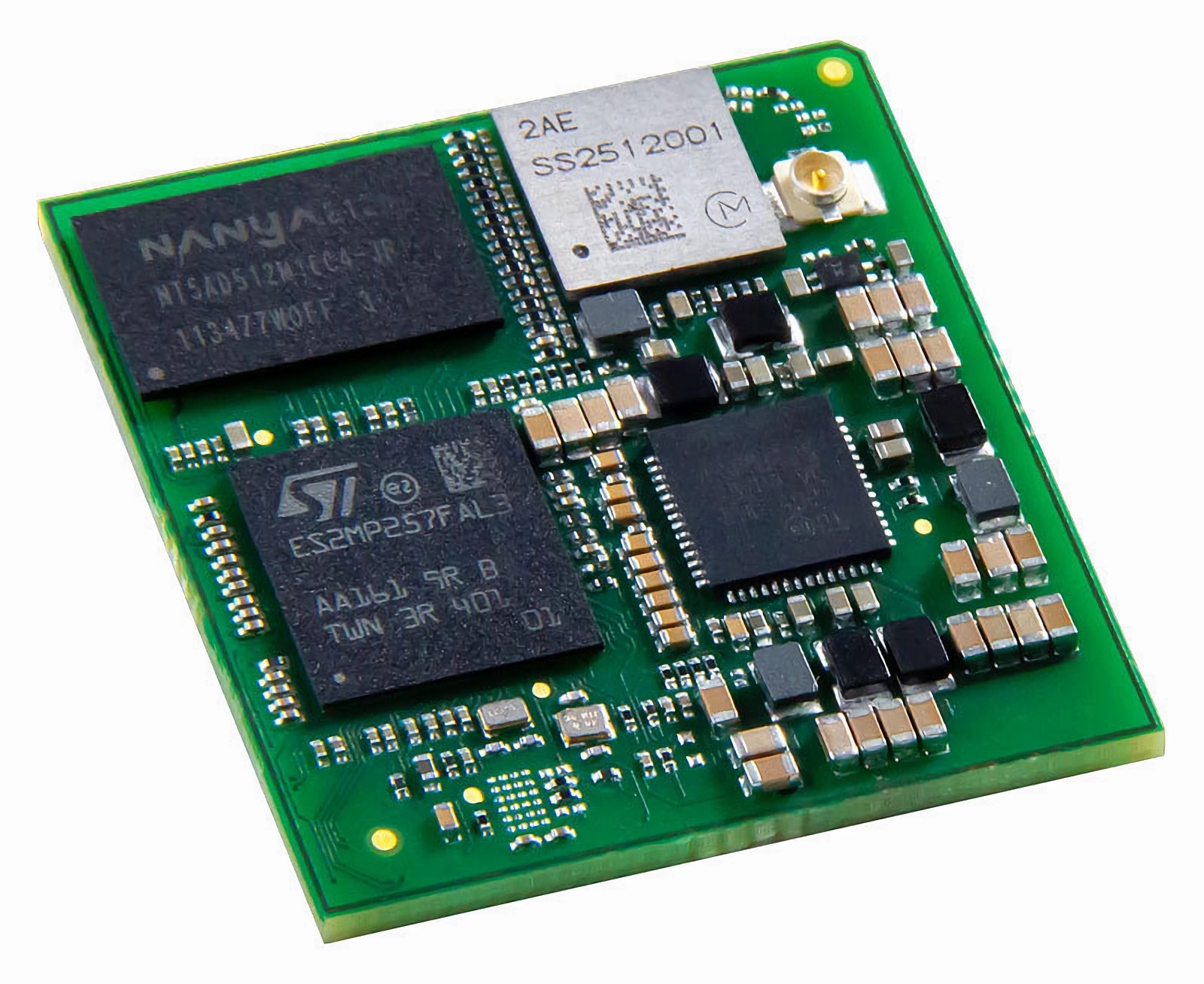

Digi ConnectCore MP25 SoM targets Edge AI and computer vision applications with STM32MP25 MPU

Digi International, an American Industrial IoT solutions provider, has announced its latest system-on-module, the Digi ConnectCore MP25 SoM, at Embedded World 2024 in Nuremberg, Germany. The Digi ConnectCore MP25 SoM is built upon STMicroelectronics’ STM32MP25 microprocessor. It supports artificial intelligence and machine learning functionality through an integrated neural processing unit (NPU) capable of 1.35 tera operations per second (TOPS) and an image signal processor (ISP). It is powered by two 64-bit Arm Cortex-A35 cores running at 1.5GHz, supported by a 32-bit Cortex-M33 core operating at 400MHz and a 32-bit Cortex-M0+ core running at 200MHz. With its machine learning capabilities, support for time-sensitive networking, and versatile connectivity features, the ConnectCore MP25 module is suitable for edge AI, computer vision, and smart manufacturing applications in various sectors, including medical, energy, and transportation.

Digi ConnectCore MP25 specifications:

- SoC – STMicroelectronics STM32MP257F
  - CPU – 2x 64-bit Arm Cortex-A35 @ 1.5 GHz; MCU 1x Cortex-M33 @ […]

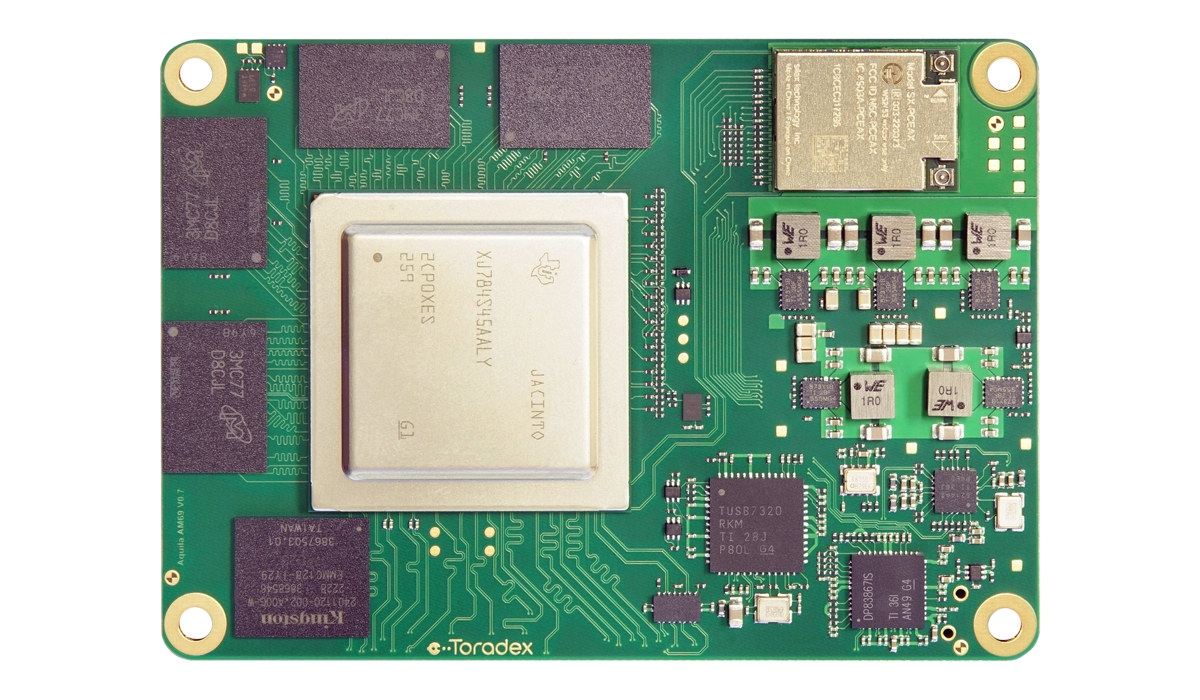

Toradex Aquila AM69 SoM features TI AM69A octa-core Cortex-A72 AI SoC, rugged 400-pin board-to-board connector

Toradex Aquila AM69 is the first system-on-module (SoM) from the company’s Aquila family with a small form factor and a rugged ~400-pin board-to-board connector targeting demanding edge AI applications in medical, industrial, and robotics fields with Arm platforms that deliver x86-level performance at low power. The Aquila AM69 SoM is powered by a Texas Instruments AM69A octa-core Arm Cortex-A72 SoC with four accelerators delivering 32 TOPS of AI performance, up to 32GB LPDDR4, 128GB eMMC flash, a built-in WiFi 6E and Bluetooth 5.3 module, and a board-to-board connector for display, camera, and audio interfaces, as well as dual gigabit Ethernet and multiple PCIe Gen3 and SerDes interfaces. All that in a form factor that’s only slightly bigger (86x60mm) than a business card or a Raspberry Pi 5.

Toradex Aquila AM69 specifications:

- SoC – Texas Instruments AM69A
  - Application processor – Up to 8x Arm Cortex-A72 cores at up to 2.0 GHz […]

AMD Ryzen Embedded 8000 Series processors target industrial AI with 16 TOPS NPU

AMD has recently “announced” the Ryzen Embedded 8000 Series processors in a community post. The latest AMD embedded devices combine a 16 TOPS NPU based on the AMD XDNA architecture with CPU and GPU elements for a total of 39 TOPS and are designed for industrial artificial intelligence. The Ryzen Embedded 8000 CPUs will be found in machine vision, robotics, and industrial automation applications to enhance quality control and inspection processes and to enable real-time, on-device route-planning decisions with minimal latency, predictive maintenance, and autonomous control of industrial processes.

AMD Ryzen Embedded 8000 key features and shared specifications:

- CPU – Up to 8 “Zen 4” cores, 16 threads
- Cache
  - L1 Instruction Cache – 32 KB, L1 Data Cache – 32 KB (per core)
  - L2 Cache – Up to 8 MB (total)
  - L3 Cache – Up to 16 MB unified
- Graphics – RDNA 3 graphics with up to 6 WGPs (Work Group Processors) […]

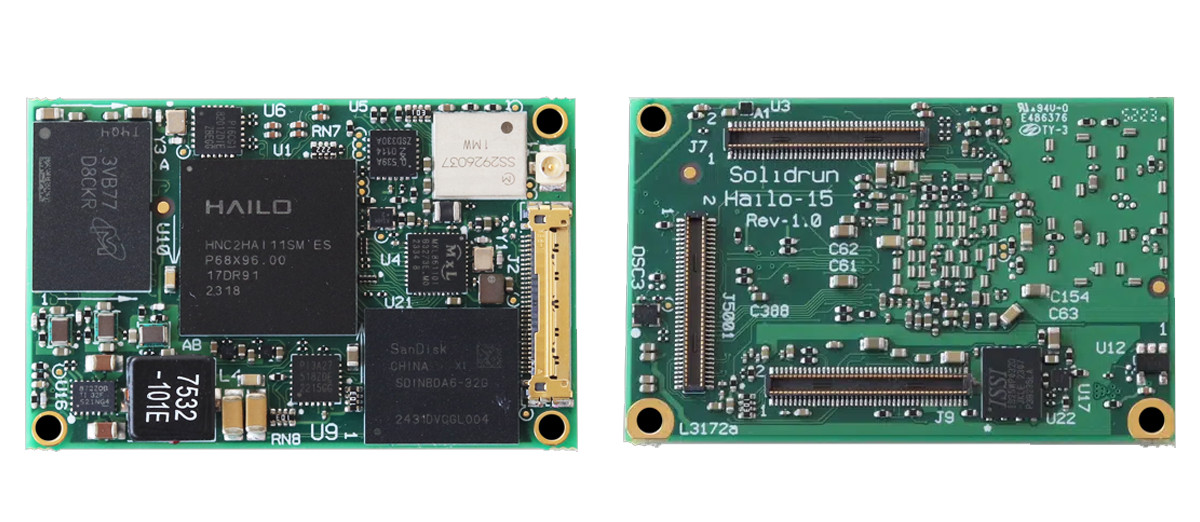

SolidRun launches Hailo-15 SOM with up to 20 TOPS AI vision processor

In March last year, we saw Hailo introduce their quad-core Cortex-A53-based Hailo-15 AI Vision processor. The processor features an advanced computer vision engine and can deliver up to 20 TOPS of processing power. However, after that initial release, we didn’t see any commercial products with the SoC. But in a recent development, SolidRun has released a SOM that not only features the Hailo-15 SoC but also integrates up to 8GB LPDDR4 RAM and 256GB eMMC storage, along with dual camera support and an H.265/H.264 video encoder. This is not the first SOM that SolidRun has released. Previously, we wrote about the SolidRun RZ/G2LC SOM, and before that, SolidRun launched the LX2-Lite SOM along with the ClearFog LX2-Lite dev board. Last month, they released their first COM Express module based on the Ryzen V3000 Series APU.

Specifications of SolidRun’s Hailo-15 SOM:

- SoC – Hailo-15 with 4x Cortex-A53 @ 1.3 GHz; […]

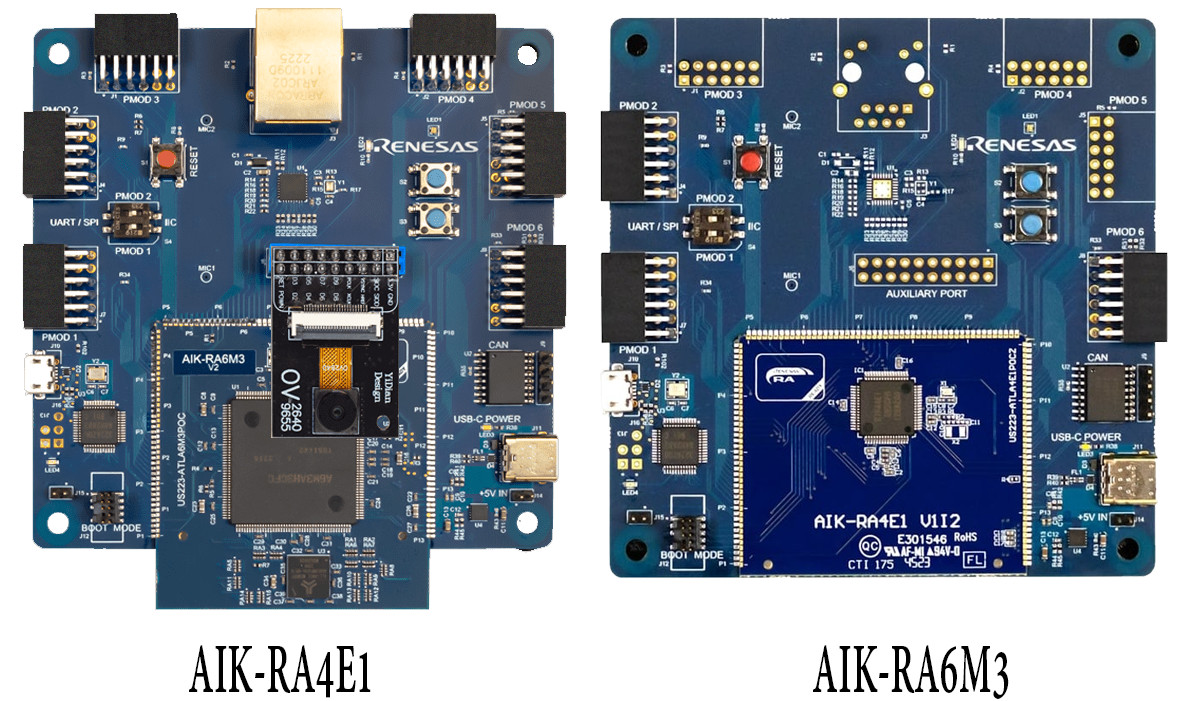

Renesas AIK-RA4E1 and AIK-RA6M3 reference kits are designed for accelerated AI/ML development

Renesas AIK-RA4E1 and AIK-RA6M3 are two new development boards based on RA-series 32-bit microcontrollers. These new dev boards have multiple reconfigurable connectivity functions to shorten AI and ML design and development time. Both boards appear similar, but the AIK-RA4E1 uses the R7FA4E110D2CFM MCU, features three Pmod ports, and has no Ethernet support. On the other hand, the AIK-RA6M3 utilizes the R7FA6M3AH3CFC MCU, has six Pmod ports, and includes Ethernet support. Both boards support full-speed USB and CAN bus.

Renesas AIK-RA4E1 and AIK-RA6M3 reference kits specifications (consolidated):

- RA4E1 Microcontroller Features:
  - Model: R7FA4E110D2CFM
  - Package: 64-pin LQFP
  - Core: 100 MHz Arm Cortex-M33
  - SRAM: 128 KB on-chip
  - Code Flash Memory: 512 KB on-chip
  - Data Flash Memory: 8 KB on-chip
- RA6M3 Microcontroller Features:
  - Model: R7FA6M3AH3CFC
  - Package: 176-pin LQFP
  - Core: 120 MHz Arm Cortex-M4 with FPU
  - SRAM: 640 KB on-chip
  - Code Flash Memory: 2 MB on-chip
  - Data Flash Memory: 64 KB on-chip
- Connectivity: One USB […]

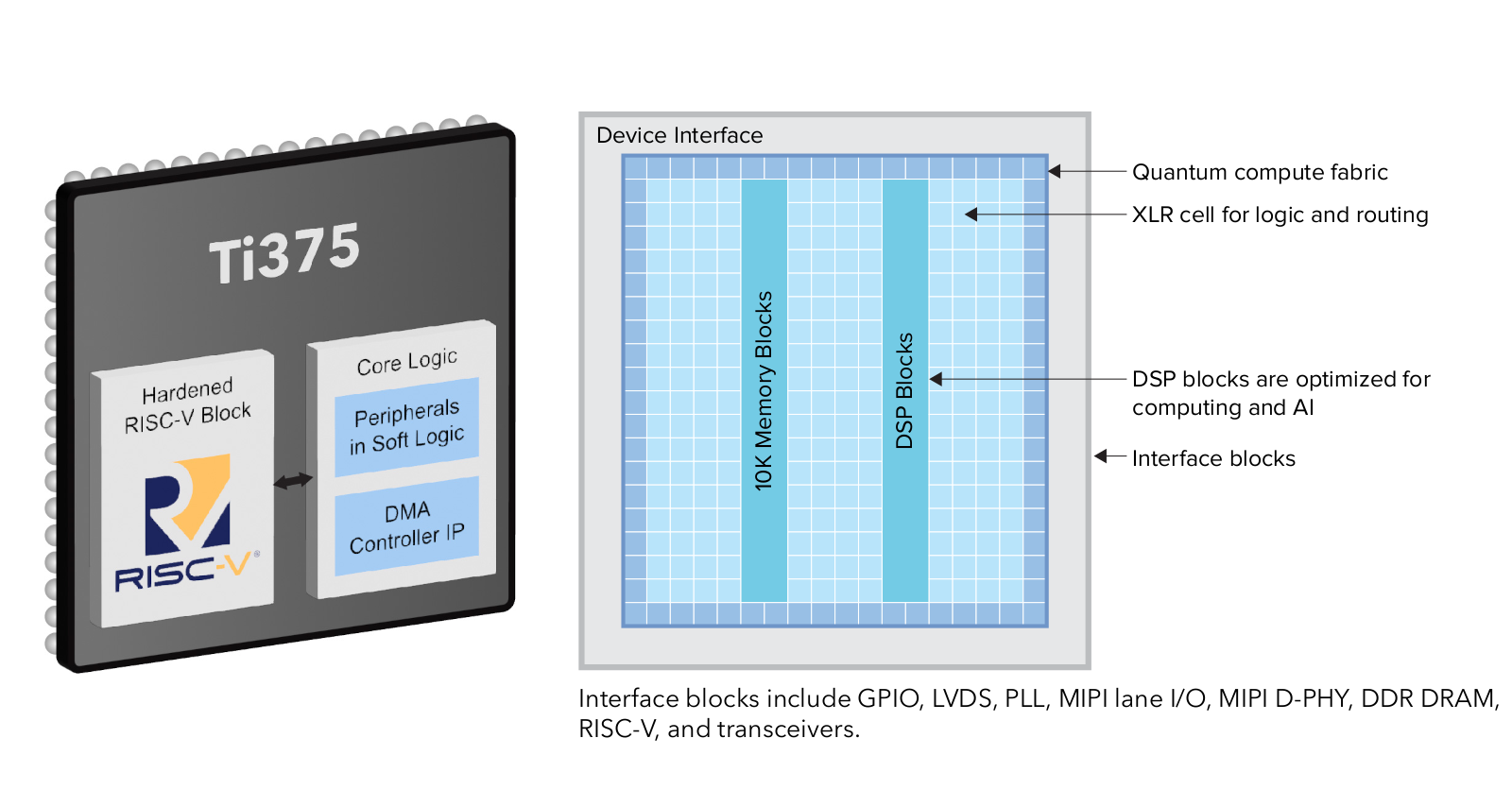

Efinix Titanium Ti375 FPGA offers quad-core hardened RISC-V block, PCIe Gen 4, 10GbE

Efinix Titanium Ti375 SoC combines high-density, low-power Quantum compute fabric with a quad-core hardened 32-bit RISC-V block and features an LPDDR4 DRAM controller, a MIPI D-PHY for displays or cameras, and 16 Gbps transceivers enabling PCIe Gen 4 and 10GbE interfaces. The Titanium Ti375 also comes with 370K logic elements, 2,688 10-Kbit SRAM blocks, and 27.53 Mbits embedded memory, as well as 1,344 DSP blocks optimized for computing and AI workloads, and XLR (eXchangeable Logic and Routing) cells for logic and routing.

Efinix Titanium Ti375 specifications:

- FPGA compute fabric
  - 370,137 logic elements (LEs)
  - 362,880 eXchangeable Logic and Routing (XLR) cells
  - 27.53 Mbits embedded memory
  - 2,688 10-Kbit SRAM blocks
  - 1,344 embedded DSP blocks for multiplication, addition, subtraction, accumulation, and up to 15-bit variable-right-shifting
- Memory – 10-kbit high-speed, embedded SRAM, configurable as single-port RAM, simple dual-port RAM, true dual-port RAM, or ROM
- FPGA interface blocks
  - 32-bit quad-core hardened RISC-V block […]
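
As a quick sanity check, the embedded memory figure in the Ti375 specifications appears to follow directly from the SRAM block count. The sketch below assumes that a "10-Kbit" block holds 10 × 1,024 bits and that "Mbits" means 10^6 bits; both conventions are assumptions on our part rather than something stated in the excerpt, and the function name is purely illustrative.

```python
def embedded_memory_mbits(sram_blocks: int, kbit_per_block: int) -> float:
    """Total embedded SRAM in Mbits.

    Assumptions (not from the source): 1 Kbit = 1,024 bits and
    1 Mbit = 1,000,000 bits.
    """
    total_bits = sram_blocks * kbit_per_block * 1024
    return total_bits / 1_000_000


# Figures quoted in the specifications: 2,688 blocks of 10 Kbit each.
ti375_mbits = embedded_memory_mbits(2688, 10)
print(f"{ti375_mbits:.2f} Mbits")
```

Under those assumptions, the result rounds to the 27.53 Mbits quoted for the device.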