When we first wrote about the Golioth IoT development platform with support for ESP32 and nRF9160 devices in 2022, we noted that it offered a free Dev Tier account for up to 50 devices, 10 MB of LightDB data with a 7-day retention policy, and other limitations. The company has now removed many of those limitations from the free developer tier, including the cap on the number of IoT devices, and added other benefits: unlimited device connections, enabling developers to scale projects without constraints; over-the-air (OTA) device firmware updates (DFU) with 1 GB of monthly bandwidth; 1,000,000 monthly log messages (up to 200 MB); and free data retention of 30 days for time series data and 14 days for logs. The main limitations compared to paid plans are that only one project can be created and only a single seat (single login) is available, so it’s not possible to have a team of users with different permissions like […]
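To put the free tier numbers in perspective, here is a back-of-the-envelope sketch in C. It assumes that 1 GB means 10^9 bytes, that every device downloads the full firmware image exactly once per update (no delta updates, retries, or resumed downloads), and that a month has 30 days. These are our assumptions, not Golioth’s billing rules, and both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 1 GB counted as 10^9 bytes; Golioth may count differently. */
#define OTA_MONTHLY_BYTES    1000000000ULL
#define LOG_MONTHLY_MESSAGES 1000000ULL
#define DAYS_PER_MONTH       30ULL /* assumption for the per-day average */

/* Hypothetical helper: number of complete firmware downloads that fit in
 * the monthly OTA allowance, assuming each update transfers the full
 * image exactly once per device. */
uint64_t max_monthly_ota_downloads(uint64_t image_bytes)
{
    assert(image_bytes > 0);
    return OTA_MONTHLY_BYTES / image_bytes;
}

/* Hypothetical helper: average number of log messages each device can send
 * per day while staying within the monthly log message allowance.
 * Integer division rounds down. */
uint64_t log_messages_per_device_per_day(uint64_t devices)
{
    assert(devices > 0);
    return LOG_MONTHLY_MESSAGES / (devices * DAYS_PER_MONTH);
}
```

For example, a 500,000-byte image allows 2,000 full downloads per month, enough to update a 2,000-device fleet once, while a 100-device deployment could average 333 log messages per device per day.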

Setting up a private LoRaWAN network with WisGate Connect gateway

In this guide, we’ll explain how to set up a private LoRaWAN network using RAKwireless’ Raspberry Pi CM4-based WisGate Connect gateway, and Docker or Portainer to install Node-RED, InfluxDB, Grafana, and other packages required to configure the gateway. The WisGate Connect is a versatile gateway with Gigabit and 2.5 Gbps Ethernet, plus several optional wireless connectivity options such as LoRaWAN, 4G LTE, 5G, WiFi 6, Zigbee, WiFi HaLow, and more that can be added through Mini PCIe or M.2 sockets, as well as expansion through WisBlock IO connectors and a 40-pin Raspberry Pi HAT connector. We’ll start by looking at the gateway features in detail, but if you already know all that, you can jump to the private LoRaWAN network configuration section. WisGate Connect unboxing, specifications, and teardown. RAKwireless sent us a model with a Raspberry Pi CM4 equipped with 4 GB of RAM and 32 GB of eMMC memory, GPS and […]
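In a Node-RED, InfluxDB, and Grafana stack like the one described above, decoded sensor readings end up as InfluxDB records, usually written in InfluxDB’s line protocol (`measurement,tags fields timestamp`, with the timestamp in nanoseconds by default). A Node-RED InfluxDB node would normally build these records for you. The C sketch below only illustrates the format. The measurement name `lorawan`, the `dev_eui` tag, the field names, and the function name are hypothetical, and the sketch does not escape special characters.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper: format one LoRaWAN sensor reading as an InfluxDB
 * line protocol record:
 *   lorawan,dev_eui=<id> temperature=<float>,humidity=<float> <ns timestamp>
 * Tag values must not contain spaces, commas, or '=' because this sketch
 * does no escaping.
 * Returns the number of characters written, or -1 if buf is too small. */
int format_line_protocol(char *buf, size_t len, const char *dev_eui,
                         double temperature_c, double humidity_pct,
                         long long timestamp_ns)
{
    assert(buf != NULL && dev_eui != NULL);
    int n = snprintf(buf, len,
                     "lorawan,dev_eui=%s temperature=%.2f,humidity=%.1f %lld",
                     dev_eui, temperature_c, humidity_pct, timestamp_ns);
    if (n < 0 || (size_t)n >= len)
        return -1; /* output was truncated or an encoding error occurred */
    return n;
}
```

For instance, a reading of 23.5 °C and 61 % humidity from device `70B3D57ED0001234` becomes `lorawan,dev_eui=70B3D57ED0001234 temperature=23.50,humidity=61.0 1700000000000000000`. This is the kind of record Grafana can then query from InfluxDB.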

Getting Started with LoRaWAN on SenseCAP K1100 sensor prototype kit (Part 1)

CNXSoft: This getting started guide/review of the SenseCAP K1100 sensor prototype kit is a translation of the original post on CNX Software Thai. The first part of this tutorial describes the kit and shows how to program it with Arduino to send sensor data to a LoRaWAN gateway and display it on the Wio Terminal, before processing the data in a private LoRaWAN network using open-source tools such as Grafana. The second part, to be published later, will demonstrate the AI capability of the kit. In the digital era, where IoT and big data are increasingly prevalent, large amounts of data need to be collected through sensors. To enable this digital transformation, Seeed Studio’s SenseCAP K1100 comes with all the necessary sensors and equipment, including the Wio Terminal, an AI Vision Sensor, and a LoRaWAN module. With this plug-and-play platform, makers can easily create DIY sensors for data collection and […]

Oracle Cloud “Always Free” services include Ampere A1 Arm Compute instances

Oracle added thirteen new “Always Free” services to Oracle Cloud Free Tier last June, including Ampere A1 Arm Compute, Autonomous JSON Database, NoSQL, APEX Application Development, Logging, Service Connector Hub, Application Performance Monitoring (APM), flexible load balancer, flexible network load balancer, VPN Connect V2, Oracle Security Zones, Oracle Security Advisor, and OCI Bastion. This means you can register an account for free (although a credit or debit card is required for a $1 hold that is released after a few days) and use up to four Arm-based Ampere A1 cores with 24 GB of RAM for free, forever. Oracle Always Free services include: Infrastructure: 2x AMD-based Compute VMs with 1/8 OCPU and 1 GB of memory each; 4x Arm-based Ampere A1 cores and 24 GB of memory usable as one VM or up to 4 VMs. Note: 1x OCPU on x86 CPU architecture (AMD and Intel) = 2x vCPUs; […]
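The Ampere A1 allowance is a shared budget of 4 OCPUs and 24 GB of memory that can be split across up to 4 VMs. The small C sketch below checks whether a planned set of VMs stays within it, and converts OCPUs to vCPUs using the x86 ratio given above. The type and function names are hypothetical. The minimum of 1 OCPU and 1 GB per VM is our assumption. The 1:1 OCPU-to-vCPU ratio for Arm is our reading of Oracle’s documentation, since the excerpt is cut off before it, so please verify it there.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Always Free Ampere A1 allowance as described above. */
#define A1_MAX_OCPUS  4
#define A1_MAX_MEM_GB 24
#define A1_MAX_VMS    4

/* Hypothetical VM description used only for this sketch (whole OCPUs). */
typedef struct {
    int ocpus;
    int mem_gb;
} a1_vm_t;

/* Returns true if the proposed A1 VMs fit within the Always Free limits. */
bool fits_always_free_a1(const a1_vm_t *vms, size_t count)
{
    assert(count == 0 || vms != NULL);
    if (count > A1_MAX_VMS)
        return false;
    int ocpus = 0, mem = 0;
    for (size_t i = 0; i < count; i++) {
        /* Assumption: each VM needs at least 1 OCPU and 1 GB of memory. */
        if (vms[i].ocpus < 1 || vms[i].mem_gb < 1)
            return false;
        ocpus += vms[i].ocpus;
        mem += vms[i].mem_gb;
    }
    return ocpus <= A1_MAX_OCPUS && mem <= A1_MAX_MEM_GB;
}

/* x86 (AMD/Intel): 1 OCPU = 2 vCPUs, per the note above.
 * Arm (Ampere A1): 1 OCPU = 1 vCPU, an assumption to check against Oracle's docs. */
int vcpus_from_ocpus(int ocpus, bool is_x86)
{
    return is_x86 ? ocpus * 2 : ocpus;
}
```

For example, a single 4-OCPU/24 GB VM and four 1-OCPU/6 GB VMs both fit, while a 2-OCPU VM plus a 3-OCPU VM exceeds the 4-OCPU budget.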

Linux Capable Smartphones Database Launched with over 200 Models

Recently, several companies have been working on smartphones that ship with Linux, with the Purism Librem 5 and Pine64 PinePhone being the most popular. But it has also been possible to install Linux on various other (Android) phones thanks to projects like PostMarketOS or UBports’ Ubuntu Touch. To make it easier to find out whether your phone is supported, the guy(s) at Tuxphones have created a database of phones that can run Linux. There are currently over 200 devices listed. The database is not updated manually; instead, scripts scrape the websites and wikis of open-source projects such as PostMarketOS, UBports, Asteroid OS, or Maemo Leste. Some Linux operating systems such as Tizen or Sailfish OS are not included because they are neither fully open-source nor independent. The use of scripts means the database contains not only phones, but also some SBCs, smartwatches, and TV boxes. If you […]

GearBest Database Was Left Unsecured For 2 Weeks

GearBest is one of the most popular Chinese online stores, and we often feature products sold by the company on the website. However, the vpnMentor research team, headed by Noam Rotem, a white hat hacker and activist, discovered a serious security breach at GearBest, where the company’s database was left completely unsecured for a period of time. Specifically, the research team was able to access the following databases in March 2019: an orders database with products purchased, shipping address and postcode, customer name, email address, and phone number; a payments and invoices database with order number, payment type, payment information, email address, name, and IP address; and a members database with name, address, date of birth, phone number, (unencrypted) email address, IP address, national ID and passport information, and (unencrypted) account password. They found over 1.5 million records in total, and successfully logged in to two accounts from the breached database for testing. Payment information included data related to Boleta (used in […]

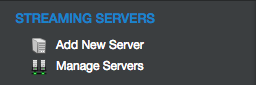

Xtream Codes IPTV Panel 2.4.2 Review – Part 4: Tutorial to Change the Main Server, Backup & Restore the Database

This is the fourth part of a review of Xtream Codes IPTV Panel, software that lets you become your own content provider and manage streams, clients, and resellers. The first three parts: Review of Xtream-Codes IPTV Panel Professional Edition – Part 1: Introduction, Initial Setup, Adding Streams…; Xtream Codes IPTV Panel Review – Part 2: Movie Data Editing, Security, Resellers, Users and Pricing Management; Xtream-Codes IPTV Panel Review – Part 3: Updates and New Features for Version 2.4.2. Main Server Change – Part 1: New Server. Changing your Main Server can cause trouble if you do not know what you are doing. There are many reasons to change the Main Server, such as crashes, moving to a new server, or turning a Load Balancer into the Main Server… Remember, it’s all about the existing backup, and you’ll restore your backup later, after successfully changing the Main Server. That is not difficult and everybody can do […]

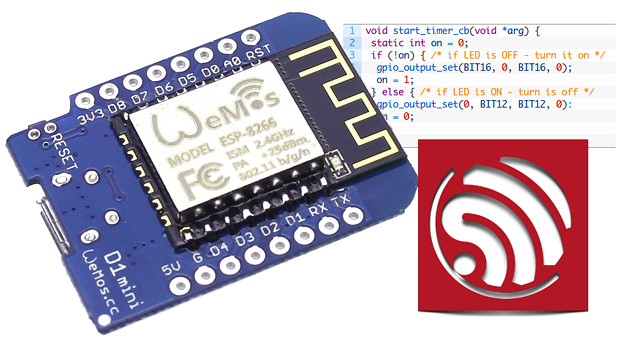

How to Write ESP8266 Firmware from Scratch (using ESP Bare Metal SDK and C Language)

CNXSoft: This is a guest post by Alexander Alashkin, software engineer at Cesanta, working on Mongoose Embedded Web Server. Espressif’s ESP8266 has had quite an evolution. Some may even call it controversial. It all started with the ESP8266 as a WiFi module with a basic UART interface. But later it became clear that it’s powerful enough for embedded systems. It’s essentially a module that can be used for running full-fledged applications. Espressif realized this as well and released an SDK. As first versions go, it was full of bugs, but it has since become significantly better. Another SDK was later released with FreeRTOS ported to the ESP8266. Here, I want to talk about the non-OS version. Of course, there are third-party firmware options that support scripting languages to simplify development (just Google for these), but the ESP8266 is still a microchip (emphasis on MICRO), and using a scripting language might be overkill. So what we […]