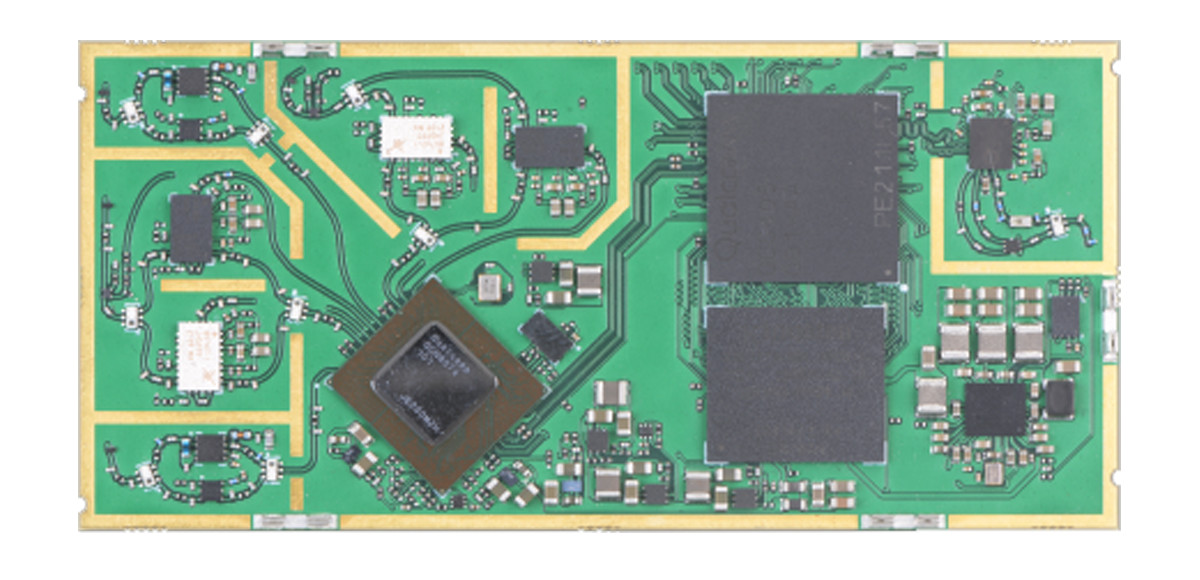

8devices has recently introduced TobuFi, a Qualcomm QCS405-powered System-on-Module (SoM) featuring dual-band Wi-Fi 6 and Wi-Fi 5 capabilities. The module also comes with 1GB LPDDR3, 8GB of eMMC storage, and support for multiple display resolutions. Its interfaces include USB 3.0, HDMI, I2S, DMIC, SDC, UART, SPI, I2C, and GPIO.

In previous posts, we covered 8devices product launches such as the Noni M.2 WiFi 7 module, Rambutan and Rambutan-I modules, Habanero IPQ4019 SoM, Mango-DVK OpenWrt Devkit, and many more. If you’re interested in 8devices, feel free to check those out for more details.

8devices TobuFi SoM specifications:

- SoC – Qualcomm QCS405
  - CPU – Quad-core Arm Cortex-A53 at 1.4GHz; 64-bit
  - GPU – Qualcomm Adreno 306 GPU at 600MHz; supports 64-bit addressing
  - DSP – Qualcomm Hexagon QDSP6 v66 with Low Power Island and Voice accelerators
- Memory – 1GB LPDDR3 + 8GB eMMC
- Storage
  - 8GB eMMC flash
  - SD card – One 8-bit (SDC1, 1.8V) and one […]

DIY ESP32 drone costs about $12 to make

The team at Circuit Digest has designed a low-cost DIY drone controlled by an ESP32 module. It is based on a custom PCB and off-the-shelf parts, and costs around 1000 Rupees to make, or about $12 at today’s exchange rate. The DIY ESP32 drone was designed as a low-cost alternative to more expensive DIY drones that typically cost close to $70. The result is a drone that fits in the palm of the hand and is controlled over WiFi using a smartphone. Interestingly, it does not include any 3D printed parts, as the PCB forms the chassis of the device.

DIY ESP32 drone key features and components:

- Wireless module – ESP32-WROOM-32 for WiFi control using a smartphone
- Storage – MicroSD card slot
- Sensors – MPU6050 IMU for stability control
- Propulsion
  - 4x 720 coreless motors
  - 2x 55mm propeller type A (CW)
  - 2x 55mm propeller type B (CCW)
- USB – 1x USB-C port for charging and programming (via CP2102N)
- Power […]

This new X-Fly drone mimics a bird’s flight (Crowdfunding)

Aeronautical engineer Edwin Van Ruymbeke has introduced X-Fly, a drone that emulates the flight pattern of a bird. The drone communicates via Bluetooth using the STM32WB15CC microcontroller, has a range of 100 meters, and can fly for 8-12 minutes with a swappable battery system. The company says it collaborated with the French military to develop the flapping-wing mechanism, which incorporates gyroscopes and g-sensors to ensure stable flight. The drone can be controlled with a smartphone app or an attachable optical joystick. It is also durable against crashes, has a quick-swap battery system, and features improved wing mechanics for longer flights.

We’ve previously covered drones such as the Qualcomm Flight RB5-based drone and the Kudrone Nano Drone, as well as drone kits like Qualcomm Flight Pro. Feel free to explore these if you’re interested.

Key features and specifications of the X-Fly drone:

- Control Board – The PCB features an STM32WB15CC Bluetooth microcontroller, motor […]

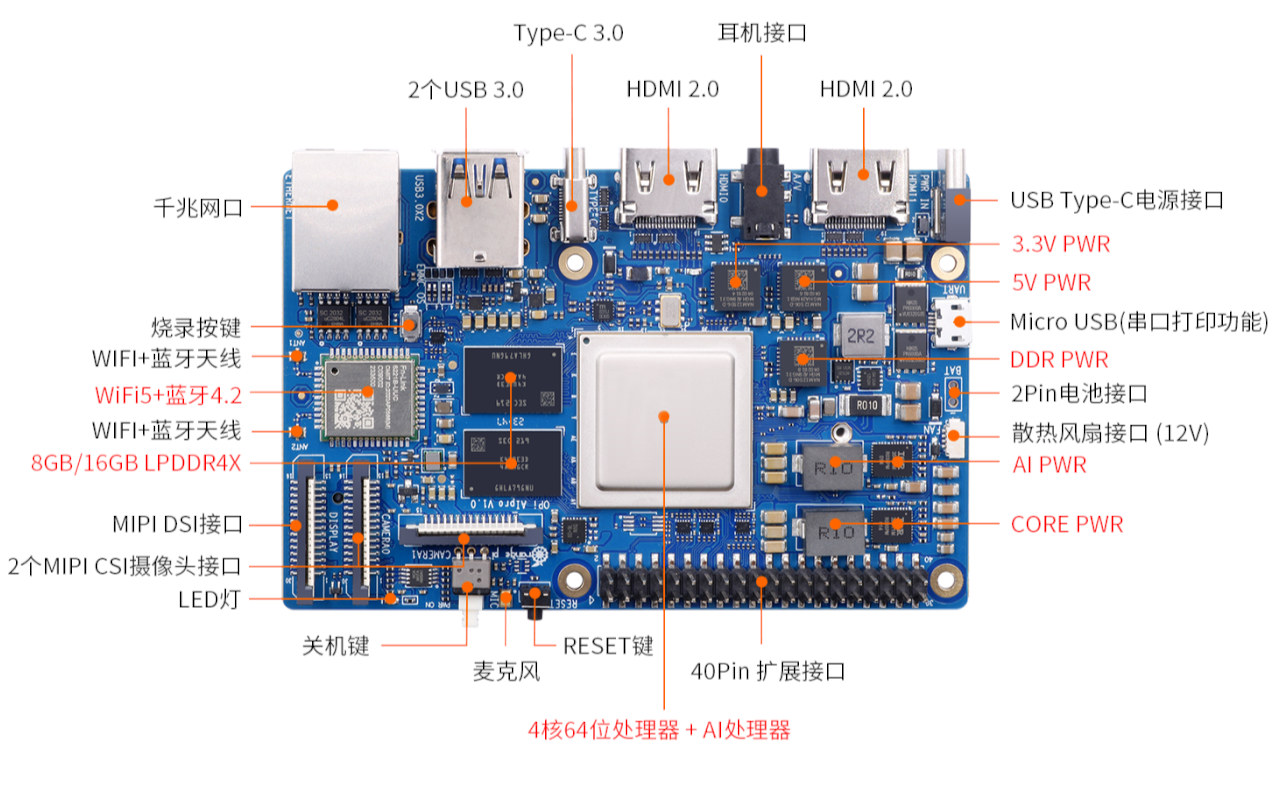

Orange Pi AIPro SBC features a 20 TOPS Huawei Ascend AI SoC

Orange Pi AIPro is a new single board computer for AI applications that features a new (and unnamed) Huawei Ascend AI quad-core 64-bit processor delivering up to 20 TOPS (INT8) or 8 TOPS (FP16) of AI inference performance. The SBC comes with up to 16GB LPDDR4X and a 512Mbit SPI flash, and also supports other storage options such as a microSD card, an eMMC flash module, and/or an M.2 NVMe or SATA SSD. For video output, the board features two HDMI 2.0 ports, one MIPI DSI connector, and an AV port. It also offers two MIPI CSI camera interfaces, Gigabit Ethernet and WiFi 5 connectivity, a few USB ports, and a 40-pin GPIO header for expansion.

Orange Pi AIPro specifications:

- SoC – Huawei Ascend quad-core 64-bit (I’d assume RISC-V) processor delivering up to 20 TOPS (INT8) or 8 TOPS (FP16) AI performance and equipped with an unnamed 3D GPU
- System Memory – 8GB […]

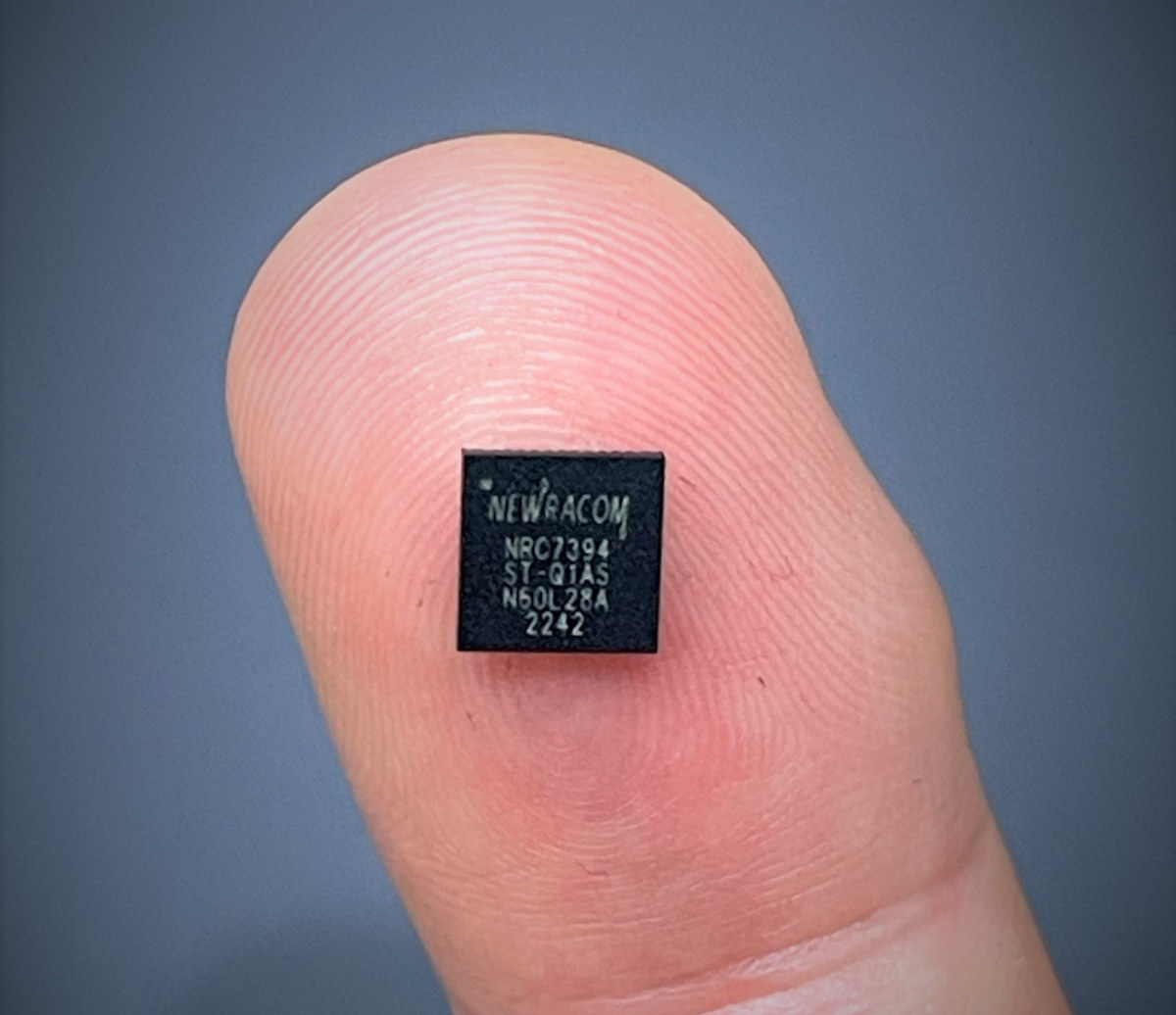

NEWRACOM NRC7394 WiFi HaLow SoC delivers higher power efficiency and cost-effectiveness

NEWRACOM has just introduced the NRC7394 Wi-Fi HaLow Arm Cortex-M3 SoC, which offers higher power efficiency and lower cost than the previous generation NRC7292 Cortex-M3/M0 HaLow SoC and comes in a 6x6mm package.

I first wrote about the 802.11ah standard in 2014. Also known as WiFi HaLow (its consumer name), it operates in the 900 MHz band and offers a longer range and lower power consumption for items like IP cameras. The first products came to market in 2021. In my 2021 round-up, I expected a flood of new WiFi HaLow devices in 2022, but that prediction was not exactly prescient, as it never happened. Maybe the new NRC7394 SoC will help make WiFi HaLow devices more popular by lowering costs and further improving battery life.

NEWRACOM NRC7394 key features:

- CPU – Arm Cortex-M3 core @ 32 MHz for IEEE 802.11ah WLAN and application
- Connectivity
  - Full […]

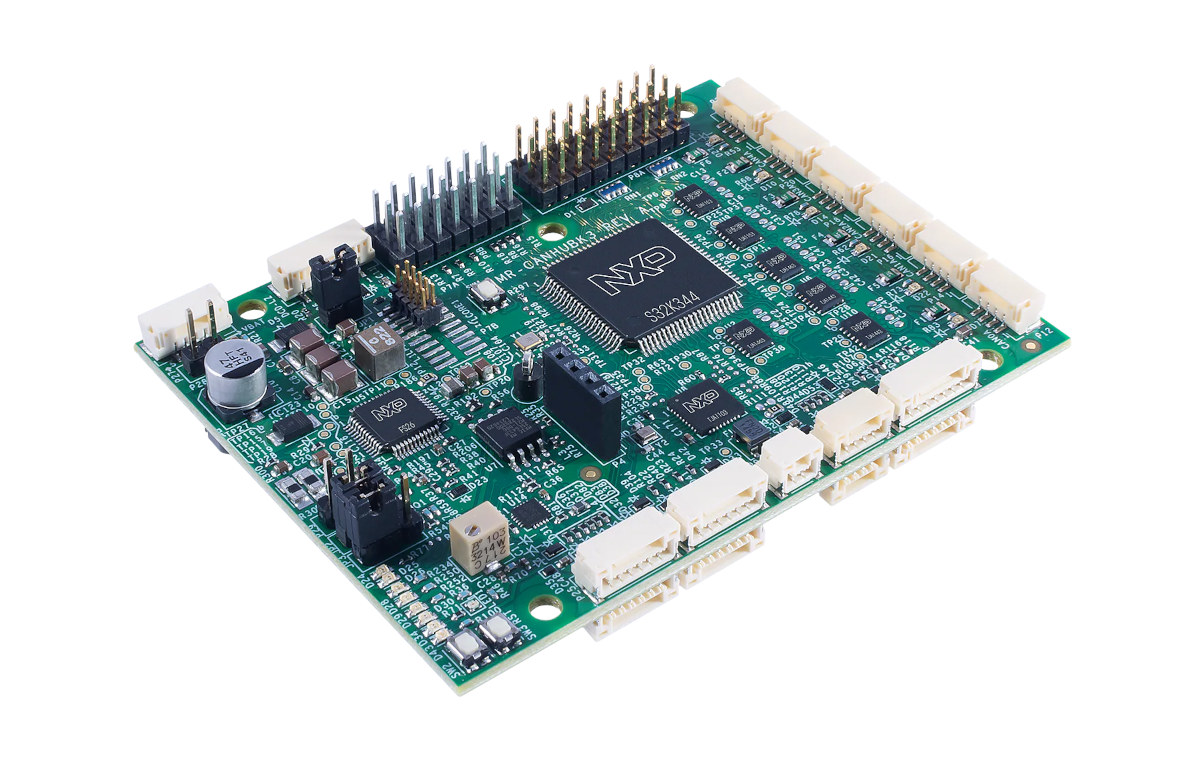

NXP S32K344 evaluation board for mobile robots offers one 100BASE-T1, six CAN-FD interfaces

MR-CANHUBK344 is an evaluation board based on the NXP S32K344 Arm Cortex-M7 automotive general-purpose microcontroller. It is designed for mobile robotics applications such as autonomous mobile robots (AMR) and automated guided vehicles (AGV), and offers a 100BASE-T1 Ethernet interface and six CAN-FD ports. The six CAN bus connectors come in three pairs of CAN-FD, CAN-SIC (signal improvement), and CAN-SCP (secure) interfaces using NXP chips. The board can notably be used for tunneling CAN over Ethernet using IEEE 1722. It is also equipped with an SE050 Secure element with NFC for authentication, and various general-purpose peripheral interfaces via DroneCode standard JST-GH connectors.

MR-CANHUBK344 evaluation board specifications:

- MCU – NXP S32K344 lockstep Cortex-M7 microcontroller @ up to 160 MHz with 4MB flash, 512KB SRAM, 6x CAN bus interfaces, up to 218 I/Os, AEC-Q100 compliant
- Ethernet – ASIL-B compliant 100BASE-T1 Ethernet PHY (TJA1103)
- 6x CAN-FD interfaces with
  - 2x CAN Bus with flexible data rate through TJA144x automotive […]

AAEON BOXER-8224AI – An NVIDIA Jetson Nano AI Edge embedded system for drones

AAEON BOXER-8224AI is a thin and lightweight AI edge embedded system based on the NVIDIA Jetson Nano system-on-module and designed for drones or other space-constrained applications such as robotics. AAEON BOXER products are usually Embedded Box PCs with an enclosure, but the BOXER-8224AI is quite different: it is a compact, 22mm thin board with MIPI CSI interfaces designed to add computer vision capability to unmanned aerial vehicles (UAV), as well as several wafer connectors for dual GbE, USB, and other I/Os.

BOXER-8224AI specifications:

- AI Accelerator – NVIDIA Jetson Nano
- CPU – Arm Cortex-A57 quad-core processor
- System Memory – 4GB LPDDR4
- Storage Device – 16GB eMMC 5.1 flash
- Dimensions – 70 x 45 mm
- Storage – microSD slot
- Display Interface – 1x Mini HDMI 2.0 port
- Camera interface – 2x MIPI CSI connectors
- Networking
  - 2x Gigabit Ethernet via wafer connector (1x NVIDIA, 1x Intel i210)
  - Optional WiFi, Bluetooth, and/or cellular connectivity […]

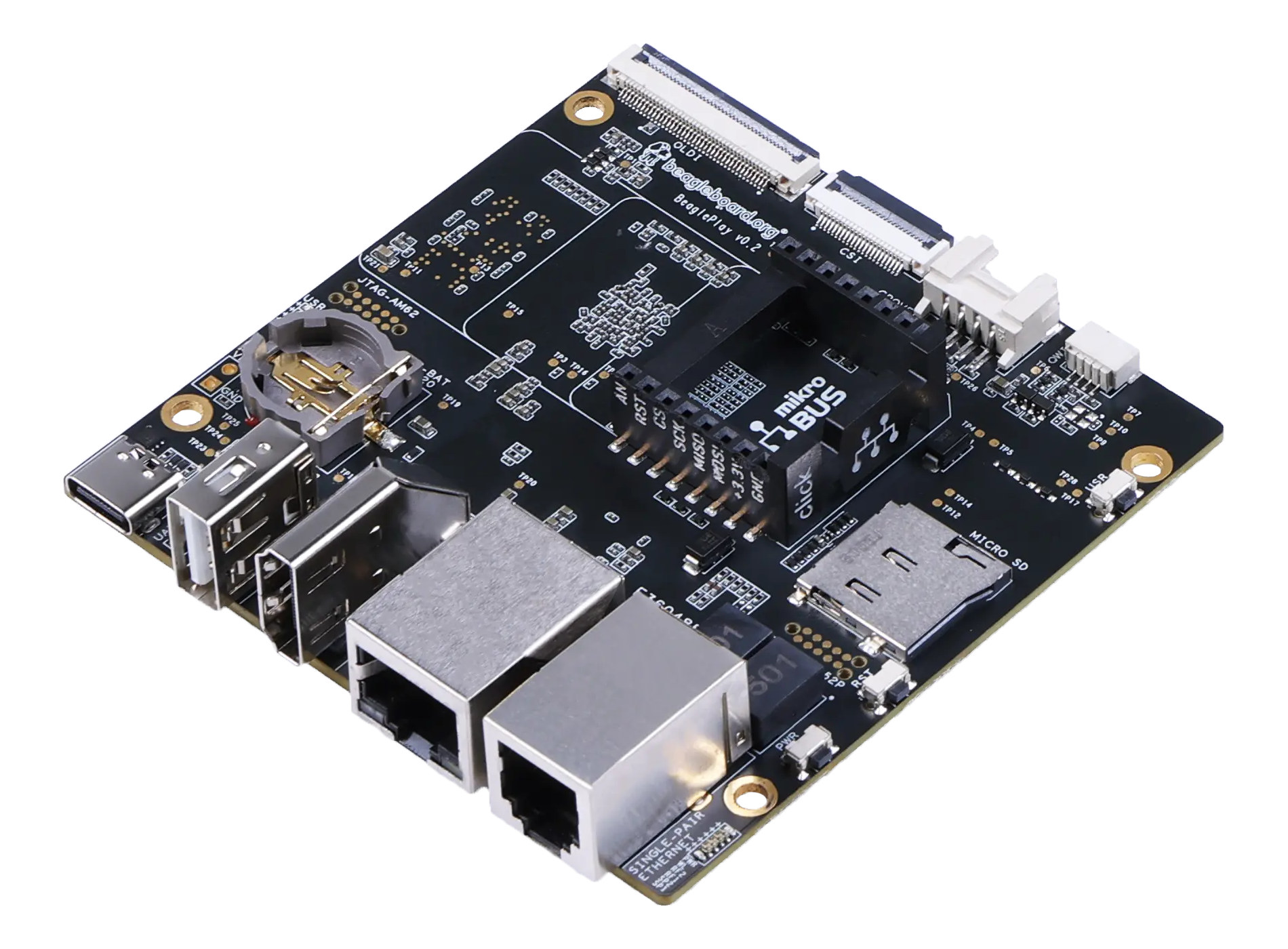

BeaglePlay – A $99 Texas Instruments AM625 industrial SBC with plenty of communication and expansion options

The BeagleBoard.org Foundation has just launched its latest single board computer, the BeaglePlay SBC, powered by a Texas Instruments AM625 Cortex-A53/M4/R5 processor with 16GB eMMC flash, 2GB DDR4, a wide range of I/Os, wired and wireless communication options, and support for expansion modules through mikroBUS, Grove, and Qwiic connectors. Two wired Ethernet interfaces are offered: a typical Gigabit Ethernet RJ45 port and a single-pair Ethernet RJ11 port limited to 10 Mbps but with a much longer range and power over data. Wireless connectivity includes dual-band WiFi 4, Bluetooth LE, and Sub-GHz. The board also features HDMI and MIPI DSI display interfaces and a MIPI CSI camera interface.

BeaglePlay specifications:

- SoC – Texas Instruments Sitara AM625 (AM6254) with
  - Quad-core 64-bit Arm Cortex-A53 processor @ 1.4 GHz
  - Arm Cortex-M4F at up to 400 MHz
  - Arm Cortex-R5F
  - PowerVR Rogue 3D GPU supporting up to 2048×1080 @ 60fps, […]