Virtual reality headsets used to require a connection to a computer for optimal gaming performance, and several years ago, most standalone VR headsets were only good for playing some videos. But technology has evolved, and with the launch of the Snapdragon XR2-powered Oculus Quest 2 standalone VR headset, available for pre-order for $299, Facebook has decided to completely leave the PC-only VR headset business.

Oculus Quest 2 specifications:

- SoC – Qualcomm Snapdragon XR2 platform with AI acceleration capability similar to Snapdragon 865
- System Memory – 6GB RAM
- Storage – 64GB or 256GB flash
- Display – Dual 1832 x 1920 pixel (per eye) display @ 90 Hz; IPD adjustment – 58mm, 63mm or 68mm
- Audio – Integrated speakers and microphone, 3.5 mm audio jack
- Connectivity – WiFi, Bluetooth (but no audio support due to latency)
- Tracking Technology – Oculus Insight inside-out 6-DoF tracking with motion controllers
- USB – USB-C cable for charging […]

Virtual Desktop and Oculus Quest Tips & Tricks

Hey, Karl here. I wanted to share some experimenting I did with the Oculus Quest we purchased as a family gift for Christmas. One of the features I was looking forward to was wirelessly streaming VR games from my PC. It is not officially supported by Oculus, but Virtual Desktop allows you to do this. Virtual Desktop is an app that can be purchased through the Oculus store. Unfortunately, there are a couple of steps that need to be taken to stream VR to the headset. Oculus forced VD to remove the emulated VR feature from its official store version. Once it is purchased, you can then sideload the emulated VR version through Side Quest. Side Quest is a simple tool that makes sideloading apps easy and has a bunch of demos and games that aren’t in the Oculus store. The instructions are easy to follow on the Side Quest […]

Facebook Oculus Quest Standalone VR Headset Launched for $399 and up

One year ago, Facebook introduced their very first standalone virtual reality headset with Oculus Go. At $199, the price was fairly attractive, but the headset only supported 3DOF motion tracking. The company announced a new model at F8 2019. Oculus Quest is equipped with a more powerful Snapdragon 835 processor, two 1600 x 1440 displays, and support for 6DOF (degrees of freedom) virtual reality thanks to two handheld motion controllers.

Oculus Quest specifications:

- SoC – Qualcomm Snapdragon 835 octa-core processor with 4x high performance Kryo 280 cores @ 2.20 GHz / 2.30 GHz (single core operation), 4x low power Kryo 280 cores @ 1.9 GHz, Adreno 540 GPU
- System Memory – 4GB RAM
- Storage – 64GB or 128GB flash storage
- Display – 2x 1600×1440 OLED displays up to 72 Hz
- Camera – 4x wide-angle tracking cameras for inside-out position tracking
- Audio – Integrated speakers, 2x 3.5mm audio jacks
- Battery – Lithium-Ion […]

FOSDEM 2019 Open Source Developers Meeting Schedule

FOSDEM – which stands for Free and Open Source Software Developers’ European Meeting – is a free-to-participate event where developers meet on the first weekend of February to discuss open source software & hardware projects. FOSDEM 2019 will take place on February 2 & 3, and the schedule has already been published, with 671 speakers scheduled to speak in 711 events, themselves sorted into 62 tracks. Like every year, I’ll create a virtual schedule based on some of the sessions most relevant to this blog, in tracks such as open hardware, open media, RISC-V, and hardware enablement.

February 2

10:30 – 10:55 – VkRunner: a Vulkan shader test tool by Neil Roberts

A presentation of VkRunner, which is a tool to help test the compiler in your Vulkan driver using simple high-level scripts. Perhaps the largest part of developing a modern graphics driver revolves around getting the compiler to […]

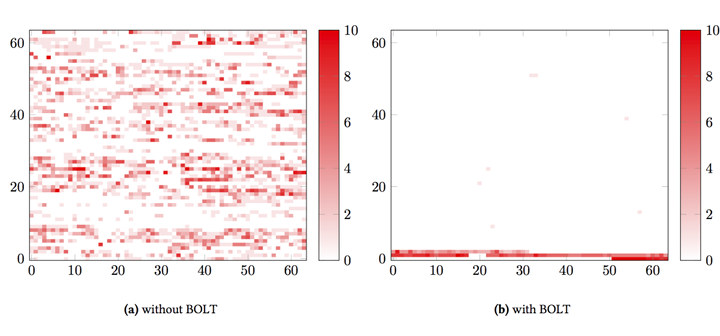

Facebook BOLT Speeds Up Large x86 & ARM64 Binaries by up to 15%

Compilers like GCC or LLVM normally do a good job of optimizing your code when turning source code into assembly and then binary format, but there’s still room for improvement, at least for larger binaries, and Facebook has just released BOLT (Binary Optimization and Layout Tool), which has been found to reduce CPU execution time by 2 percent to 15 percent. The tool is mostly useful for binaries built from a large code base, with a binary size over 10MB, which are often too large to fit in the instruction cache. The hardware normally spends lots of processing time getting an instruction stream from memory to the CPU, sometimes up to 30% of execution time, and BOLT optimizes the placement of instructions in memory – as illustrated below – to address this issue, also known as “instruction starvation”. BOLT works with applications built by any compiler, including the […]

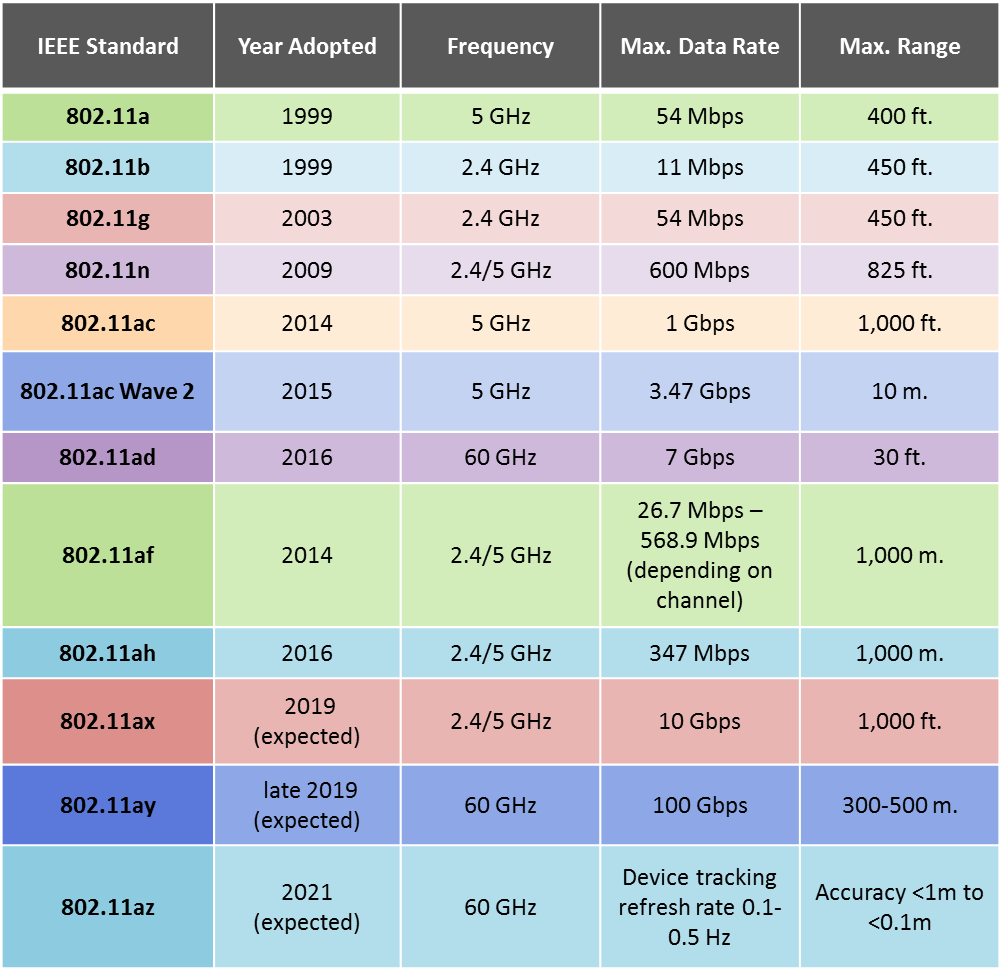

Qualcomm QCA64x8 and QCA64x1 802.11ay WiFi Chipsets Deliver 10 Gbps Bandwidth

WiFi has evolved in recent years with the introduction of 802.11ad and 802.11ax (now called WiFi 6). The latter is now official, and in the last year several 802.11ax chipsets and WiFi 6 routers have been announced, but I’ve not heard much about 802.11ad, which claimed up to 7Gbps bandwidth at 60 GHz when unveiled in 2016. 802.11ad has since been supplanted by 802.11ay, with Qualcomm having just unveiled QCA64x8 and QCA64x1 802.11ay chipsets capable of delivering 10Gbps and operating at a frequency of 60 GHz. According to Wikipedia, 802.11ay is not really a new standard, but just an evolution of 802.11ad adding four times the bandwidth and up to 4 MIMO streams. Qualcomm chipsets will enable 10+ Gbps speeds with wire-equivalent latency while keeping power consumption low, and bring the ability to play 4K UltraHD videos over WiFi, virtual / augmented reality games, fixed wireless mesh backhaul, […]

Facebook Oculus Go Standalone VR Headset Launched for $199 and Up

Oculus has developed virtual reality headsets for several years, starting with VR development kits, before launching consumer VR headsets working with either a powerful computer (Oculus Rift) or a smartphone (Oculus Gear VR). But the company – now part of Facebook – has more recently been working on an all-in-one virtual reality headset called Oculus Go that works without external hardware, and launched it today starting at $199.00.

Oculus Go specifications:

- SoC – Qualcomm Snapdragon 821 octa-core Mobile VR Platform
- System Memory –
- Storage – 32 or 64GB storage
- Display – 5.5″ display with 2560 x 1440 resolution; 538ppi; up to 72 Hz refresh rate; Fresnel lenses
- Audio – Built-in spatial audio and integrated microphone
- Battery – Lithium-Ion battery good for about two hours of gaming, and up to 2.5 hours of streaming media and video
- Dimensions – 190mm x 105mm x 115mm
- Weight – 468 grams

The company explains […]

Oculus Rift Virtual Reality Development Kit 2 Becomes Open Source Hardware

The Oculus Rift DK2 virtual reality headset and development kit started to ship in summer 2014. The DK2 is the kind of VR headset that connects to a more powerful computer via USB and HDMI, and includes hardware for positional tracking, a 5″ display, and two lenses for each eye. Since then, the company has been purchased by Facebook, and they’ve now decided to make the headset fully open source hardware. The release includes schematics, board layout, mechanical CAD, artwork, and specifications under a Creative Commons Attribution 4.0 license, as well as firmware under “BSD+PATENT” licenses, all of which you’ll find on GitHub.

The release is divided into four main folders:

- Documentation with high-level specifications for the DK2 headset, sensor, and firmware.
- Cable with schematics and high-level specifications for the cables. Custom assembly that would be hard to recreate from source. Allegedly the most complex part of the design
- Sensor with […]