Raspberry Pi 4 SBC was released at the end of June with a new Broadcom BCM2711B SoC that includes a VideoCore 6 (VC6) GPU for 2D and 3D graphics, which could also be used for general-purpose GPU computing (GPGPU). In the past, we’ve seen companies such as Idein leverage the VideoCore 4 GPGPU capabilities of Raspberry Pi 3 / Zero to accelerate image recognition, and they released a Python library (py-videocore) for that purpose. The problem is that the VideoCore 6 GPU found in RPi 4 is quite different from the VideoCore 4 GPU in earlier versions of the Raspberry Pi Foundation board, as forum member phiren explains: I’ve been looking through the open source drivers and here are some of my observations: vc6 is clearly derived from vc4, but it is significantly different. vc6 is only a slight extension over vc5. The QPU pipeline stays mostly the same, you […]

Actcast Combines IFTTT-like Service with AI and Raspberry Pi 3 / Zero

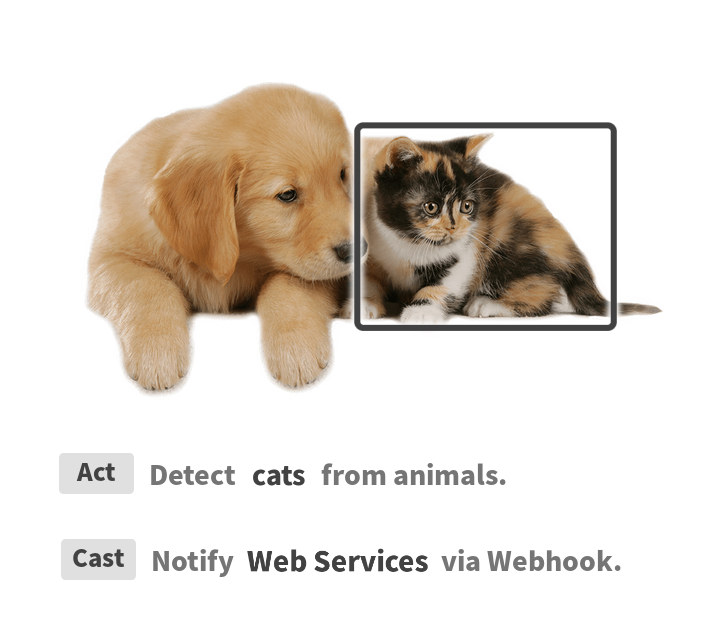

As reported on April 30, 2017, Idein had developed GPGPU-accelerated object recognition for the Raspberry Pi platform. That development led to the beta release of the Actcast IoT platform, announced on July 29, 2019, which uses deep learning algorithms for object and subject recognition. The platform is intended for IoT and AI applications, and the idea is to increase performance and link the system to the web for even more solutions. What it does: Using physical-world information in IoT projects has many applications. For example, a doorbell that sees a person can then recognize that person and let the user know, over the web through a smartphone, that the person should be let in. The system then unlocks the door. So Actcast is a bit like IFTTT with artificial intelligence / computer vision capabilities. Edge computing: Bringing the source of data closer to the […]

How to Get Started with OpenCL on ODROID-XU4 Board (with Arm Mali-T628MP6 GPU)

Last week, I reviewed Ubuntu 18.04 on the ODROID-XU4 board, testing most of the advertised features. However, I skipped one of the features listed in the Changelog: GPU hardware acceleration via OpenGL ES 3.1 and OpenCL 1.2 drivers for Mali T628MP6 GPU. While I tested OpenGL ES with tools like glmark2-es2 and es2gears, as well as WebGL demos in Chromium, I did not test OpenCL, since I’m not that familiar with it, except that it’s used for GPGPU (General Purpose GPU) computing to accelerate tasks like image/audio processing. That was a good excuse to learn a bit more, try it out on the board, and write a short guide to get started with OpenCL on hardware with an Arm Mali GPU. The purpose of this tutorial is to show how to run an OpenCL sample and an OpenCL utility, and I won’t go into the nitty-gritty of OpenCL code. If you want to learn more […]

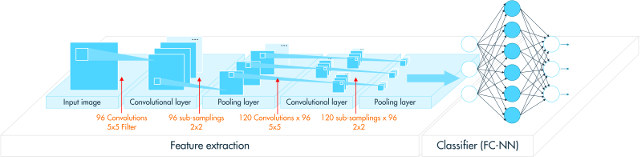

Getting Started with OpenCV for Tegra on NVIDIA Tegra K1, CPU vs GPU Computer Vision Comparison

This is a guest post by Leonardo Graboski Veiga, Field Application Engineer, Toradex Brasil. Introduction: Computer vision (CV) is everywhere – from cars to surveillance and production lines – and the need for efficient, low-power yet powerful embedded systems is nowadays one of the bleeding-edge scenarios of technology development. Since CV is very computationally intensive, running computer vision algorithms on an embedded system’s CPU might not be enough for some applications. Developers and scientists have noticed that the use of dedicated hardware, such as co-processors and GPUs – the latter traditionally employed for graphics rendering – can greatly improve the performance of CV algorithms. In the embedded scenario, things usually are not as simple as they look. Embedded GPUs tend to be different from desktop GPUs, thus requiring many workarounds to get extra performance from them. A good example of a drawback of embedded GPUs is that they are […]

Imagination PowerVR “Furian” Series8XT GT8525 GPU Targets High-end Smartphones, Virtual Reality and Automotive Products

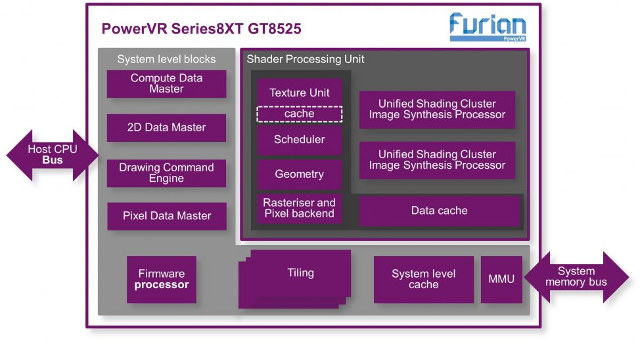

Imagination Technologies has unveiled its first GPU based on the PowerVR Furian architecture: the Series8XT GT8525 GPU, equipped with two clusters and designed for SoCs going into products such as high-end smartphones and tablets, mid-range dedicated VR and AR devices, and mid- to high-end automotive infotainment and ADAS systems. The Furian architecture is said to allow for improvements in performance density, GPU efficiency, and system efficiency, features a new 32-wide ALU cluster design, and can be manufactured using sub-14nm processes (e.g. 7nm once available). PowerVR GT8525 GPU supports compute APIs such as OpenCL 2.0, Vulkan 1.0 and OpenVX 1.1. Compared to the previous Series7XT GPU family, Series8XT GT8525 GPU delivers 80% higher fps in Trex benchmark, an extra 50% fps in GFXbench Manhattan benchmark, 50% higher fps in Antutu, doubles the fillrate throughput for GUI, and increases GFLOPs for compute applications by over 50%. GT8525 GPU is available for licensing […]

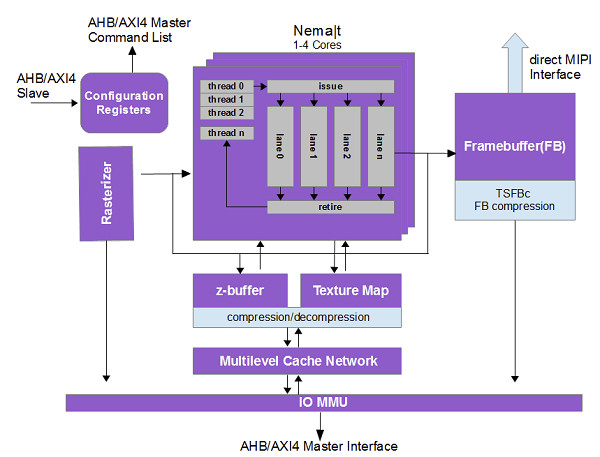

Think Silicon Ultra Low Power NEMA GPUs are Designed for Wearables and IoT Applications

When you have to purchase a wearable device, let’s say a smartwatch or fitness tracker, you have to make trade-offs between user interface and battery life. For example, a fitness tracker such as Xiaomi Mi Band 2 will last about 2 weeks per charge with a limited display, while Android smartwatches with a much better interface need to be recharged every 1 or 2 days. Think Silicon aims to improve the battery life of devices with nicer user interfaces thanks to their ultra-low power NEMA 2D, 3D, and GP GPUs that can be integrated into SoCs with ARM Cortex-M and Cortex-A cores. The company has three families of GPUs:

- NEMA|p pico 2D GPU with one core
  - 4bpp framebuffer, 6bpp texture with/out alpha
  - Fill Rate – 1 pixel/cycle
  - Silicon Area – 0.07 mm² with 28nm process
  - Power Consumption – leakage power GPU consumption of 0.06 mW; with compression (TSFSc): 0.03 mW
- NEMA|t […]

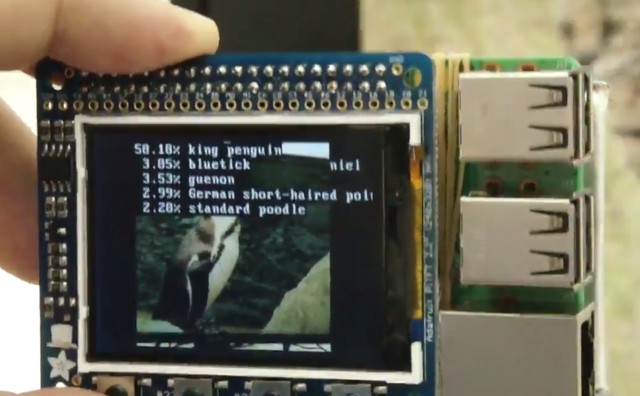

GPU Accelerated Object Recognition on Raspberry Pi 3 & Raspberry Pi Zero

You’ve probably already seen one or more object recognition demos, where a system equipped with a camera detects the type of object using deep learning algorithms either locally or in the cloud. It’s used, for example, in autonomous cars to detect pedestrians, pets, other cars and so on. Kochi Nakamura and his team have developed software based on the GoogLeNet deep neural network with a 1000-class image classification model running on Raspberry Pi Zero and Raspberry Pi 3, leveraging the VideoCore IV GPU found in Broadcom BCM283x processors in order to detect objects faster than with the CPU, more precisely about 3 times faster than using the four Cortex A53 cores in RPi 3. They just connected a battery, a display, and the official Raspberry Pi camera to the Raspberry Pi boards to be able to recognize various objects and animals. The first demo is with Raspberry Pi Zero. […]

Open Source ARM Compute Library Released with NEON and OpenCL Accelerated Functions for Computer Vision, Machine Learning

GPU compute promises to deliver much better performance compared to CPU compute for applications such as computer vision and machine learning, but the problem is that many developers may not have the right skills or time to leverage APIs such as OpenCL. So ARM decided to write their own ARM Compute Library and has now released it under an MIT license. The functions found in the library include:

- Basic arithmetic, mathematical, and binary operator functions
- Color manipulation (conversion, channel extraction, and more)
- Convolution filters (Sobel, Gaussian, and more)
- Canny Edge, Harris corners, optical flow, and more
- Pyramids (such as Laplacians)
- HOG (Histogram of Oriented Gradients)
- SVM (Support Vector Machines)
- H/SGEMM (Half and Single precision General Matrix Multiply)
- Convolutional Neural Networks building blocks (Activation, Convolution, Fully connected, Locally connected, Normalization, Pooling, Soft-max)

The library works on Linux, Android or bare metal on armv7a (32bit) or arm64-v8a (64bit) architecture, and makes use […]