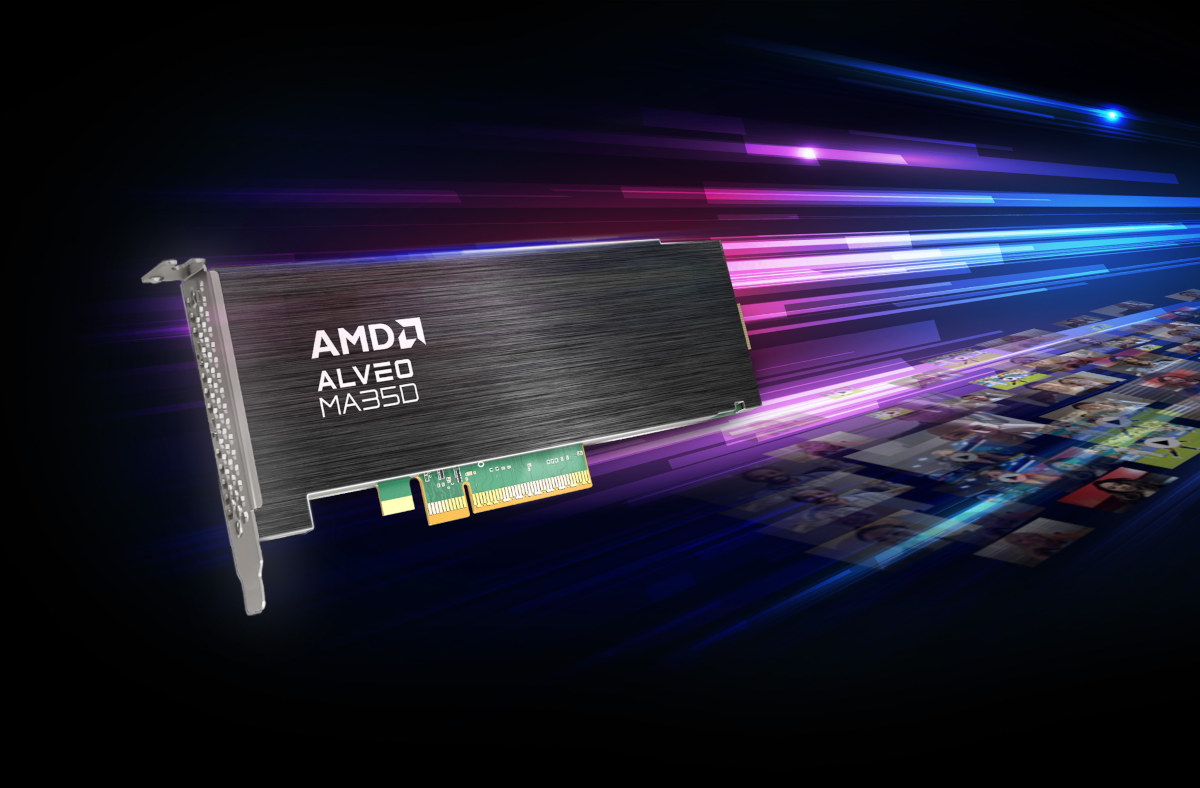

The AMD Alveo MA35D media accelerator PCIe card is based on a 5nm ASIC capable of transcoding up to 32 Full HD (1080p60) AV1 streams in real time, and is designed for low-latency, high-volume interactive streaming applications such as watch parties, live shopping, online auctions, and social streaming. AMD says the Alveo MA35D utilizes a purpose-built VPU to accelerate the entire video pipeline, and the ASIC can also handle up to 8x 4Kp60 or 4x 8Kp30 AV1 streams per card. H.264 and H.265 codecs are also supported, and the company claims its “next-generation AV1 transcoder engines” deliver up to a 52% reduction in bitrate at the same video quality against “an open source x264 veryfast SW model”.

AMD Alveo MA35D highlights:

- Auxiliary CPU – 2x 64-bit quad-core RISC-V to perform control and board management tasks
- AI Processor – 22 TOPS per card for AI-enabled “smart streaming” for video quality optimization
- Memory – 16GB […]
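As a quick sanity check on the stream counts quoted above, the three configurations work out to the same aggregate pixel rate. The sketch below assumes the standard 16:9 resolutions (1920x1080, 3840x2160, 7680x4320), which the announcement does not spell out. It is plain arithmetic, not a model of how the transcoder schedules its work.

```python
# Aggregate pixel throughput for each stream configuration quoted by AMD.
# Assumption: standard 16:9 frame sizes for 1080p, 4K and 8K.
CONFIGS = {
    "32x 1080p60": (32, 1920, 1080, 60),
    "8x 4Kp60": (8, 3840, 2160, 60),
    "4x 8Kp30": (4, 7680, 4320, 30),
}


def pixel_rate(streams, width, height, fps):
    """Return the total number of pixels processed per second."""
    return streams * width * height * fps


rates = {name: pixel_rate(*cfg) for name, cfg in CONFIGS.items()}

for name, rate in rates.items():
    print(f"{name}: {rate / 1e9:.2f} Gpixel/s")
```

All three lines print the same value (about 3.98 Gpixel/s), which suggests the per-card limits are set by raw pixel throughput rather than by the number of streams.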

FOSDEM 2023 schedule – Open-source Embedded, Mobile, IoT, Arm, RISC-V, etc… projects

After two years of taking place exclusively online, FOSDEM 2023 is back in Brussels, Belgium, with thousands expected to attend the 2023 edition of the “Free and Open Source Developers’ European Meeting” both onsite and online. FOSDEM 2023 will take place on February 4-5 with 776 speakers, 762 events, and 63 tracks. As usual, I’ve made my own little virtual schedule below, mostly with sessions from the Embedded, Mobile and Automotive devroom, but also from other devrooms including “Open Media”, “FOSS Educational Programming Languages”, “RISC-V”, and others.

FOSDEM Day 1 – Saturday February 4, 2023

10:30 – 10:55 – GStreamer State of the Union 2023 by Olivier Crête

GStreamer is a popular multimedia framework making it possible to create a large variety of applications dealing with audio and video. Since the last FOSDEM, it has received a lot of new features: its RTP & WebRTC stack has greatly improved, Rust […]

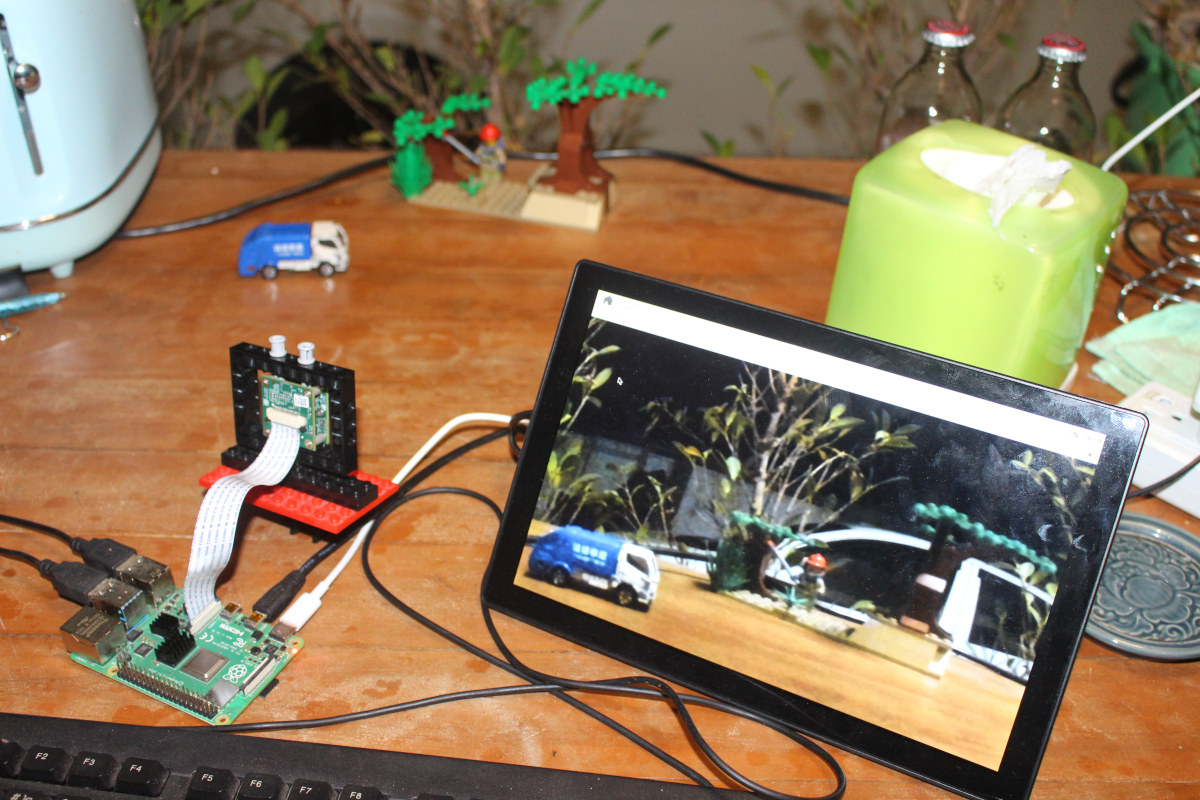

Getting started with e-CAM20_CURB camera for Raspberry Pi 4

e-con Systems e-CAM20_CURB is a 2.3 MP fixed-focus global shutter color camera designed for the Raspberry Pi 4, and the company has sent us a sample for evaluation and review. We’ll start by providing specifications, before checking out the package content, connecting the camera to the Raspberry Pi 4 with a DIY LEGO mount, showing how to access the resources for the camera, and trying tools provided in the Raspberry Pi OS or Yocto Linux image.

e-CAM20_CURB specifications

The camera is composed of two boards with the following specifications:

- eCAM217_CUMI0234_MOD full HD color camera with 4-lane MIPI CSI-2 interface
  - ON Semiconductor AR0234CS CMOS sensor with 1/2.6″ optical form-factor
  - Global Shutter
  - Onboard ISP
  - Uncompressed UYVY streaming
    - HD (1280 x 720) up to 120 fps
    - Full HD (1920 x 1080) up to 65 fps
    - 2.3 MP (1920 x 1200) up to 60 fps
  - External Hardware Trigger Input […]
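To put the uncompressed UYVY modes listed above into perspective, the raw payload bandwidth can be estimated from the resolution and frame rate. This is a rough sketch: it relies on UYVY being a packed 4:2:2 format at 16 bits per pixel, and it ignores blanking intervals and MIPI CSI-2 protocol overhead, so real link usage will be somewhat higher. The function name `uyvy_gbps` is only illustrative.

```python
# Estimate the raw payload bandwidth of the uncompressed UYVY modes.
# UYVY is packed YUV 4:2:2, i.e. 16 bits (2 bytes) per pixel on average.
BITS_PER_PIXEL_UYVY = 16

# (width, height, max fps) taken from the specifications above
MODES = {
    "HD": (1280, 720, 120),
    "Full HD": (1920, 1080, 65),
    "2.3 MP": (1920, 1200, 60),
}


def uyvy_gbps(width, height, fps):
    """Return the payload bandwidth in Gbit/s, excluding any link overhead."""
    return width * height * BITS_PER_PIXEL_UYVY * fps / 1e9


for name, (width, height, fps) in MODES.items():
    print(f"{name} {width}x{height} @ {fps} fps: {uyvy_gbps(width, height, fps):.2f} Gbit/s")
```

Under these assumptions, the three modes land between roughly 1.8 and 2.2 Gbit/s of payload, which gives a sense of why each mode has a different frame rate ceiling.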

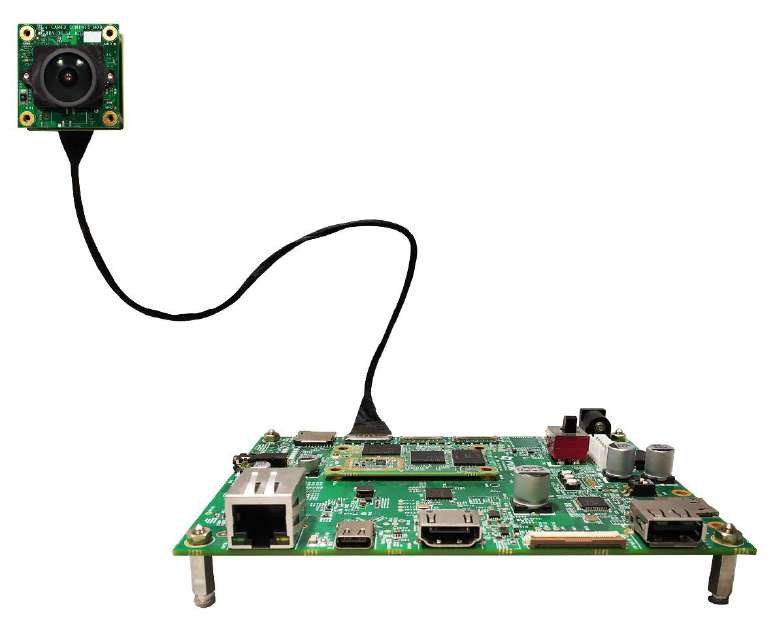

Qualcomm Edge AI Vision kit combines Qualcomm QCS610 SoC & Sony IMX415 4K ultra low light camera

e-con Systems has recently launched the qSmartAI80_CUQ610, a Qualcomm Edge AI vision kit based on the Qualcomm QCS610 octa-core Cortex-A76/A55 processor and featuring a camera module based on the Sony STARVIS IMX415 4K ultra low light sensor. The kit is composed of a QCS610 module with 4GB RAM and 16GB eMMC flash and a carrier board designed by VVDN Technologies, as well as e-con Systems’ 4K camera module, and is designed to run vision machine learning and deep learning models at the edge.

qSmartAI80_CUQ610 “Qualcomm Edge AI Vision kit” specifications:

- System-on-Module
  - SoC – Qualcomm QCS610 octa-core processor with 2x Kryo 460 Gold cores @ 2.2 GHz (Cortex-A76 class), and 6x Kryo 430 Silver low-power cores @ 1.8 GHz (Cortex-A55 class), Adreno 612 GPU @ 845 MHz, with OpenGL ES 3.2, Vulkan 1.1, OpenCL 2.0, Hexagon DSP with Hexagon Vector eXtensions (HVX), Spectra 250L ISP, 4Kp30 VPU H.265 encode/decode; Note: Qualcomm QCS410 SoC is available upon […]

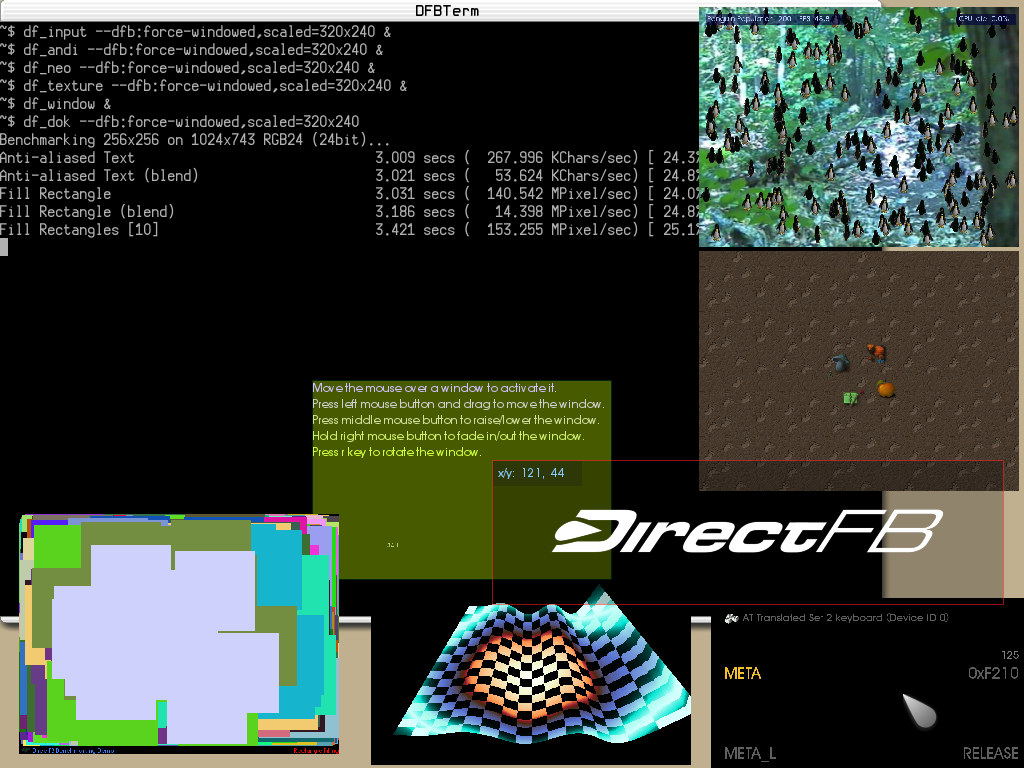

DirectFB2 project brings back DirectFB graphics library for Linux embedded systems

DirectFB2 is a new open-source project that brings back DirectFB, a graphics library optimized for Linux-based embedded systems that was popular several years ago for 2D user interfaces but has since mostly faded away. DirectFB2 attempts to preserve the original DirectFB backend while adding new features such as support for modern 3D APIs like Vulkan and OpenGL ES. I personally used DirectFB in 2008-2009 while working with Sigma Designs media processors that relied on the library to render the user interfaces for IPTV boxes, karaoke machines, and so on. I remember this forced me to switch from a MicroWindows + Framebuffer solution, but the DirectFB API was easy enough to use and allowed us to develop a nicer user interface. I found out about the new project while checking out the FOSDEM 2022 schedule and a talk entitled “Back to DirectFB! The revival of DirectFB with DirectFB2” which will be presented […]

Customize GStreamer build with only the features needed for your application

Thanks to a partnership between Collabora and Huawei, it is now possible to build GStreamer with just the features required for a specific application, reducing the binary size for space-constrained embedded systems. GStreamer is a very popular open-source multimedia framework used in a wide variety of projects and products, with an impressive number of features spread over 30 libraries and more than 1600 elements in 230 plugins. This is not a problem on desktop PCs and most smartphones, but the size of the binary may be too large for some systems, and until recently there was no easy way to customize a GStreamer build for a specific application. But Collabora changed the code to allow gst-build to generate a minimal GStreamer build. The company built upon a new feature from GStreamer 1.18, released in September 2020, that makes it possible to build all of GStreamer into a single shared library named […]

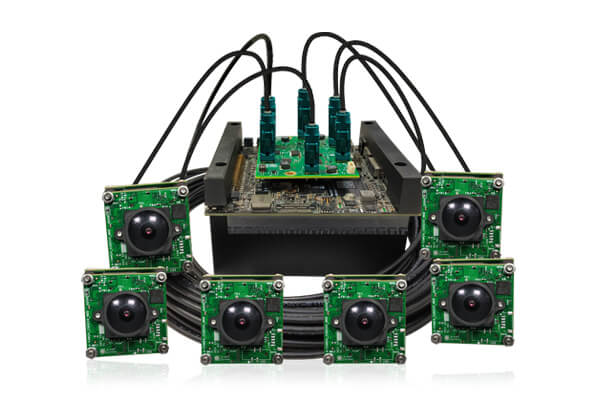

GMSL2 camera kit supports 15-meter long cables, up to six cameras with NVIDIA Jetson Xavier AGX

We’ve recently seen it’s possible to use a long cable with the Raspberry Pi camera thanks to the THine camera extension kit that works with 20-meter LAN cables using V-by-One HS technology. e-con Systems has now launched a similar solution for NVIDIA Jetson Xavier AGX, one of the most powerful Arm devkits available in 2021, with the NileCAM21 Full HD GMSL2 HDR camera that supports cables up to 15 meters long as well as LFM (LED Flickering Mitigation) technology.

NileCAM21 camera features and specifications:

- Based on OnSemi AR0233 Full HD camera module with S-mount interchangeable lens holder
- Gigabit Multimedia Serial Link 2 (GMSL2) interface with FAKRA connector
- Shielded coaxial cable for transmission of power and data over long distances (up to 15m)
- High Dynamic Range (HDR) with LED Flickering Mitigation (LFM)
- Supported resolutions and max frame rates for uncompressed UYVY streaming
  - VGA (640 x 480) up to 60 fps
  - qHD (960 x 540) […]

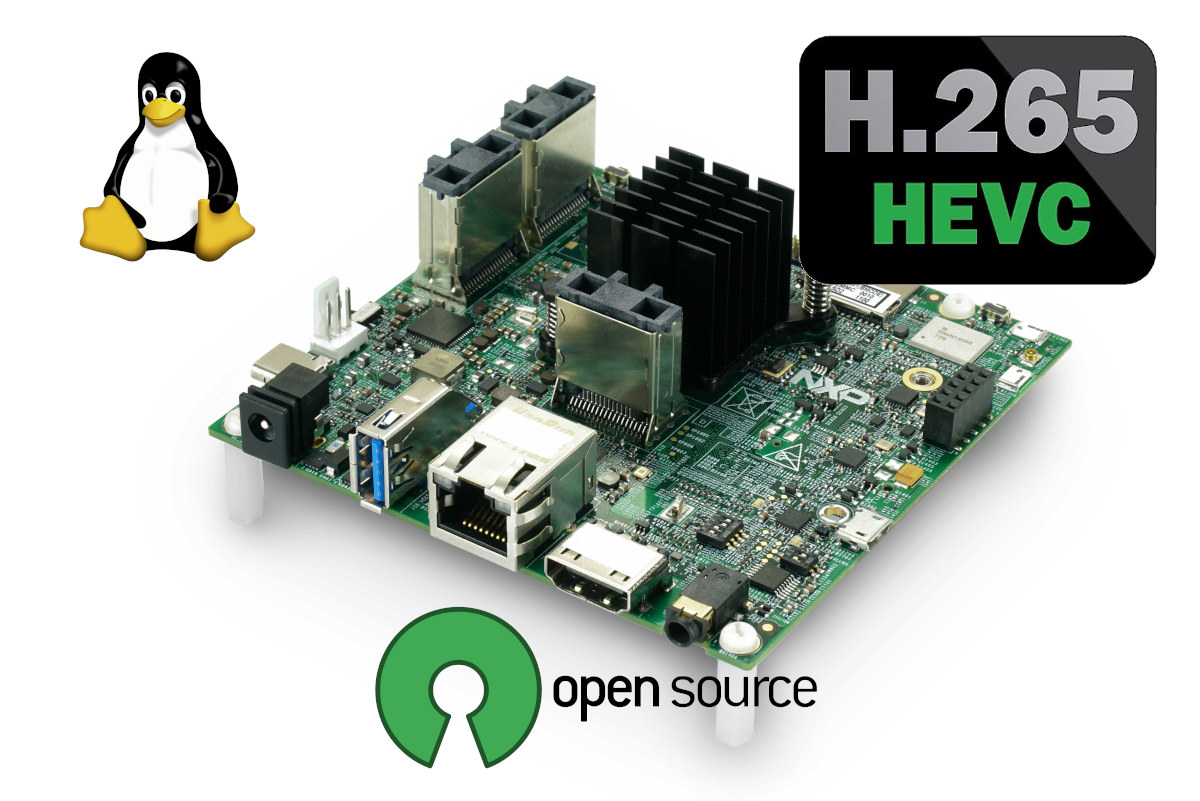

Open-source H.265/HEVC Hantro G2 decoder driver added to NXP i.MX 8M in Linux

Getting open-source multimedia drivers on Arm Linux is one of the most difficult tasks. That’s why there’s so much talk about open-source GPU drivers for 2D & 3D graphics acceleration, but work on video hardware decoding and encoding is also a challenge. We’ve previously seen Bootlin work on the Cedrus open-source driver for the Allwinner VPU (Video Processing Unit), and Collabora has been working on open-source drivers for VeriSilicon’s Hantro G1 and G2 VPUs found in some Rockchip, NXP, and Microchip processors. The company previously delivered a Hantro G1 open-source driver for the JPEG, MPEG-2, VP8, and H.264 codecs, but H.265/HEVC relies on Hantro G2, and the patch for H.265 hardware video decoding on NXP i.MX 8M Quad has just been submitted to mainline Linux. Benjamin Gaignard explains more in his commit message:

The IMX8MQ got two VPUs but until now only G1 has been enabled. This series aim to add the […]