The STMicro VL53L9 sensor is the latest addition to the company’s FlightSense product family. The direct Time-of-Flight (ToF) 3D LiDAR (light detection and ranging) sensor offers a resolution of up to 2,300 zones. The module is described as all-in-one and easy to integrate. It comes in a small, reflowable package that contains all the necessary components for sensing objects and processing images. The sensor features an array of single-photon avalanche diodes (SPADs) for photon detection, a post-processing SoC, and two vertical-cavity surface-emitting lasers (VCSELs) powered by a dedicated bipolar-CMOS-DMOS (BCD) VCSEL driver. Like the VL53L7CX and the VL53L8, the VL53L9 is a multi-zone ToF sensor, offering distance measurements over up to 54 x 42 zones with a wide 54° x 42° field of view. Unlike most IR sensors, the VL53L9 sensor uses backside illumination direct ToF technology to ensure absolute distance measurement, regardless of the target color […]
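
To put the figures above in context, here is a minimal Python sketch of the arithmetic behind a multi-zone direct ToF sensor. It is not ST's API or processing pipeline: the function names `tof_distance_m` and `zone_grid` are made up for illustration, and the per-zone angle assumes the field of view is divided evenly between zones, which the excerpt does not state.

```python
# Speed of light in vacuum (m/s); close enough to air for a rough estimate.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the measured round-trip time of a light pulse.

    Direct ToF principle: the pulse travels to the target and back,
    so the one-way distance is half of (speed of light x time).
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def zone_grid(cols: int, rows: int, fov_h_deg: float, fov_v_deg: float):
    """Return (zone count, degrees per zone horizontally, degrees per zone vertically).

    Assumption (hypothetical): zones split the field of view evenly.
    """
    return cols * rows, fov_h_deg / cols, fov_v_deg / rows


if __name__ == "__main__":
    zones, deg_h, deg_v = zone_grid(54, 42, 54.0, 42.0)
    print(zones, deg_h, deg_v)               # 2268 1.0 1.0
    print(round(tof_distance_m(10e-9), 3))   # 1.499 (meters for a 10 ns round trip)
```

The 54 x 42 grid works out to 2,268 zones, which matches the "up to 2,300 zones" figure once rounded, and under the even-split assumption each zone covers roughly 1° x 1°.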

ROCK Base triple-frequency RTK/GNSS base station works with Web3 GEODNET decentralized network

ROCK Robotic has announced the ROCK Base, a triple-band multi-constellation RTK/GNSS base station that works with the Web3 GEODNET decentralized GNSS reference network. The solution is designed to offer centimeter accuracy to support applications such as civil surveying, high-definition mapping, digital twin creation, and robotic solutions. Real-Time Kinematic (RTK) is a relative positioning technique that relies on GNSS (GPS, Galileo, GLONASS, BeiDou …) and allows centimeter positioning accuracy. The technique requires an RTK “Base station” like the ROCK Base and a lower-cost “Rover station” typically attached to a vehicle or drone. For higher accuracy, it’s also possible to use a Network RTK: Network RTK is based on the use of several widely spaced permanent stations. Data from reference stations is combined in a common data processing in a control center. The control center computes corrections for the spatially correlated errors within the network. These corrections are transmitted to the rover. Comparing to […]

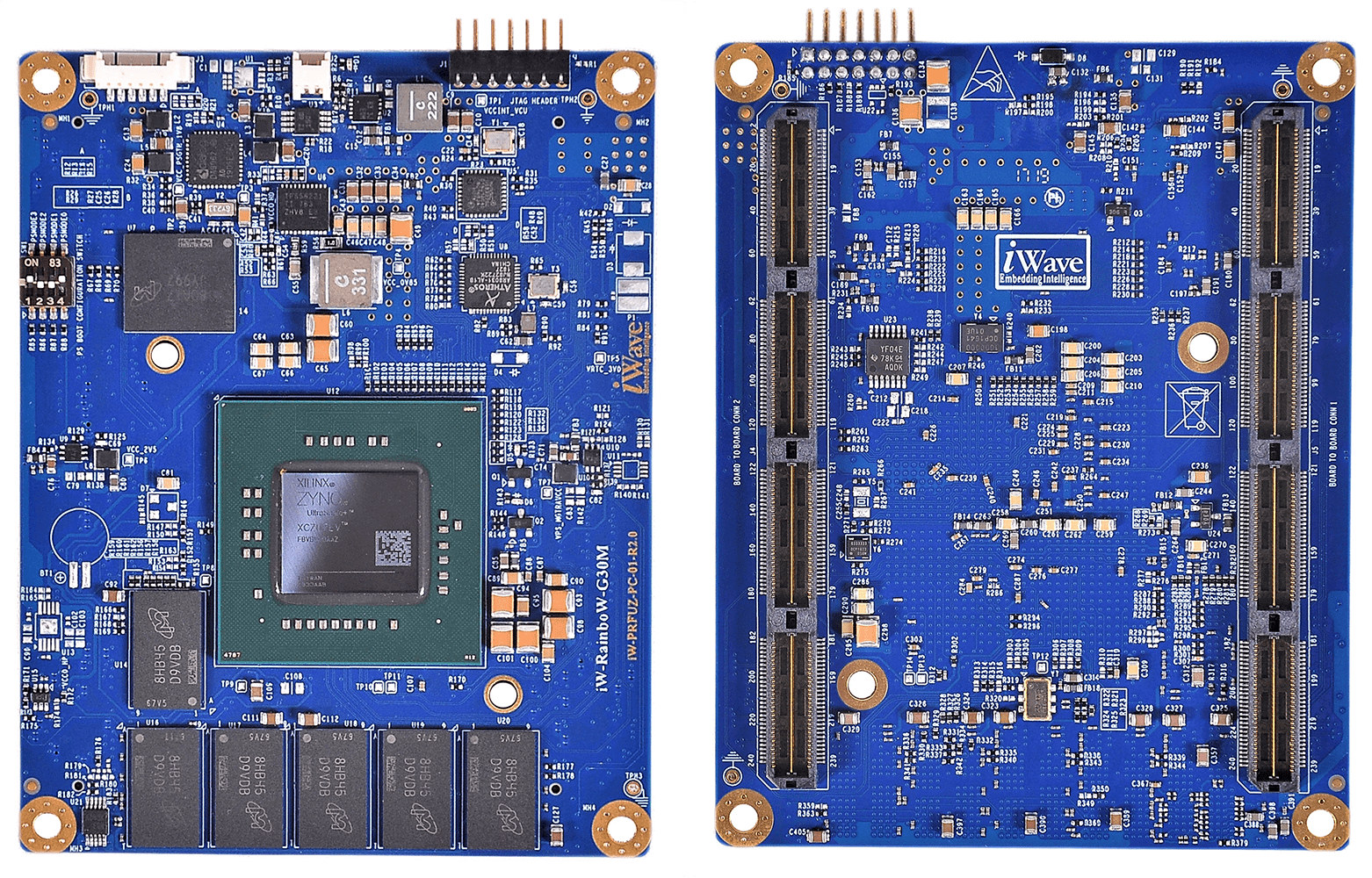

Zynq UltraScale+ SoM with up to 12GB RAM targets LiDAR applications

iWave Systems iW-RainboW-G30M is a system-on-module (SoM) based on the AMD Xilinx Zynq UltraScale+ ZU4/ZU5/ZU7 FPGA MPSoC and geared specifically towards LiDAR in scientific and military applications. The module comes with up to 12GB of RAM (4GB for the programmable logic (PL) and 8GB for the Arm Cortex-A53/R5-based Processing System (PS)), two 240-pin high-density, high-speed connectors with 142 user I/Os, 16x GTH transceivers up to 16.3Gbps, and four GTR transceivers up to 6Gbps. iW-RainboW-G30M specifications: FPGA MPSoC – AMD Xilinx Zynq UltraScale+ ZU4, ZU5, or ZU7 MPSoC with Processing System (PS) featuring 2x or 4x Arm Cortex-A53 cores @ 1.5 GHz, two Cortex-R5 cores @ 600MHz, H.264/H.265 Video Encoder/Decoder (VCU), Arm Mali-400MP2 GPU @ 677MHz, and Programmable Logic (PL)/FPGA with up to 504K logic cells & 230K LUTs; System Memory – 4GB DDR4 64-bit RAM with ECC for PS (upgradeable up to 8GB), 2GB DDR4 16-bit RAM for PL (upgradeable up to […]

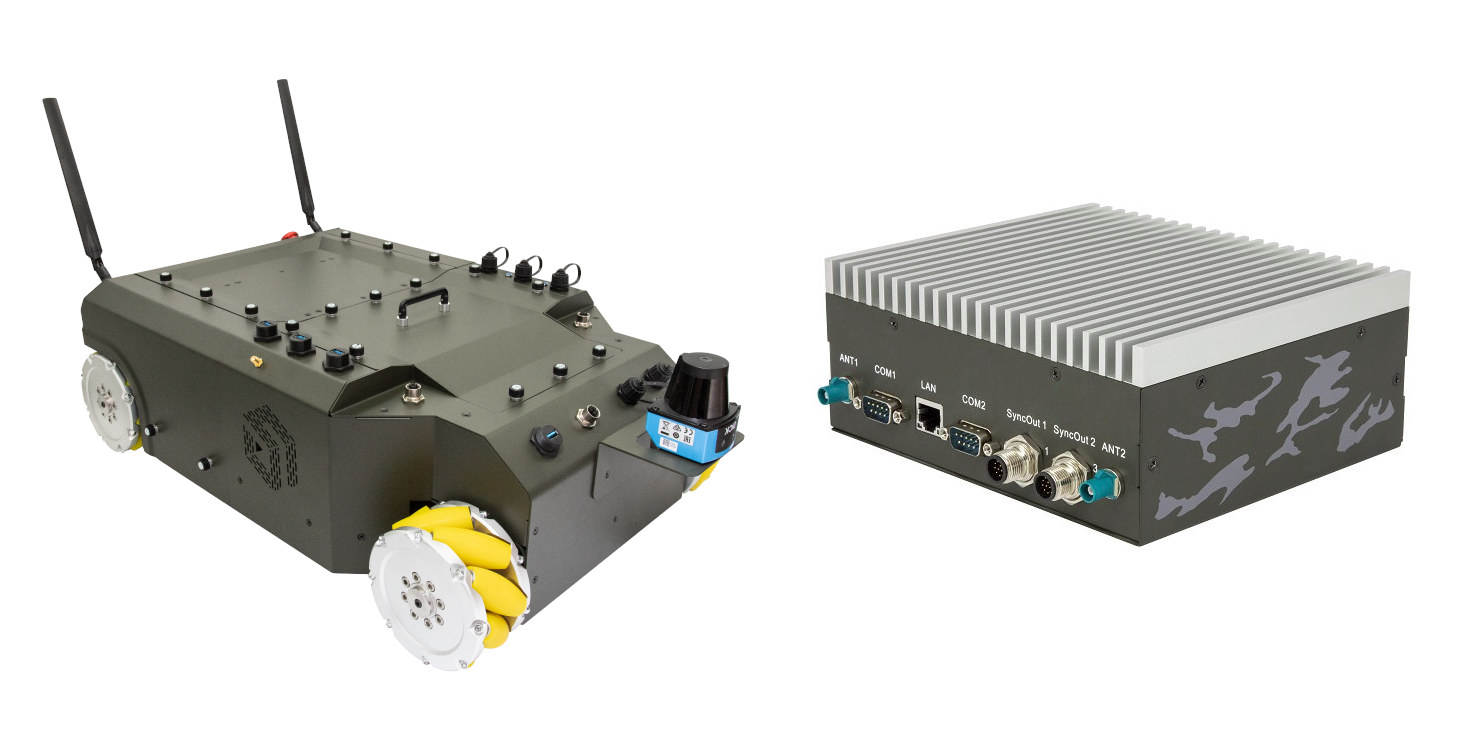

SyncBot educational mobile robot supports NVIDIA Xavier NX or Intel Tiger Lake controller

Syncbotic Syncbot is a four-wheel autonomous mobile robot (AMR) platform for research and education that can be fitted with an NVIDIA Xavier NX or an Intel Apollo Lake/Tiger Lake-based controller running the Ubuntu 20.04 operating system with the ROS 2 framework, and comes with a motion control MCU board with an EtherCAT master running Zephyr OS. The robot comes with four 400W TECO servo motors, can handle up to 80kg payloads for sensors and a robotic arm, features 12V and 24V power output for sensors and four USB 3.0 ports, and can also be equipped with an eight-camera kit with Intel RealSense and ToF cameras. Syncbot AMR specifications: Robot Controller Platform (one or the other) – SyncBotic A100 evaluation kit (Apollo Lake E3940), SyncBotic SBC-T800 series (Intel Tiger Lake UP3), SyncBotic SBC W series (Intel Tiger Lake UP3, waterproof version), SyncBotic NSync-200 series (NVIDIA NX); Dimensions – 200 x 190 mm; STM32-based Motion […]

Onion Tau is an affordable 3D depth LiDAR camera (Crowdfunding)

Onion is better known for its Omega IoT boards running OpenWrt, but the company has now come up with a completely different product: the Onion Tau 3D depth camera equipped with a 160×60 LiDAR sensor. The device plugs into a host computer or board like a USB webcam, but instead of transferring standard images, the camera produces 3D depth data that can be used to detect thin objects, track moving objects, and feed other applications that rely on environment mapping, such as SLAM (Simultaneous Localization and Mapping). Onion Tau LiDAR camera (TA-L10) specifications: Depth technology – LiDAR Time of Flight; Depth stream output – 160 x 60 @ 30 fps; Depth range – 0.1 to 4.5 meters; Depth field of view (FOV) – 81° x 30°; Grayscale 2D camera image sent with 3D depth map data; Host interface – USB Type-C port; Dimensions – 90 x 41 x 20 mm; 4x […]

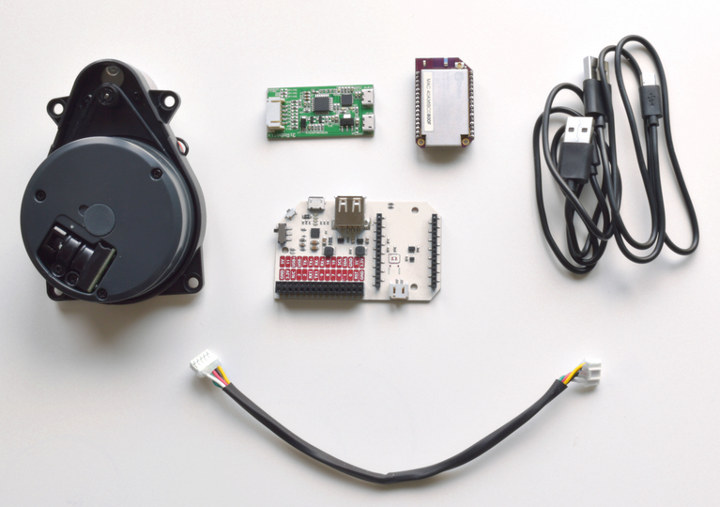

$200 Omega2 WiFi LIDAR Kit Comes with a 360˚ 2D LIDAR Scanner

Onion Omega2 is a tiny WiFi board running OpenWrt that launched for as low as $5 via a crowdfunding campaign around 2 years ago. At the time, I reviewed the Onion Omega2+ board, which comes with more memory and storage, together with its dock, and I found it was fairly easy to get started with the solution. You can now buy the board for a little over $10, but the company also offers kits, and their latest product is the Omega2 wireless LIDAR kit with an indoor 360˚ 2D LiDAR scanner that uses rotating laser ranging to measure and map exact distances to indoor surroundings. The kit comprises the following items: Onion Omega2+ board with MediaTek MT7688 MIPS processor, 128MB RAM, 32MB flash; Power Dock 2 with 30-pin expansion header and USB host port; Delta2B 360˚ LiDAR Scanner – Up to 5000 samples/second; Range – 0.2 to 8 […]
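
As a rough illustration of how rotating laser ranging becomes a 2D map, the Python sketch below converts (angle, distance) samples into Cartesian points around the scanner. It is a generic polar-to-Cartesian conversion, not Onion's or the Delta2B's software: the function names, the sample format, and the range limits passed in the usage lines are all assumptions made for the example.

```python
import math


def polar_to_xy(angle_deg: float, distance: float) -> tuple[float, float]:
    """Convert one (angle, distance) sample to (x, y) in the scanner's frame."""
    theta = math.radians(angle_deg)
    return distance * math.cos(theta), distance * math.sin(theta)


def scan_to_points(samples, min_range: float, max_range: float):
    """Turn one rotation of samples into a list of (x, y) points.

    `samples` is an iterable of (angle_deg, distance) pairs (a hypothetical
    format). Readings outside [min_range, max_range] are dropped, since
    values outside a scanner's rated range are usually unreliable.
    """
    points = []
    for angle_deg, distance in samples:
        if min_range <= distance <= max_range:
            points.append(polar_to_xy(angle_deg, distance))
    return points


if __name__ == "__main__":
    # Three illustrative samples; the last one is closer than the minimum range.
    scan = [(0.0, 1.0), (90.0, 2.0), (180.0, 0.1)]
    for x, y in scan_to_points(scan, min_range=0.2, max_range=8.0):
        print(f"{x:.2f} {y:.2f}")
```

Distances stay in whatever unit the scanner reports; the sketch only rotates them into x/y coordinates, which is the form most mapping code expects.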

Getting Started with TinyLIDAR Time-of-Flight Sensor on Arduino and Raspberry Pi

TinyLIDAR is an inexpensive and compact board based on the STMicro VL53L0X Time-of-Flight (ToF) ranging sensor that allows you to measure distances up to 2 meters using infrared signals at up to 60 Hz. Contrary to most other VL53L0X boards, it also includes an STM32L0 microcontroller that takes care of most of the processing, frees up resources on your host board (e.g. Arduino UNO), and should be easier to control thanks to I2C commands. The project was successfully funded on Indiegogo by close to 600 backers, and the company contacted me to provide a sample of the board, which I have now received and tested with Arduino (Leonardo) and Raspberry Pi (2). TinyLIDAR Unboxing – I was expecting a single board, but instead I received a bubble envelope with five small zipped packages. Opening them up revealed three TinyLIDAR boards, the corresponding Grove to jumper cables, and a bracket PCB for […]

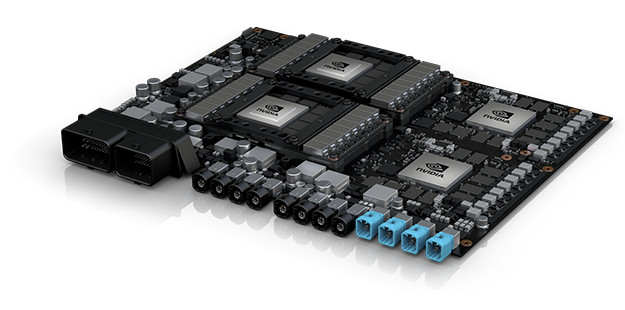

NVIDIA DRIVE PX Pegasus Platform is Designed for Fully Autonomous Vehicles

Many companies are now involved in the quest to develop self-driving cars and are getting there step by step, with 6 levels of autonomous driving defined as follows, based on info from Wikipedia: Level 0 – Automated system issues warnings but has no vehicle control. Level 1 (“hands on”) – Driver and automated system share control over the vehicle. Examples include Adaptive Cruise Control (ACC), Parking Assistance, and Lane Keeping Assistance (LKA) Type II. Level 2 (“hands off”) – The automated system takes full control of the vehicle (accelerating, braking, and steering), but the driver is still expected to monitor the driving and be prepared to intervene immediately at any time. You’ll actually have your hands on the steering wheel, just in case… Level 3 (“eyes off”) – The driver can safely turn their attention away from the driving tasks, e.g. the driver can text or watch a movie. The system may ask […]