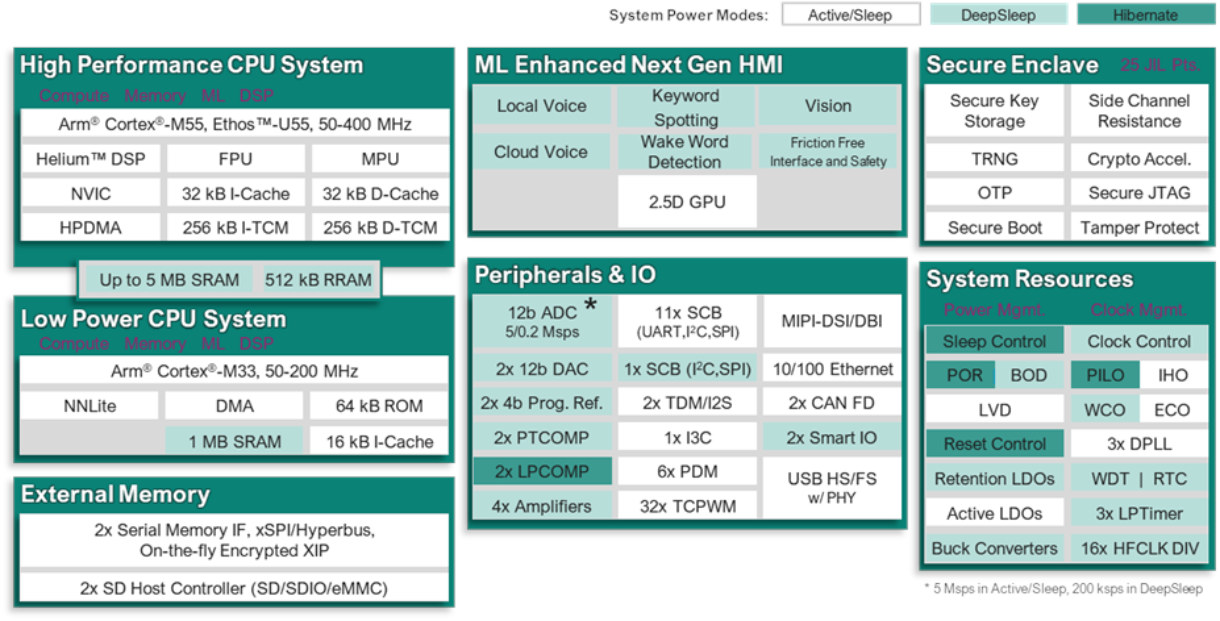

Infineon PSOC Edge E81, E83, and E84 MCU series are dual-core Cortex-M55/M33 microcontrollers with an optional Arm Ethos-U55 microNPU and 2.5D GPU, designed for IoT, consumer, and industrial applications that could benefit from machine learning acceleration. This follows the utterly useless announcement Infineon made about PSoC Edge Cortex-M55/M33 microcontrollers in December 2023, and this time the announcement introduces actual parts that people may use in their designs. The PSOC Edge E81 series is an entry-level ML microcontroller, the PSOC Edge E83 series adds more advanced machine learning with the Ethos-U55 microNPU, and the PSOC Edge E84 series further adds a 2.5D GPU for HMI applications. Infineon PSOC Edge E81, E83, E84-series specifications: MCU cores – Arm Cortex-M55 high-performance CPU system up to 400 MHz with FPU, MPU, Arm Helium support, 256KB I-TCM, 256KB D-TCM, 4MB SRAM (Edge E81/E83) or 5MB SRAM (Edge E84); Arm Cortex-M33 low-power CPU system up […]

Octavo OSD32MP2 System-in-Package (SiP) packs an STM32MP25 SoC, DDR4, EEPROM, and passive components into a single chip

Octavo Systems OSD32MP2 is a family of two System-in-Package (SiP) modules, the OSD32MP2 and OSD32MP2-PM, that combine the STMicro STM32MP25 Arm Cortex-A35/M33 AI processor, DDR4 memory, and other components to reduce the complexity, size, and total cost of ownership of solutions based on STM32MP2 chips. The OSD32MP2 comes in a larger, yet still compact, 21x21mm package with the STM32MP25, DDR4, EEPROM, oscillators, PMIC, passive components, and an optional RTC, while the OSD32MP2-PM is even smaller at 14x9mm and combines the STM32MP25, DDR4, and passive components in a single chip. OSD32MP2 specifications: SoC – STMicro STM32MP25; CPU – Up to 2x 64-bit Arm Cortex-A35 @ 1.5 GHz; MCU – 1x Cortex-M33 @ 400 MHz with FPU/MPU, 1x Cortex-M0+ @ 200 MHz in SmartRun domain; GPU – VeriSilicon 3D GPU @ 900 MHz with OpenGL ES 3.2 and Vulkan 1.2 APIs support; VPU – 1080p60 H.264, VP8 video decoder/encoder; Neural […]

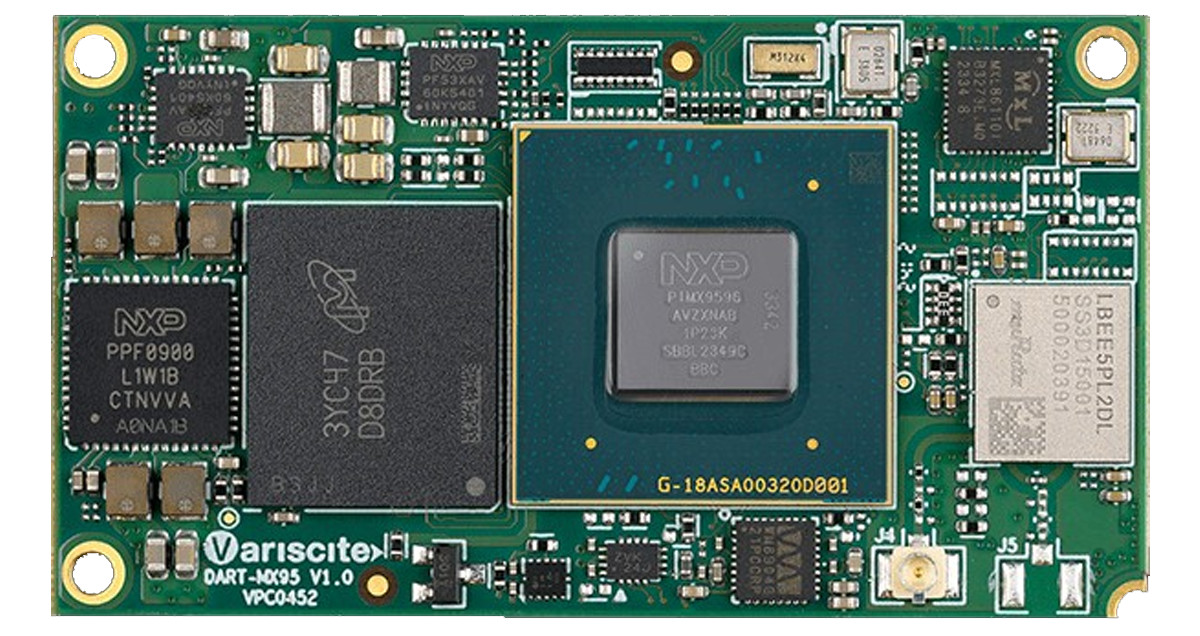

Variscite DART-MX95 SoM – Edge Computing with dual GbE, 10GbE, Wi-Fi 6, and AI/ML capabilities

Introduced at Embedded World 2024, the Variscite DART-MX95 SoM is powered by NXP's i.MX 95 SoC and features an array of high-speed peripherals, including dual GbE, 10GbE, and dual PCIe interfaces. Additionally, this SoM supports up to 16GB LPDDR5 RAM and up to 128GB of eMMC storage. It features a MIPI DSI display interface, multiple audio interfaces, MIPI CSI-2 for camera connectivity, USB ports, and a wide range of other interfaces, making it suitable for a variety of applications. Previously, we have covered many different SoMs designed by Variscite, including the Variscite VAR-SOM-6UL, Variscite DART-MX8M, and Variscite DART-6UL SoM, among others. Feel free to check those out if you are looking for products with NXP SoCs. Variscite DART-MX95 SoM specifications: SoC – NXP i.MX 95; CPU – Up to 6x Arm Cortex-A55 cores @ 2.0GHz; Real-time co-processors – 800MHz Cortex-M7 and 250MHz Cortex-M33; 2D/3D graphics acceleration – 3D GPU with OpenGL ES 3.2, […]

Arm Ethos-U85 NPU delivers up to 4 TOPS for Edge AI applications in Cortex-M7 to Cortex-A520 SoCs

Arm has just introduced its third-generation NPU for edge AI, the Arm Ethos-U85, which scales from 256 GOPS to 4 TOPS, up to four times the maximum performance of the previous-generation Ethos-U65 microNPU, while also delivering 20% higher power efficiency. While previous Arm microNPUs were paired with Cortex-M microcontroller-class cores, potentially embedded into a Cortex-A application processor, the new Ethos-U85 can be paired with Cortex-M microcontrollers as well as Cortex-A application processors up to the Cortex-A510/A520 Armv9 cores. Arm expects the Ethos-U85 to find its way into SoCs designed for factory automation and commercial or smart home cameras, with support for newer transformer networks as well as more traditional Convolutional Neural Networks (CNNs). The Arm Ethos-U85 supports 128 to 2,048 MACs with performance ranging from 256 GOPS to 4 TOPS at 1 GHz, embeds 29 to 267KB RAM, offers SRAM, DRAM, and flash interfaces for external memory, and up […]
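The 256 GOPS to 4 TOPS range lines up with a common rule of thumb for MAC-array accelerators: peak throughput = MAC units × 2 operations per MAC × clock frequency. Here is a minimal sketch, assuming the usual convention that one multiply-accumulate counts as two operations (our assumption, not something stated in the announcement). The power-of-two steps between the two endpoints are only for illustration; the function name `peak_ops_per_second` is hypothetical.

```python
# Back-of-the-envelope peak throughput for a MAC-array NPU.
# Assumption: one multiply-accumulate = 2 operations (multiply + add),
# the convention usually behind GOPS/TOPS marketing figures.

OPS_PER_MAC = 2


def peak_ops_per_second(macs: int, clock_hz: float) -> float:
    """Return peak operations per second for `macs` MAC units at `clock_hz`."""
    return macs * OPS_PER_MAC * clock_hz


if __name__ == "__main__":
    clock = 1e9  # 1 GHz, the clock quoted for the Ethos-U85 performance range
    # Illustrative MAC counts between the 128 and 2,048 endpoints
    for macs in (128, 256, 512, 1024, 2048):
        gops = peak_ops_per_second(macs, clock) / 1e9
        print(f"{macs:>5} MACs -> {gops:,.0f} GOPS")
```

With 128 MACs the sketch gives 256 GOPS and with 2,048 MACs it gives 4,096 GOPS, which is rounded to the quoted 4 TOPS. These are theoretical peaks; sustained throughput depends on the network and memory bandwidth.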

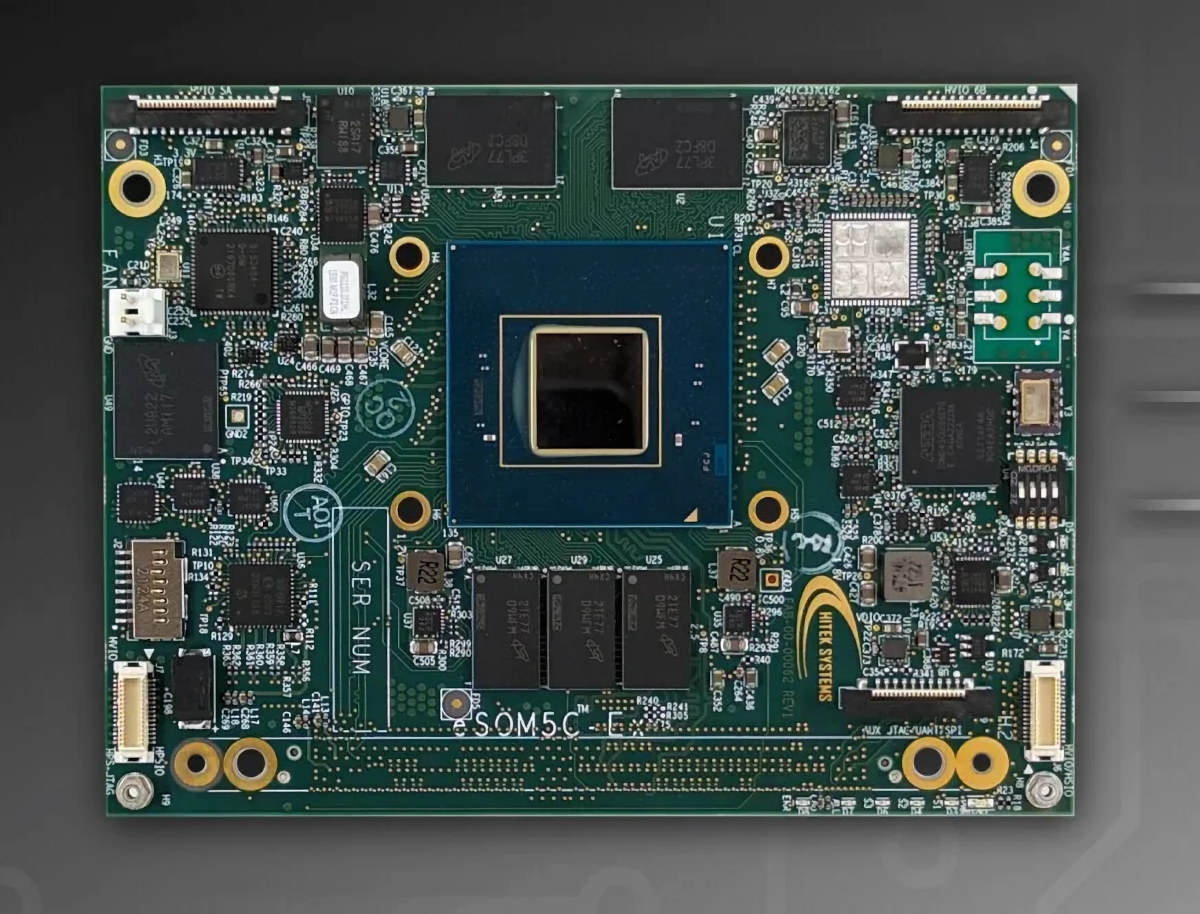

Intel Agilex 5 SoC FPGA embedded SoM targets 5G equipment, 100GbE networking, Edge AI/ML applications

Hitek Systems eSOM5C-Ex is a compact embedded System-on-Module (SOM) based on the mid-range Intel Agilex 5 SoC FPGA E-Series and is pin-to-pin compatible with the company's earlier eSOM7C-xF based on the Agilex 7 FPGA F-Series. The module exposes all I/Os, including up to 24 transceivers, through the same 400-pin high-density connector found in the Agilex 7 FPGA-powered eSOM7-xF and the upcoming Agilex 5 FPGA D-Series SOM, allowing designers to scale from 100K to 2.7 million logic elements (LEs) across the whole product range. Hitek eSOM5C-Ex specifications: SoC FPGA – Intel Agilex 5 E-Series Group A and Group B FPGAs in B32 package; Supported variants: A5E065A/B, A5E043A/B and A5E043A/B; Hard Processing System (HPS) – Dual-core Cortex-A76 and dual-core Cortex-A55; FPGA – Up to 656,080 logic elements, 24x transceivers up to 28Gbps; System Memory – Up to 2x 8GB LPDDR4 for FPGA, 2 or 4GB DDR4 for HPS; Storage – 32GB eMMC flash, […]
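To put the "24 transceivers up to 28Gbps" figure next to the 100GbE use case in the title, here is some simple lane-count arithmetic. This is a sketch under stated assumptions, not a description of supported configurations: it assumes a 100GbE port uses four electrical lanes at 25.78125 Gbit/s (the 100GBASE-R4 / CAUI-4 line rate) and that every transceiver could be dedicated to Ethernet. In practice, the number of hard Ethernet MACs and the FPGA's transceiver banking set the real limit. The helper names are hypothetical.

```python
# Hypothetical lane-count check for the eSOM5C-Ex transceivers.
# Assumptions (not from the module specification):
#   * one 100GbE port = 4 lanes at 25.78125 Gbit/s NRZ (100GBASE-R4 / CAUI-4)
#   * every transceiver could be used for Ethernet (hard-IP limits ignored)

TRANSCEIVERS = 24            # "up to 24 transceivers"
MAX_LANE_GBPS = 28.0         # "up to 28Gbps" per transceiver
LANES_PER_100GBE = 4
LANE_RATE_100GBE_GBPS = 25.78125


def aggregate_raw_gbps(lanes: int, lane_gbps: float) -> float:
    """Sum of raw line rates, before encoding or protocol overhead."""
    return lanes * lane_gbps


def max_100gbe_ports(lanes: int, lane_gbps: float) -> int:
    """Upper bound on 4-lane 100GbE ports from lane count and lane speed alone."""
    if lane_gbps < LANE_RATE_100GBE_GBPS:
        return 0  # lanes too slow for 25G-class signalling
    return lanes // LANES_PER_100GBE


if __name__ == "__main__":
    raw = aggregate_raw_gbps(TRANSCEIVERS, MAX_LANE_GBPS)
    ports = max_100gbe_ports(TRANSCEIVERS, MAX_LANE_GBPS)
    print(f"Raw aggregate: {raw:.0f} Gbit/s")
    print(f"100GbE ports (lane-count bound): {ports}")
```

Under these assumptions the raw aggregate is 672 Gbit/s, and lane count alone would allow six 4-lane 100GbE ports. Treat that number as a ceiling from arithmetic, not a module capability.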

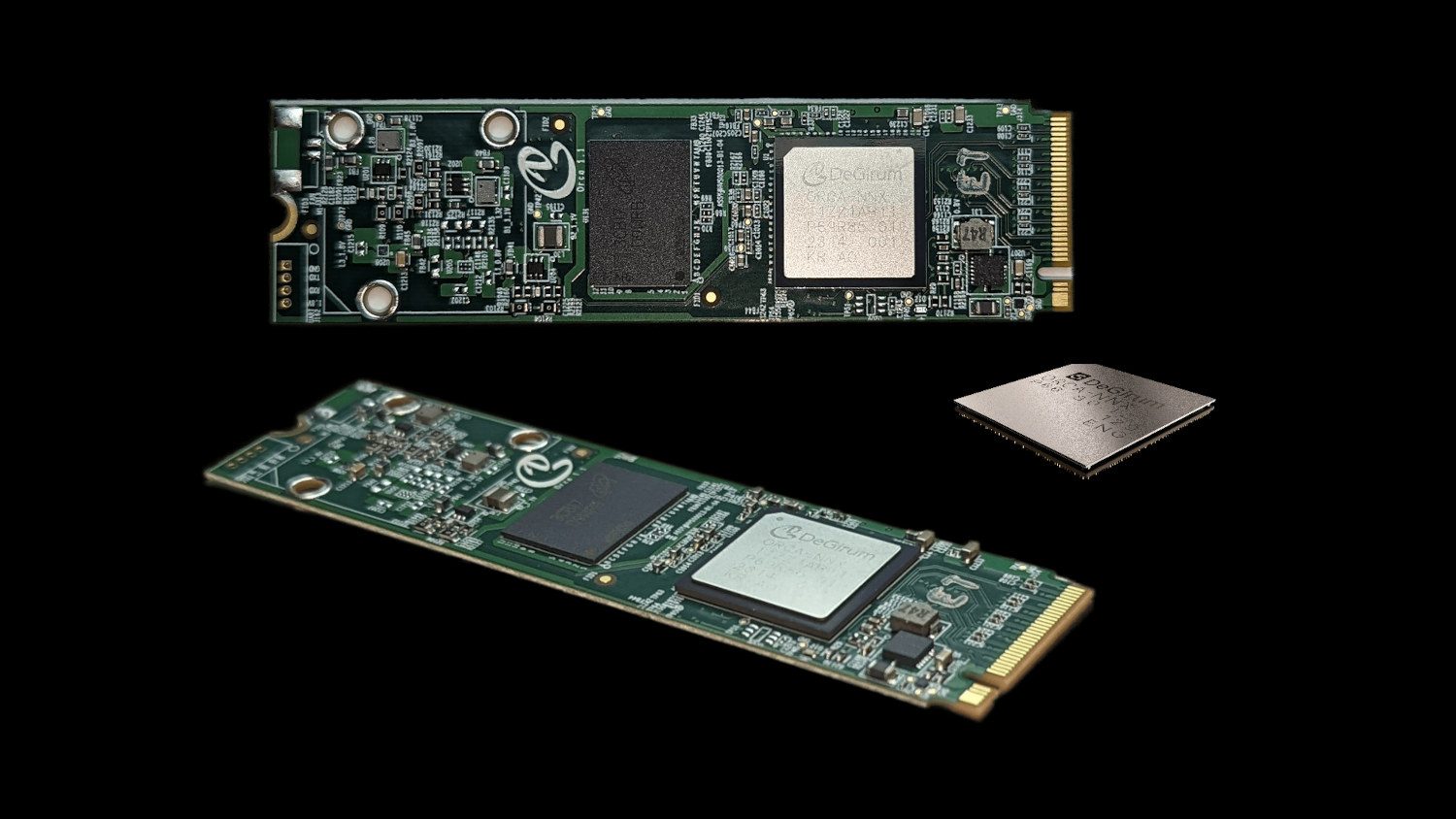

DeGirum ORCA M.2 and USB Edge AI accelerators support TensorFlow Lite and ONNX model formats

I've just come across an Atom-based Edge AI server offered with a range of AI accelerator modules, namely the Hailo-8, Blaize P1600, DeGirum ORCA, and MemryX MX3. I had never heard of the last two, and we may cover the MemryX module a little later, but today I'll have a closer look at the DeGirum ORCA chip and M.2 PCIe module. The DeGirum ORCA is offered as an ASIC, an M.2 2242 or 2280 PCIe module, or (soon) a USB module, and supports TensorFlow Lite and ONNX model formats with INT8 and Float32 ML precision. They were announced in September 2023 and have already been tested in a range of mini PCs and embedded box PCs from Intel (NUC), AAEON, GIGABYTE, BESSTAR, and Seeed Studio (reComputer). DeGirum ORCA specifications: Supported ML Model Formats – ONNX, TFLite; Supported ML Model Precision – Float32, Int8; DRAM Interface – Optional 1GB, 2GB, or 4GB […]
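For readers wondering what "INT8 precision" means for a TensorFlow Lite model, TFLite documents an affine mapping between 8-bit integers and real values: real = scale × (q − zero_point). Below is a minimal sketch of that mapping. The min/max-based choice of `scale` and `zero_point` is one illustrative method and not necessarily what the TFLite converter or DeGirum's toolchain does. The function names are hypothetical.

```python
# Minimal sketch of affine (asymmetric) INT8 quantization, following the
# formula TensorFlow Lite documents: real = scale * (q - zero_point).
# The parameter selection below is an illustrative min/max choice only.

INT8_MIN, INT8_MAX = -128, 127


def choose_params(x_min: float, x_max: float) -> tuple[float, int]:
    """Pick scale and zero_point so [x_min, x_max] maps onto [-128, 127]."""
    # Widen the range so that 0.0 is exactly representable (needed for padding).
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (INT8_MAX - INT8_MIN)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = round(INT8_MIN - x_min / scale)
    zero_point = max(INT8_MIN, min(INT8_MAX, zero_point))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, saturating at the ends of the range."""
    q = round(x / scale) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an int8 value back to an approximate real value."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    scale, zp = choose_params(-1.0, 3.0)
    for x in (-1.0, 0.0, 0.5, 3.0):
        q = quantize(x, scale, zp)
        print(f"{x:+.2f} -> {q:4d} -> {dequantize(q, scale, zp):+.4f}")
```

The round trip shows the trade-off behind INT8 inference: each value is stored in one byte instead of four (Float32), at the cost of a rounding error of at most half a `scale` step inside the calibrated range.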

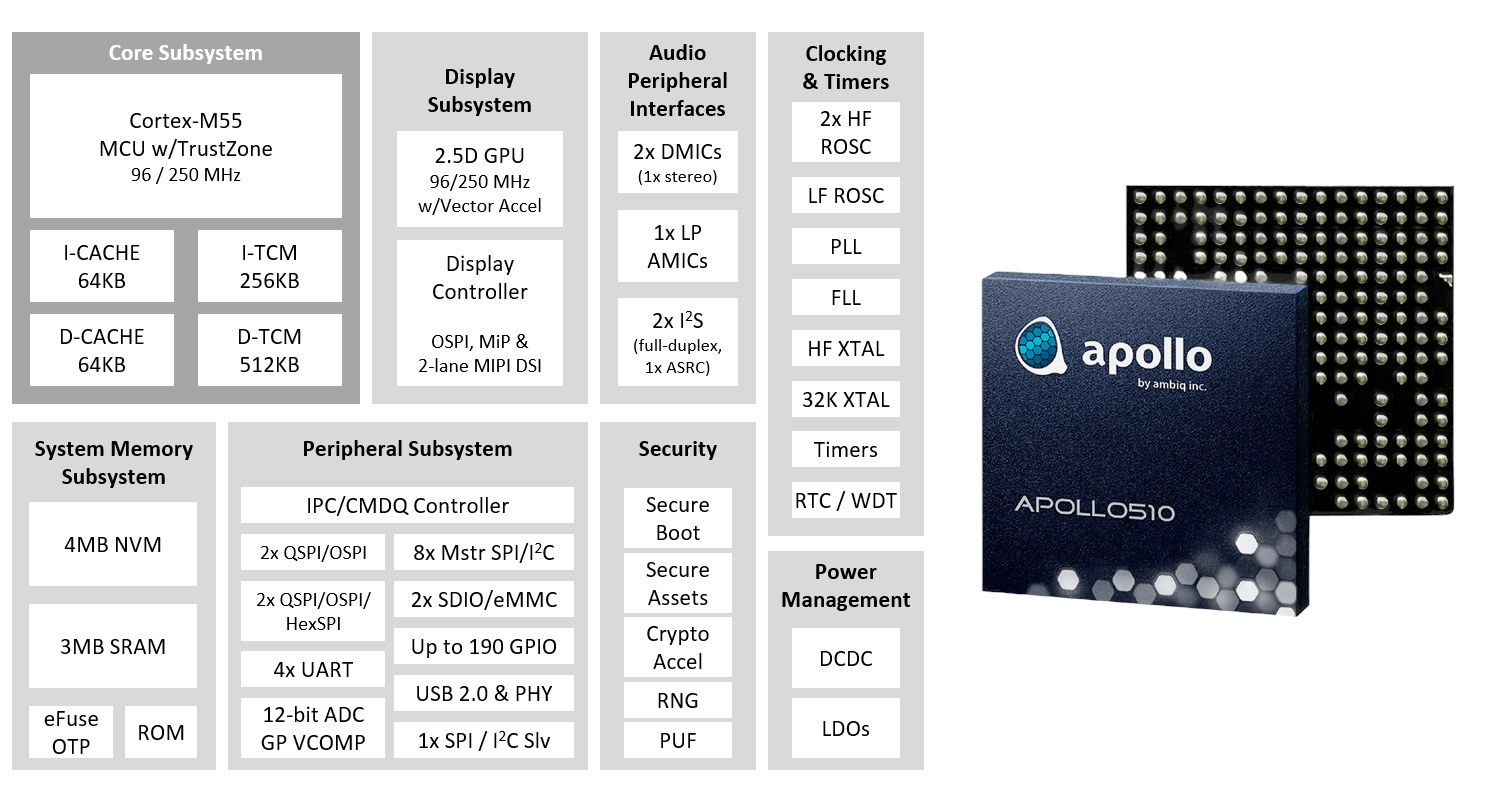

Ambiq Apollo510 Arm Cortex-M55 MCU delivers up to 30x better power efficiency for AI/ML workloads

Ambiq Apollo510 Arm Cortex-M55 microcontroller delivers up to 30 times better power efficiency than typical Cortex-M4 designs and 10 times the performance of the Apollo4 Cortex-M4 sub-threshold microcontroller for AI and ML workloads. The new MCU also comes with 4MB NVM, 3.75MB SRAM, a 2.5D GPU with vector graphics acceleration that's 3.5 times faster than the Apollo4 Plus, and support for low-power Memory-in-Pixel (MiP) displays. Like all other Ambiq microcontrollers, the Apollo510 operates at sub-threshold voltage for ultra-low power consumption and implements security with the company's secureSPOT platform with Arm TrustZone technology. Ambiq Apollo510 specifications: MCU Core – Arm Cortex-M55 core up to 250 MHz with Arm Helium MVE, Arm TrustZone, FPU, MPU, 64KB I-cache, 64KB D-cache, 256KB I-TCM (Tightly Coupled Memory), 256KB D-TCM; Graphics – 2.5D GPU clocked at 96 MHz or 250 MHz with vector graphics acceleration, anti-aliasing hardware acceleration, rasterizer/full alpha blending/texture mapping, texture/framebuffer compression (TSC4, 6, 6A and […]

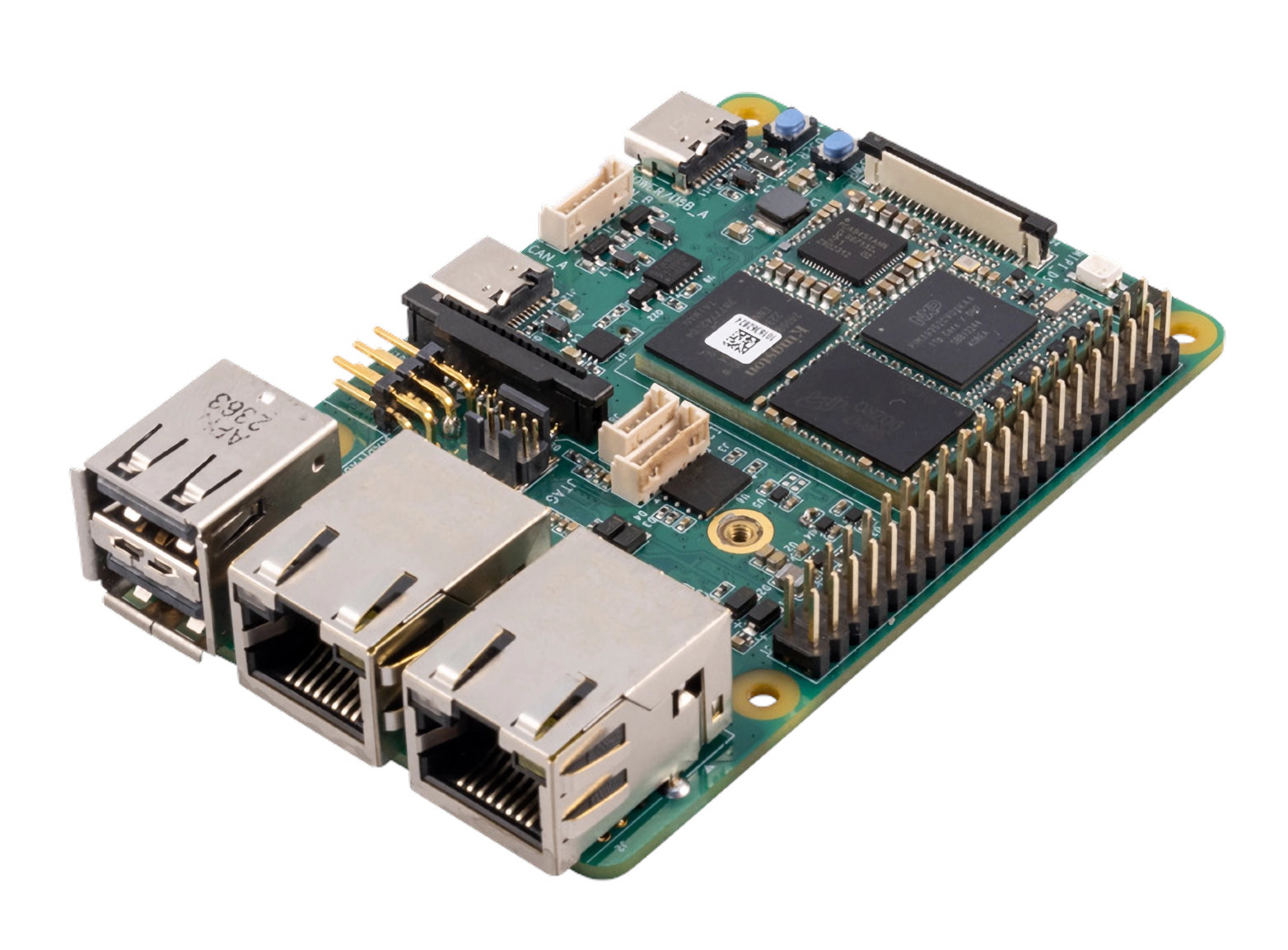

MaaXBoard OSM93 – Business card-sized SBC features NXP i.MX 93 AI SoC, supports Raspberry Pi HATs

MaaXBoard OSM93 is a single board computer (SBC) based on a Size-S OSM module powered by an NXP i.MX 93 Cortex-A55/M33 AI SoC and offered in a business card form factor with support for Raspberry Pi HAT boards through a 40-pin GPIO header and mounting holes. The board also comes with 2GB LPDDR4, 16GB eMMC flash, MIPI CSI and DSI interfaces for optional camera and display modules, two gigabit Ethernet ports, optional support for WiFi 6, Bluetooth 5.3, and 802.15.4, three USB 2.0 ports, and two CAN FD interfaces with on-board transceivers. MaaXBoard OSM93 specifications: SoC – NXP i.MX 93; CPU – 2x Arm Cortex-A55 up to 1.7 GHz, 1x Arm Cortex-M33 up to 250 MHz; GPU – 2D GPU with blending/composition, resize, color space conversion; NPU – 1x Arm Ethos-U65 NPU @ 1 GHz up to 0.5 TOPS; Memory – 640 KB OCRAM w/ ECC; Security – EdgeLock Secure Enclave System […]