Arducam KingKong is a smart edge AI camera built around a Raspberry Pi CM4 and a system-on-module based on the Intel Myriad X AI accelerator. It follows the Raspberry Pi 5-powered Arducam PiNSIGHT camera introduced at the beginning of the year, but this time the aim is to provide a complete Raspberry Pi-based camera rather than an accessory for the Raspberry Pi 4/5. Smart cameras built around the Raspberry Pi CM4 are not new, as we previously covered the EDATEC ED-AIC2020 IP67-rated industrial AI edge camera and the StereoPi v2 stereoscopic camera used to create 3D video and 3D depth maps. The Arducam KingKong adds another option suitable for computer vision applications with an AR0234 global shutter module, PoE support, and a CNC metal enclosure. Arducam KingKong specifications: SoM – Raspberry Pi Compute Module 4 (CM4), by default CM4104000 (wireless, 4GB RAM, Lite with no eMMC flash); AI accelerator – Luxonis OAK SOM BW1099 based on Intel […]

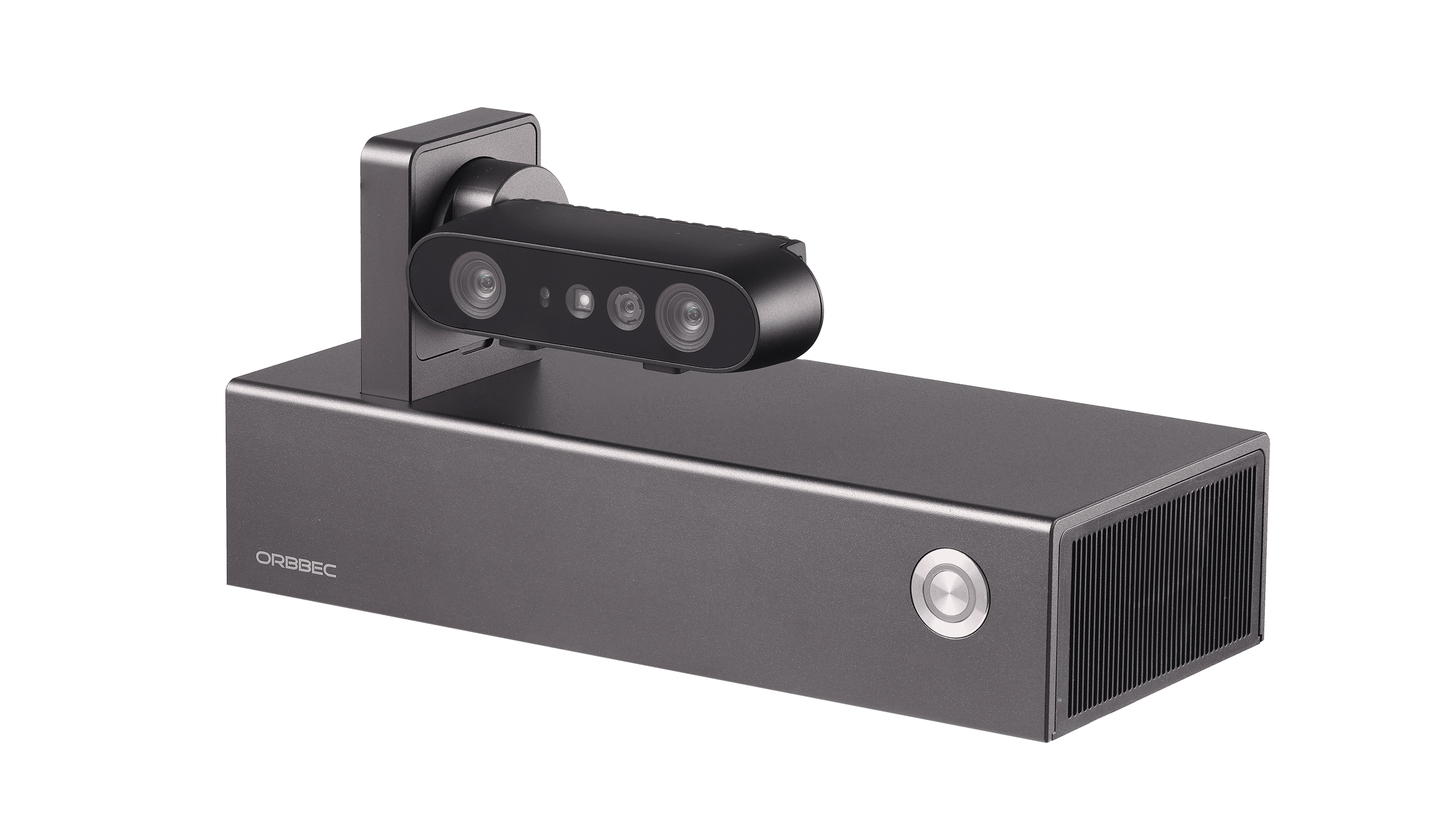

Persee N1 – A modular camera-computer based on the NVIDIA Jetson Nano

The Persee N1 is a modular camera-computer kit recently launched by 3D camera manufacturer Orbbec. Not too long ago, we covered their Femto Bolt 3D depth and RGB USB-C camera. The Persee N1 was designed for 3D computer vision applications and is built on the NVIDIA Jetson platform, combining the quad-core processor of the Jetson Nano with the imaging capabilities of a stereo-vision camera. The Jetson Nano’s 128-core Maxwell GPU makes it well suited for machine learning and AI projects at the edge. The company also offers the Femto Mega, a more advanced and more expensive alternative that uses the same Jetson Nano SoM. The Persee N1 camera-computer also features official support for OpenCV, the open-source computer vision library. The camera is suited for indoor and semi-outdoor operation and uses a custom application-specific integrated circuit (ASIC) for enhanced depth processing. It also provides advanced features like multi-camera synchronization and IMU (inertial measurement […]
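The excerpt doesn't say how Orbbec's ASIC computes depth, but the textbook starting point for any rectified stereo pair is triangulation: depth Z = f × B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below is a generic illustration of that relation; the focal length and baseline values are hypothetical, not Persee N1 specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Return depth in metres for a rectified stereo pair (pinhole camera model).

    focal_px: focal length in pixels
    baseline_m: distance between the two camera centres in metres
    disparity_px: horizontal shift of the same point between left and right images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical optics, NOT Persee N1 specs: 600 px focal length, 5 cm baseline.
for d in (60.0, 30.0, 15.0):
    print(f"disparity {d:>4} px -> depth {depth_from_disparity(600.0, 0.05, d):.2f} m")
```

Halving the disparity doubles the estimated depth, so a one-pixel disparity error matters far more for distant objects than for nearby ones, which is one reason dedicated depth-processing hardware and careful calibration matter.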

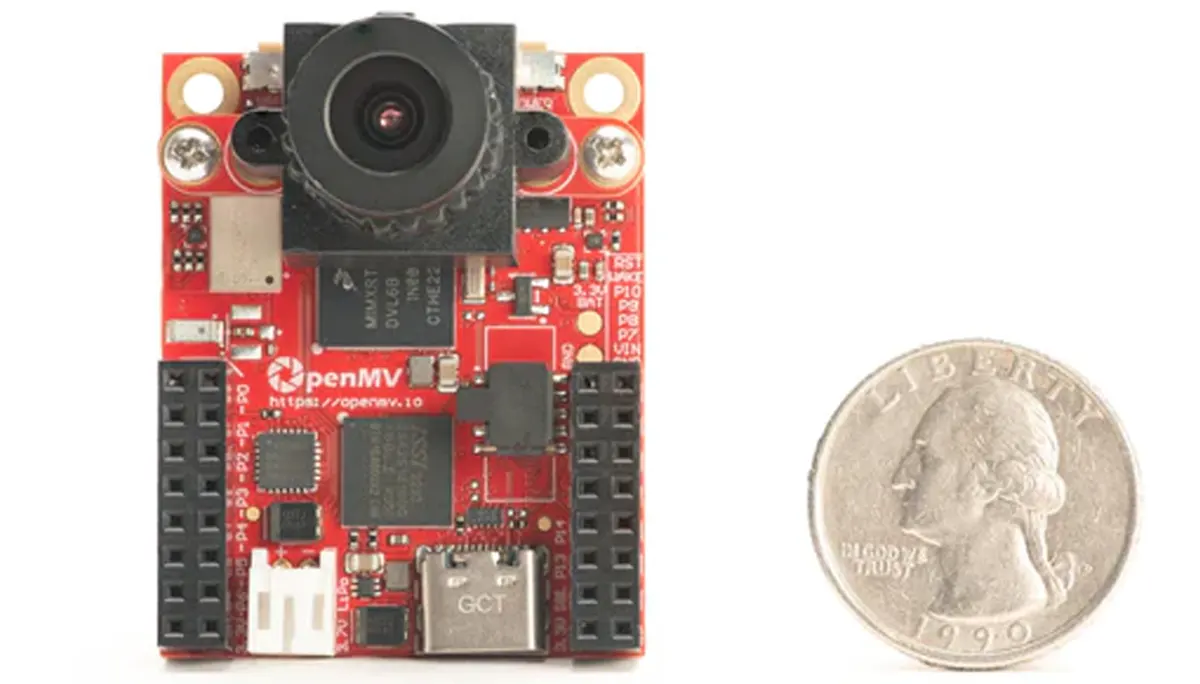

OpenMV CAM RT1062 camera for machine vision is programmable with MicroPython

Following the success of the OpenMV Cam H7 and the original OpenMV VGA Camera, OpenMV recently launched the OpenMV CAM RT1062, powered by NXP’s RT1060 processor. This new camera module integrates a range of features, including a high-speed USB-C (480Mbps) interface, an accelerometer, and a LiPo battery connector for portability. Like its predecessor, it features a removable camera module, and it is built around the OV5640 image sensor, which offers higher resolution and more versatility. However, the OmniVision OV7725 sensor used in the OpenMV Cam H7 has a much higher frame rate and better low-light performance. OpenMV provides a Generic Python Interface Library for USB and WiFi communication and an Arduino Interface Library for I2C, SPI, CAN, and UART communication, which can be used to interface your OpenMV Cam with other systems. To program the board, you can use MicroPython (Python 3) with the OpenMV IDE, […]
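To put the 480Mbps USB figure in context, the sketch below computes an upper bound on how many uncompressed frames per second such a link could carry. It assumes 16-bit RGB565 pixels and the OV5640's 2592 × 1944 full resolution, and it ignores USB protocol overhead, so real throughput will be noticeably lower; treat it as back-of-the-envelope arithmetic, not a measured OpenMV figure.

```python
USB2_HS_BPS = 480_000_000  # USB 2.0 high-speed signalling rate (480 Mbps), from the post


def max_raw_fps(width: int, height: int, bits_per_pixel: int = 16,
                link_bps: int = USB2_HS_BPS) -> float:
    """Upper bound on uncompressed frames/s over a link, ignoring protocol overhead.

    bits_per_pixel defaults to 16, i.e. RGB565 (an assumption for this example).
    """
    return link_bps / (width * height * bits_per_pixel)


# OV5640 full resolution versus QVGA, both as 16-bit RGB565 frames.
for name, (w, h) in {"OV5640 full-res": (2592, 1944), "QVGA": (320, 240)}.items():
    print(f"{name}: at most {max_raw_fps(w, h):.1f} frames/s over USB")
```

At full resolution the raw bound works out to roughly 6 frames per second, while QVGA frames are small enough that the sensor, not the link, becomes the limit. Streaming full-resolution images therefore calls for compression or on-camera processing.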

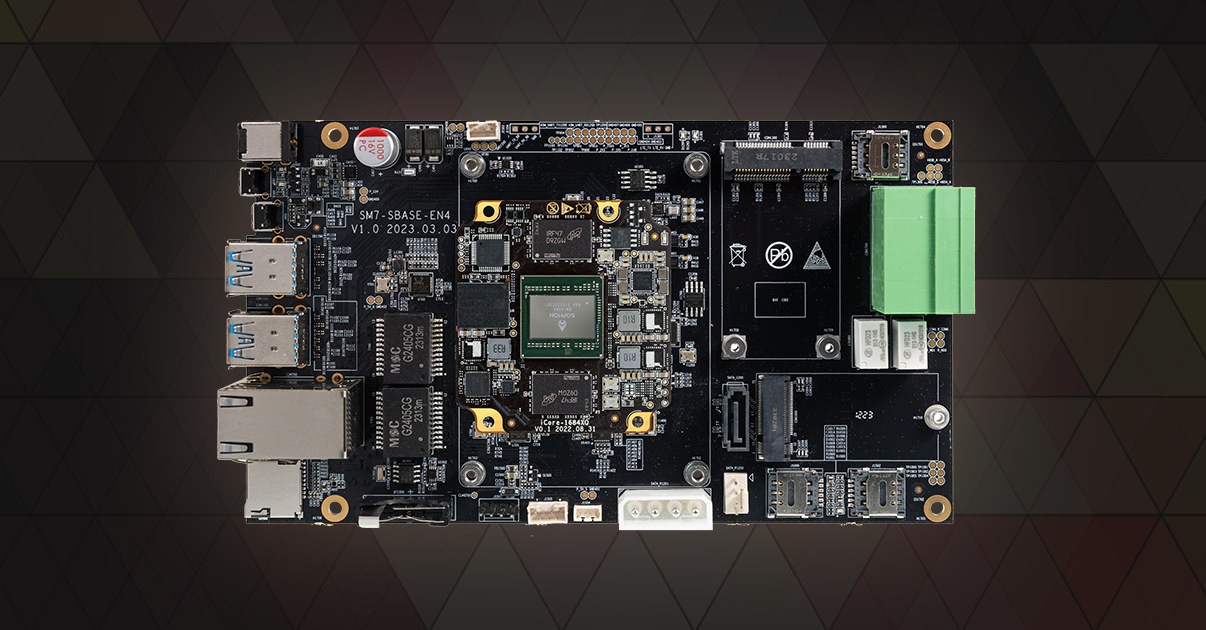

Firefly AIO-1684XQ motherboard features BM1684X AI SoC with up to 32 TOPS for video analytics, computer vision

Firefly AIO-1684XQ is a motherboard designed for computer vision applications and video analytics and based on the SOPHGO SOPHON BM1684X octa-core Cortex-A53 AI SoC, which delivers up to 32 TOPS for AI inference. The headless machine vision board is equipped with 16GB RAM, 64GB eMMC flash, and 128MB SPI flash, and comes with a SATA 3.0 port, dual Gigabit Ethernet, optional 4G LTE or 5G modules, four USB 3.0 ports, and a terminal block with two RS485 interfaces, two relay outputs, and a few GPIOs. Firefly AIO-1684XQ specifications: SoC – SOPHGO SOPHON BM1684X; CPU – Octa-core Arm Cortex-A53 processor @ up to 2.3 GHz; TPU – Up to 32 TOPS (INT8), 16 TFLOPS (FP16/BF16), 2 TFLOPS (FP32); VPU – Up to 32-channel H.265/H.264 1080p25 video decoding, up to 32-channel 1080p25 HD video processing (decoding + AI analysis), up to 12-channel H.265/H.264 1080p25 video encoding; System Memory – 16GB LPDDR4x; Storage – 64GB eMMC flash, 128MB SPI […]
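The VPU and TPU figures above can be turned into per-stream numbers with simple arithmetic. The sketch below computes the aggregate 1080p pixel rate for the 32-channel decode and 12-channel encode limits, and the peak INT8 operations available per frame if the 32 TOPS were split evenly across 32 streams at 25 fps. The even split and 100% utilization are simplifying assumptions; real models never reach the rated peak.

```python
FRAME_1080P = 1920 * 1080  # pixels in one 1080p frame


def pixel_rate(channels: int, fps: int) -> int:
    """Total 1080p pixels per second across all channels."""
    return channels * fps * FRAME_1080P


def peak_ops_per_frame(tops: float, channels: int, fps: int) -> float:
    """Peak operations per frame if the rated TOPS were shared evenly by all streams."""
    return tops * 1e12 / (channels * fps)


print(f"decode: {pixel_rate(32, 25) / 1e6:.1f} Mpixel/s")   # 32 x 1080p25 decoding
print(f"encode: {pixel_rate(12, 25) / 1e6:.1f} Mpixel/s")   # 12 x 1080p25 encoding
print(f"INT8 budget: {peak_ops_per_frame(32, 32, 25) / 1e9:.0f} GOP per frame")
```

The result, about 40 giga-operations per frame at peak, gives a rough ceiling for the size of a detection model that could run on every frame of all 32 streams.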

IP67-rated CM4 AI camera uses Raspberry Pi Compute Module 4 for computer vision applications

EDATEC ED-AIC2020 is an IP67-rated industrial AI camera equipped with a fixed or liquid lens and LED illumination that leverages the Raspberry Pi Compute Module 4 (CM4) to run computer vision applications using OpenCV, Python, and Qt. We’ve previously written about Raspberry Pi Compute Module-based smart cameras such as the Q-Wave Systems EagleEye camera (CM3+) working with OpenCV and LabVIEW NI Vision, and the StereoPi v2 (CM4) with stereo vision. But the EDATEC ED-AIC2020 is the first ready-to-deploy Raspberry Pi CM4 AI camera we’ve covered so far. EDATEC “CM4 AI camera” (ED-AIC2020) specifications: SoM – Raspberry Pi Compute Module 4 with up to 8GB RAM and up to 32GB eMMC flash; Camera – 2.0MP global shutter or 5.0MP rolling shutter, acquisition rate up to 70 FPS, red cross laser aiming point, optional built-in LED illumination; Scanning field – electronic liquid lens or fixed focal length lens; Networking – Gigabit Ethernet M12 port; Communication protocols – EtherNet/IP, PROFINET, Modbus […]

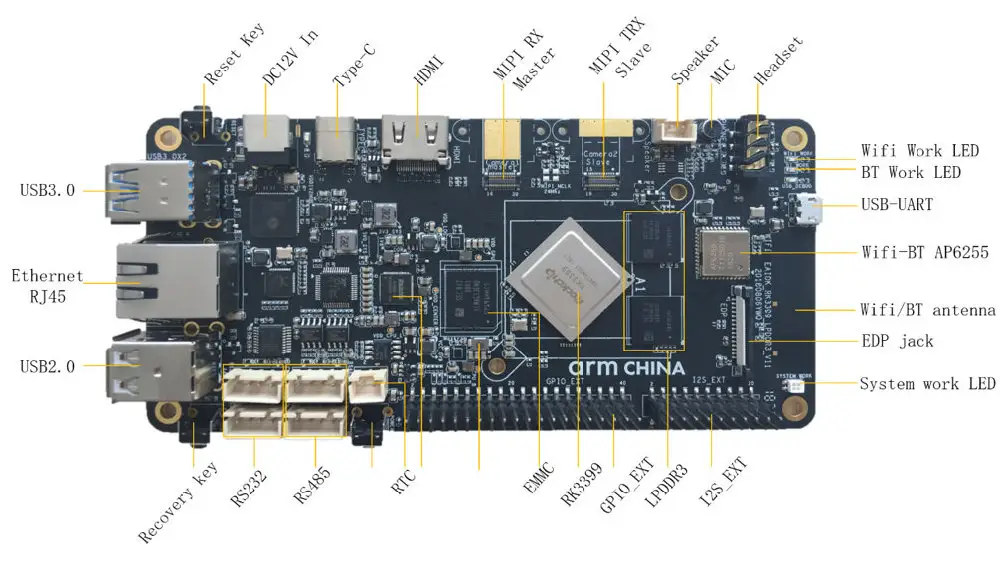

Open AI Lab EAIDK-610 devkit targets computer vision education with OpenCV

Open AI Lab EAIDK-610 is an embedded AI development kit powered by a Rockchip RK3399 processor that was recently added to Linux 6.1 and described as “popularly used by university students” in the kernel changelog. I had never heard of it, and it turns out that’s because it’s popular with students in China, and most of the documentation is written in Chinese. The development board is equipped with 4GB LPDDR3, 16GB eMMC flash, HDMI video output, Gigabit Ethernet and WiFi 5, a few USB ports, a 40-pin GPIO header, and more. EAIDK-610 specifications: SoC – Rockchip RK3399; System Memory – 4GB LPDDR3; Storage – 16GB eMMC flash and MicroSD card slot; Video Output – HDMI 2.0 up to 4Kp60, MIPI DSI up to 1280×720 @ 60 fps, 4-lane eDP 1.3; Audio – Speaker header, built-in microphone, 3.5mm audio jack, I2S header, digital audio via HDMI; Camera I/F – 2x MIPI CSI up to […]

NVIDIA Jetson Nano based AI camera devkit enables rapid computer vision prototyping

ADLINK “AI Camera Dev Kit” is a pocket-sized NVIDIA Jetson Nano devkit with an 8MP image sensor and industrial digital inputs & outputs, designed for rapid AI vision prototyping. The kit also features a Gigabit Ethernet port, a USB-C port for power, data, and video output up to 1080p30, a microSD card with Linux (Ubuntu 18.04), and a micro USB port to flash the firmware. As we’ll see further below, it also comes with drivers and software to quickly get started with AI-accelerated computer vision applications. AI Camera Dev Kit specifications: System-on-Module – NVIDIA Jetson Nano with a quad-core Arm Cortex-A57 CPU, an NVIDIA Maxwell architecture GPU with 128 CUDA cores, 4 GB 64-bit LPDDR4 system memory, and 16 GB eMMC storage; Storage – MicroSD card socket; Camera – ADLINK NEON-series camera module with Sony IMX179 color sensor with rolling shutter, 8MP resolution (3280 × 2464), frame rate (fps) – […]
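The “8MP” label comes from the sensor's 3280 × 2464 pixel array. The short sketch below derives the exact pixel count, the reduced aspect ratio, and the size of one packed raw frame. The 10-bit raw Bayer format is an assumption made for illustration; the excerpt doesn't state which output format the camera module uses.

```python
from math import gcd

WIDTH, HEIGHT = 3280, 2464  # IMX179 resolution from the spec list


def megapixels(w: int, h: int) -> float:
    """Pixel count in millions."""
    return w * h / 1e6


def aspect_ratio(w: int, h: int) -> tuple[int, int]:
    """Width:height reduced to lowest terms."""
    g = gcd(w, h)
    return w // g, h // g


def raw_frame_bytes(w: int, h: int, bits_per_pixel: int = 10) -> int:
    """Bytes per packed raw frame; 10 bits/pixel is an ASSUMED format, not from the post."""
    return w * h * bits_per_pixel // 8


print(f"{megapixels(WIDTH, HEIGHT):.2f} MP, aspect {aspect_ratio(WIDTH, HEIGHT)}, "
      f"{raw_frame_bytes(WIDTH, HEIGHT) / 1e6:.1f} MB per raw frame")
```

The 205:154 ratio is within a fraction of a percent of 4:3, so full-resolution frames have a different shape from the 16:9 1080p video output mentioned above.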

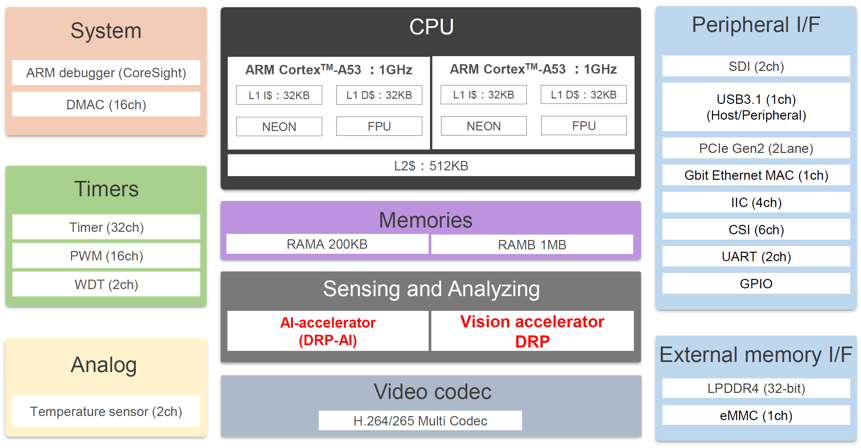

Renesas RZ/V2MA microprocessor embeds AI & OpenCV accelerators for image processing

Renesas has launched the RZ/V2MA dual-core Arm Cortex-A53 microprocessor with a low-power (1 TOPS/W) DRP-AI accelerator and an OpenCV accelerator for rule-based image processing, enabling vision AI applications. The MPU also supports H.265 and H.264 video decoding and encoding, and offers LPDDR4 memory and eMMC flash interfaces, as well as Gigabit Ethernet, a USB 3.1 interface, PCIe Gen 2, and more. The RZ/V2MA targets applications ranging from AI-equipped gateways to video servers, security gates, POS terminals, and robotic arms. Renesas RZ/V2MA specifications: CPU – 2x Arm Cortex-A53 up to 1.0GHz; Memory – 32-bit LPDDR4-3200; Storage – 1x eMMC 4.5.1 flash interface; Vision and Artificial Intelligence accelerators – DRP-AI (1.0 TOPS/W class) and OpenCV Accelerator (DRP); Video – H.265/H.264 multi-codec, with encoding and decoding of H.265 up to 2160p and H.264 up to 1080p; Networking – 1x Gigabit Ethernet; USB – 1x USB 3.1 Gen1 host/peripheral up to 5 […]
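An efficiency rating like “1 TOPS/W” becomes more concrete when applied to a workload: power ≈ required throughput (TOPS) ÷ efficiency (TOPS/W). The sketch below applies that formula to a hypothetical model needing 10 giga-operations per inference at 30 fps. The workload numbers are invented for illustration, and the estimate ignores utilization, memory traffic, and the Cortex-A53 cores, so it is optimistic rather than a measured RZ/V2MA figure.

```python
def accelerator_power_w(gop_per_inference: float, fps: float,
                        tops_per_watt: float = 1.0) -> float:
    """Rough accelerator power: required throughput (TOPS) / efficiency (TOPS/W).

    Assumes the accelerator runs at its rated efficiency, which real workloads
    rarely achieve, so treat the result as an optimistic estimate.
    """
    required_tops = gop_per_inference * fps / 1000.0  # giga-ops/s -> tera-ops/s
    return required_tops / tops_per_watt


# Hypothetical workload: 10 giga-operations per inference, run at 30 fps.
print(f"{accelerator_power_w(10.0, 30.0):.2f} W")
```

Because the relationship is linear, doubling the frame rate or the model size doubles the estimated accelerator power, which is the kind of trade-off a 1 TOPS/W part is meant to keep small for embedded designs.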