The Connectivity Standards Alliance has recently introduced its IoT Device Security Specification 1.0, which consolidates many fragmented security standards into one. This common scheme and certification standard is meant to ensure that devices meet local requirements in each country. With this approach, a single test from the alliance should allow a product to be sold globally without compliance issues. IoT Device Security Specification 1.0 at a glance:

- Unified Security Standard – Integrates the major cybersecurity baselines from the United States, Singapore, and Europe into one comprehensive framework.
- Product Security Verified Mark – A new certification mark that indicates compliance with the IoT Device Security Specification, designed to enhance consumer trust and product marketability.
- No Hardcoded Default Passwords – Ensures all IoT devices utilize unique authentication credentials out of the box, improving initial security.
- Unique Identity for Each Device – Assigns a distinct identity to every device, crucial for traceability and secure […]

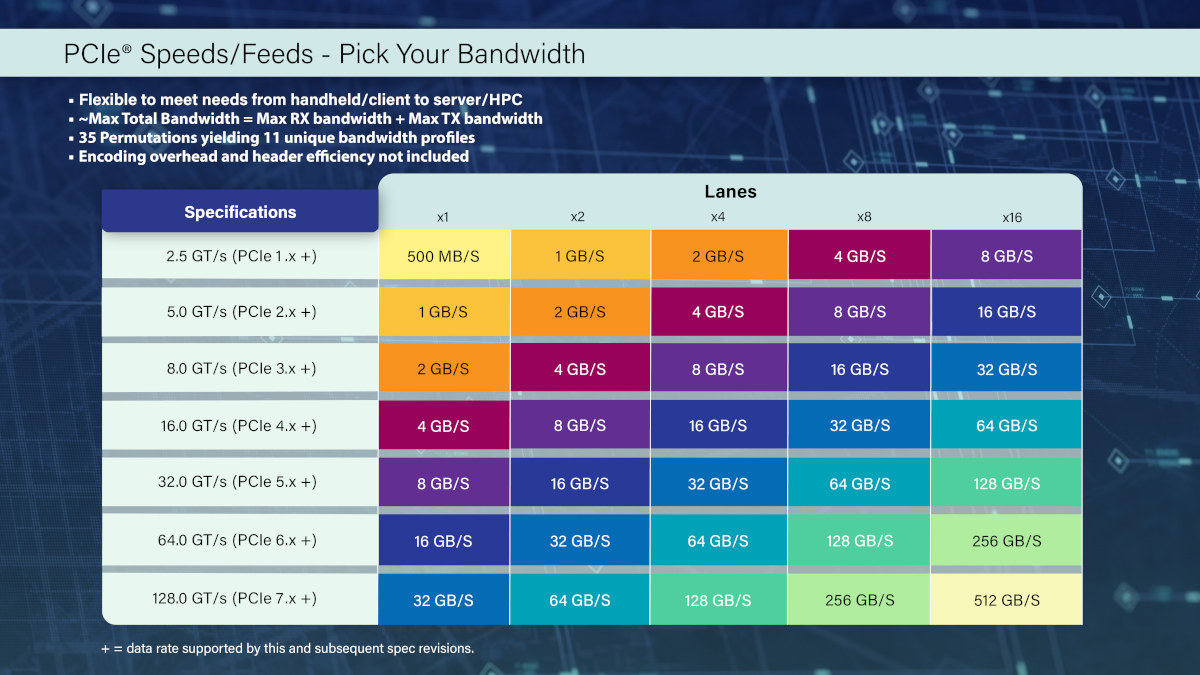

PCIe 7.0 to support up to 512GB/s bidirectional transfer rates

The PCI-SIG first unveiled the PCI Express (PCIe) 7.0 specification at US DevCon in June 2022 with claims of bidirectional data rates of up to 512GB/s in x16 configuration, and the standard is now getting closer to the full release in 2025 with the release of the specification version 0.5. PCIe 7.0 increases data transfer speeds to 128 GT/s per lane, doubling the 64 GT/s of PCIe 6.0 and quadrupling the 32 GT/s of PCIe 5.0, and delivering up to 256 GB/s in each direction in x16 configuration, excluding encoding overhead. In other words, the total maximum bandwidth of a PCIe 7.0 x1 interface (32GB/s) would be equivalent to PCIe Gen3 x16 or PCIe Gen4 x8 as shown in the table below. PCIe 7.0 highlights:

- 128 GT/s raw bit rate and up to 512 GB/s bidirectionally via x16 configuration
- PAM4 (Pulse Amplitude Modulation with 4 levels) signaling
- Doubles the bus frequency […]
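The bandwidth figures quoted above follow from simple arithmetic, which the short Python sketch below reproduces. It assumes one bit per transfer per lane and ignores encoding overhead, as the excerpt does. The 8 GT/s and 16 GT/s rates for PCIe 3.0 and 4.0 are the commonly published values rather than numbers taken from this excerpt, and the function and table names are only illustrative.

```python
# Raw per-lane transfer rates in GT/s.
# 5.0, 6.0 and 7.0 come from the text above; 3.0 and 4.0 are the
# commonly published values (an assumption, not stated in the excerpt).
RAW_GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32, "6.0": 64, "7.0": 128}


def raw_bandwidth_gb_per_s(gen: str, lanes: int, bidirectional: bool = True) -> float:
    """Return the raw bandwidth in GB/s, excluding encoding overhead.

    Assumes one bit per transfer per lane, so GT/s maps directly to Gb/s.
    """
    per_direction = RAW_GT_PER_S[gen] * lanes / 8  # 8 bits per byte
    return per_direction * 2 if bidirectional else per_direction


if __name__ == "__main__":
    for gen, lanes in [("7.0", 16), ("7.0", 1), ("3.0", 16), ("4.0", 8)]:
        total = raw_bandwidth_gb_per_s(gen, lanes)
        print(f"PCIe {gen} x{lanes}: {total:g} GB/s bidirectional")
```

Under these assumptions, a PCIe 7.0 x1 link, a PCIe Gen3 x16 link, and a PCIe Gen4 x8 link all come out at the same raw bidirectional figure, which is the equivalence the excerpt describes.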

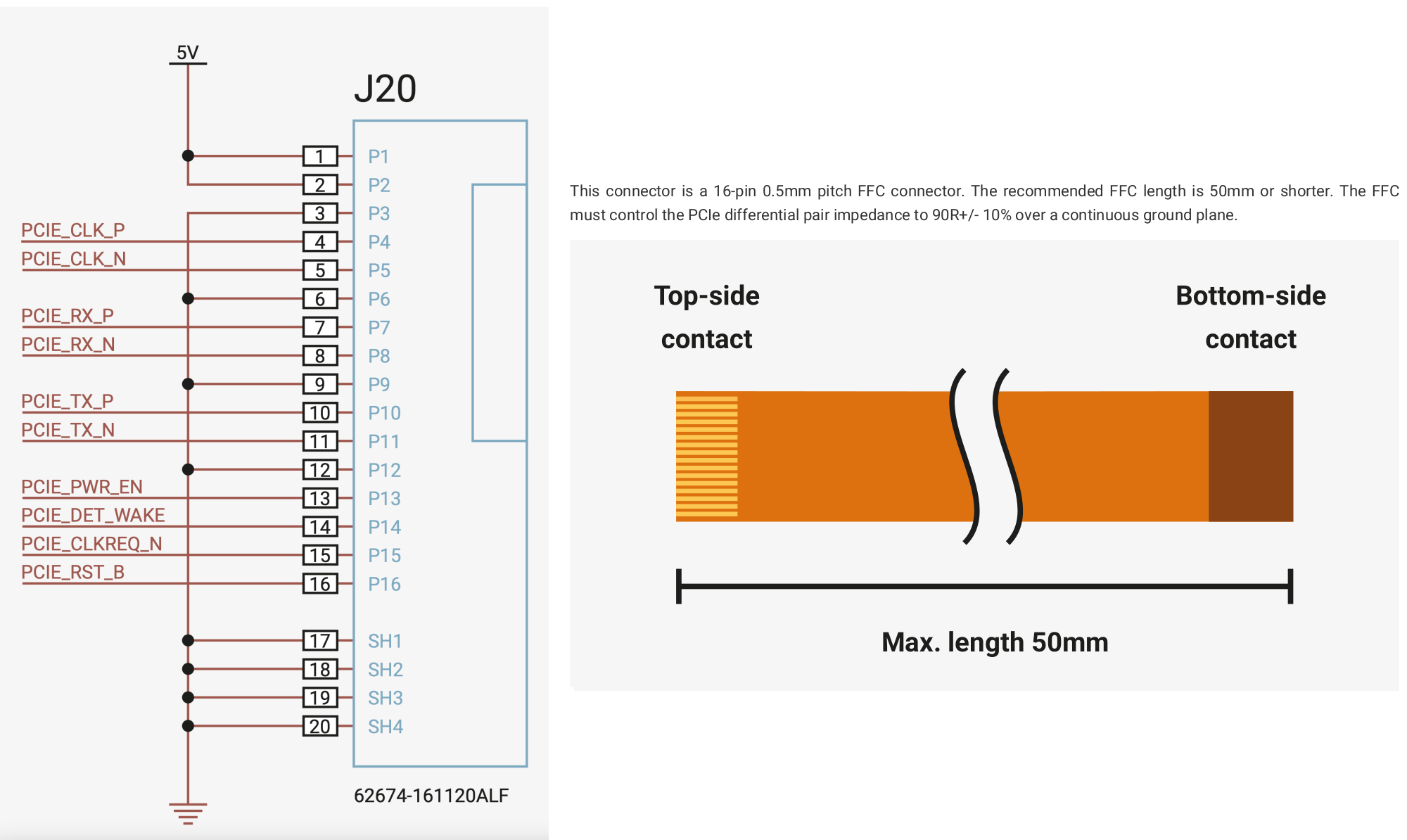

Raspberry Pi releases PCIe FFC connector specifications, new HAT+ standard

Raspberry Pi has released two new specifications: one for the PCIe FFC connector and related cable, and the other for the new Raspberry Pi HAT+ (HAT Plus) standard that’s simpler, takes into account new features in Raspberry Pi 4/5, and has fewer rules around mechanical dimensions. PCIe FFC connector specifications The Raspberry Pi 5 was announced over 2 months ago with a new PCIe FFC connector, and people may have been playing around with it, and even launching products such as an M.2 HAT for the Raspberry Pi 5, since then even though the pinout and specifications were not available. But Raspberry Pi has now released the specifications (PDF) for the PCIe FFC found in the Raspberry Pi 5 and likely future models as well. The 16-pin 0.5mm pitch FFC connector features a single lane PCIe interface, something we knew already, but the pinout diagram and recommendations for the FFC cable […]

FCC and NIST unveil the Cyber Trust Mark, a voluntary US IoT security label

Representatives of the Federal Communications Commission (FCC) and the National Institute of Standards and Technology (NIST) have recently unveiled a U.S. national IoT security label at the White House called the “U.S. Cyber Trust Mark” to inform consumers about the security, safety, and privacy of a specific IoT and Smart Home device. IoT security has been a problem for years with routers shipping with telnet enabled with default usernames and passwords, vulnerabilities in SDKs, unencrypted passwords transmitted over the network, millions of devices with older microcontrollers without built-in hardware security features, etc… There have been industry efforts to solve this such as the Arm PSA initiative, as well as regulations to prevent default usernames/passwords in new devices, but nothing about IoT security that can help a consumer find out if a device is supposed to be secure or not. The Cyber Trust Mark is supposed to address this issue. The […]

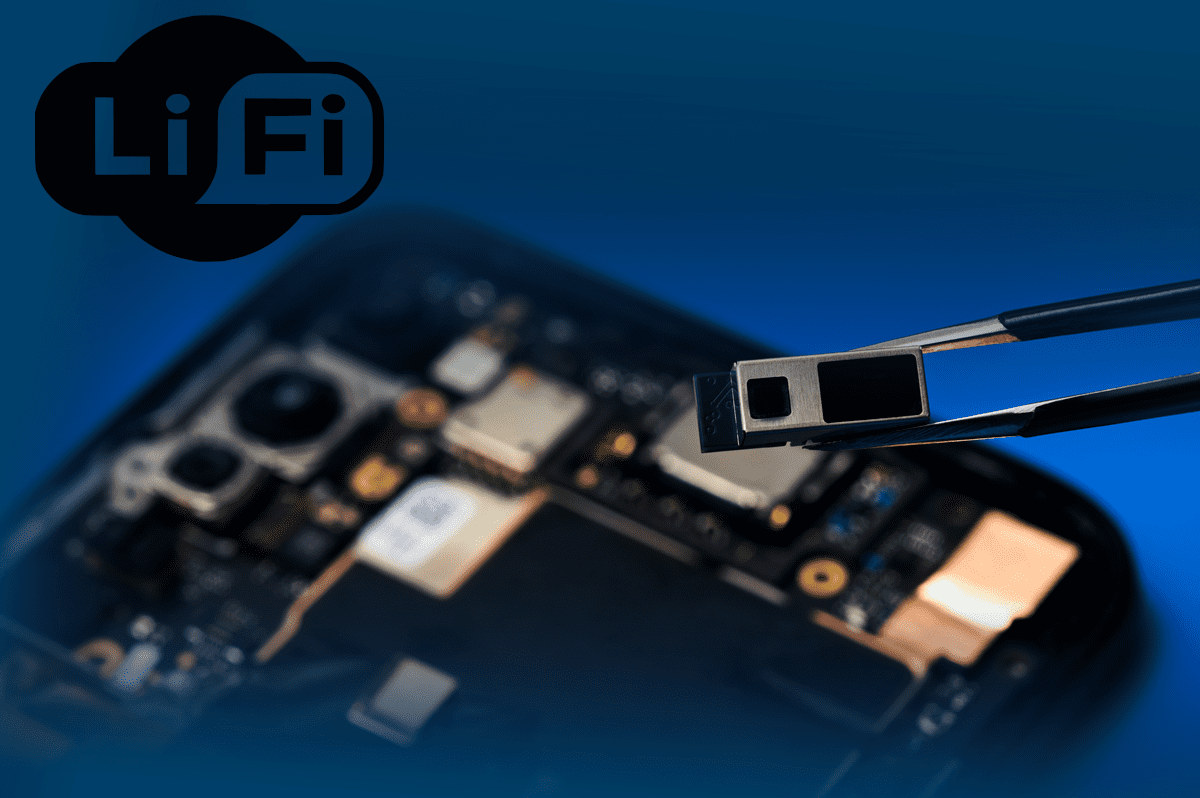

LiFi 802.11bb standard uses light for in-room data transmission up to 224GB/s

The 802.11bb WiFi-like standard, also called LiFi, was ratified in June 2023. It enables data transmission up to 224GB/s at a few meters range within a room using light instead of the RF signals used in most other wireless standards. The technology has been worked on for many years, and we first covered (a version of) LiFi in 2014 that was still part of the IEEE 802.15 standard with speeds up to 1 Gbps. But the Light Communications 802.11bb Task Group was only formed in 2018, chaired by pureLiFi and supported by Fraunhofer HHI, and led to the ratification of the IEEE 802.11bb standard last month. In a typical LiFi setup, you’d have a LiFi-capable router connected to your local network and the Internet, a LiFi-enabled light bulb on a ceiling, and one or more LiFi receivers. From the end-user perspective, it would work like accessing a WiFi access point. We’re […]

Bluetooth 5.4 adds electronic shelf label (ESL) support

The Bluetooth Special Interest Group (SIG) has just adopted the Bluetooth 5.4 Core Specification with features such as PAwR and EAD designed for Electronic Shelf Label (ESL) systems. The Bluetooth 5.3 Core Specification was adopted in August 2021 with various improvements, and Bluetooth 5.4 now follows with features that appear to be mainly interesting for large-scale Bluetooth networks with support for bi-directional communication with thousands of end nodes from a single access point, as would be the case for Electronic Shelf Label or Shelf Sensor systems. Four new features have been added to Bluetooth 5.4: Periodic Advertising with Responses (PAwR) – PAwR is a new Bluetooth Low Energy (LE) logical transport that provides a way to perform energy-efficient, bi-directional communication in a large-scale one-to-many topology with up to 32,640 devices. Devices can also be allocated to groups, allowing them to listen only to their group’s transmissions. An Electronic […]
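As a rough illustration of where a figure like 32,640 can come from, the Python sketch below multiplies a number of groups by a number of devices per group. It assumes an addressing scheme with a 7-bit group ID and an 8-bit device ID in which one ID value is reserved (for example for broadcast). These field widths and the names used here are assumptions made for the example, not details given in the excerpt.

```python
# Assumed address field widths (not stated in the excerpt above).
GROUP_ID_BITS = 7   # assumption: 2**7 = 128 groups
DEVICE_ID_BITS = 8  # assumption: 2**8 = 256 IDs per group
RESERVED_IDS = 1    # assumption: one ID per group reserved, e.g. for broadcast


def max_addressable_devices(
    group_bits: int = GROUP_ID_BITS,
    device_bits: int = DEVICE_ID_BITS,
    reserved_ids: int = RESERVED_IDS,
) -> int:
    """Return groups x usable device IDs per group for the assumed scheme."""
    groups = 2 ** group_bits
    devices_per_group = 2 ** device_bits - reserved_ids
    return groups * devices_per_group


if __name__ == "__main__":
    print(max_addressable_devices())
```

With these assumed widths, 128 groups of 255 devices give 32,640 addressable devices, matching the upper limit quoted for PAwR.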

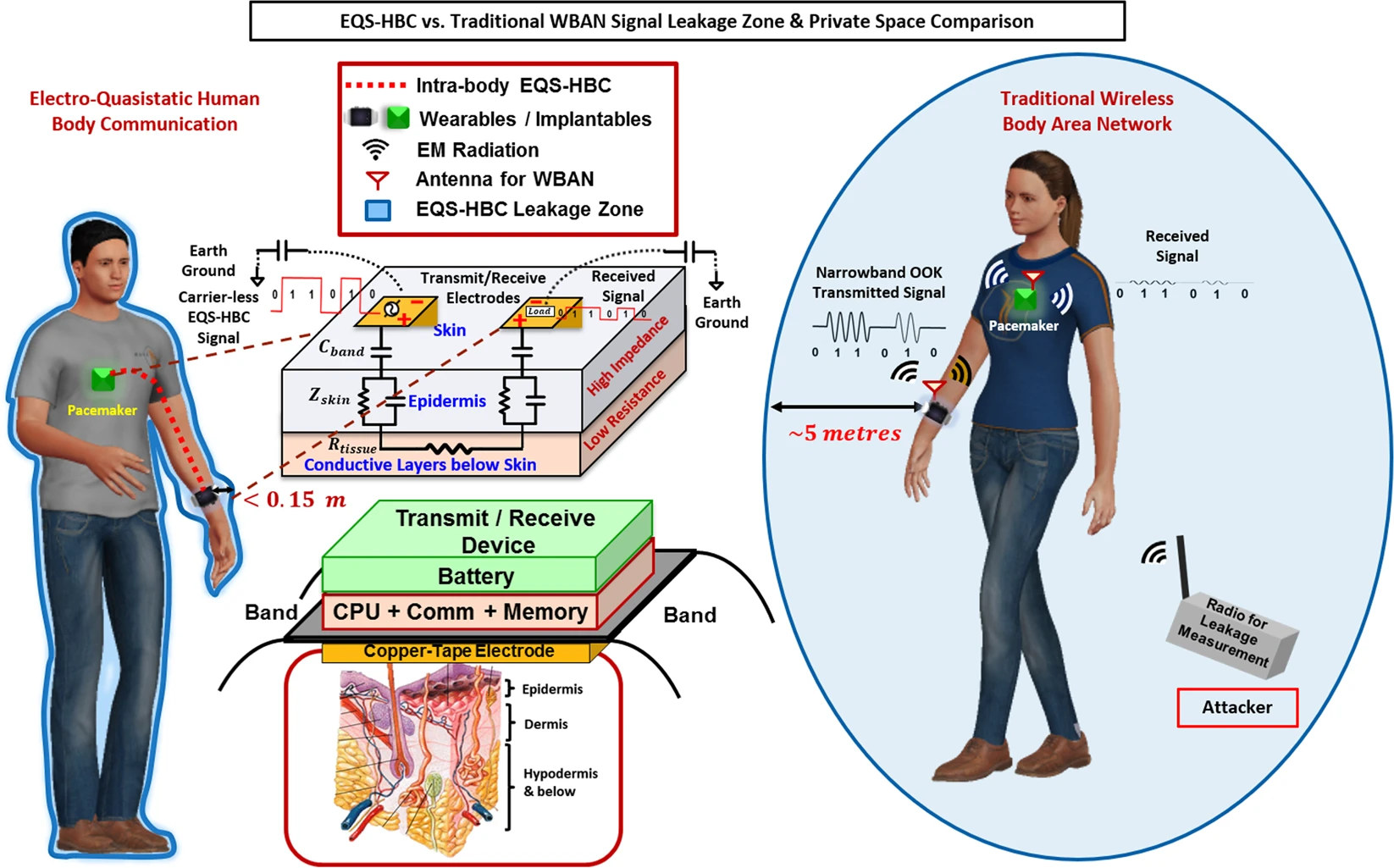

The Wi-R protocol relies on the body for data communication, consumes up to 100x less energy per bit than Bluetooth

The Wi-R protocol is a non-radiative near-field communication technology that uses Electro-Quasistatic (EQS) fields for communication, enabling the body to be used as a conductor, and that consumes up to 100x less energy per bit compared to Bluetooth. In a sense, Wi-R combines wireless and wired communication. Wi-R itself only has a wireless range of 5 to 10cm, but since it also uses the body to which the Wi-R device is attached, the range on the conductor is up to 5 meters. While traditional wireless solutions like Bluetooth create a 5 to 10-meter field around a person, the Wi-R protocol creates a body area network (BAN) that could be used to connect a smartphone to a pacemaker, smartwatch, and/or headphones with higher security/privacy and longer battery life. One of the first Wi-R chips is the Ixana YR11 with up to 1Mbps data rate, and they are working on a YR21 […]

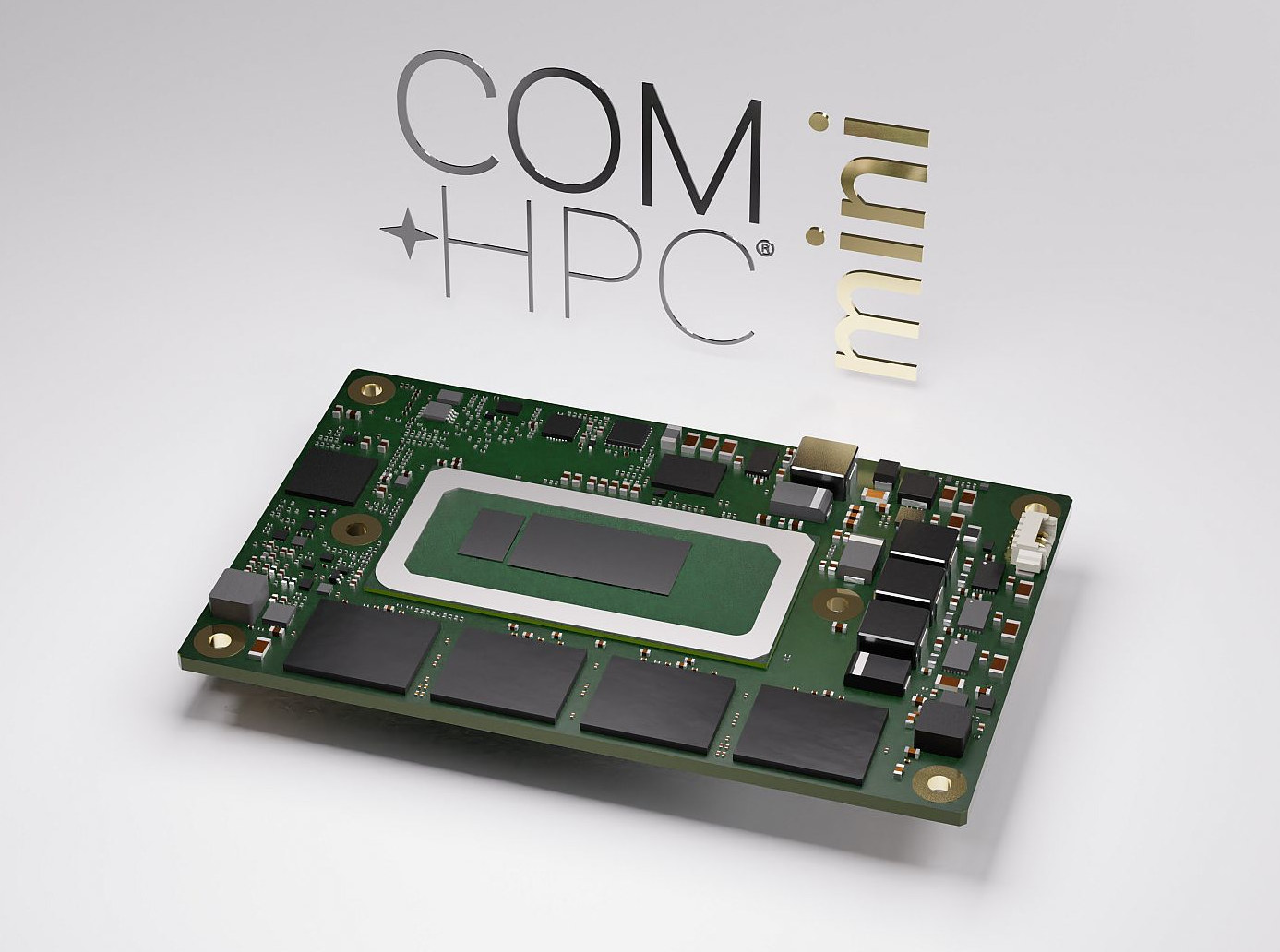

Credit card-sized COM-HPC Mini modules to support PCIe Gen4 and Gen5 interfaces

PICMG has announced that the COM-HPC Mini form factor’s pinout and dimensions definitions were finalized, with the tiny credit card-sized modules able to handle PCIe Gen4 and Gen5 interfaces, provided the selected CPU supports them. The COM-HPC “High-Performance Computing” form factor was created a few years ago due to the lack of interfaces on the COM Express form factor with “only” 440 pins and potential issues handling PCIe Gen 4 clock speeds and throughputs. So far, we had COM-HPC Client Type modules from 95 x 120mm (Size A) to 160 x 120mm (Size C) and Server Type modules with either 160 x 160mm (Size D) or 200 x 160mm (Size E) dimensions. The COM-HPC Mini brings a smaller (95 x 70 mm) credit card-sized form factor to the COM-HPC standard. The way they cut the size of the COM-HPC Size A form factor by half […]