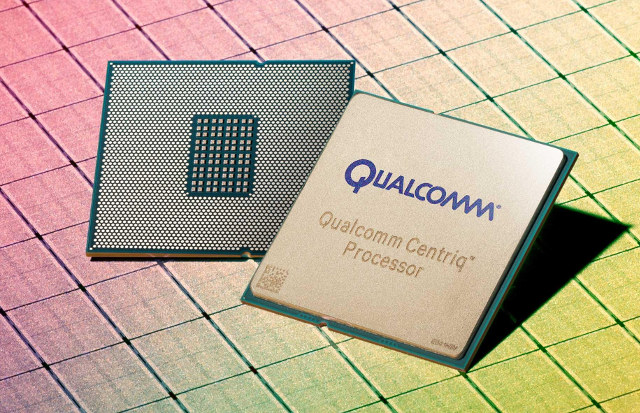

Qualcomm Centriq 2400 ARM Server-on-Chip has been four years in the making. The company announced sampling in Q4 2016 using 10nm FinFET process technology, with the SoC featuring up to 48 Qualcomm Falkor ARMv8 CPU cores optimized for datacenter workloads. More recently, Qualcomm provided a few more details about the Falkor core, a fully custom, 64-bit only micro-architecture based on ARMv8 / AArch64. The SoC has now formally launched, with the company announcing commercial shipments of Centriq 2400 SoCs.

Qualcomm Centriq 2400 key features and specifications:

- CPU – Up to 48 physical ARMv8 compliant 64-bit only Falkor cores @ 2.2 GHz (base frequency) / 2.6 GHz (peak frequency)
- Cache – 64 KB L1 instruction cache with 24 KB single-cycle L0 cache, 512 KB L2 cache per duplex; 60 MB unified L3 cache; Cache QoS
- Memory – 6 channels of DDR4 2667 MT/s for up to 768 […]
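As a rough illustration of what six channels of DDR4 at 2667 MT/s mean in practice, the sketch below estimates the theoretical peak memory bandwidth. It assumes a standard 64-bit (8-byte) data bus per DDR4 channel, which the excerpt does not state; the function name and parameters are hypothetical and chosen only for this example.

```python
def peak_bandwidth_gb_s(channels, mega_transfers_per_s, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s (decimal gigabytes).

    Assumption: each channel moves bus_width_bits per transfer
    (64 bits for a standard DDR4 channel, not stated in the excerpt).
    """
    bytes_per_transfer = bus_width_bits // 8
    bytes_per_second = channels * mega_transfers_per_s * 1e6 * bytes_per_transfer
    return bytes_per_second / 1e9


# Figures taken from the spec list: 6 channels of DDR4 at 2667 MT/s.
centriq_peak = peak_bandwidth_gb_s(channels=6, mega_transfers_per_s=2667)
print(f"Theoretical peak: {centriq_peak:.1f} GB/s")
```

This is an upper bound only; sustained bandwidth on real workloads will be lower.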

GIGABYTE MA10-ST0 Server Motherboard is Powered by Intel Atom C3958 “Denverton” 16-Core SoC

Last year, we wrote about Intel Atom C3000 series processors for micro-servers, with the post also including some details about the MA10-ST0 motherboard. GIGABYTE has finally launched the mini-ITX board with an unannounced Atom C3958 16-core Denverton processor.

GIGABYTE MA10-ST0 server board specifications:

- Processor – Intel Atom C3958 16-core processor @ up to 2.0GHz with 16MB L2 cache (31W TDP)
- System Memory – 4x DDR4 slots for dual-channel memory @ 1866/2133/2400 MHz with up to 128GB ECC R-DIMM, up to 64GB for ECC/non-ECC UDIMM
- Storage
  - 32GB eMMC flash
  - 4x Mini-SAS for up to 16x SATA 6Gb/s ports
  - 2x Mini-SAS ports are shared with PCIe x8 slot
- Connectivity
  - 2x 10Gb/s SFP+ LAN ports
  - 2x 1Gb/s LAN ports (Intel I210-AT)
  - 1x 10/100/1000 management LAN
- Video – VGA port up to 1920×1200@60Hz 32bpp; Aspeed AST2400 chipset with 2D Video Graphic Adapter with PCIe bus interface
- USB – 2x USB 2.0 ports
- Expansion Slots […]

SolidRun MACCHIATOBin Mini-ITX Networking Board is Now Available for $349 and Up

SolidRun MACCHIATOBin is a mini-ITX board powered by a Marvell ARMADA 8040 quad core Cortex A72 processor @ up to 2.0 GHz and designed for networking and storage applications thanks to 10 Gbps, 2.5 Gbps, and 1 Gbps Ethernet interfaces, as well as three SATA ports. The company is now taking orders for the board (FCC waiver required), with prices starting at $349 with 4GB RAM.

MACCHIATOBin board specifications:

- SoC – ARMADA 8040 (88F8040) quad core Cortex A72 processor @ up to 2.0 GHz with accelerators (packet processor, security engine, DMA engines, XOR engines for RAID 5/6)
- System Memory – 1x DDR4 DIMM with optional ECC and single/dual chip select support; up to 16GB RAM
- Storage – 3x SATA 3.0 ports, micro SD slot, SPI flash, eMMC flash
- Connectivity – 2x 10Gbps Ethernet via copper or SFP, 2.5Gbps via SFP, 1x Gigabit Ethernet via copper
- Expansion – 1x PCIe-x4 3.0 slot, Marvell […]

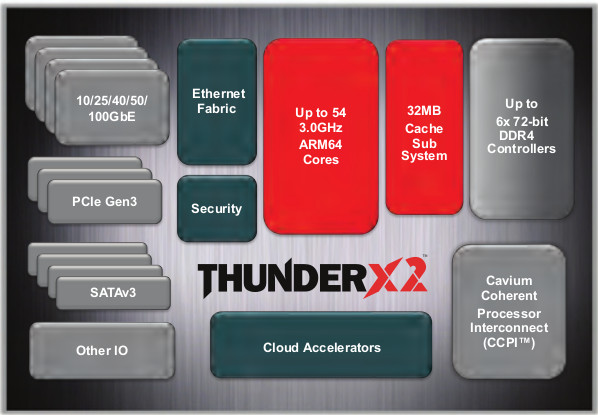

Cavium introduces 54 cores 64-bit ARMv8 ThunderX2 SoC for Servers with 100GbE, SATA 3, PCIe Gen3 Interfaces

Cavium announced their first 64-bit ARM Server SoCs with the 48-core ThunderX at Computex 2014. Two years later, the company has now introduced the second generation, aptly named ThunderX2, with 54 64-bit ARM cores @ up to 3.0 GHz and promising two to three times more performance than the previous generation.

Key features of the new server processor include:

- 2nd generation of full custom Cavium ARM core; multi-issue, fully OOO; 2.4 to 2.8 GHz in normal mode, up to 3 GHz in Turbo mode
- Up to 54 cores per socket delivering > 2-3X socket level performance compared to ThunderX
- Cache – 64K I-Cache and 40K D-Cache, highly associative; 32MB shared Last Level Cache (LLC)
- Single and dual socket configuration support using 2nd generation of Cavium Coherent Interconnect with > 2.5X coherent bandwidth compared to ThunderX
- System Memory
  - 6x DDR4 memory controllers per socket supporting up to 3 TB RAM in […]

openSUSE 12.2 for ARM is Now Available for Beagleboard, Pandaboard, Efika MX and More

The first stable release of openSUSE for ARM has just been announced. openSUSE 12.2 for ARM is officially available for the Beagleboard, Beagleboard xM, Pandaboard, Pandaboard ES, and Versatile Express (QEMU), and the rootfs can be mounted with chroot. “Best effort” ports have also been made for the Calxeda Highbank server, i.MX53 Loco development board, CuBox computer, Origen Board and Efika MX smart top. Work is also apparently being done on a Raspberry Pi port, which should be available for the next release. openSUSE developers explain that almost all of openSUSE builds and runs on these platforms (about 5000 packages). Visit “OpenSUSE on your ARM board” for download links and instructions for a specific ARM board. More details are available on the wiki page. openSUSE has limited resources for ARM development, so if you’d like to help with development (e.g. fixing builds), visit the ARM distribution howto page to find out how to get […]

Wyse Announced Two Linux Thin clients: Z50S and Z50D

Wyse Technology announced at VMworld 2011 that its fastest thin clients ever, the Z90D7 and Z90DW, are now shipping. You can have a look at AMD Embedded G-Series mini-PC, motherboard and thin client for details about the devices. In addition, Wyse also introduced two new Linux-based members of its Z class family: the Wyse Z50S and Wyse Z50D. Both thin clients run Wyse Enhanced SUSE Linux Enterprise. The press release also indicated that “the Z50 thin clients are built on the same exact advanced single and dual core processor hardware platform as the Wyse Z90 thin clients, the upcoming Linux-based Wyse Z50 promises more of the same industry leading power and capability on an enterprise-class Linux operating system”. Wyse did not provide further details, but based on the statement above, we can probably assume that the Z50S will use the single core AMD G-T52R and the Z50D will be […]

Bootloader to OS with Unified Extensible Firmware Interface (UEFI)

Unified Extensible Firmware Interface (UEFI) is a specification detailing an interface that helps hand off control of the system from the pre-boot environment (i.e. after the system is powered on, but before the operating system starts) to an operating system, such as Windows or Linux. UEFI aims to provide a clean interface between operating systems and platform firmware at boot time, and supports an architecture-independent mechanism for initializing add-in cards. UEFI will over time replace vendor-specific BIOS. It also allows for fast boot and supports large hard drives (> 2.2 TB). There are several documents fully defining the UEFI Specification, API and testing requirements: The UEFI Specification (version 2.3.1) describes an interface between the operating system (OS) and the platform firmware. It describes the requirements for the following components, services and protocols: Boot Manager Protocols – Compression Algorithm Specification EFI System Table Protocols – ACPI Protocols GUID Partition Table (GPT) […]
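The 2.2 TB figure mentioned above is commonly explained by the legacy MBR partition table, which stores sector addresses as 32-bit values, whereas GPT uses 64-bit addresses. The short sketch below works through that arithmetic, assuming 512-byte sectors (an assumption, since the excerpt does not state a sector size); the constant and function names are made up for this example.

```python
SECTOR_SIZE_BYTES = 512  # assumption: classic 512-byte logical sectors
MBR_LBA_BITS = 32        # MBR stores logical block addresses in 32 bits
GPT_LBA_BITS = 64        # GPT stores logical block addresses in 64 bits


def max_addressable_bytes(lba_bits, sector_size=SECTOR_SIZE_BYTES):
    """Largest capacity addressable with the given LBA width."""
    return (2 ** lba_bits) * sector_size


mbr_limit = max_addressable_bytes(MBR_LBA_BITS)
gpt_limit = max_addressable_bytes(GPT_LBA_BITS)

print(f"MBR limit: {mbr_limit / 1e12:.2f} TB")  # decimal terabytes
print(f"GPT limit: {gpt_limit / 1e21:.2f} ZB")  # decimal zettabytes
```

With these assumptions the MBR limit works out to 2,199,023,255,552 bytes, which is where the roughly 2.2 TB boundary comes from.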