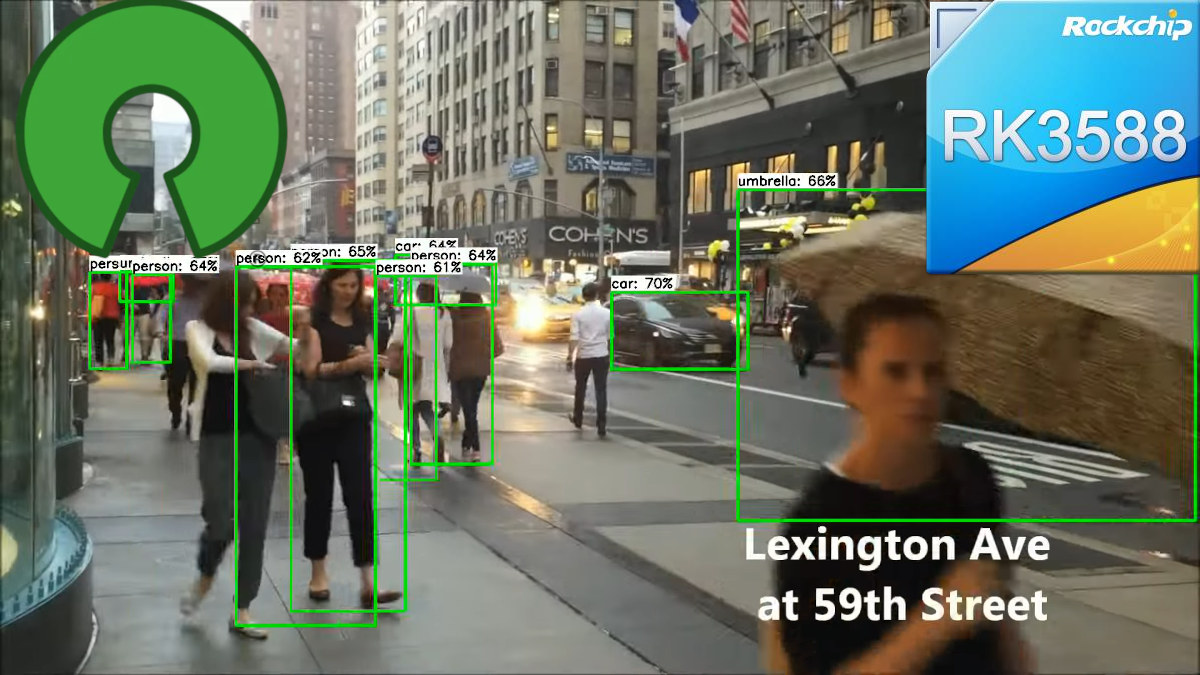

Tomeu Vizoso has been working on an open-source driver for the NPU (Neural Processing Unit) found in the Rockchip RK3588 SoC over the last couple of months, and the project has progressed nicely, with object detection running at 30 fps using the SSDLite MobileDet model on just one of the AI accelerator’s three cores. Many recent processors include AI accelerators that rely on closed-source drivers, but we had already seen reverse-engineering work on the Allwinner V831’s NPU a few years ago, and earlier this year, we noted that Tomeu Vizoso had released the Etnaviv open-source driver that works on the Amlogic A311D’s Vivante NPU. Tomeu has now also started porting his Teflon TensorFlow Lite driver to the Rockchip RK3588 NPU, which is closely based on NVIDIA’s open-source NVDLA IP. He started his work in March, leveraging the reverse-engineering work already done by Pierre-Hugues Husson and Jasbir Matharu, and was […]

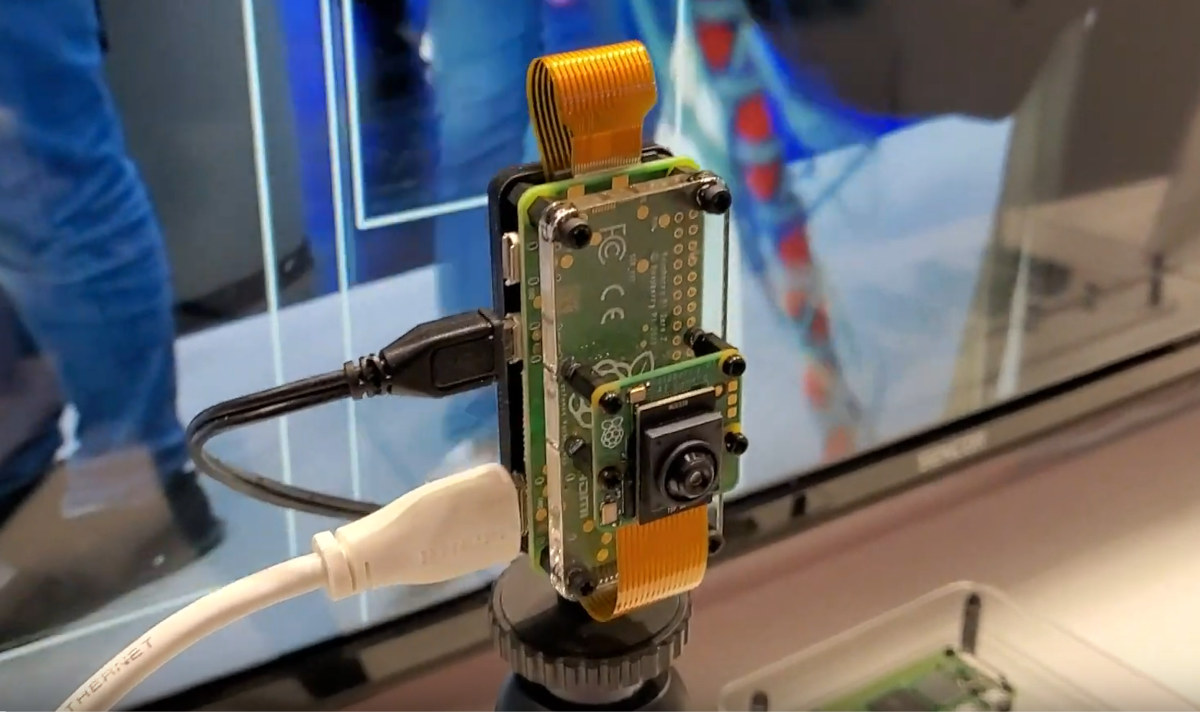

Raspberry Pi at Embedded World 2024: AI camera, M.2 HAT+ M Key board, and 15.6-inch monitor

While Raspberry Pi has not officially announced anything new for Embedded World 2024 so far, the company is currently showcasing several new products there, including an AI camera built around a Raspberry Pi Zero 2 W and a Sony IMX500 AI sensor, the long-awaited M.2 HAT+ M Key board, and a 15.6-inch monitor.

Raspberry Pi AI camera

Raspberry Pi AI Camera kit content and basic specs:

- SBC – Raspberry Pi Zero 2 W with Broadcom BCM2710A1 quad-core Arm Cortex-A53 @ 1GHz (overclockable to 1.2 GHz), 512MB LPDDR2
- AI Camera – Sony IMX500 intelligent vision sensor, 76-degree field of view, manually adjustable focus
- 20cm cables

The kit comes preloaded with a MobileNet machine vision model, but users can also import custom TensorFlow models. This new kit is not really a surprise, as we mentioned that Sony and Raspberry Pi were working on exactly this when we covered the Sony IMX500 sensor. I got the information from […]

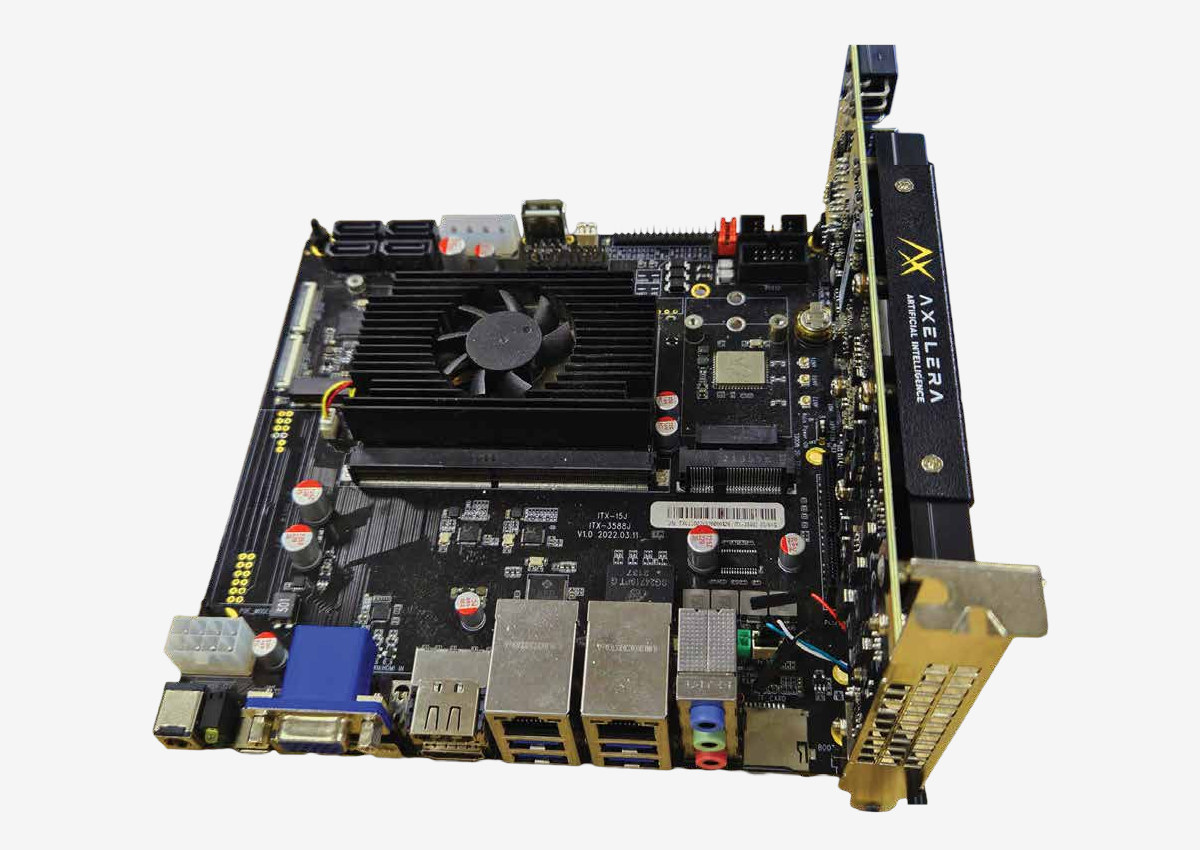

Axelera Metis PCIe Arm AI evaluation kit combines Firefly ITX-3588J mini-ITX motherboard with 214 TOPS Metis AIPU PCIe card

Axelera has announced the general availability of several Metis PCIe AI Evaluation Kits that combine the company’s 214 TOPS Metis AIPU PCIe card with x86 platforms such as the Dell 3460XE workstation, Lenovo ThinkStation P360 Ultra computers, and Advantech MIC-770v3 or ARC-3534 industrial PCs, or with the Arm-based Firefly ITX-3588J mini-ITX motherboard powered by a Rockchip RK3588 octa-core Cortex-A76/A55 SoC. We’ll look at the latter in detail in this post. When Axelera introduced the Metis M.2 AI accelerator module in January 2023, I was both impressed by and doubtful of the company’s performance claims, since packing a 214 TOPS Metis AIPU into a power-limited M.2 module seemed like a challenge. But it was hard to check independently since devkits were not available yet, and the company only started its early-access program in August last year. Now, anybody with a budget of 899 Euros or more can try out their larger Metis […]

Hailo-10 M.2 Key-M module brings Generative AI to the edge with up to 40 TOPS of performance

Hailo-10 is a new M.2 Key-M module that brings generative AI capabilities to the edge with up to 40 TOPS of performance at low power. It targets AI PCs and currently supports only Windows 11 on x86 or AArch64 hosts. Hailo claims the Hailo-10 is faster and more energy efficient than the integrated neural processing units (NPUs) found in Intel SoCs, delivering at least twice the performance at half the power of Intel’s Core Ultra “AI Boost” NPU.

Hailo-10 module specifications:

- AI accelerator – Hailo-10H
- System memory – 8GB LPDDR4 on module
- Host interface – 4-lane PCIe Gen 3
- Power consumption – Less than 3.5W (typical) for the chip
- Form factor – M.2 Key M 2242 / 2280
- Supported AI frameworks – TensorFlow, TensorFlow Lite, Keras, PyTorch & ONNX

The Hailo-10 can run Llama2-7B at up to 10 tokens per second (TPS) at under 5W […]
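
A few of the Hailo-10 figures above can be turned into derived numbers with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a benchmark: it assumes the standard PCIe Gen 3 signaling rate (8 GT/s per lane with 128b/130b encoding), treats power as constant while generating tokens, and reads "twice the performance at half the power" as a pure ratio. The function names are hypothetical helpers for illustration.

```python
# Back-of-the-envelope figures derived from the Hailo-10 numbers quoted above.
# Assumptions: PCIe Gen 3 = 8 GT/s per lane with 128b/130b line encoding;
# constant power draw during token generation. Not measured results.

PCIE_GEN3_GT_PER_S = 8.0          # gigatransfers per second, per lane
PCIE_GEN3_ENCODING = 128 / 130    # 128b/130b line-encoding efficiency


def pcie_gen3_bandwidth_gbytes(lanes: int) -> float:
    """One-direction PCIe Gen 3 bandwidth in GB/s after line encoding,
    before packet/protocol overhead."""
    return PCIE_GEN3_GT_PER_S * PCIE_GEN3_ENCODING * lanes / 8


def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token, assuming constant power draw."""
    return power_watts / tokens_per_second


def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Efficiency multiple given a performance multiple and a power multiple."""
    return perf_ratio / power_ratio


if __name__ == "__main__":
    # 4-lane PCIe Gen 3 host interface
    print(f"PCIe Gen 3 x4: ~{pcie_gen3_bandwidth_gbytes(4):.2f} GB/s per direction")
    # Claimed operating point: 10 tokens/s at under 5 W -> below 0.5 J per token
    print(f"Llama2-7B: < {joules_per_token(5.0, 10.0):.2f} J/token")
    # "at least twice the performance at half the power" -> at least 4x perf/W
    print(f"vs Core Ultra NPU: >= {relative_perf_per_watt(2.0, 0.5):.0f}x perf/W")
```

In other words, Hailo’s comparison with Intel’s NPU amounts to a claimed efficiency advantage of at least 4x in performance per watt, and the x4 Gen 3 link offers roughly 3.9 GB/s in each direction between the module and the host.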

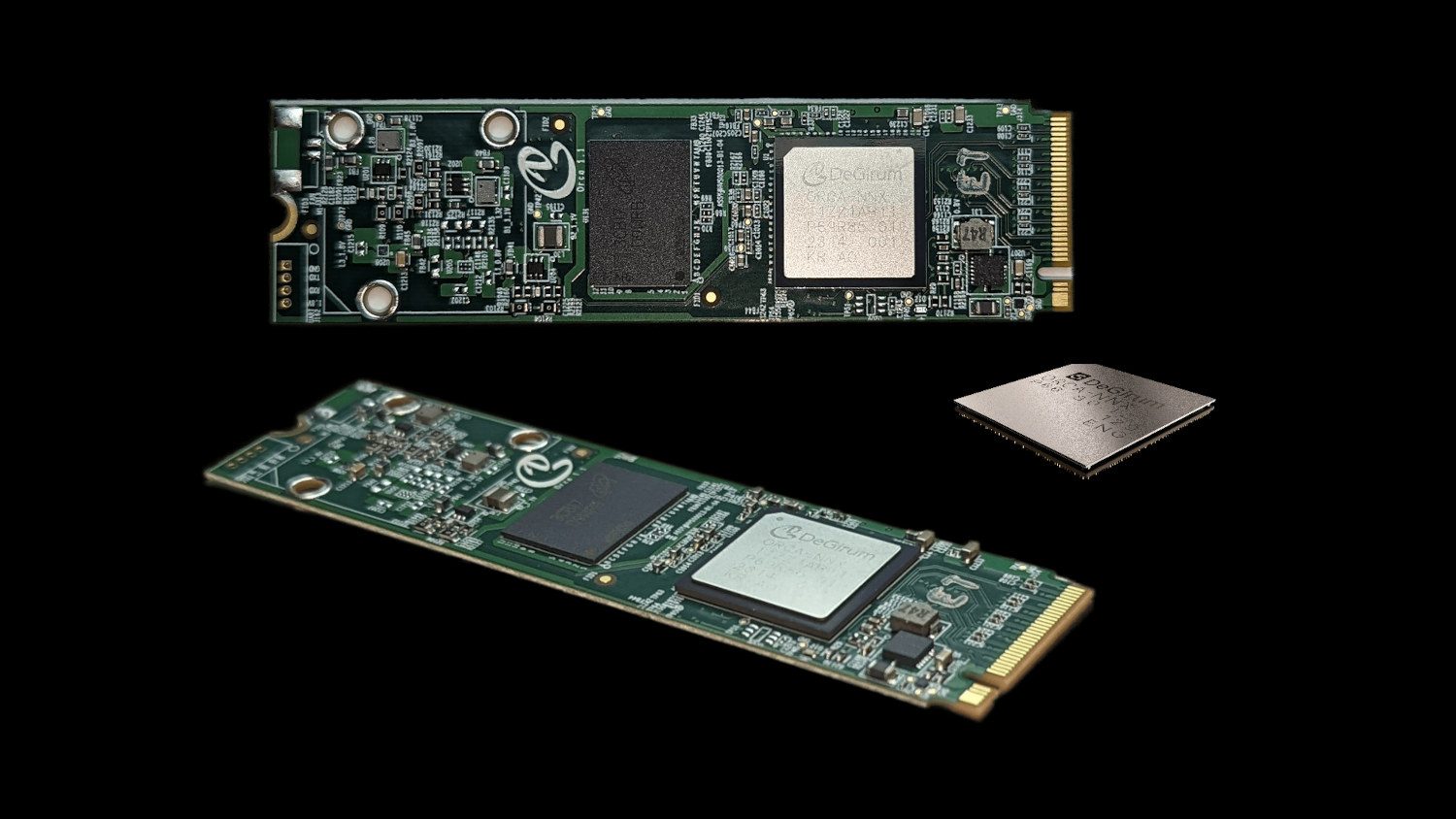

DeGirum ORCA M.2 and USB Edge AI accelerators support TensorFlow Lite and ONNX model formats

I’ve just come across an Atom-based Edge AI server offered with a range of AI accelerator modules, namely the Hailo-8, Blaize P1600, DeGirum ORCA, and MemryX MX3. I had never heard of the last two, and we may cover the MemryX module a little later, but today I’ll take a closer look at the DeGirum ORCA chip and M.2 PCIe module. The DeGirum ORCA is offered as an ASIC, an M.2 2242 or 2280 PCIe module, or (soon) a USB module, and supports the TensorFlow Lite and ONNX model formats with INT8 and Float32 precision. The products were announced in September 2023 and have already been tested in a range of mini PCs and embedded box PCs from Intel (NUC), AAEON, GIGABYTE, BESSTAR, and Seeed Studio (reComputer).

DeGirum ORCA specifications:

- Supported ML model formats – ONNX, TFLite
- Supported ML model precision – Float32, Int8
- DRAM interface – Optional 1GB, 2GB, or 4GB […]
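
Int8 precision means Float32 models are usually quantized before they run on an accelerator like the ORCA. DeGirum’s own quantization pipeline is not described here, so the sketch below swaps in the generic affine (asymmetric) INT8 mapping that TensorFlow Lite documents, real = scale × (q − zero_point). The helper names `choose_params`, `quantize`, and `dequantize` are hypothetical and do not come from any DeGirum SDK.

```python
# Generic affine INT8 quantization in the style of TensorFlow Lite:
#   real_value = scale * (int8_value - zero_point)
# Illustration only; this is not DeGirum's toolchain.
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def choose_params(values: List[float]) -> Tuple[float, int]:
    """Pick a scale and zero point mapping [min, max] onto [-128, 127].

    The range is widened to include 0.0 so that zero is exactly
    representable, which matters for zero padding and ReLU outputs.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN)
    if scale == 0.0:  # all-zero input: any non-zero scale works
        scale = 1.0
    zero_point = round(QMIN - lo / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """q = clamp(round(x / scale) + zero_point, -128, 127)."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """x is approximately scale * (q - zero_point)."""
    return [scale * (x - zero_point) for x in q]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 2.0]
    s, zp = choose_params(weights)
    q = quantize(weights, s, zp)
    print(f"scale={s:.6f} zero_point={zp} q={q}")
    print("round trip:", [f"{x:.4f}" for x in dequantize(q, s, zp)])
```

Each value survives the round trip to within half a quantization step (scale / 2), and 0.0 maps back exactly, which is why the range is widened to include zero.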

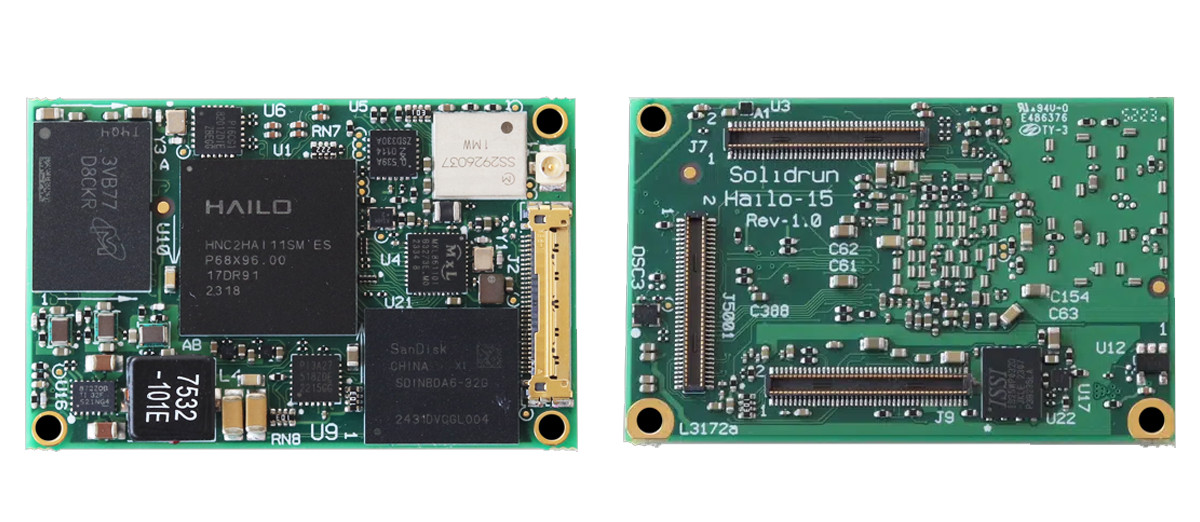

SolidRun launches Hailo-15 SOM with up to 20 TOPS AI vision processor

In March last year, we saw Hailo introduce its quad-core Cortex-A53-based Hailo-15 AI vision processor. The processor features an advanced computer vision engine and can deliver up to 20 TOPS of processing power. However, after that initial announcement, we had not seen the SoC in any commercial products. That has now changed: SolidRun has released a SOM that features the Hailo-15 SoC along with up to 8GB LPDDR4 RAM, 256GB eMMC storage, and dual camera support with an H.265/H.264 video encoder. This is not the first SOM from SolidRun. Previously, we wrote about the SolidRun RZ/G2LC SOM, and before that, SolidRun launched the LX2-Lite SOM along with the ClearFog LX2-Lite dev board. Last month, the company also released its first COM Express module based on the Ryzen V3000 Series APU.

Specifications of SolidRun’s Hailo-15 SOM:

- SoC – Hailo-15 with 4x Cortex-A53 @ 1.3GHz; […]

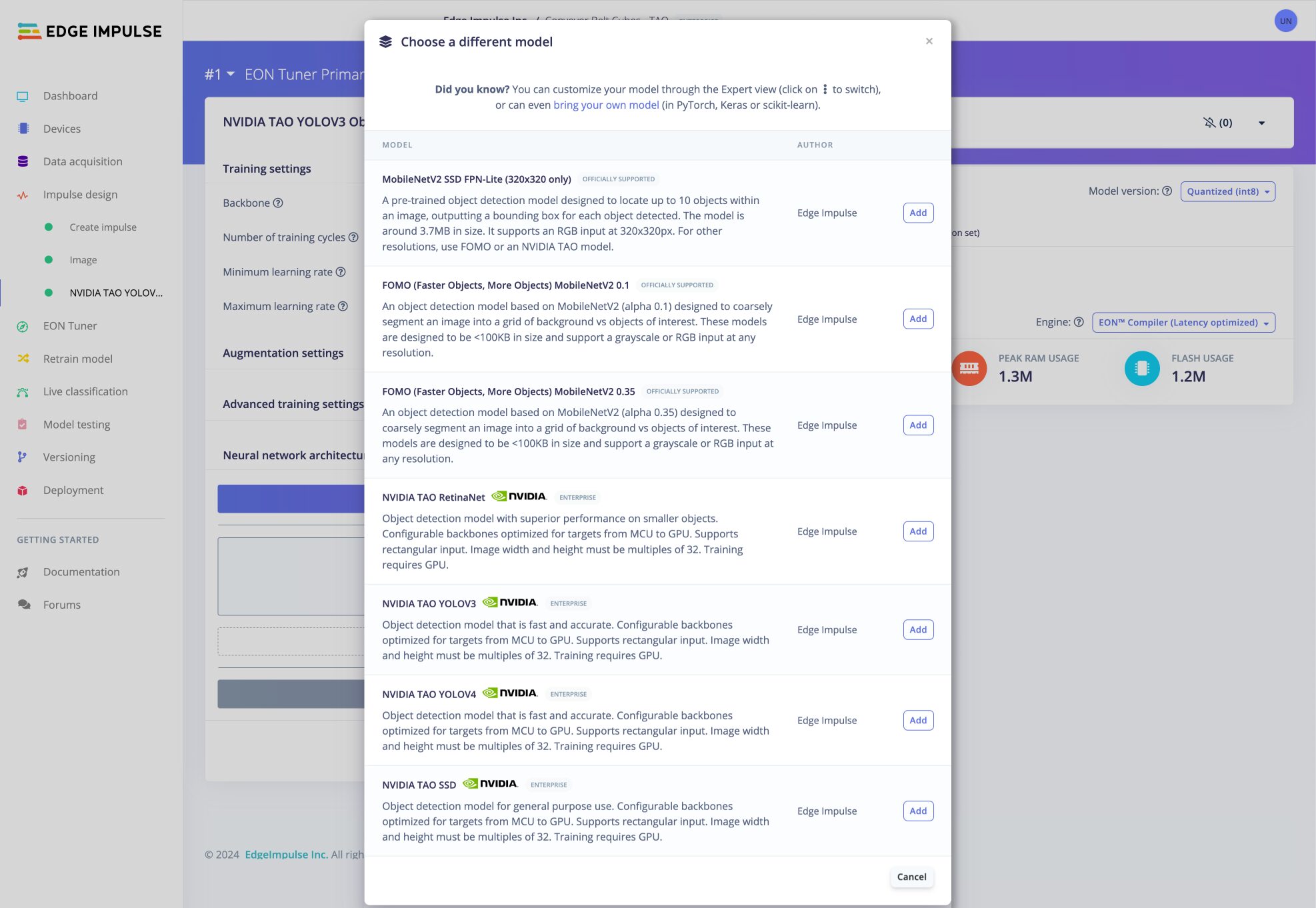

Edge Impulse machine learning platform adds support for NVIDIA TAO Toolkit and Omniverse

The Edge Impulse machine learning platform for edge devices has released a new suite of tools built on NVIDIA TAO Toolkit and Omniverse that brings new AI models to entry-level hardware based on Arm Cortex-A processors, Arm Cortex-M microcontrollers, or Arm Ethos-U NPUs. By combining Edge Impulse and NVIDIA TAO Toolkit, engineers can create computer vision models that can be deployed to edge-optimized hardware such as the NXP i.MX RT1170, Alif E3, STMicro STM32H747AI, and Renesas CK-RA8D1. The Edge Impulse platform allows users to combine their own custom data with GPU-trained NVIDIA TAO models such as YOLO and RetinaNet, and to optimize the resulting models for deployment on edge devices with or without AI accelerators. NVIDIA and Edge Impulse claim this new solution enables the deployment of large-scale NVIDIA models to Arm-based devices. The following tasks are currently available: RetinaNet, YOLOv3, YOLOv4, and SSD object detection, as well as image classification. You can […]

Waveshare Jetson Nano-powered mini-computer features a sturdy metal case

Waveshare has launched the Jetson Nano Mini Kit A, a mini-computer kit powered by the NVIDIA Jetson Nano. The kit includes the Jetson Nano module, a cooling fan, and a WiFi module, all inside a sturdy metal case. The mini-computer offers multiple interfaces, including USB ports, an Ethernet port, an HDMI port, CSI, GPIO, I2C, and RS485. It also has an onboard M.2 Key B slot for either a WiFi or 4G module, and it is compatible with TensorFlow and PyTorch, which makes it well-suited for a range of AI applications.

Waveshare mini-computer specifications:

- GPU – NVIDIA Maxwell architecture with 128 NVIDIA CUDA cores
- CPU – Quad-core Arm Cortex-A57 processor @ 1.43 GHz
- Memory – 4 GB 64-bit LPDDR4 1600 MHz; 25.6 GB/s bandwidth
- Storage – 16 GB eMMC 5.1 flash, microSD card slot
- Display output – HDMI interface with […]
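
The 25.6 GB/s memory bandwidth follows directly from the other memory specs, assuming (as is usual for LPDDR4 spec sheets) that 1600 MHz is the I/O clock and data is transferred on both clock edges, giving 3200 MT/s over a 64-bit (8-byte) bus. A minimal sketch of that calculation, with a hypothetical helper name:

```python
def dram_peak_bandwidth_gbs(bus_width_bits: int, clock_mhz: float,
                            transfers_per_clock: int = 2) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10**9 bytes per second).

    transfers_per_clock=2 models double data rate (data on both clock edges).
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


if __name__ == "__main__":
    # Jetson Nano: 64-bit LPDDR4 at 1600 MHz -> 8 bytes x 3200 MT/s
    print(f"{dram_peak_bandwidth_gbs(64, 1600):.1f} GB/s")  # prints "25.6 GB/s"
```

This is a theoretical peak shared by the CPU and GPU; sustained bandwidth in real workloads will be lower.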