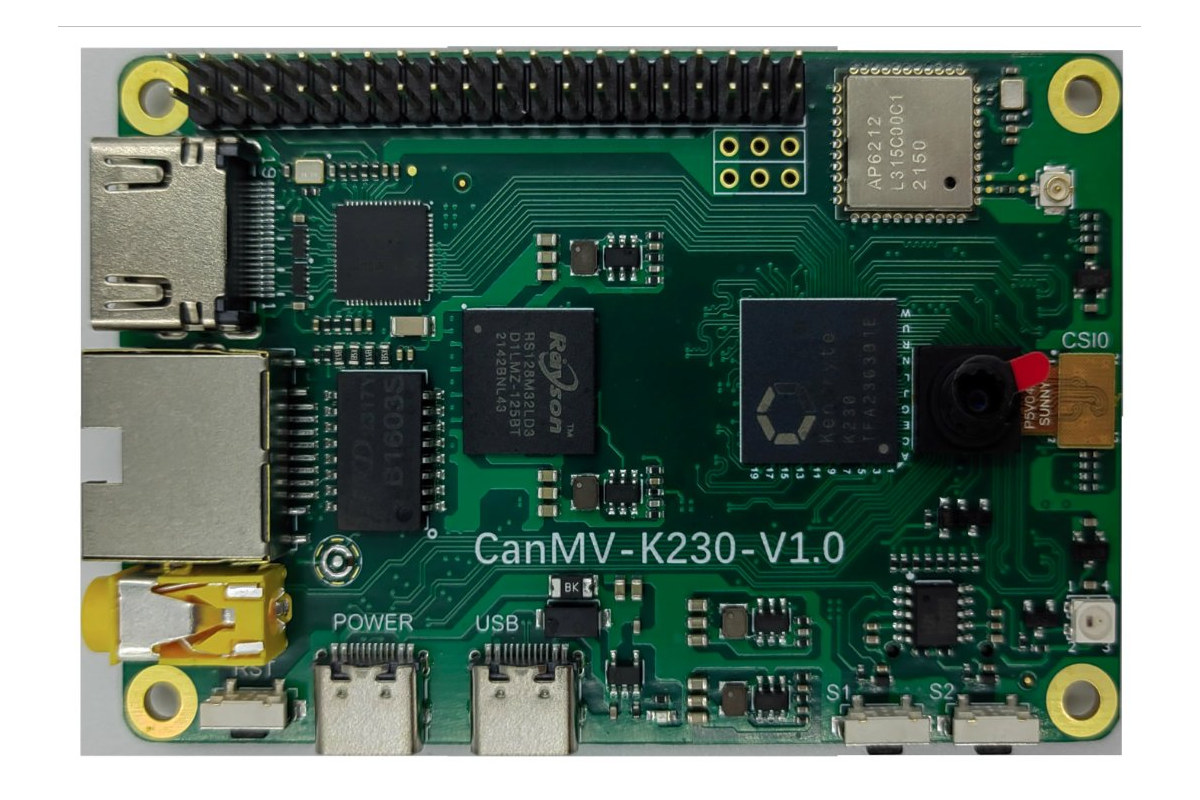

CanMV-K230 is a credit card-sized development board for AI and computer vision applications based on the Kendryte K230 dual-core C908 64-bit RISC-V processor with a built-in KPU (Knowledge Process Unit) and various interfaces such as MIPI CSI inputs and Ethernet. The first Kendryte RISC-V AI processor, the K210, was launched in 2018. I tested it with the Grove AI HAT and the Maixduino board and found it fun to experiment with, but noted that its performance was limited. Since then, the company has introduced the K510 mid-range AI processor with a more powerful 3 TOPS AI accelerator, and the K230, the entry-level successor to the K210 that a 2021 roadmap had planned for 2022, has now been launched and integrated into the CanMV-K230 development board. CanMV-K230 specifications: SoC – Kendryte K230; CPU – 64-bit RISC-V processor @ 1.6GHz with RISC-V Vector Extension 1.0 and FPU, plus a 64-bit RISC-V processor @ 800MHz with support for […]

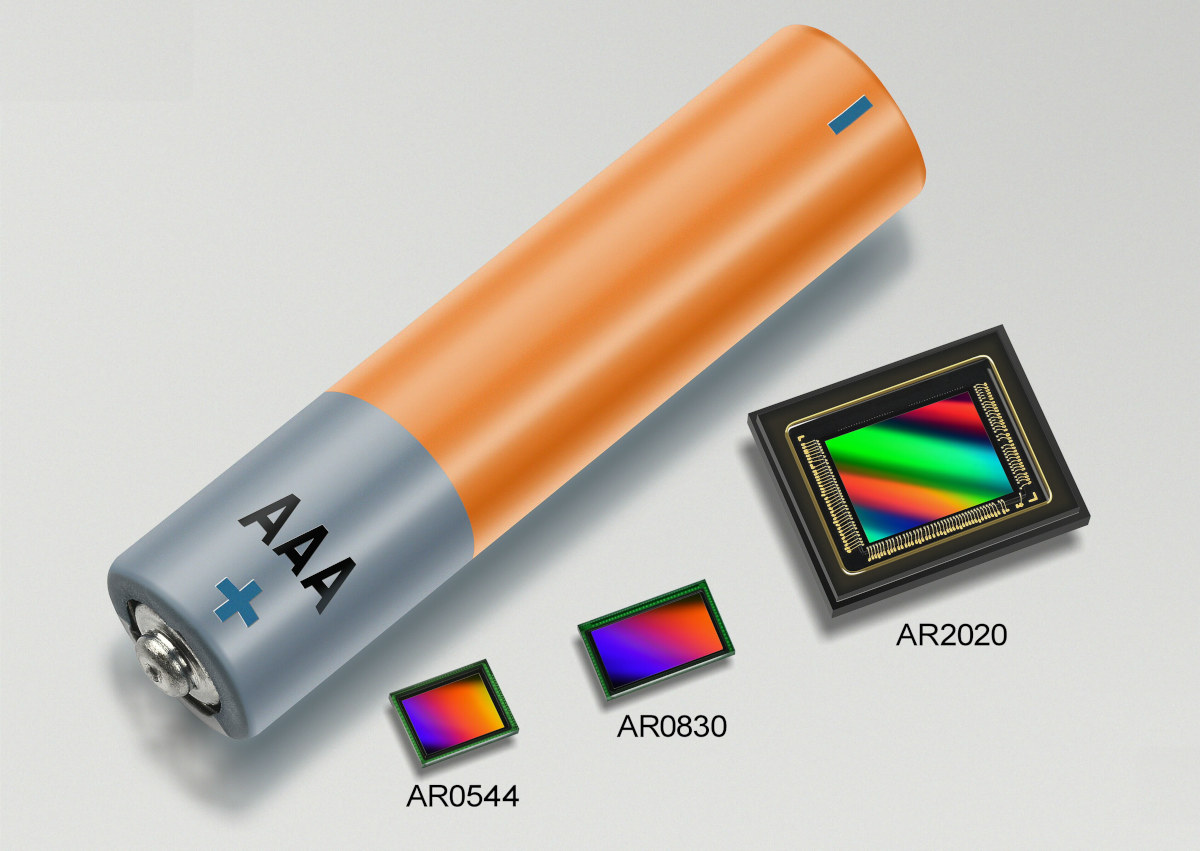

Tiny low-power Hyperlux LP camera sensors can extend battery life by up to 40%

onsemi has introduced the Hyperlux LP image sensor family for battery-powered industrial and commercial cameras, with resolutions from 5MP to 20MP and low power consumption that can extend battery life by up to 40% according to the company’s internal testing. Three models with 1.4 µm pixels will initially be offered: the 5MP AR0544, the 8MP AR0830, and the 20MP AR2020. These camera sensors should eventually be found in a wide range of products such as smart doorbells, security cameras, AR/VR/XR headsets, machine vision devices, and video conferencing systems. The AR2020 is a 1/1.8-inch back-side illuminated (BSI) stacked CMOS digital image sensor with a 5120 × 3840 active pixel array and rolling shutter readout, capable of capturing images in either linear or enhanced Dynamic Range (eDR) mode. Its smaller siblings support the same features, but the AR0830 is a 1/2.9-inch image sensor with a 3840 […]
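The AR2020 headline figures can be cross-checked with a little arithmetic: multiplying the quoted 5120 × 3840 active array by the 1.4 µm pixel pitch gives the pixel count and the size of the active area. The minimal Python sketch below does just that; the function name `sensor_geometry` is purely illustrative, and the calculation assumes square pixels and ignores any border or dummy pixels outside the active array.

```python
import math


def sensor_geometry(width_px: int, height_px: int, pitch_um: float):
    """Illustrative helper: derive resolution and active-area size of an image sensor.

    Assumes square pixels with the given pitch (in micrometres) and only
    counts the active pixel array.
    """
    megapixels = width_px * height_px / 1e6
    width_mm = width_px * pitch_um / 1000   # µm -> mm
    height_mm = height_px * pitch_um / 1000
    diagonal_mm = math.hypot(width_mm, height_mm)
    return megapixels, width_mm, height_mm, diagonal_mm


if __name__ == "__main__":
    # Figures quoted for the onsemi AR2020: 5120 x 3840 pixels, 1.4 µm pitch
    mp, w, h, d = sensor_geometry(5120, 3840, 1.4)
    print(f"{mp:.2f} MP, {w:.3f} x {h:.3f} mm, diagonal {d:.2f} mm")
```

For the AR2020 this works out to about 19.66 MP and an active area of roughly 7.17 × 5.38 mm with an 8.96 mm diagonal, in the same range as the roughly 9 mm diagonal usually associated with the 1/1.8-inch optical format.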

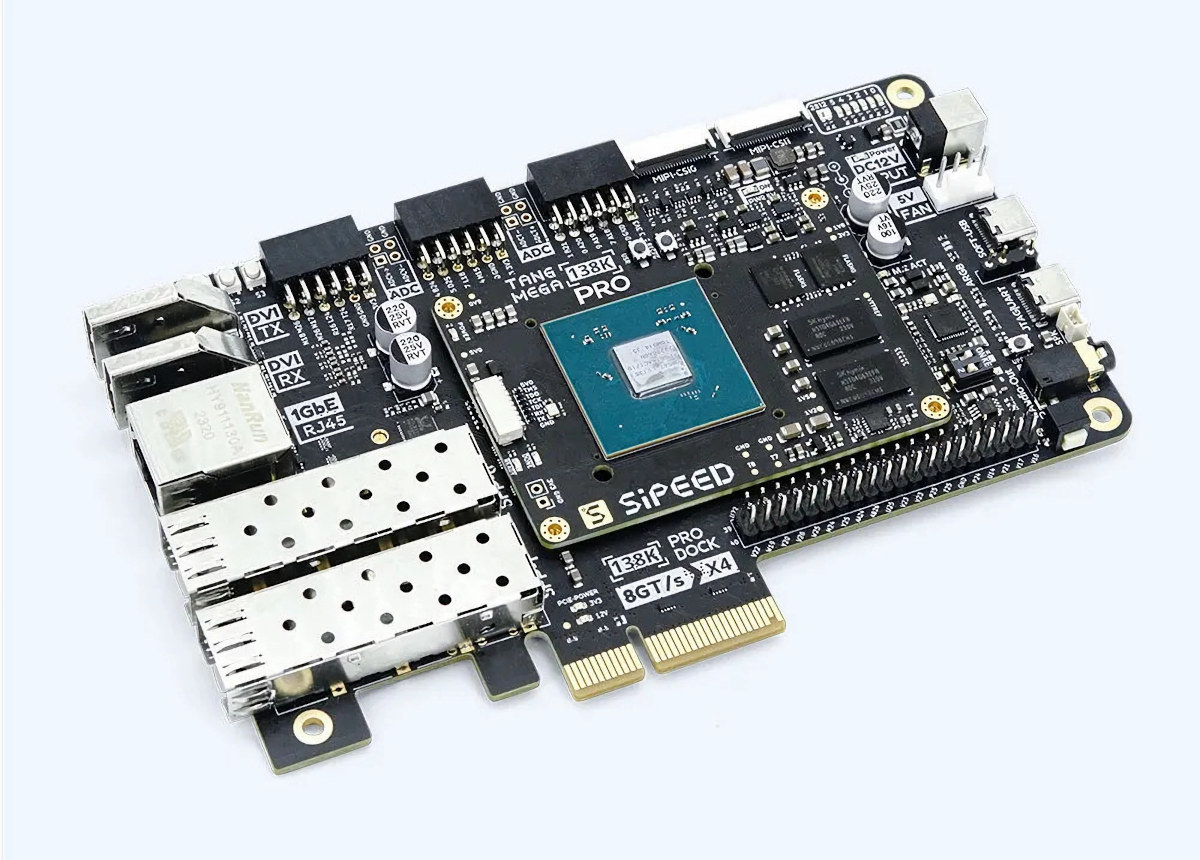

Sipeed Tang Mega 138K Pro Dock features GOWIN GW5AST FPGA + RISC-V SoC

Sipeed has launched another FPGA board in its Tang family: the Tang Mega 138K Pro Dock, powered by a GOWIN GW5AST SoC with 138K logic elements and an 800 MHz AE350_SOC RISC-V hard core, and featuring a PCIe 3.0 x4 interface, DVI Rx and Tx, two SFP+ cages, a Gigabit Ethernet RJ45 port, and more. We’ve previously seen companies like AMD (Xilinx) and Microchip produce FPGA SoCs with hard cores, such as the Zynq UltraScale+ family (4x Cortex-A53) or the PolarFire SoC (4x 64-bit SiFive U54 RISC-V cores), but this is the first time I’ve seen GOWIN introduce an FPGA + RISC-V SoC, as all of its previous parts that came to my attention were FPGA-only devices. Sipeed Tang Mega 138K Pro Dock specifications: System-on-Module – Sipeed Tang Mega 138K Pro; SoC FPGA – GOWIN GW5AST-LV138FPG676A with 138,240 LUT4, 1,080 Kb Shadow SRAM (SSRAM), 6,120 Kb Block SRAM […]

Arduino GIGA R1 WiFi board gets touchscreen display shield

The Arduino GIGA Display Shield is a 3.97-inch RGB touchscreen display designed for the Arduino GIGA R1 WiFi board, introduced a few months ago with an STM32H7 dual-core Cortex-M7/M4 microcontroller and a Murata 1DX module for WiFi 4 and Bluetooth 5.1 connectivity. Besides the 800×480 touchscreen display, the new shield offers an MP34DT06JTR digital microphone, a Bosch BMI270 six-axis IMU, a 20-pin Arducam camera connector, and an RGB LED. Arduino GIGA Display Shield (ASX00039) specifications: Display – 3.97-inch touchscreen display with 800×480 resolution (model: KD040WVFID026-01-C025A), 16.7 million colors, 5-point touch, connected over I2C; Camera I/F – 20-pin Arducam camera connector; Sensors – Bosch Sensortec BMI270 6-axis IMU with a 16-bit tri-axial gyroscope and a 16-bit tri-axial accelerometer, STMicro MP34DT06JTR MEMS microphone; Misc – 1x RGB LED (I2C); Supply Voltage – 3.3V; Dimensions – 106 x 80 mm. The new shield can be mounted on the GIGA R1 […]

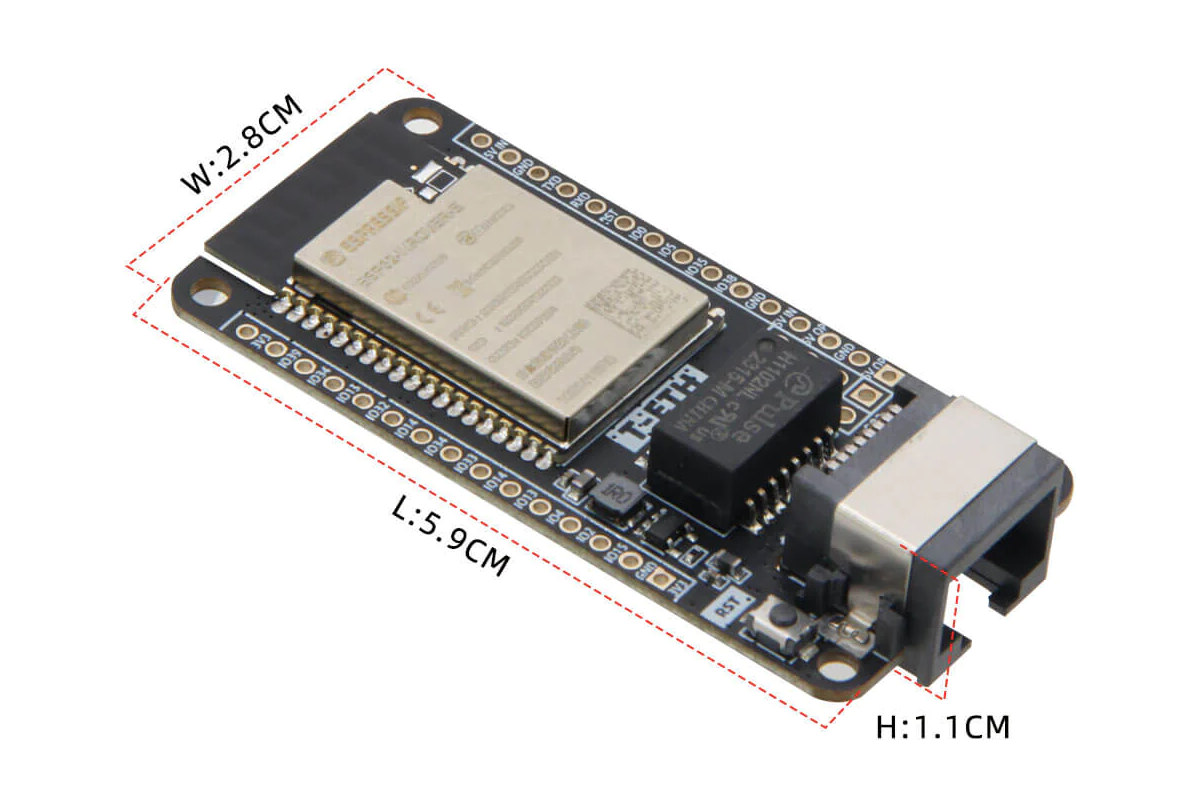

LILYGO T-ETH-Lite – An ESP32-S3 board with Ethernet, optional PoE support

LILYGO T-ETH-Lite ESP32-S3 is a new ESP32-S3 WiFi and Bluetooth development board with a low-profile RJ45 Ethernet connector driven by a WIZnet W5500 Ethernet controller, PoE support through an extra shield, a microSD card socket, and expansion I/Os. ESP32-based development boards with Ethernet have been around for years, including LILYGO’s own “TTGO T-Lite W5500”, but so far we haven’t seen many based on the more recent ESP32-S3 microcontroller, except for the SB Components’ ESPi board that we covered last April. The LILYGO T-ETH-Lite ESP32-S3 adds another cost-effective option with Ethernet. LILYGO T-ETH-Lite ESP32-S3 specifications: Wireless module – ESP32-S3-WROOM-1 with MCU – ESP32-S3 dual-core LX7 microprocessor @ up to 240 MHz with vector extension for machine learning; Memory – 8MB PSRAM; Storage – 16MB SPI flash; Connectivity – WiFi 4 and Bluetooth 5 with LE/Mesh; PCB antenna; Storage – microSD card slot; Connectivity – 802.11 b/g/n WiFi 4 up to 150 Mbps and […]

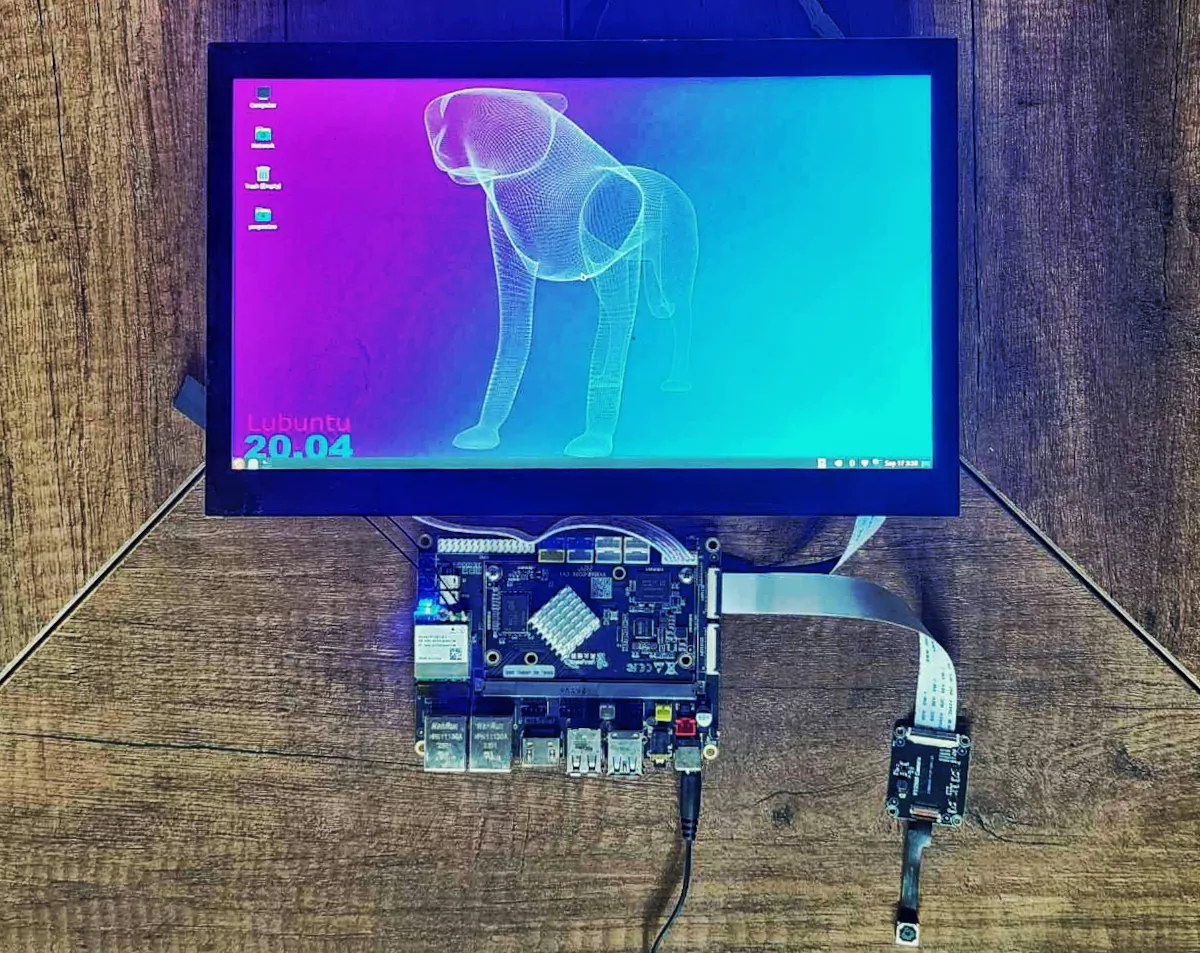

Review of Youyeetoo Rockchip RK3568 SBC with Lubuntu 20.04 and the RKNPU2 AI SDK

We’ve already reviewed the Rockchip RK3568-powered Youyeetoo YY3568 SBC with Android 11 in the first part of the review, where we also listed the specifications and checked out the hardware kit. We have now had time to switch to Lubuntu 20.04, perform some basic tests, and take a closer look at the RKNPU2 AI SDK for the 0.8 TOPS AI accelerator built into the Rockchip RK3568 SoC.

Installing Ubuntu or Debian on the YY3568 SBC

The company provides both Debian and Ubuntu images for the YY3568 SBC, with different images depending on the boot device (SD card or eMMC flash) and the video interface used (DSI, eDP, HDMI). Our YY3568 “Bundle 5” kit comes with an 11.6-inch eDP display, so we’ll select the “Ubuntu 20” image with “edp” in the file name. The RKDevTool program is used to flash Linux images, and the procedure is the same as the one we used with Android […]

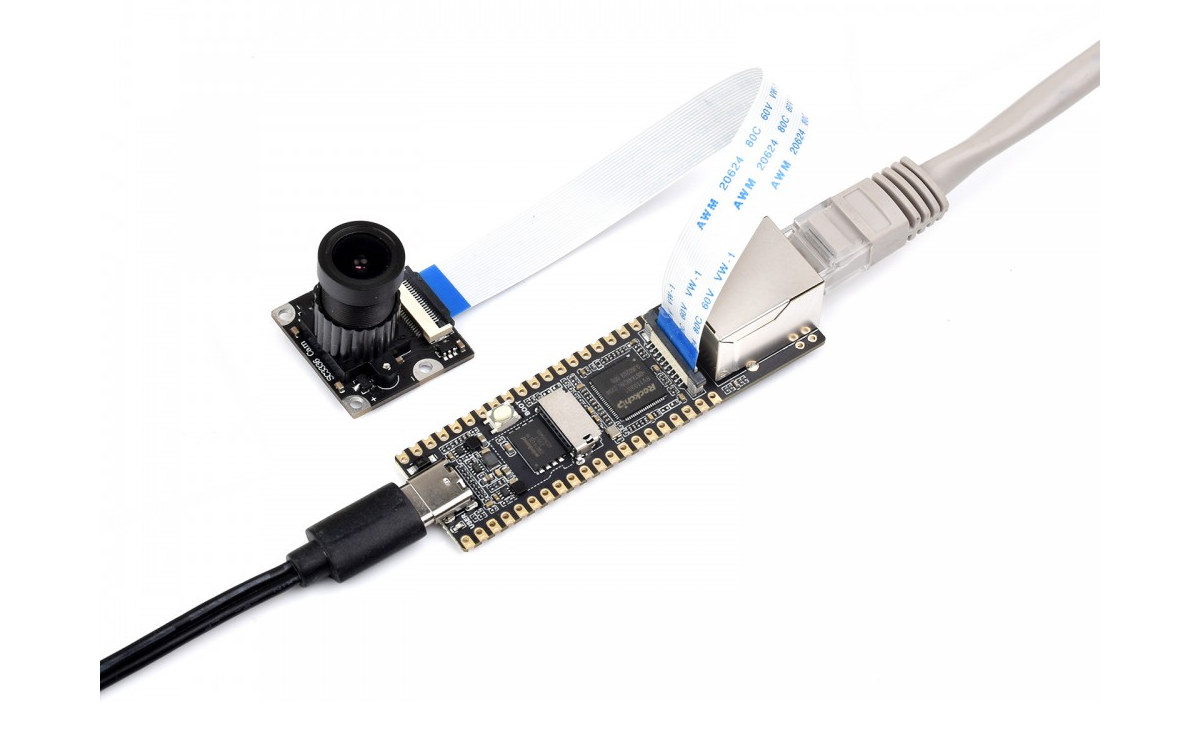

LuckFox Pico Rockchip RV1103 Cortex-A7/RISC-V camera board comes with an optional Ethernet port

LuckFox Pico is a small Linux camera board based on the Rockchip RV1103 Cortex-A7 and RISC-V AI camera SoC, also offered with an Ethernet port in a longer version called LuckFox Pico Plus. Both models come with 64MB RAM (apparently embedded in the RV1103), a microSD card slot for storage, a MIPI CSI camera connector, a USB Type-C port for power, and a few through-holes for GPIO, I2C, UART, and other expansion. LuckFox Pico and Pico Plus specifications: SoC – Rockchip RV1103 with Arm Cortex-A7 processor @ 1.2GHz, RISC-V core, 64MB DDR2, 0.8 TOPS NPU, 4MP @ 30 fps ISP; Storage – microSD card slot, plus 1Gbit (128MB) SPI flash (W25N01GV) on LuckFox Pico Plus only; Camera – 2-lane MIPI CSI connector; Networking (LuckFox Pico Plus only) – 10/100M Ethernet RJ45 port; USB – USB 2.0 Host/Device Type-C port; Expansion – 2x 20-pin headers with up to 24x […]

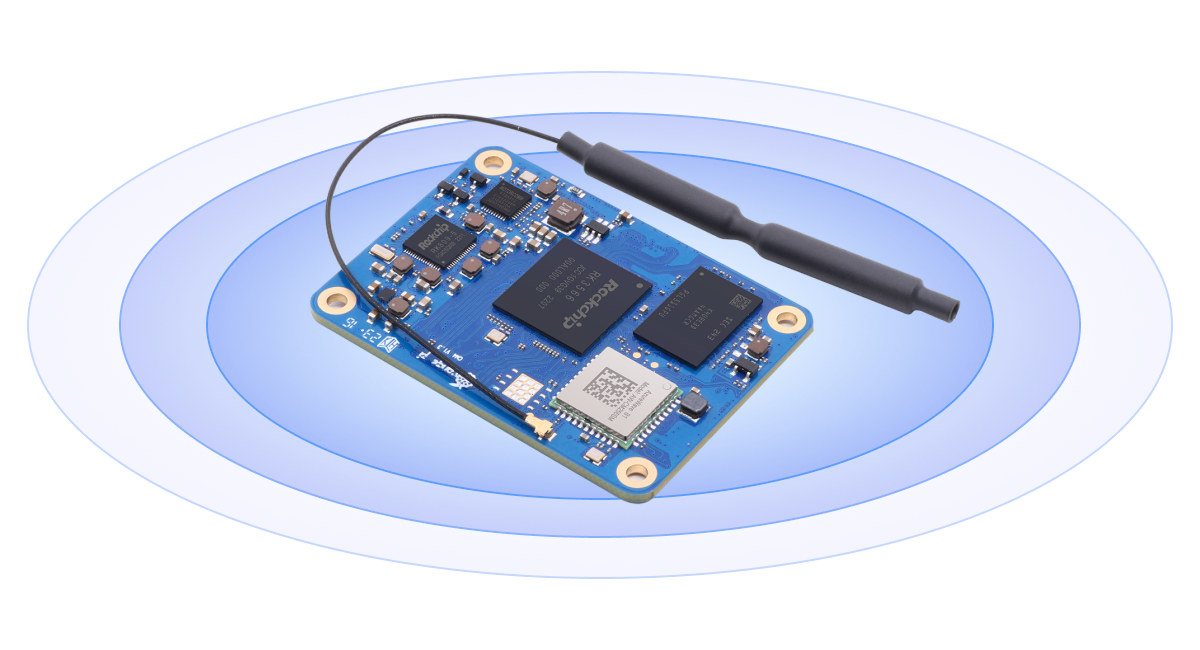

Orange Pi Compute Module 4 – A low-cost Rockchip RK3566-powered alternative to Raspberry Pi CM4

Orange Pi Compute Module 4 is a system-on-module mechanically and electrically compatible with the Raspberry Pi CM4, but powered by a Rockchip RK3566 quad-core Arm Cortex-A55 AI processor, an approach already taken by the RK3566-based Radxa CM3 a few years ago and, more recently, the RK3568-based Banana Pi BPI-CM2. The new module, also called Orange Pi CM4 for short, comes with 1GB to 8GB RAM, 8GB to 128GB eMMC flash, an optional 128/256Mbit SPI flash, a Gigabit Ethernet PHY, and on-board WiFi 5 and Bluetooth 5.0. It features the two 100-pin high-density connectors found on the Raspberry Pi CM4 and a smaller 24-pin connector for extra I/Os. Orange Pi Compute Module 4 specifications: SoC – Rockchip RK3566 quad-core Arm Cortex-A55 processor @ 1.8 GHz with Arm Mali-G52 2EE GPU, 0.8 TOPS AI accelerator, 4Kp60 H.265/H.264/VP9 video decoding, 1080p100 H.265/H.264 video encoding; System Memory – 1GB, 2GB, 4GB, or […]