Seeed Studio has launched a Kickstarter campaign for the SenseCAP Watcher, a physical AI agent capable of monitoring a space and taking actions based on events within that area. Described as the “world’s first Physical LLM Agent for Smarter Spaces,” the SenseCAP Watcher leverages onboard and cloud-based technologies to “bridge the gap between digital intelligence and physical applications.” The SenseCAP Watcher is powered by an ESP32-S3 microcontroller coupled with a Himax WiseEye2 HX6538 chip (Cortex-M55 and Ethos-U55 microNPU) for image and vector data processing. It builds on the Grove Vision AI V2 module and comes in a form factor about one-third the size of an iPhone. Onboard features include a camera, touchscreen, microphone, and speaker, supporting voice command recognition and multimodal sensor expansion. It runs the SenseCraft software suite which integrates on-device tinyML models with powerful large language models, either running on a remote cloud server or a local computer […]

Firefly EC-R3576PC FD is an Embedded Large-Model Computer based on Rockchip RK3576 processor

Firefly EC-R3576PC FD is described as an “Embedded Large-Model Computer” powered by a Rockchip RK3576 octa-core Cortex-A72/A53 processor with a 6 TOPS NPU. It supports large language models (LLMs) such as Gemma-2B, Llama2-7B, ChatGLM3-6B, and Qwen1.5-1.8B. It appears to be based on the ROC-RK3576-PC SBC we covered a few weeks ago, which was also designed for LLMs, but the EC-R3576PC FD is a turnkey solution that should work out of the box and deliver decent performance now that the RKLLM toolkit has been released with NPU acceleration. Note, however, that there are some caveats to doing this on the RK3576 instead of the RK3588, which we’ll discuss below. Firefly EC-R3576PC FD specifications: SoC – Rockchip RK3576; CPU – 4x Cortex-A72 cores at 2.2GHz and 4x Cortex-A53 cores at 1.8GHz; MCU – Arm Cortex-M0 at 400MHz; GPU – Arm Mali-G52 MC3 GPU clocked at 1GHz with support for OpenGL ES 1.1, 2.0, and 3.2, OpenCL up to 2.0, and […]
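Whether a given model fits on a board like this depends first on how much RAM its weights take. As a rough back-of-the-envelope sketch, weight size is simply parameters × bits per weight ÷ 8. The parameter counts below are assumptions taken from the nominal sizes in the model names (real checkpoints differ slightly), and the KV cache, activations, and runtime overhead are ignored:

```python
# Rough weight-memory estimate for the LLMs named above.
# ASSUMPTION: parameter counts are the nominal sizes from the model names;
# real checkpoints differ slightly (e.g. Gemma-2B has ~2.5B parameters).
# KV cache, activations, and runtime overhead are NOT included.

NOMINAL_PARAMS_BILLIONS = {
    "Gemma-2B": 2.0,
    "Llama2-7B": 7.0,
    "ChatGLM3-6B": 6.0,
    "Qwen1.5-1.8B": 1.8,
}


def weight_gib(params_billions: float, bits_per_weight: int) -> float:
    """Return the size of the model weights in GiB at the given bit width."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS_BILLIONS.items():
        fp16 = weight_gib(params, 16)
        int8 = weight_gib(params, 8)
        int4 = weight_gib(params, 4)
        print(f"{name:14s} FP16 {fp16:5.1f} GiB  "
              f"8-bit {int8:5.1f} GiB  4-bit {int4:5.1f} GiB")
```

For example, Llama2-7B needs about 13 GiB of weights at FP16 but roughly 3.3 GiB at 4 bits, which is why quantization matters so much on boards with limited RAM.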

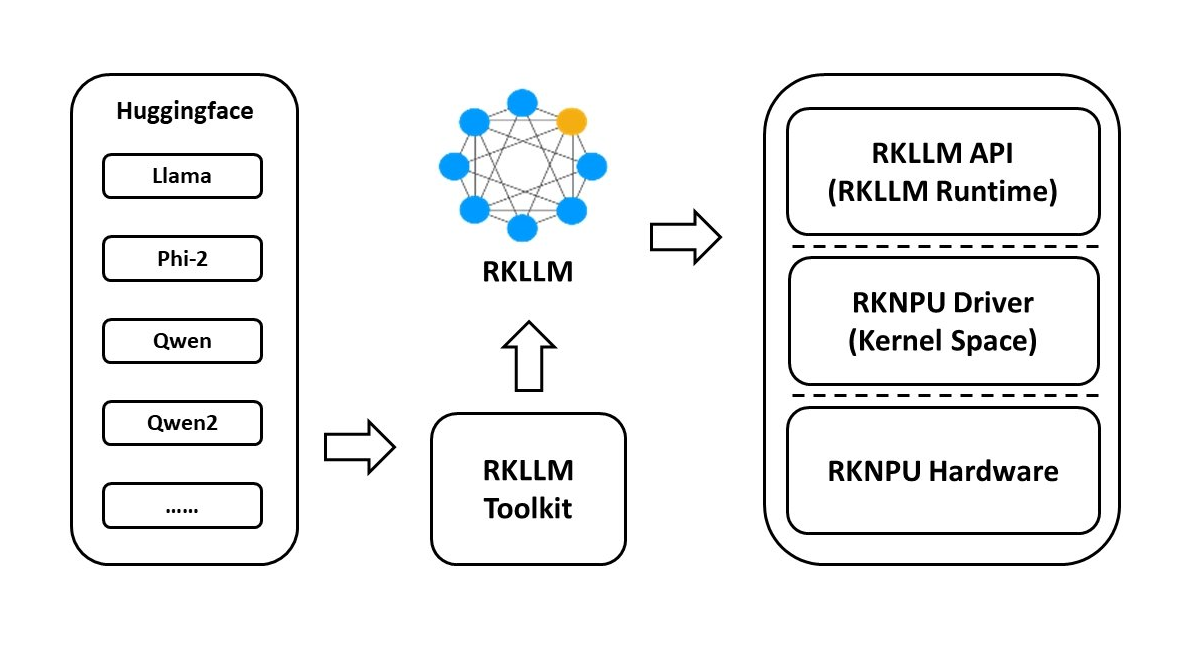

Rockchip RKLLM toolkit released for NPU-accelerated large language models on RK3588, RK3588S, RK3576 SoCs

Rockchip RKLLM toolkit (also known as rknn-llm) is a software stack used to deploy generative AI models to Rockchip RK3588, RK3588S, or RK3576 SoCs using their built-in 6 TOPS NPU. We previously tested LLMs on a Rockchip RK3588 SBC using the Mali-G610 GPU and expected NPU support to come soon. A post on X by Orange Pi notified us that the RKLLM software stack had been released and works on the Orange Pi 5 family of single board computers and the Orange Pi CM5 system-on-module. The Orange Pi 5 Pro’s user manual provides instructions on page 433 of the 616-page document, and Radxa also has similar instructions on its wiki explaining how to use RKLLM and deploy LLMs to Rockchip RK3588(S) boards. The stable version of RKNN-LLM was released in May 2024 and currently supports the following models: TinyLLAMA 1.1B, Qwen 1.8B, Qwen2 0.5B, Phi-2 2.7B, Phi-3 […]

Radxa Fogwise Airbox AI box review – Part 2: Llama3, Stable Diffusion, imgSearch, Python SDK, YOLOv8

After checking out the Radxa Fogwise Airbox hardware in the first part of the review last month, I’ve now had time to test the SOPHGO SG2300x-powered AI box with an Ubuntu 20.04 Server image preloaded with CasaOS as well as Stable Diffusion and Llama3 containers. I’ll start the second part of the review by checking out the pre-installed Stable Diffusion text-to-image generator and Llama3 AI chatbot, then manually install the imgSearch AI-powered image search engine in the CasaOS web dashboard, test the Python SDK on the command line, and run some AI vision models, namely ResNet50 and YOLOv8. Radxa Fogwise Airbox OS installation: last month, Radxa only provided an Ubuntu Server 20.04 image with just the basics pre-installed. The company has now improved the documentation and made two images available for the Radxa Fogwise Airbox: Base image (1.2GB) – Based on Ubuntu Server 20.04; contains only the Sophon base SDK and backend. Full […]

Radxa Fogwise Airbox edge AI box review – Part 1: Specifications, teardown, and first try

Radxa Fogwise Airbox, also known as Fogwise BM168M, is an edge AI box powered by a SOPHON BM1684X Arm SoC with a built-in 32 TOPS TPU and a VPU capable of decoding up to 32 HD video streams. The device is equipped with 16GB LPDDR4x RAM and 64GB eMMC flash, and features two gigabit Ethernet RJ45 jacks, a few USB ports, a speaker, and more. Radxa sent us a sample for evaluation. We’ll start the Radxa Fogwise Airbox review by checking out the specifications and the hardware with an unboxing and a teardown, before testing various AI workloads with TensorFlow and/or other frameworks in the second part of the review. Radxa Fogwise Airbox specifications (from the product page as of May 30, 2024): SoC – SOPHON SG2300x; CPU – Octa-core Arm Cortex-A53 processor up to 2.3 GHz; VPU – Decoding of up to […]

Leveraging GPT-4o and NVIDIA TAO to train TinyML models for microcontrollers using Edge Impulse

We previously tested the Edge Impulse machine learning platform, showing how to train and deploy a model with IMU data from the XIAO BLE Sense board relatively easily. Since then, the company has announced support for the NVIDIA TAO toolkit in Edge Impulse, and it has now added the latest GPT-4o LLM to the ML platform to help users quickly train TinyML models that can run on microcontroller boards. What’s interesting is how AI tools from various companies, namely NVIDIA (TAO toolkit) and OpenAI (GPT-4o LLM), are leveraged in Edge Impulse to quickly create a low-end ML model simply by filming a video. Jan Jongboom, CTO and co-founder of Edge Impulse, demonstrated the solution by shooting a video of his kids’ toys and loading it into Edge Impulse to create an “is there a toy?” model that runs on the Arduino Nicla Vision at about 10 FPS. Another way to look at it […]
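In spirit, this workflow uses a large model as a labeler and a tiny model as the deployable result. Edge Impulse’s actual pipeline (including how NVIDIA TAO is used for training) isn’t detailed in the excerpt, so the sketch below only illustrates the general “big model labels, small model learns” idea. It assumes frames have already been reduced to small feature vectors, `teacher_label()` is a hypothetical stub standing in for a query to a multimodal LLM such as GPT-4o (not an Edge Impulse or OpenAI API), and the student is a simple nearest-centroid classifier chosen for brevity:

```python
# Minimal sketch of "large model labels, small model learns".
# ASSUMPTIONS: frames are already reduced to small feature vectors, and
# teacher_label() is a HYPOTHETICAL stand-in for querying a large
# multimodal model such as GPT-4o; it is NOT an Edge Impulse or OpenAI API.
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def teacher_label(frame: Vector) -> str:
    """Hypothetical teacher: pretend the big model says 'toy' for bright frames."""
    return "toy" if sum(frame) / len(frame) > 0.5 else "no_toy"


def auto_label(frames: List[Vector], teacher: Callable[[Vector], str]) -> List[str]:
    """Label every unlabeled frame once with the (expensive) teacher model."""
    return [teacher(f) for f in frames]


def train_nearest_centroid(frames: List[Vector], labels: List[str]) -> Dict[str, List[float]]:
    """Train a tiny student: one mean feature vector (centroid) per class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for f, y in zip(frames, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], frame: Vector) -> str:
    """Cheap on-device inference: pick the class with the closest centroid (squared L2)."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(centroids[y], frame)))


if __name__ == "__main__":
    video_frames = [[0.9, 0.8], [0.7, 0.9], [0.1, 0.2], [0.2, 0.0]]
    labels = auto_label(video_frames, teacher_label)
    student = train_nearest_centroid(video_frames, labels)
    print(labels, predict(student, [0.85, 0.75]))
```

The expensive model is called once per training frame, while only the small student has to run in real time on the microcontroller.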

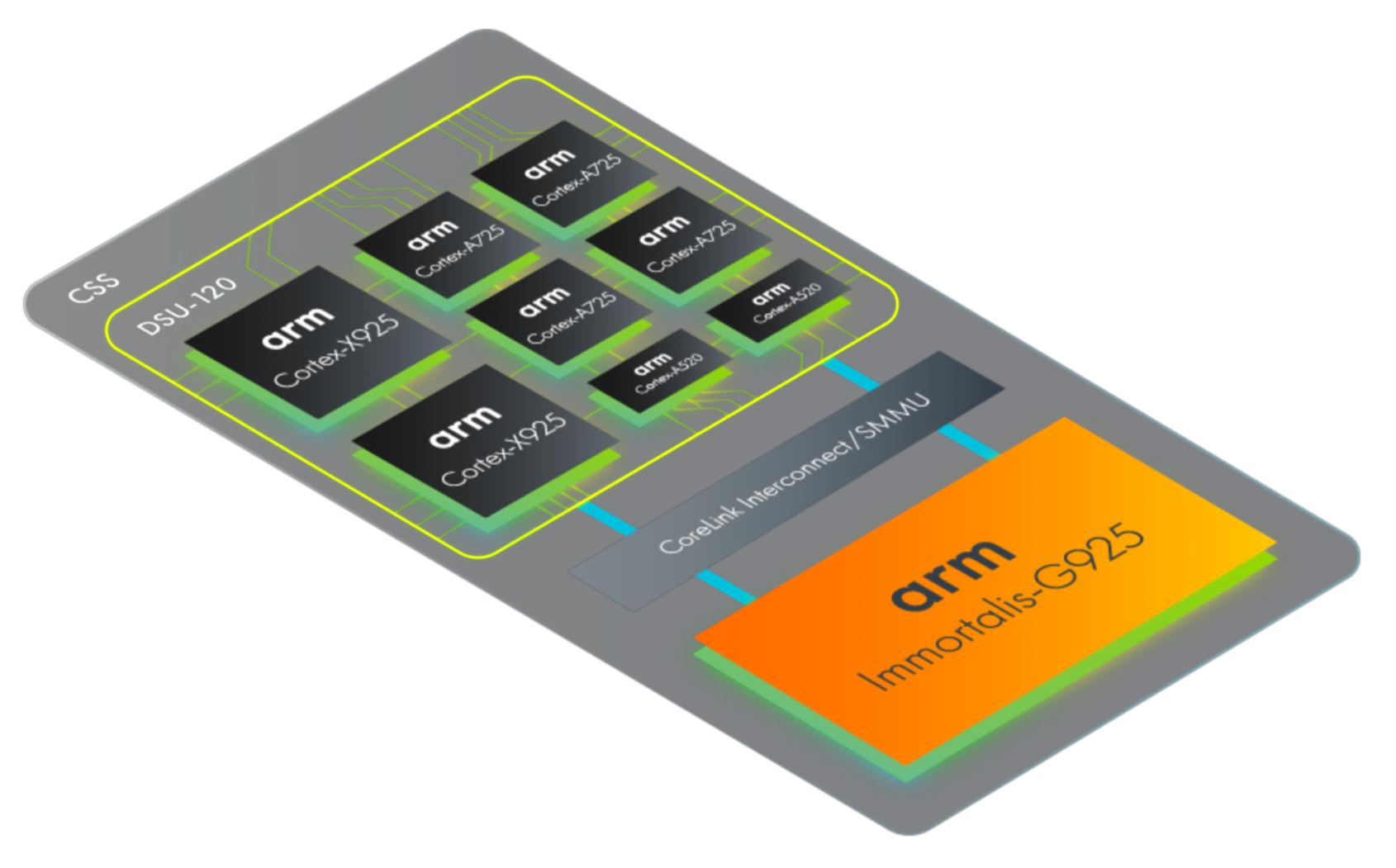

Arm unveils Cortex-X925 and Cortex-A725 CPUs, Immortalis-G925 GPU, Kleidi AI software

Arm has just announced new Armv9 CPUs as well as Immortalis and Mali GPUs for mobile SoCs, together with the Kleidi AI software optimized for Arm CPUs from the Armv7 to Armv9 architectures. New Armv9.2 CPU cores include the Cortex-X925 “Blackhawk” core with significant CPU and AI performance improvements, the Cortex-A725 with improved performance efficiency, and a refreshed version of the Cortex-A520 providing 15 percent efficiency improvements. Three new GPUs have also been introduced, namely the flagship Immortalis-G925 with up to 14 cores, which delivers up to 37 percent higher 3D graphics performance than last year’s 12-core Immortalis-G720, the Mali-G725 with 6 to 9 cores for premium mobile handsets, and the Mali-G625 with one to five cores for smartwatches and entry-level mobile devices. Arm Cortex-X925: the Arm Cortex-X925 delivers 36 percent higher single-threaded peak performance in Geekbench 6.2 than a Cortex-X4-based premium Android smartphone, and about 41 percent better AI performance as measured by the time-to-first-token of tiny-LLama […]
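Time-to-first-token (TTFT) is the delay between submitting a prompt and receiving the first generated token, so for non-trivial prompts it is dominated by prompt processing (the prefill phase). The sketch below shows one way to measure it in Python; `fake_llm_stream()` is a hypothetical stand-in for a real LLM runtime that yields tokens, and its sleep durations are made-up values:

```python
# Minimal sketch of measuring time-to-first-token (TTFT).
# ASSUMPTION: fake_llm_stream() is a HYPOTHETICAL stand-in for a real LLM
# runtime that streams tokens; the delays are arbitrary example values.
import time
from typing import Iterable, Iterator, Tuple


def fake_llm_stream(prompt: str, prefill_s: float = 0.05,
                    per_token_s: float = 0.01) -> Iterator[str]:
    """Simulate prefill, then yield tokens one at a time (prompt is ignored)."""
    time.sleep(prefill_s)  # stand-in for processing the whole prompt
    for token in ("Hello", ",", " world"):
        yield token
        time.sleep(per_token_s)  # stand-in for each decode step


def measure_ttft(stream: Iterable[str]) -> Tuple[float, str]:
    """Return (seconds until the first token arrives, first token).

    A generator's body only starts running on the first next() call,
    so the measured time includes the simulated prefill.
    """
    start = time.perf_counter()
    first_token = next(iter(stream))
    return time.perf_counter() - start, first_token


if __name__ == "__main__":
    ttft, token = measure_ttft(fake_llm_stream("What is an NPU?"))
    print(f"TTFT: {ttft * 1000:.1f} ms (first token: {token!r})")
```

Comparing TTFT across two CPUs is only meaningful with the same model, prompt, and runtime.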

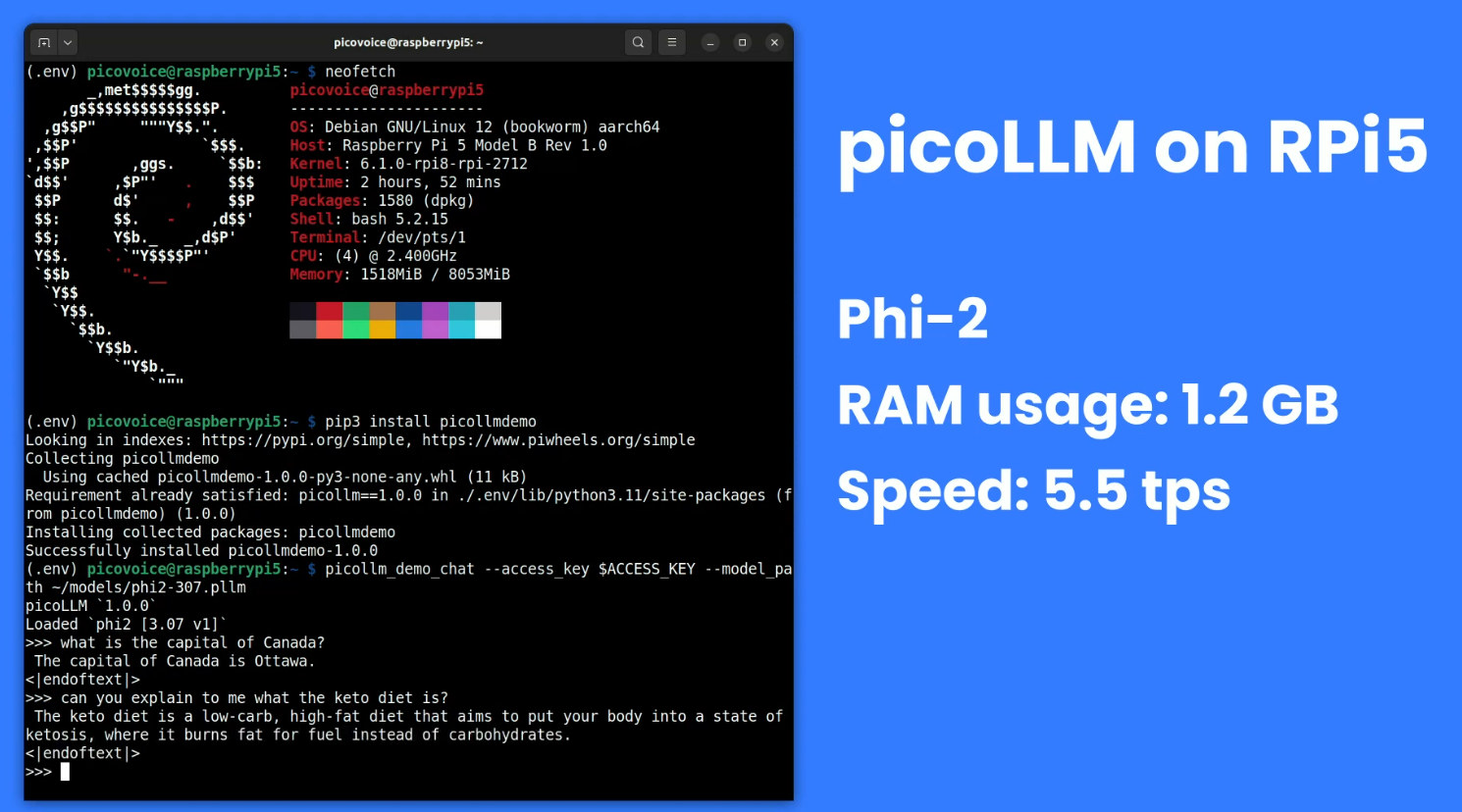

picoLLM is a cross-platform, on-device LLM inference engine

Large Language Models (LLMs) can run locally on mini PCs or single board computers like the Raspberry Pi 5, but with limited performance due to their high memory usage and bandwidth requirements. That’s why Picovoice has developed the picoLLM Inference Engine, a cross-platform SDK optimized for running compressed large language models on Linux (x86_64), macOS (arm64, x86_64), Windows (x86_64), Raspberry Pi OS on the Pi 5 and Pi 4, the Android and iOS mobile operating systems, as well as web browsers such as Chrome, Safari, Edge, and Firefox. Alireza Kenarsari, Picovoice CEO, told CNX Software that “picoLLM is a joint effort of Picovoice deep learning researchers who developed the X-bit quantization algorithm and engineers who built the cross-platform LLM inference engine to bring any LLM to any device and control back to enterprises”. The company says picoLLM delivers better accuracy than GPTQ when using Llama-3-8B MMLU (Massive Multitask Language Understanding) as a […]
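picoLLM’s X-bit quantization algorithm itself isn’t described in the excerpt, but the general idea of storing LLM weights with fewer bits can be illustrated with a textbook scheme: symmetric uniform quantization with one scale per group of weights. The sketch below implements that generic scheme only (it is neither picoLLM’s algorithm nor GPTQ), and the bit widths, group size, and sample weights are arbitrary example values:

```python
# Generic symmetric uniform quantization with a configurable bit width,
# applied per group of weights. Textbook scheme for illustration only;
# NOT picoLLM's X-bit quantization algorithm and NOT GPTQ.
from typing import List, Tuple


def quantize_group(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Quantize one group to signed integers in [-qmax, qmax] plus a float scale."""
    if bits < 2:
        raise ValueError("bits must be >= 2 for a signed symmetric scheme")
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize_group(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the group scale."""
    return [v * scale for v in q]


def quantize(weights: List[float], bits: int,
             group_size: int = 4) -> List[Tuple[List[int], float]]:
    """Split a weight vector into groups, each with its own scale."""
    return [quantize_group(weights[i:i + group_size], bits)
            for i in range(0, len(weights), group_size)]


def max_error(weights: List[float], bits: int, group_size: int = 4) -> float:
    """Largest absolute error after a quantize/dequantize round trip."""
    restored: List[float] = []
    for q, scale in quantize(weights, bits, group_size):
        restored.extend(dequantize_group(q, scale))
    return max(abs(a - b) for a, b in zip(weights, restored))


if __name__ == "__main__":
    w = [0.5, -1.0, 0.25, 0.0, 2.0, -2.0, 1.0, 0.1]
    for bits in (8, 4, 3):
        print(f"{bits}-bit max error: {max_error(w, bits):.4f}")
```

Fewer bits shrink memory and bandwidth needs at the cost of larger rounding error, and smaller groups track outliers better but require storing more scales. Methods such as GPTQ go further by adjusting the not-yet-quantized weights to compensate for each rounding error.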