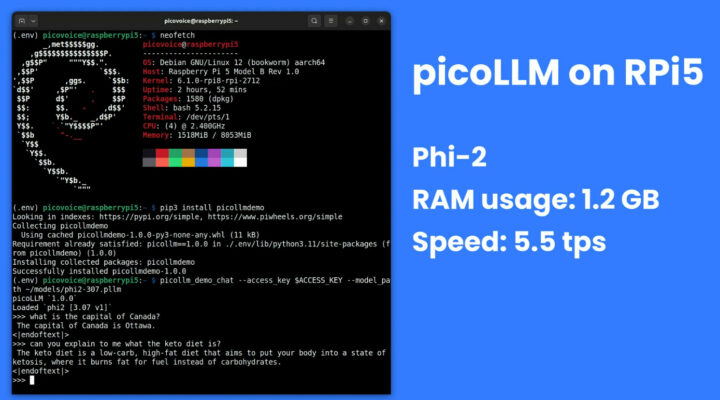

Large Language Models (LLMs) can run locally on mini PCs or single board computers like the Raspberry Pi 5, but with limited performance due to high memory usage and bandwidth requirements. That’s why Picovoice has developed the picoLLM Inference Engine, a cross-platform SDK optimized for running compressed large language models on Linux (x86_64), macOS (arm64, x86_64), Windows (x86_64), Raspberry Pi OS on the Pi 5 and Pi 4, Android, and iOS, as well as in web browsers such as Chrome, Safari, Edge, and Firefox.

Alireza Kenarsari, Picovoice CEO, told CNX Software that “picoLLM is a joint effort of Picovoice deep learning researchers who developed the X-bit quantization algorithm and engineers who built the cross-platform LLM inference engine to bring any LLM to any device and control back to enterprises”.

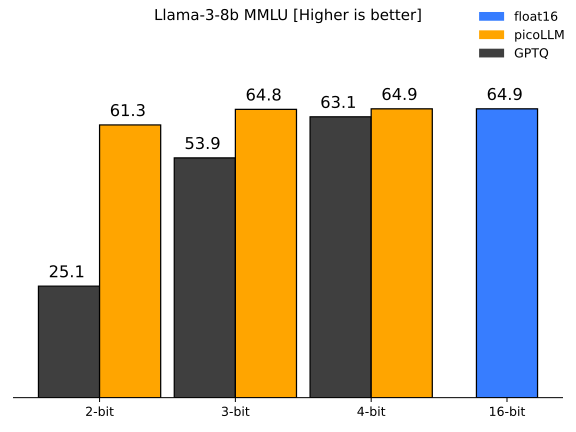

The company says picoLLM delivers better accuracy than GPTQ when using the MMLU (Massive Multitask Language Understanding) score of Llama-3-8B as a metric, as shown in the diagram below, with the largest gain at 2-bit settings. The 4-bit INT result has the same MMLU score as the 16-bit float result.
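To put those bit widths in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at each setting. It assumes a round figure of 8 billion parameters and ignores activations, the KV cache, and any per-block metadata a real quantization format adds, so treat the numbers as lower bounds rather than picoLLM measurements. The helper name `weights_size_gib` is made up for this illustration.

```python
# Rough estimate of weight storage for a model at different bit widths.
# Assumption: 8e9 parameters (a round figure for an "8B" model); real
# quantized files are somewhat larger because of scales and metadata.

def weights_size_gib(n_params: float, bits_per_weight: float) -> float:
    """Return the size of the weights in GiB for a given bit width."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


N_PARAMS = 8e9  # assumed parameter count

for bits in (16, 8, 4, 3, 2):
    size = weights_size_gib(N_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {size:6.2f} GiB")
```

Under these assumptions, the 16-bit weights come to roughly 14.9 GiB, while the 4-bit weights need about 3.7 GiB, which is why only the lower bit widths are realistic on an 8 GB Raspberry Pi 5.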

You’ll find the SDK and demos for various programming languages and platforms on GitHub. The solution is completely free for open-weight models but requires an access key that is verified by connecting to a server. After access key verification, all LLM processing is done offline and on-device.
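As a rough idea of what that workflow looks like in Python, here is a minimal sketch. It assumes the `picollm` package from PyPI and the `create`, `generate`, and `release` calls as we understand them from Picovoice's README, so check the GitHub repository for the current API. The `generate_once` helper and its argument names are hypothetical, and the access key and model file must come from your own Picovoice account.

```python
# Minimal sketch of a one-shot prompt with the picoLLM Python SDK.
# Assumptions: `pip install picollm`, a valid Picovoice access key, and a
# picoLLM model file downloaded beforehand. `generate_once` is a
# hypothetical helper written for this illustration.

def generate_once(prompt: str, access_key: str, model_path: str) -> str:
    """Load a model, run a single prompt, and return the completion text."""
    import picollm  # imported lazily so the module loads without the SDK

    # The access key is verified online when the engine is created;
    # inference itself then runs on-device.
    pllm = picollm.create(access_key=access_key, model_path=model_path)
    try:
        result = pllm.generate(prompt)
        return result.completion
    finally:
        # Free the native resources held by the engine.
        pllm.release()
```

Calling `generate_once("Tell me a joke", access_key, model_path)` with your own key and model path should return the generated text as a string.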

We’ve written about Picovoice since 2020, as they offer voice activity detection (Cobra), custom wake word (Porcupine), speech-to-text (Leopard and Cheetah), and speech-to-intent (Rhino) solutions that are fairly easy to use and work on low-end hardware such as Raspberry Pi and Arduino. The company has now combined several of those software solutions with the picoLLM implementation to create an LLM-powered voice assistant written in Python and shown to run on the Raspberry Pi 5 in the video embedded below.

Picovoice solutions are usually free for hobby projects with some limitations, and you should be able to reproduce the voice assistant demo on a Raspberry Pi 5 by following the instructions on GitHub.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.

They should have compared to the various intermediary quantization levels supported in GGUF format (4_0, 4_1, 4K_S, 4K_M, 5_0, 5_1, 5K_S, 5K_M etc). There are very good tradeoffs there. For example for me Q5K_M is always as good as Q8 and takes way less space (5.5 bits per token approx). Also the test in the capture shows nothing, any tiny model with even bad quantization will be able to respond to such easy questions. Getting a valid response to a question such as “What do monkey eggs become when they fall on the floor from their nest in the tree…

Most low power applications only need very limited vocabulary, and specific needs, so why bother with LARGE language model and why not have highly OPTIMIZED language models? I understand they wanna target as big of market as possible, but customization makes more sense to me than floods of LLM.