NVIDIA DGX Spark may look like a mini PC, but under the hood, it’s a powerful AI supercomputer based on the NVIDIA GB10 20-core Armv9 SoC with a Blackwell GPU delivering up to 1,000 TOPS (FP4) of AI performance, and 128GB of 256-bit LPDDR5x memory with 273 GB/s of bandwidth. The GB10 SoC combines ten Cortex-X925 cores, ten Cortex-A725 cores, and a Blackwell GPU with 5th Gen Tensor cores and 4th Gen RT cores. The system also features a 1TB or 4TB SSD, an HDMI 2.1a video output port, 10GbE and WiFi 7 networking, and four USB4 ports. NVIDIA DGX Spark specifications: SoC – NVIDIA GB10; CPU – 20-core Armv9 processor with 10x Cortex-X925 cores and 10x Cortex-A725 cores; Architecture – NVIDIA Grace Blackwell; GPU – Blackwell architecture; CUDA cores – Blackwell generation; 5th Gen Tensor cores; 4th Gen RT (Ray Tracing) cores; Tensor performance – 1000 AI TOPS (FP4) […]
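
A quick back-of-the-envelope check shows how the 273 GB/s figure relates to the 256-bit memory bus: peak bandwidth is the bus width in bytes multiplied by the transfer rate. The roughly 8,533 MT/s LPDDR5x speed used below is inferred from those two numbers, not taken from NVIDIA's specifications, so treat this as a sketch rather than an official figure.

```python
# Back-of-the-envelope check of DGX Spark memory bandwidth.
# Assumption: peak bandwidth = bus width (bytes) x transfer rate (MT/s).
# The 8533 MT/s rate is inferred from the specs, not stated by NVIDIA.

BUS_WIDTH_BITS = 256         # from the spec: 256-bit LPDDR5x
QUOTED_BANDWIDTH_GBPS = 273  # from the spec: 273 GB/s


def peak_bandwidth_gbps(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Peak bandwidth in GB/s (decimal) for a given bus width and MT/s rate."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mts / 1000  # MB/s -> GB/s


def implied_transfer_rate_mts(bus_width_bits: int, bandwidth_gbps: float) -> float:
    """Transfer rate (MT/s) required to reach a given bandwidth."""
    return bandwidth_gbps * 1000 / (bus_width_bits / 8)


if __name__ == "__main__":
    rate = implied_transfer_rate_mts(BUS_WIDTH_BITS, QUOTED_BANDWIDTH_GBPS)
    print(f"Implied transfer rate: {rate:.0f} MT/s")
    print(f"Bandwidth at 8533 MT/s: {peak_bandwidth_gbps(BUS_WIDTH_BITS, 8533):.1f} GB/s")
```

Running it prints an implied rate of about 8531 MT/s, which is consistent with the LPDDR5X-8533 speed grade.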

MediaTek Genio 720 and 520 AIoT SoCs target generative AI applications with 10 TOPS AI accelerator

The announcement of the MediaTek Genio 720 and Genio 520 octa-core Cortex-A78/A55 AIoT SoCs is one of the news items I missed at Embedded World 2025. The new models appear to be updates to the Genio 700 and Genio 500 with a beefier NPU, and the Taiwanese company says the new Genio series supports generative AI models, human-machine interface (HMI), multimedia, and connectivity features for smart home, retail, industrial, and commercial IoT devices. Both are equipped with a 10 TOPS NPU/AI accelerator for transformer and convolutional neural network (CNN) models, and support up to 16GB of LPDDR5 memory to handle “edge-optimized” (i.e., quantized) large language models (LLMs) such as Llama, Gemini, Phi, and DeepSeek, as well as other generative AI tasks. MediaTek Genio 720 and Genio 520 specifications: Octa-core CPU; Genio 520 – 2x Arm Cortex-A78 up to 2.2 GHz (Commercial) or 2.0 GHz (Industrial), 6x Arm Cortex-A55 up to 2.0 GHz (Commercial) or […]
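
To illustrate why “edge-optimized” usually means quantized, here is a rough estimate of the memory needed just for model weights at different precisions. The parameter counts are illustrative assumptions rather than specific models supported by MediaTek, and the estimate ignores the KV cache, activations, and the operating system, so real requirements are higher.

```python
# Rough weight-memory estimate for a quantized LLM (weights only).
# Assumptions: KV cache, activations, and OS/runtime overhead are ignored,
# and the parameter counts below are illustrative, not MediaTek figures.

RAM_GB = 16  # maximum LPDDR5 capacity quoted for the Genio 720/520


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (decimal) at a given precision."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for params in (3e9, 8e9):
        for bits in (16, 8, 4):
            size = weight_memory_gb(params, bits)
            # Weights that fill all of RAM leave no room for anything else,
            # so only sizes strictly below RAM_GB count as fitting.
            fits = "fits" if size < RAM_GB else "does not fit"
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: "
                  f"{size:.1f} GB ({fits} in {RAM_GB} GB)")
```

With these assumptions, an 8-billion-parameter model needs about 16 GB in 16-bit precision but only about 4 GB at 4-bit, which is why quantization matters on a board with 16GB of RAM.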

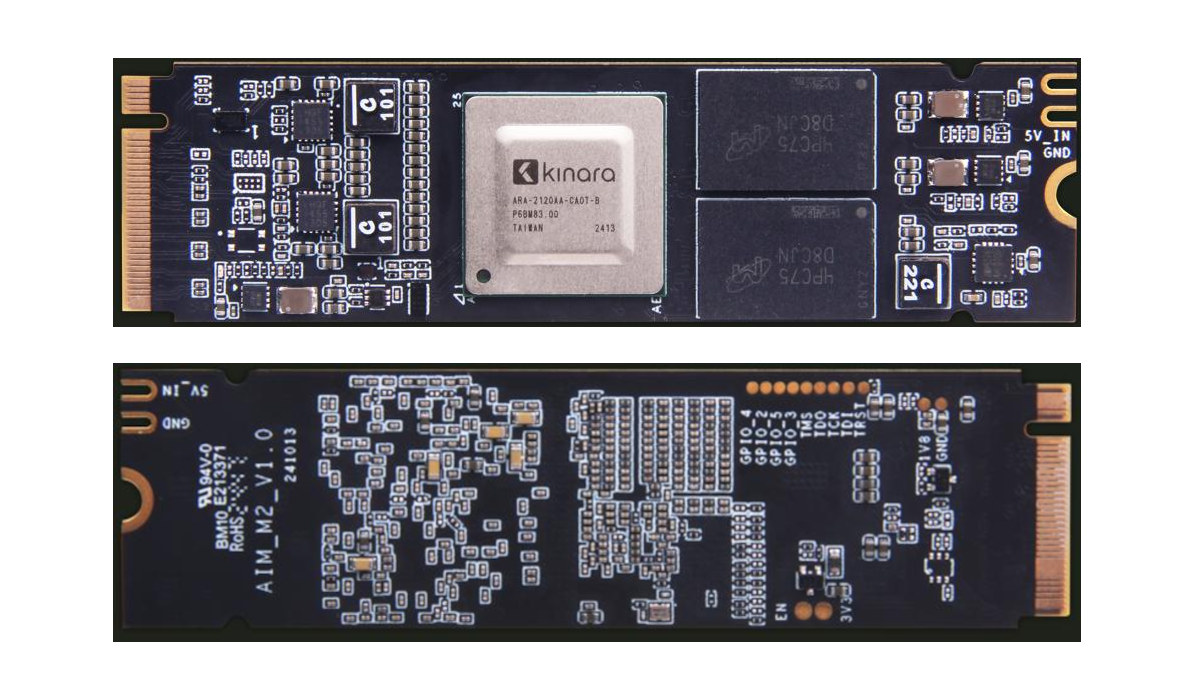

Geniatech AIM M2 M.2 module features Kinara Ara-2 40 TOPS AI accelerator

There are plenty of M.2 AI modules based on accelerators such as Hailo-8, MemryX MX3, or Axelera AI, but the Geniatech AIM M2 module is based on the Kinara Ara-2 40 TOPS AI accelerator, which we’ve yet to cover here on CNX Software. The Key-M module is designed to handle generative AI and transformer-based models such as Stable Diffusion at a lower price point than competitors, with typical power consumption under 2W in computer vision workloads. Target applications include AI assistants/Copilot, gaming, smart retail, physical security, and factory automation. Geniatech AIM M2 specifications: AI Accelerator – Kinara Ara-2 NPU with 40 TOPS of AI performance; Package – 17x17mm FCBGA; System Memory – 16GB RAM (4GB/8GB options); Host interface – PCIe Gen4 x4; Security – Secure boot and encrypted memory access; Misc – Heatsink cooling; Supply Voltage – 3.3V; Power Consumption – Under 2 Watts (typical); TDP – 12 Watts […]

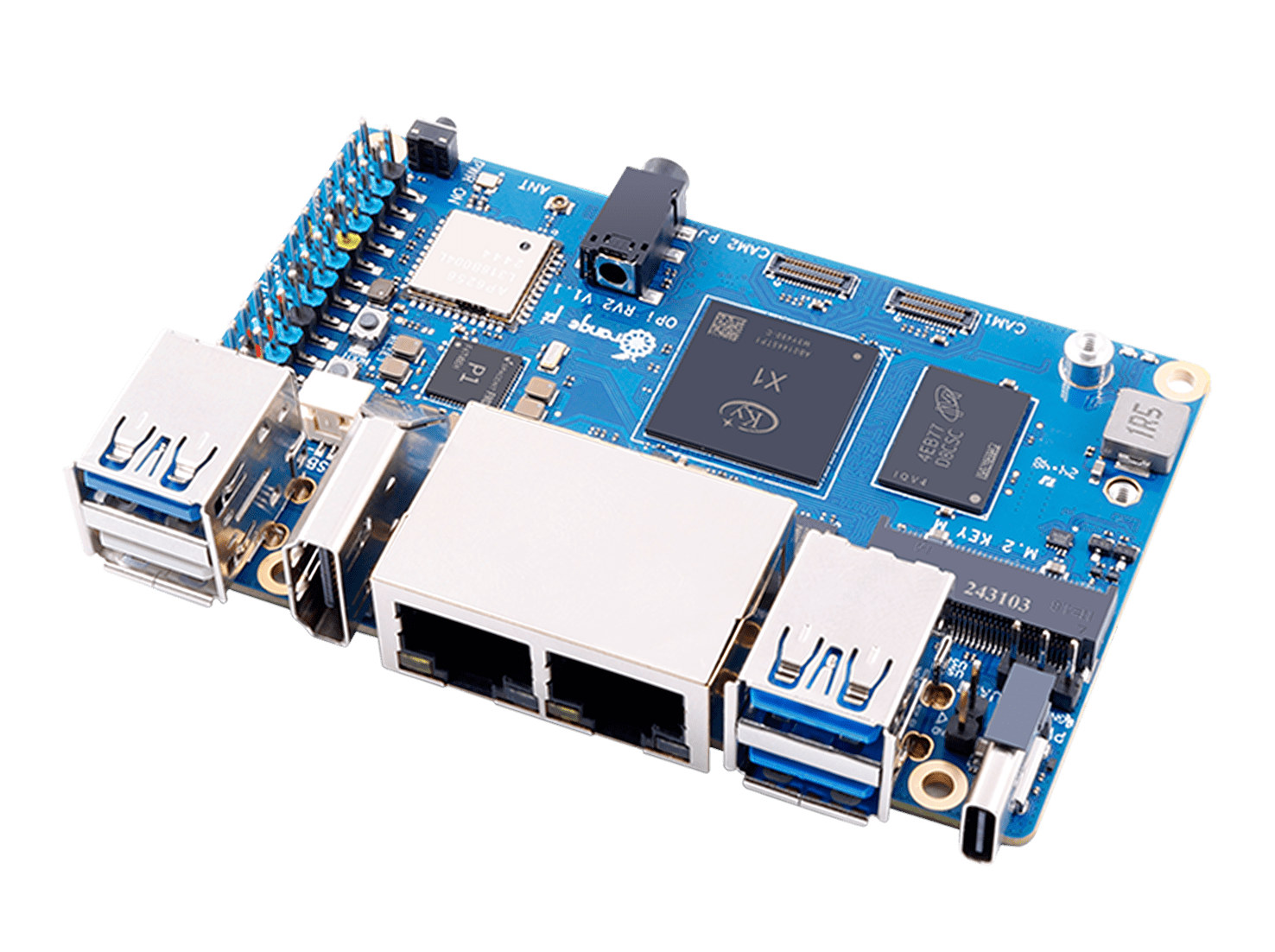

Orange Pi RV2 – A $30+ RISC-V SBC powered by Ky X1 octa-core SoC with a 2 TOPS AI accelerator

While the Orange Pi RV RISC-V SBC introduced at the Orange Pi Developer Conference 2024 last year has yet to launch (it should be available in a few days), the company has just launched the Orange Pi RV2 powered by the Ky X1 octa-core RISC-V SoC with a 2 TOPS AI accelerator, up to 8GB LPDDR4X, an optional eMMC flash module, two M.2 sockets for storage, dual gigabit Ethernet, WiFi 5, and more. While RISC-V has made a lot of progress over the years, Linux RISC-V SBCs have often been synonymous with relatively expensive developer hardware, since the software is often not ready for production, at least for applications using graphics. The Orange Pi RV2 addresses the cost issue, since the octa-core RISC-V SBC sells for just $30 to $49.90 depending on the configuration. Orange Pi RV2 specifications: SoC – Ky X1; CPU – 8-core 64-bit RISC-V processor; GPU – Not mentioned; VPU […]

Home Assistant 2025.3 released with dashboard view headers, tile card improvements, better SmartThings integration

The popular Home Assistant home automation platform has been updated to version 2025.3, which brings dashboard view headers, several tile card improvements, better map clustering, streamed responses from LLMs, and improved SmartThings integration. Let’s have a look at some of the improvements, starting with dashboard view headers, which allow users to add a title and welcome text to dashboards using Markdown and templates. You can also add badges next to the headers. Home Assistant 2025.3 also brings plenty of improvements to tile cards: a circular background is now shown around tiles that perform actions, while tiles that only show extra information have no circle; tile card features (e.g., an on/off button) can now be positioned inline in the tile card; new features include a switch toggle, a counter toggle (to increase, decrease, or reset a counter entity), and animations when hovering over a tile card; and the editor has been improved for ease of use. The new version of Home […]

The One Smart AI Pen – A ballpoint pen with Bluetooth and a microphone for translation, LLM integration, note taking (Crowdfunding)

You may have seen the “Sell me that pen. It’s AI-powered” meme if you are a social media user. It may have started as a joke, but Zakwan Ahmad turned the meme into reality with “The One Smart AI Pen”, which is basically a standard ballpoint pen with a battery, Bluetooth connectivity, a microSD card, and a microphone. The AI part is not really inside the pen, but in a smartphone app called Hearit.ai that lets users translate their voice input, query a range of LLMs such as ChatGPT, record meetings, or take notes, for example, to schedule events or meetings. The One Smart AI Pen specifications: “AI chip” – Not clear why it’s needed here… unless it transcribes audio into text inside the pen (as opposed to inside the phone); Storage – MicroSD card slot inside the pen; Wireless – Bluetooth 5.2 with up […]

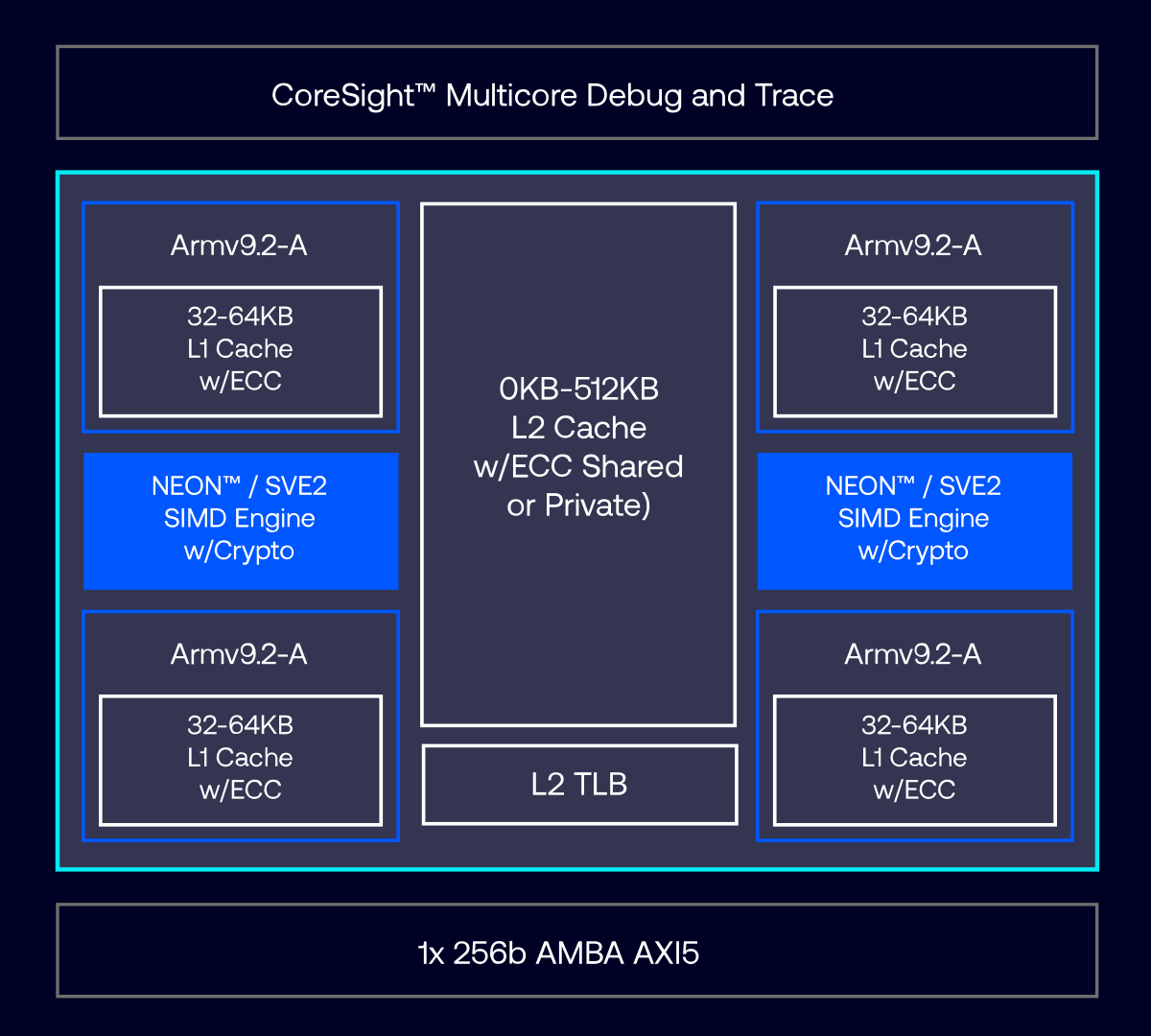

Arm Cortex-A320 low-power CPU is the smallest Armv9 core, optimized for Edge AI and IoT SoCs

Arm Cortex-A320 is a low-power Armv9 CPU core optimized for Edge AI and IoT applications, with up to 50% higher efficiency than the Cortex-A520 CPU core. It is the smallest Armv9 core unveiled so far. The Armv9 architecture was first introduced in 2021 with a focus on AI and specialized cores, followed by the first Armv9 cores – Cortex-A510, Cortex-A710, Cortex-X2 – unveiled later that year and targeting flagship mobile devices. Since then, we’ve seen Armv9 cores in a wider range of smartphones, high-end Armv9 motherboards, and TV boxes. The upcoming Rockchip RK3688 AIoT SoC also features Armv9 cores but targets high-end applications. The new Arm Cortex-A320 will expand Armv9 usage to a much wider range of IoT devices, including power-constrained Edge AI devices. Arm Cortex-A320 highlights: Architecture – Armv9.2-A (Harvard); Extensions – Up to Armv8.7 extensions, QARMA3 extensions, SVE2 extensions, Memory Tagging Extensions (MTE) (including Asymmetric MTE), Cryptography extensions, RAS extensions […]

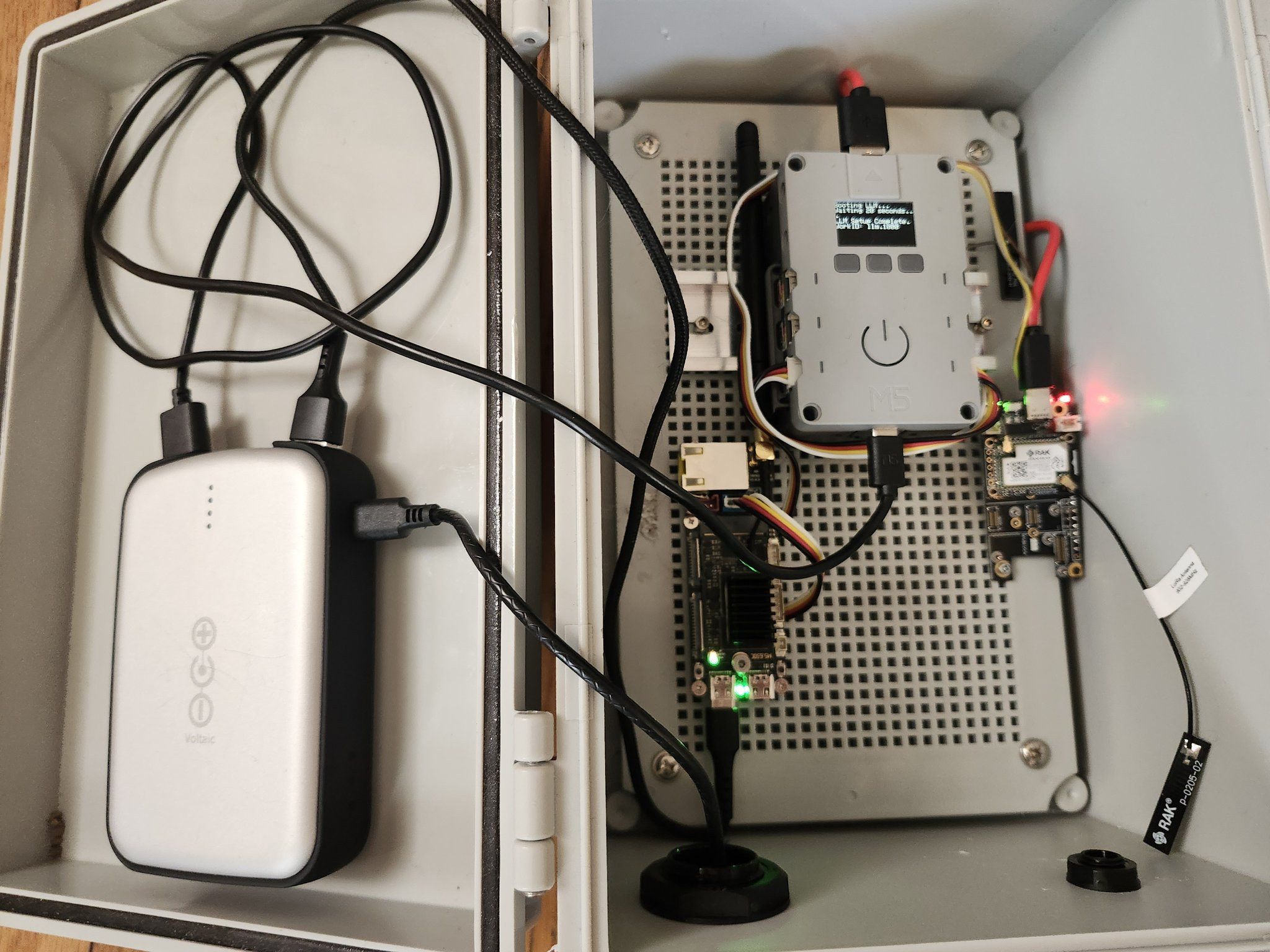

Solar-powered LLM over Meshtastic solution may provide life-saving instructions during disasters and emergencies

People are trying to run LLMs on all sorts of low-end hardware, often with limited usefulness, and when I first saw a solar LLM over Meshtastic demo on X, I laughed. I did not see the point, since LoRa hardware is usually really low-end, and the open-source Meshtastic firmware is typically used for off-grid messaging and GPS location sharing. But after thinking more about it, it could prove useful for receiving information on mobile devices during disasters, when power and internet connectivity cannot be taken for granted. Let’s check Colonel Panic’s solution first. The short post only mentions it’s a solar LLM over Meshtastic using M5Stack hardware. On the left, there appears to be a power bank charged over USB (through a USB solar panel?) with two USB outputs powering a controller and a board on the right. The main controller with a small display and enclosure is an ESP32-powered […]