YouTuber and tech enthusiast Binh Pham has recently built the LLMStick, a portable, plug-and-play AI and LLM device housed in a USB stick and built around a Raspberry Pi Zero W. The device demonstrates the concept of a local, plug-and-play LLM that you can use without an internet connection. After DeepSeek shook the world with its performance and open-source accessibility, we have seen tools like Exo that allow you to run large language models (LLMs) on a cluster of devices such as computers, smartphones, and single-board computers, effectively distributing the processing load. We have also seen Radxa release instructions to run DeepSeek R1 (Qwen2 1.5B) on a Rockchip RK3588-based SBC with a 6 TOPS NPU. Pham chose the llama.cpp project since it's specifically designed for devices with limited resources. However, running llama.cpp on the Raspberry Pi Zero W wasn't straightforward, and he had to deal with architecture incompatibility as the old […]

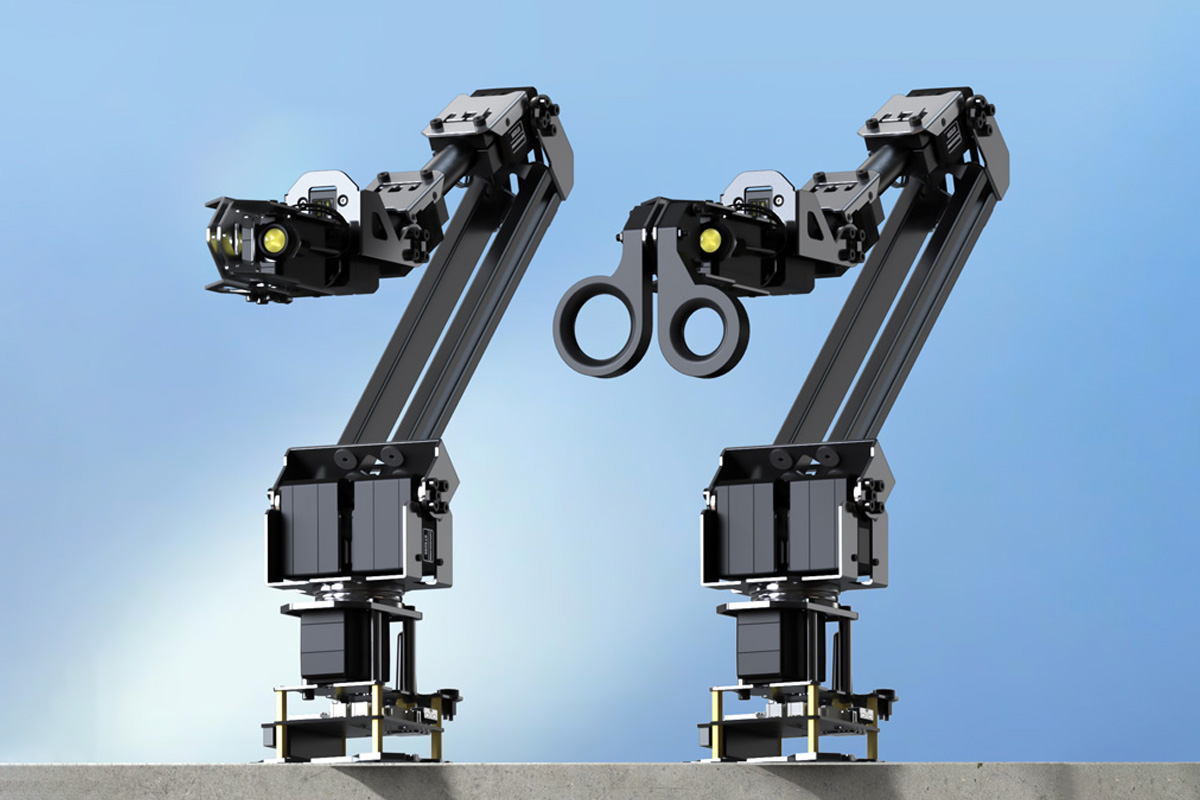

Waveshare ESP32 robotic arm kit with 5+1 DoF supports ROS2, LeRobot, and Jetson Orin NX integration

Waveshare has recently released the RoArm-M3-Pro and RoArm-M3-S, two variants of a 5+1 DoF high-torque ESP32 robotic arm kit. The main difference between them is that the RoArm-M3-Pro features all-metal ST3235 bus servos for durability and longevity, while the RoArm-M3-S uses standard servo motors that are less durable for long-term use. These robotic arms feature a lightweight structure, a 360° omnidirectional base, and five flexible joints, which together provide a 1m workspace and a 200-gram payload at 0.5m. A 2-DoF wrist joint enables multi-dimensional clamping and precise force control. The arm integrates an ESP32 MCU supporting multiple wireless control modes via a web app, as well as inverse kinematics for accurate positioning, curve velocity control for smooth motion, and adaptive force control. The design is open source and ROS2-compatible, and it supports secondary development via JSON commands, plus ESP-NOW for multi-device communication. Compatible with the LeRobot AI framework, […]

FOSSASIA 2025 – Operating systems, open hardware, and firmware sessions

The FOSSASIA Summit is the closest thing we have to FOSDEM in Asia. This free and open-source event is held every year in Asia, and FOSSASIA 2025 will take place in Bangkok, Thailand on March 13-15. It won't have quite as many speakers and sessions as FOSDEM 2025 (968 speakers, 930 events), but the 3-day event will still feature over 170 speakers and more than 200 sessions. Most of the sessions cover high-level software topics such as AI and data science, databases, cloud, and web3, but I also noticed a few sessions in the "Hardware and firmware" and "Operating System" tracks, which are closer to what we cover here at CNX Software. So I'll make a virtual schedule based on those two tracks to check out any potentially interesting talks. None of those sessions take place on March 13, so we'll only have a schedule for March […]

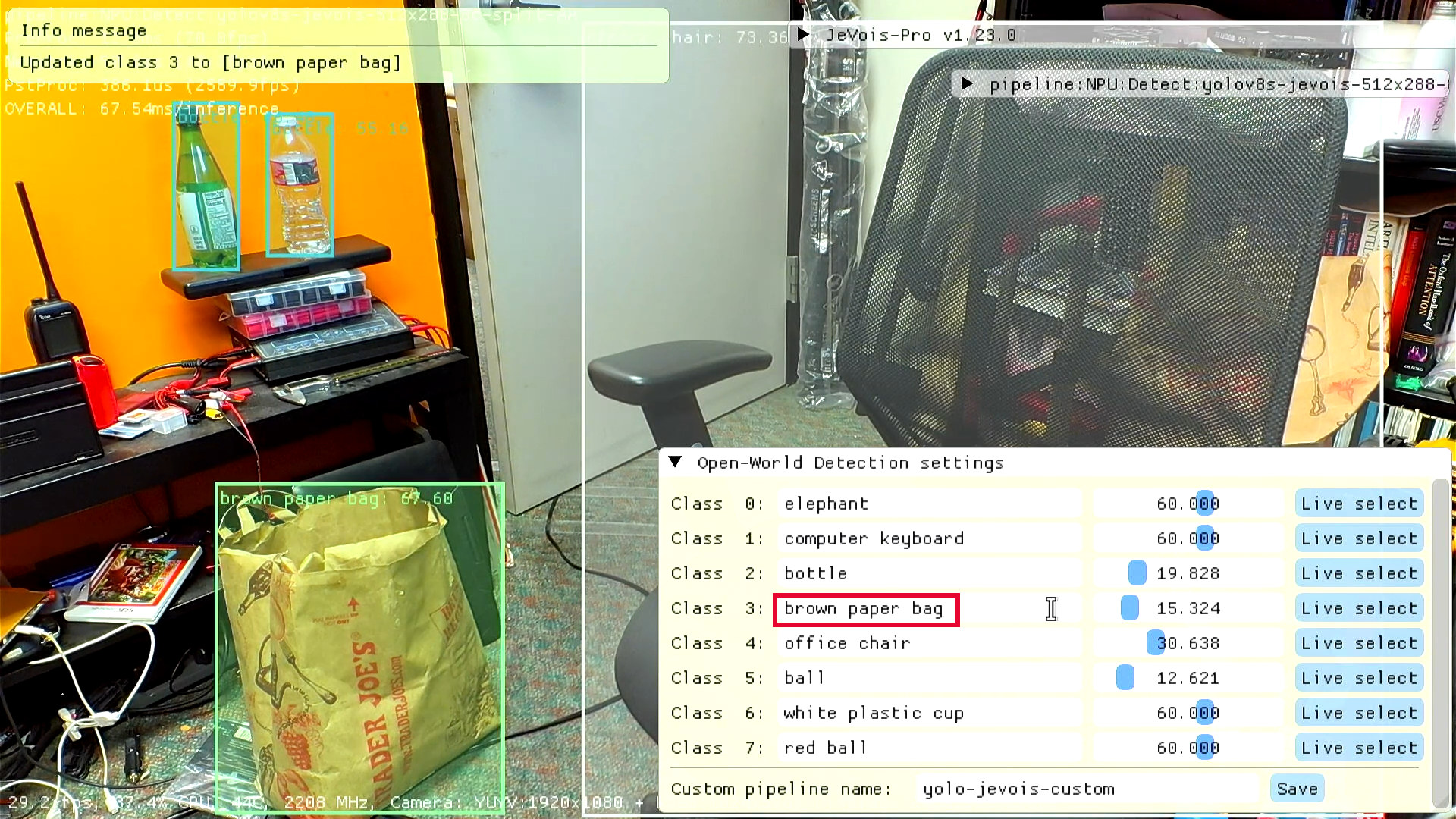

YOLO-Jevois leverages YOLO-World to enable open-vocabulary object detection at runtime, no dataset or training needed

YOLO is one of the most popular edge AI computer vision models. It detects multiple objects and works out of the box for the objects it has been trained on. But adding another object typically involves a lot of work, as you'd need to collect a dataset, manually annotate the objects you want to detect, train the network, and then possibly quantize it for edge deployment on an AI accelerator. This is basically true for all computer vision models, and we've already seen Edge Impulse facilitate the annotation process with GPT-4o and use NVIDIA TAO to train TinyML models for microcontrollers. However, researchers at jevois.org have managed to do something even more impressive with YOLO-Jevois, an "open-vocabulary object detection" model based on Tencent AI Lab's YOLO-World that lets users add new objects to YOLO at runtime by simply typing words or selecting part of the image. It also updates class definitions on […]
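Conceptually, YOLO-World-style open-vocabulary detectors score each detected region against embeddings of the class names (YOLO-World uses a CLIP text encoder for this) instead of relying on a fixed set of trained output classes, which is why a new class can be added just by typing a word. The Python sketch below is a toy illustration of that idea only, not YOLO-Jevois or YOLO-World code: the `OpenVocabClassifier` class, the 0.8 similarity threshold, and the three-dimensional embeddings are all hypothetical. In a real model, text (or selected image region) embeddings would come from an encoder and region embeddings from the detector itself.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


class OpenVocabClassifier:
    """Hypothetical, toy stand-in for an open-vocabulary classification head.

    Each class is just a name mapped to an embedding vector, so adding a class
    at runtime only adds one entry; no network weights are retrained.
    """

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # hypothetical minimum similarity to accept a match
        self.vocab = {}             # class name -> embedding vector

    def add_class(self, name, embedding):
        # In a real system the embedding would come from a text or image encoder.
        self.vocab[name] = embedding

    def classify(self, region_embedding):
        """Return the most similar class name, or None if nothing clears the threshold."""
        best_name, best_score = None, self.threshold
        for name, emb in self.vocab.items():
            score = cosine(region_embedding, emb)
            if score >= best_score:
                best_name, best_score = name, score
        return best_name


if __name__ == "__main__":
    clf = OpenVocabClassifier(threshold=0.8)
    clf.add_class("person", [1.0, 0.0, 0.0])
    region = [0.1, 0.1, 0.99]      # made-up features of an object not yet in the vocabulary
    print(clf.classify(region))    # None: no known class is similar enough
    clf.add_class("cup", [0.0, 0.0, 1.0])
    print(clf.classify(region))    # cup: recognized after adding one embedding
```

The design point the sketch tries to capture is that the vocabulary is data rather than trained weights, so extending it is cheap; the trade-off is that detection quality then depends on how well the embedding represents the typed word or selected image patch.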

Silicon Labs unveils low-cost BG22L BLE 5.4 and BG24L BLE 6.0 SoCs

Silicon Labs has unveiled the BG22L and BG24L SoCs for low-cost, ultra-low-power Bluetooth LE connectivity. These are Lite versions of the company's BG22 and BG24 SoC families introduced in 2020 and 2022 respectively. The 38.4 MHz Silabs BG22L Arm Cortex-M33 SoC targets high-volume, cost-sensitive Bluetooth 5.4 applications like asset tracking tags and small appliances. In comparison, the 78 MHz BG24L Cortex-M33 SoC offers an affordable entry-level solution with AI/ML acceleration and Bluetooth 6.0 Channel Sounding to locate items or implement access control in crowded areas such as warehouses and multi-family housing. Since the specs for the BG22L and BG24L are similar to those of the BG22 and BG24 chips, I won't reproduce them here, and will instead highlight the main features and cost-saving measures.

Silicon Labs BG22L Bluetooth LE 5.4 SoC

Silicon Labs BG22L highlights:

- MCU – Arm Cortex-M33 @ 38.4 MHz with DSP and FPU (BG22 is clocked at […]

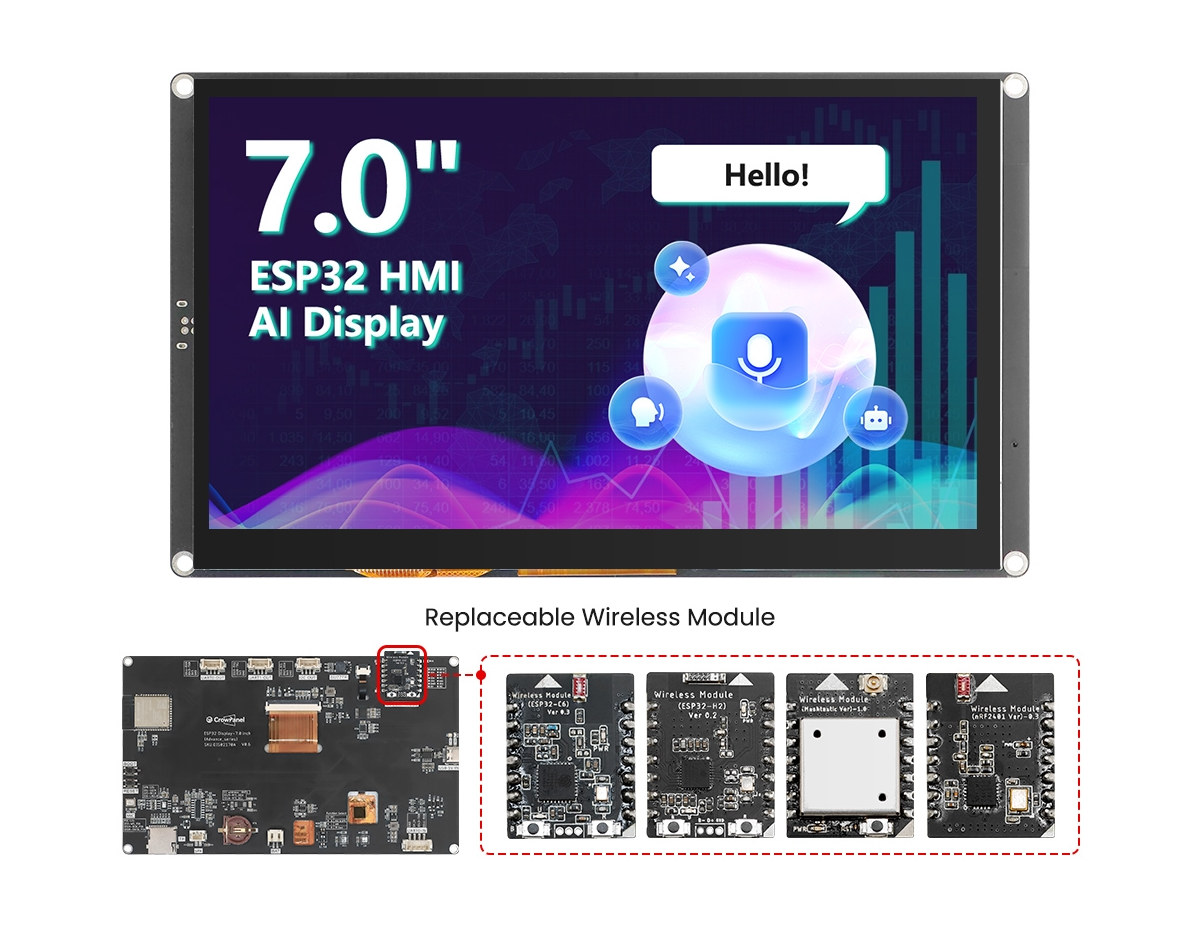

CrowPanel Advance: ESP32-S3 displays with replaceable WiFi 6, Thread, Zigbee, LoRa, and 2.4GHz wireless modules

Elecrow's CrowPanel Advance is a family of 2.8-inch to 7-inch ESP32-S3 WiFi and BLE displays that support replaceable modules for Thread/Zigbee/Matter, WiFi 6, 2.4GHz, and LoRa (Meshtastic) connectivity. These are updates of the CrowPanel (Basic) displays introduced last year. They feature an ESP32-S3-WROOM-1-N16R8 module soldered on the back, plus headers that accept ESP32-H2, ESP32-C6, SX1262 LoRa transceiver, or nRF2401 2.4GHz wireless MCU modules.

CrowPanel Advance 7-inch specifications:

- Wireless Module – ESP32-S3-WROOM-1-N16R8
  - SoC – ESP32-S3
    - CPU – Dual-core LX7 processor at up to 240MHz
    - Memory – 512KB SRAM, 8MB PSRAM
    - Storage – 384KB ROM
    - Wireless – WiFi 4 and Bluetooth 5.0 with BLE
  - Storage – 16MB flash
  - PCB antenna
- Storage – MicroSD card slot
- Display
  - 7.0-inch IPS capacitive touchscreen display with 800×480 resolution (SC7277 driver)
  - Viewing Angle: 178°
  - Brightness: 400 cd/m² (Typ.)
  - Color Depth – 16-bit
  - Active Area – 156 x 87mm
- Audio
  - Built-in microphone
  - Speaker connector
- Wireless Expansion
  - ESP32-H2 module with 802.15.4 radio […]

Seeed Studio introduces ESP32-C3-based Modbus Vision RS485 and SenseCAP A1102 LoRaWAN outdoor Edge AI cameras

Seeed Studio has recently released the Modbus Vision RS485 and SenseCAP A1102 (LoRaWAN) outdoor Edge AI cameras, which combine the ESP32-C3 SoC (through the XIAO-ESP32C3 module) for WiFi with the Himax WiseEye2 processor for vision AI. Both are IP66-rated AI vision cameras designed for home and industrial applications. The RS485 camera is designed for industrial systems and features a Modbus interface, making it suitable for factory automation and smart buildings. The SenseCAP A1102 uses LoRaWAN for long-range, low-power monitoring in remote locations. Both can run AI models for tasks like object detection and facial recognition. Besides its built-in RS485 interface, the Modbus Vision RS485 also supports LoRaWAN and 4G LTE connectivity via external Data Transfer Units (DTUs). With over 300 pre-trained AI models available, the camera can handle object detection and classification tasks, making it suitable for industrial automation, smart agriculture, environmental monitoring, and other AI-driven applications requiring high performance. The SenseCAP A1102 […]

FOSDEM 2025 schedule – Embedded, Open Hardware, RISC-V, Edge AI, and more

FOSDEM 2025 will take place on February 1-2, with over 8000 developers meeting in Brussels to discuss open-source software and hardware projects. The free-to-attend (and participate) "Free and Open Source Software Developers' European Meeting" grows every year, and in 2025 there will be 968 speakers, 930 events, and 74 tracks. As every year since FOSDEM 2015, which had (only) 551 events, I'll create a virtual schedule with the sessions most relevant to the topics covered on CNX Software from the "Embedded, Mobile and Automotive" and "Open Hardware and CAD/CAM" devrooms, as well as other devrooms such as "RISC-V", "FOSS Mobile Devices", and "Low-level AI Engineering and Hacking".

FOSDEM 2025 Day 1 – Saturday, February 1

10:30 – 11:10 – RISC-V Hardware – Where are we? by Emil Renner Berthing

I'll talk about the current landscape of available RISC-V hardware powerful enough to run Linux and hopefully give a better overview of what to […]