LLMStick – An AI and LLM USB device based on Raspberry Pi Zero W and optimized llama.cpp

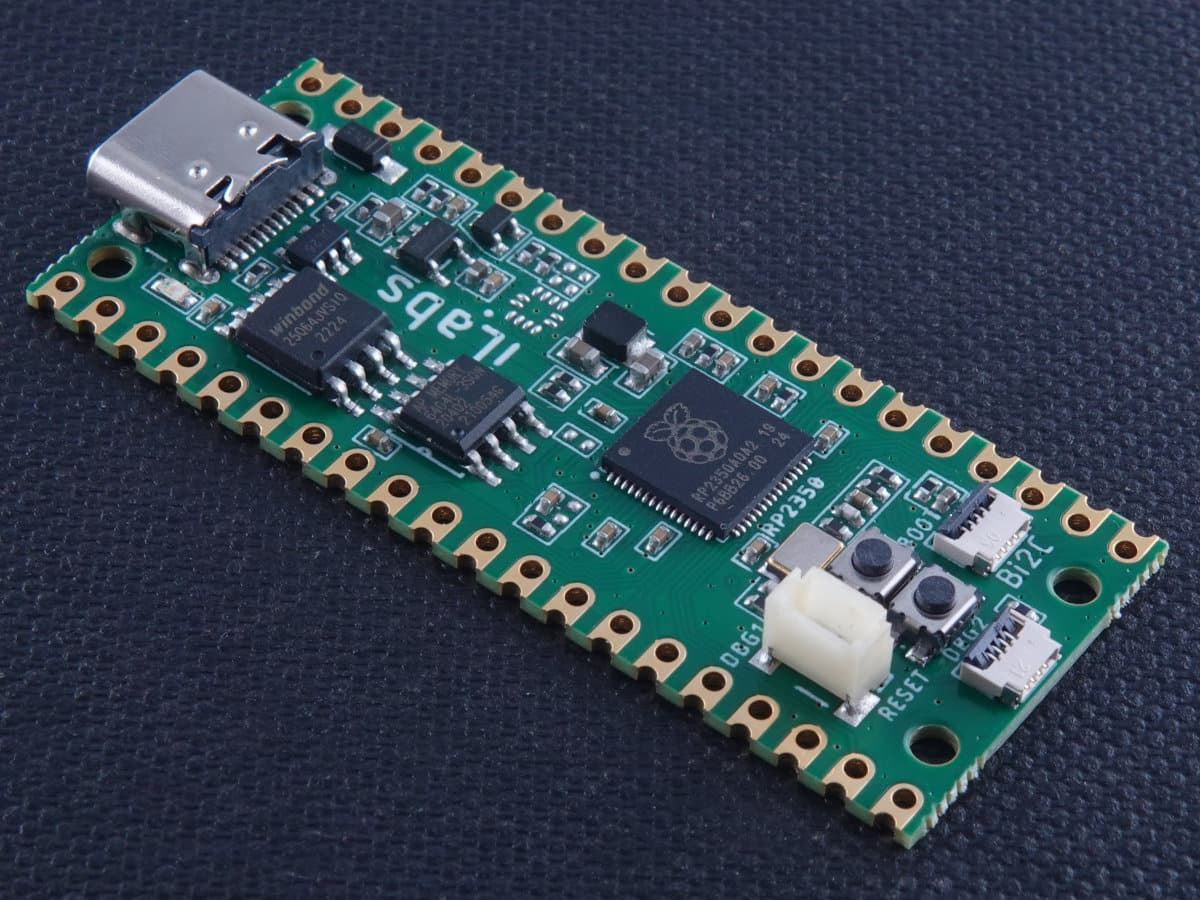

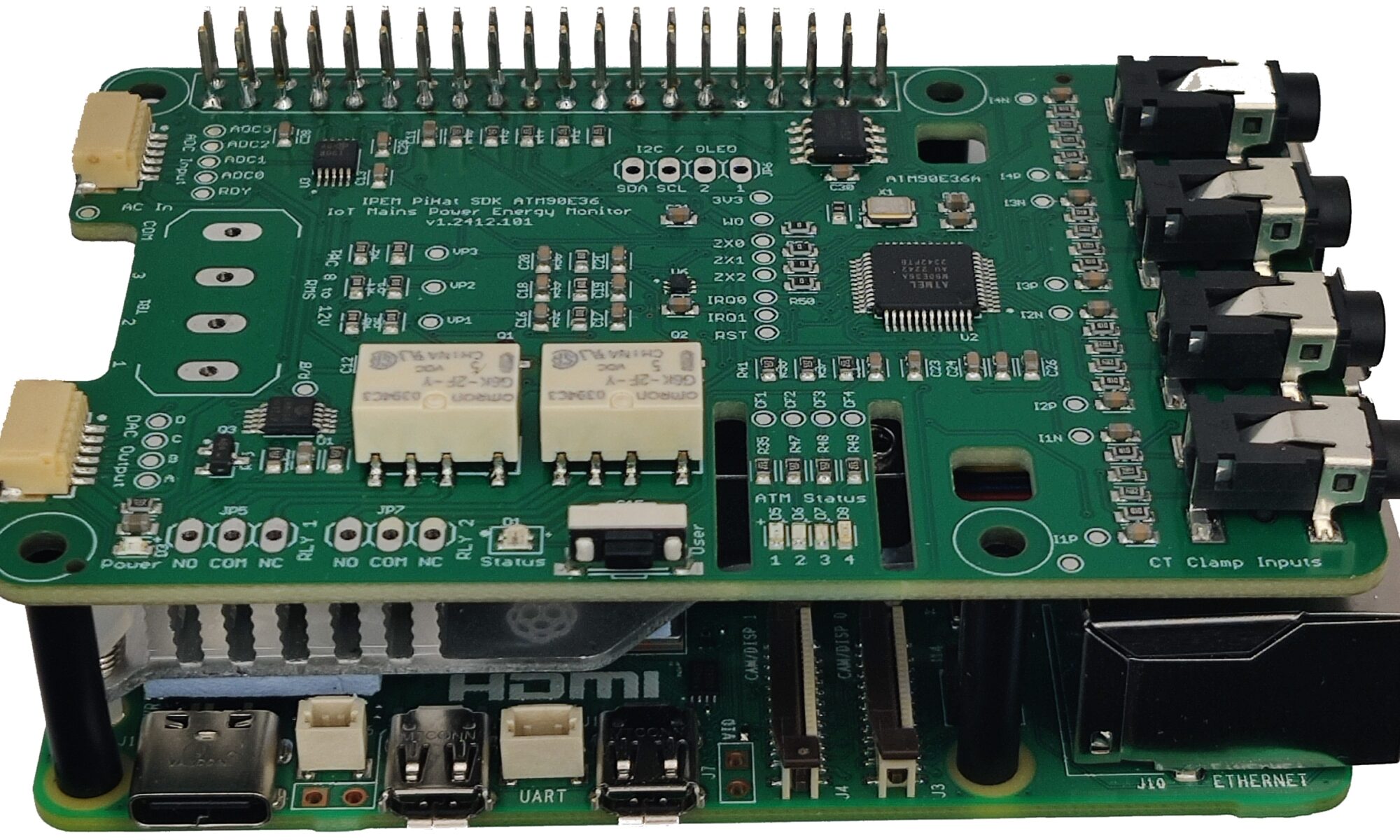

YouTuber and tech enthusiast Binh Pham has built the LLMStick, a portable, plug-and-play AI and LLM device housed in a USB stick and built around a Raspberry Pi Zero W. The device demonstrates the concept of a local, plug-and-play LLM that you can use without an internet connection.

After DeepSeek drew worldwide attention with its performance and open-source accessibility, we have seen tools like Exo that let you run large language models (LLMs) on a cluster of devices such as computers, smartphones, and single-board computers, effectively distributing the processing load. We have also seen Radxa release instructions to run DeepSeek R1 (Qwen2 1.5B) on a Rockchip RK3588-based SBC with a 6 TOPS NPU.

Pham chose the llama.cpp project because it is designed for devices with limited resources. However, getting llama.cpp to run on the Raspberry Pi Zero W was not straightforward, and he ran into architecture incompatibilities, as the old […]