AAEON UP Squared Pro 710H Edge is a mini PC designed for edge AI applications, with an onboard 26 TOPS Hailo-8 edge AI accelerator and an Intel Processor N97 or Core i3-N305 Alder Lake-N CPU. The fanless edge AI mini PC comes with up to 16GB of soldered-on LPDDR5 RAM and up to 128GB of eMMC flash, and offers dual 4K video output through HDMI and DisplayPort connectors, dual 2.5GbE, optional WiFi and cellular connectivity, two USB 3.2 ports, an RS232/RS422/RS485 COM port, a 40-pin female GPIO header, and a wide 12 to 36V DC input. UP Squared Pro 710H Edge specifications: SoC – Alder Lake-N series (one or the other): Intel Processor N97 quad-core processor up to 3.6 GHz with 6MB cache, 24EU Intel UHD Graphics Gen 12 @ 1.2 GHz, TDP: 12W; or Intel Core i3-N305 octa-core processor up to 3.8 GHz with 6MB cache, 32EU Intel UHD Graphics Gen 12 @ 1.25 GHz; […]

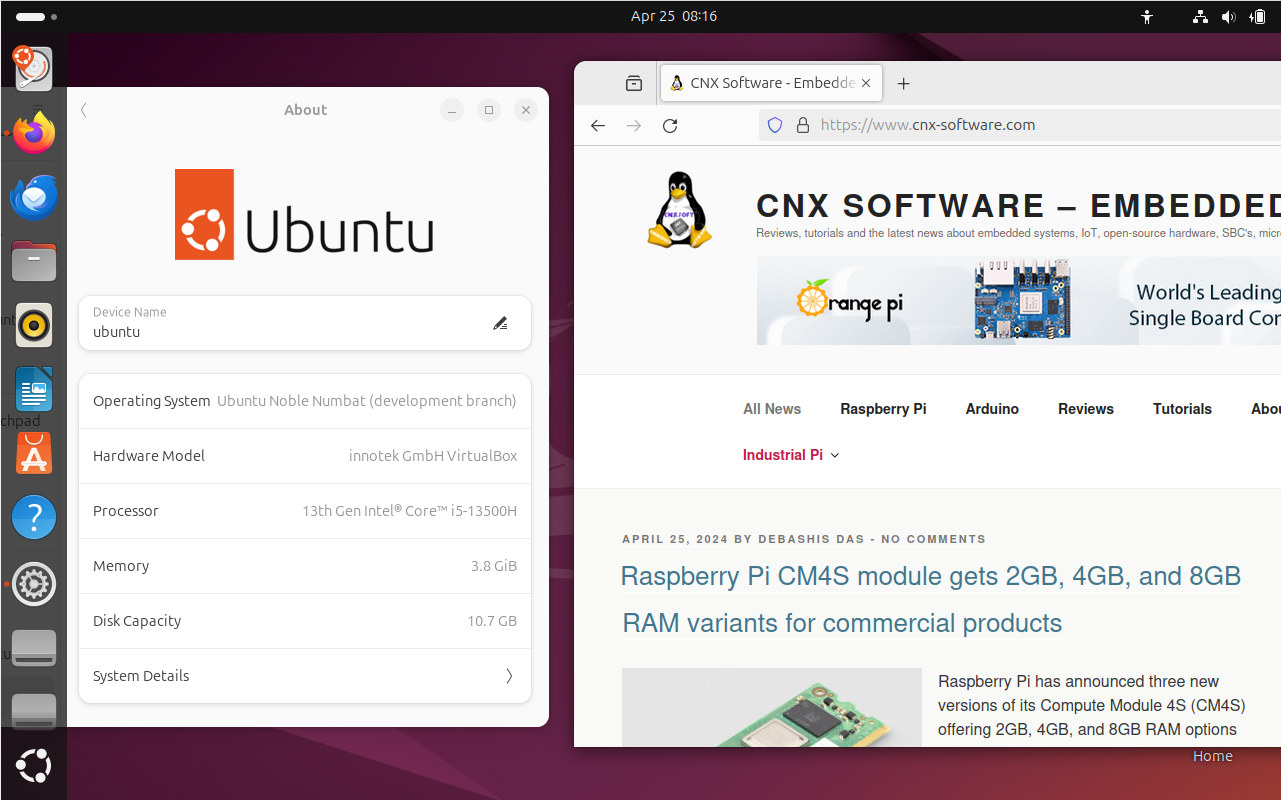

GEEKOM XT12 Pro review – Part 3: Ubuntu 24.04 on an Intel Core i9-12900H mini PC

We’ve already looked at the GEEKOM XT12 Pro specifications and hardware design in the first part of the review, and followed up by reviewing the Intel Core i9-12900H mini PC with Windows 11 Pro in the second part. We’ve now had time to test the GEEKOM XT12 Pro with the just-released Ubuntu 24.04 operating system to evaluate its compatibility and performance in Linux. In this third and final part of the review, we’ll test features in detail, evaluate performance with benchmarks, test storage and network capabilities, check YouTube video playback at 4K and 8K resolutions, run a stress test to check the cooling solution, and provide numbers for fan noise and power consumption. Ubuntu 24.04 installation and system information We shrank the Windows 11 partition to half its size in order to install Ubuntu 24.04 in a dual-boot configuration. After that, we inserted a USB […]

Muse Book laptop features SpacemiT K1 octa-core RISC-V AI processor, up to 16GB RAM

SpacemiT, a Chinese chip design company focused on RISC-V, recently unveiled the Muse Book laptop based on the K1 octa-core RISC-V chip. Unlike typical laptops, it has several unique features and is mainly sold to hardware engineers and DIY enthusiasts. The Muse Book runs Bianbu OS, a Debian-based operating system optimized for the SpacemiT K1 octa-core RISC-V SoC. Let’s first take a look at its external interfaces. On the left side of the laptop, there are two USB Type-C ports, a USB 3.0 Type-A port, a 3.5mm headphone jack, a microSD card slot, and a reset pinhole. The 8-pin header on the right side of the laptop is quite interesting, as SpacemiT hopes the Muse Book can become one of the most convenient hardware development platforms for RISC-V. In addition to the power pins, users will find […]

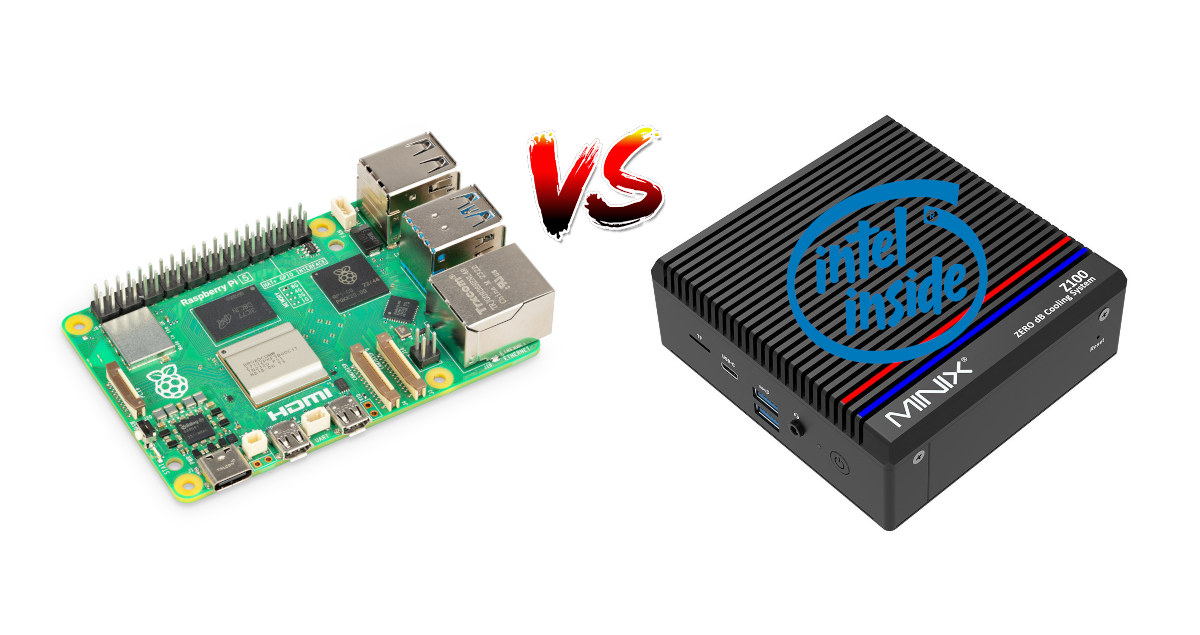

Raspberry Pi 5 vs Intel N100 mini PC comparison – Features, Benchmarks, and Price

The Raspberry Pi 5 Arm SBC is now powerful enough to challenge some Intel systems in terms of performance, while Intel has made its Alder Lake-N family, notably the Intel Processor N100, inexpensive and efficient enough to challenge Arm systems on price, form factor, and power consumption. So we’ll try to match the Raspberry Pi 5 against typical Intel Processor N100 mini PCs by comparing features/specifications, performance (benchmarks), and pricing for different use cases. That’s something I’ve wanted to look into for a while, but I was busy with reviews and other obligations (Hello, Mr. Taxman!), and this weekend I finally had some spare time to carry out the comparison. Raspberry Pi 5 vs Intel N100 mini PC specifications I’ll start by comparing the specifications of a Raspberry Pi 5 against those of typical Intel Processor N100-based mini PCs, also mentioning optional features […]

Azulle Access Pro Intel N100 PC stick ships with Windows 11, Linux, or Zoom

Azulle Access Pro is a PC stick based on an Intel Processor N100 quad-core Alder Lake-N processor with up to 8GB RAM, 128GB eMMC flash, and a male HDMI port, offered with Windows 11 Pro, Linux, or “Zoom” operating systems (more on that below). The mini PC also provides a microSD card slot, gigabit Ethernet, WiFi 6 and Bluetooth 5.2 with an external antenna, USB 3.0 Type-A and Type-C ports, and a 3.5mm audio jack. We’ve written about pocket-sized mini PCs based on Alder Lake-N processors before, for instance, the MeLe PCG02 Pro N100 or the slightly wider MeLe Quieter4C, but the Azulle model is different since its male HDMI connector allows users to plug it directly into a TV or monitor without a cable. Azulle Access Pro specifications: SoC – Intel Processor N100 quad-core Alder Lake-N processor @ up to 3.4 GHz (Turbo) […]

Ubuntu 24.04 LTS “Noble Numbat” released with Linux 6.8, up to 12 years of support

Canonical has just released Ubuntu 24.04 LTS “Noble Numbat”, a little over two years after Ubuntu 22.04 LTS “Jammy Jellyfish”. The new version of the operating system comes with the recent Linux 6.8 kernel, GNOME 46, and a range of updates and new features we’ll discuss in this post. As a long-term support release, Ubuntu 24.04 LTS gets a 12-year commitment for security maintenance and support: five years of free security maintenance on the main Ubuntu repository, and Ubuntu Pro extending that commitment to 10 years on both the main and universe repositories (Ubuntu Pro is also free for individuals and small companies with up to 5 devices). Ubuntu Pro subscribers who purchase the Legacy Support add-on can extend this by a further two years, for 12 years in total. Canonical explains the Linux 6.8 kernel brings improved syscall performance, nested KVM support on ppc64el, and access to the […]
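The three support tiers stack up as 5, 10, and 12 years. As a rough illustration, the Python sketch below turns them into end-of-support months, assuming each period is counted from the April 2024 release month. Canonical's published end dates may differ by a month or so, and the names `RELEASE`, `SUPPORT_YEARS`, and `end_of_support` are only illustrative.

```python
from datetime import date

# Assumption: support periods are counted from the April 2024 release month.
RELEASE = date(2024, 4, 1)

# Support tiers described above, in years from release.
SUPPORT_YEARS = {
    "standard (free, main repository)": 5,
    "Ubuntu Pro (main + universe)": 10,
    "Ubuntu Pro + Legacy Support add-on": 12,
}


def end_of_support(release: date, years: int) -> date:
    """Return the date `years` after `release`, keeping the same month and day."""
    return release.replace(year=release.year + years)


for tier, years in SUPPORT_YEARS.items():
    print(f"{tier}: until about {end_of_support(RELEASE, years):%B %Y}")
```

Under that assumption, the Legacy Support add-on's extra two years move the horizon from roughly 2034 to roughly 2036.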

Firefly AIBOX-1684X compact AI Box delivers 32 TOPS for large language models, image generation, video analytics, and more

Firefly AIBOX-1684X is a compact AI Box based on the SOPHON BM1684X octa-core Arm Cortex-A53 processor with a 32 TOPS AI accelerator. It is suitable for large language models (LLMs) such as Llama 2, for Stable Diffusion image generation, and for traditional CNN and RNN neural network architectures. Firefly had already released several designs based on the SOPHON BM1684X AI processor, including the full-featured Firefly EC-A1684XJD4 FD Edge AI computer and the AIO-1684XQ motherboard. The AIBOX-1684X AI Box offers the same level of performance with fewer interfaces, in a compact enclosure measuring just 90.6 x 84.4 x 48.5 mm. AIBOX-1684X AI box specifications: SoC – SOPHGO SOPHON BM1684X; CPU – Octa-core Arm Cortex-A53 processor @ up to 2.3 GHz; TPU – Up to 32 TOPS (INT8), 16 TFLOPS (FP16/BF16), 2 TFLOPS (FP32); VPU – Up to 32-channel H.265/H.264 1080p25 video decoding, up to 32-channel 1080p25 HD video processing (decoding + AI analysis), up […]

Testing ntttcp as an iperf3 alternative in Windows 11 (and Linux)

ntttcp (Windows NT Test TCP) is a network benchmarking utility similar to iperf3. It is written by Microsoft, works in both Windows and Linux, and is recommended by Microsoft over iperf3, so we’ll test it in this mini review. iperf3 is our utility of choice for reviews of single board computers and mini PCs running either Windows or Linux. However, we’ve noticed that WiFi is generally much faster in Ubuntu 22.04 than in Windows 11, while Ethernet (up to 2.5GbE) usually performs just as well in both. So when XDA Developers noticed a post by Microsoft saying iperf3 should not be used on Windows 11, it caught my attention. Microsoft explains iperf3 should not be used in Windows for three main reasons: The maintainer of iperf – ESnet (Energy Sciences Network) – says “iperf3 is not officially supported on Windows, but iperf2 is. We recommend you use iperf2. Some people […]
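iperf3 remains the baseline in this comparison. The minimal Python sketch below shows how its results can be collected from a script. `iperf3 -J` prints a JSON report, and for TCP tests the totals are stored under `end.sum_sent` and `end.sum_received`. The helper names `run_iperf3` and `iperf3_tcp_mbps` are hypothetical. The sketch assumes an iperf3 server (`iperf3 -s`) is already running on the target machine, and it is not necessarily how the numbers in our reviews were produced.

```python
import json
import subprocess


def iperf3_tcp_mbps(report: dict) -> tuple[float, float]:
    """Extract (sent, received) TCP throughput in Mbit/s from an iperf3 JSON report.

    Assumes a TCP test: iperf3 then reports totals under end.sum_sent and
    end.sum_received (UDP tests use end.sum instead).
    """
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e6
    received = end["sum_received"]["bits_per_second"] / 1e6
    return sent, received


def run_iperf3(server: str, seconds: int = 10, reverse: bool = False) -> dict:
    """Run an iperf3 TCP client against `server` and return the parsed JSON report."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: the server sends, the client receives
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)
```

For example, `iperf3_tcp_mbps(run_iperf3("192.168.1.10", reverse=True))` would measure the download direction from a hypothetical server at 192.168.1.10. Testing both directions is worthwhile for WiFi, where upload and download throughput can differ.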