Teledyne DALSA has recently announced the Tetra line scan camera family with 2.5GbE networking, up to 8K resolution, and a line rate of up to 150 kHz, offered in monochrome or color versions. Traditional cameras (aka area scan cameras) capture a full frame at once, while line scan cameras capture only one line at a time and scan an object or scene as it moves past the camera. This yields a higher-resolution image, provided the scan rate and the motion of the object are synchronized, for instance, on a production line. Line scan cameras like the Tetra are typically used for quality control at the factory, document scanning, license plate scanning, and scientific research (e.g. satellite imaging). Teledyne Tetra specifications: Color cameras Tetra 2k Color – TL-GC-02K05T-00 Resolution – 2048 x 3 Pixel size – 14 μm Max line rate – 50 kHz x 3 Tetra 4k Color – […]
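
To make the synchronization requirement concrete, here is a minimal sketch of the arithmetic, assuming square pixels are wanted in the scanned image: the line rate must equal the object speed divided by the size of one pixel on the object. The function names, the 512 mm field of view, and the 2 m/s conveyor speed are illustrative assumptions and do not come from Teledyne documentation; only the 2048-pixel line and the 50 kHz maximum line rate are taken from the specifications above.

```python
def required_line_rate_hz(speed_mm_s: float, fov_width_mm: float, pixels_per_line: int) -> float:
    """Line rate needed so one scanned line covers exactly one pixel of travel."""
    # Size of one pixel projected onto the object (assumed optics, not a camera spec)
    pixel_size_mm = fov_width_mm / pixels_per_line
    return speed_mm_s / pixel_size_mm


def max_speed_mm_s(max_line_rate_hz: float, fov_width_mm: float, pixels_per_line: int) -> float:
    """Fastest object speed the camera can follow without stretching the image."""
    return max_line_rate_hz * fov_width_mm / pixels_per_line


if __name__ == "__main__":
    # Hypothetical setup: 2048-pixel line looking at a 512 mm wide conveyor moving at 2 m/s
    rate = required_line_rate_hz(2000, 512, 2048)
    print(f"Required line rate: {rate / 1000:.1f} kHz")
    # Speed limit for the same setup with a 50 kHz maximum line rate
    limit = max_speed_mm_s(50_000, 512, 2048)
    print(f"Maximum conveyor speed: {limit / 1000:.1f} m/s")
```

In practice, the line trigger is usually derived from an encoder on the conveyor rather than from a fixed rate, so the calculation above is mostly useful for checking whether a given camera is fast enough for the line.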

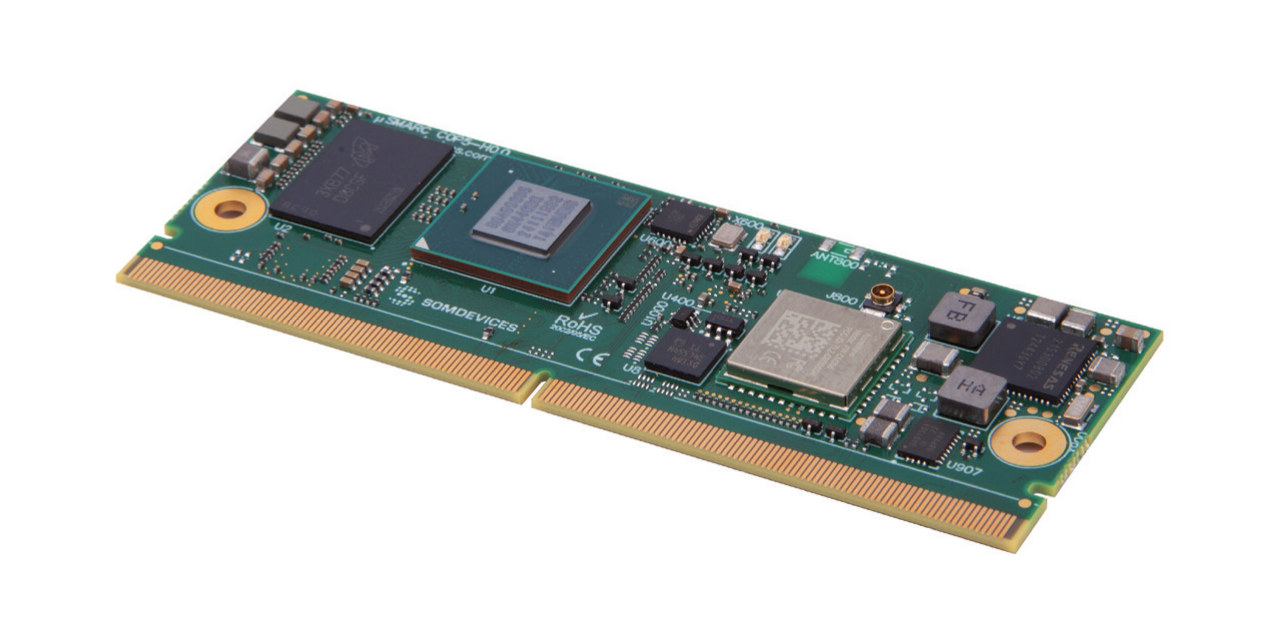

SOMDEVICES µSMARC RZ/V2N system-on-module packs Renesas RZ/V2N MPU in an 82x30mm “micro SMARC” form factor

SOMDEVICES µSMARC RZ/V2N is a system-on-module powered by the Renesas RZ/V2N AI MPU and offered in a micro SMARC (µSMARC) form factor that, at 82x30mm, takes up 40% less board area than standard 82x50mm SMARC modules. The company told CNX Software it’s still 100% compatible with SMARC 2.1 with a 314-pin MXM 3.0 edge connector. It’s also cost-effective with a compact PCB and can be used in space-constrained applications. The µSMARC features up to 8GB LPDDR4, up to 128GB eMMC flash, two gigabit Ethernet PHYs, and an optional WiFi 5 and Bluetooth 5.1 wireless module. µSMARC RZ/V2N specifications: SoC – Renesas RZ/V2N CPU Quad-core Arm Cortex-A55 @ 1.8 GHz Arm Cortex-M33 @ 200 MHz GPU – Arm Mali-G31 3D graphics engine (GE3D) with OpenGL ES 3.2 and OpenCL 2.0 FP VPU – Encode & decode H.264 – Up to 1920×1080 @ 60 fps (Renesas specs, but SOMDEVICES also mentions up to 4K @ 30 […]

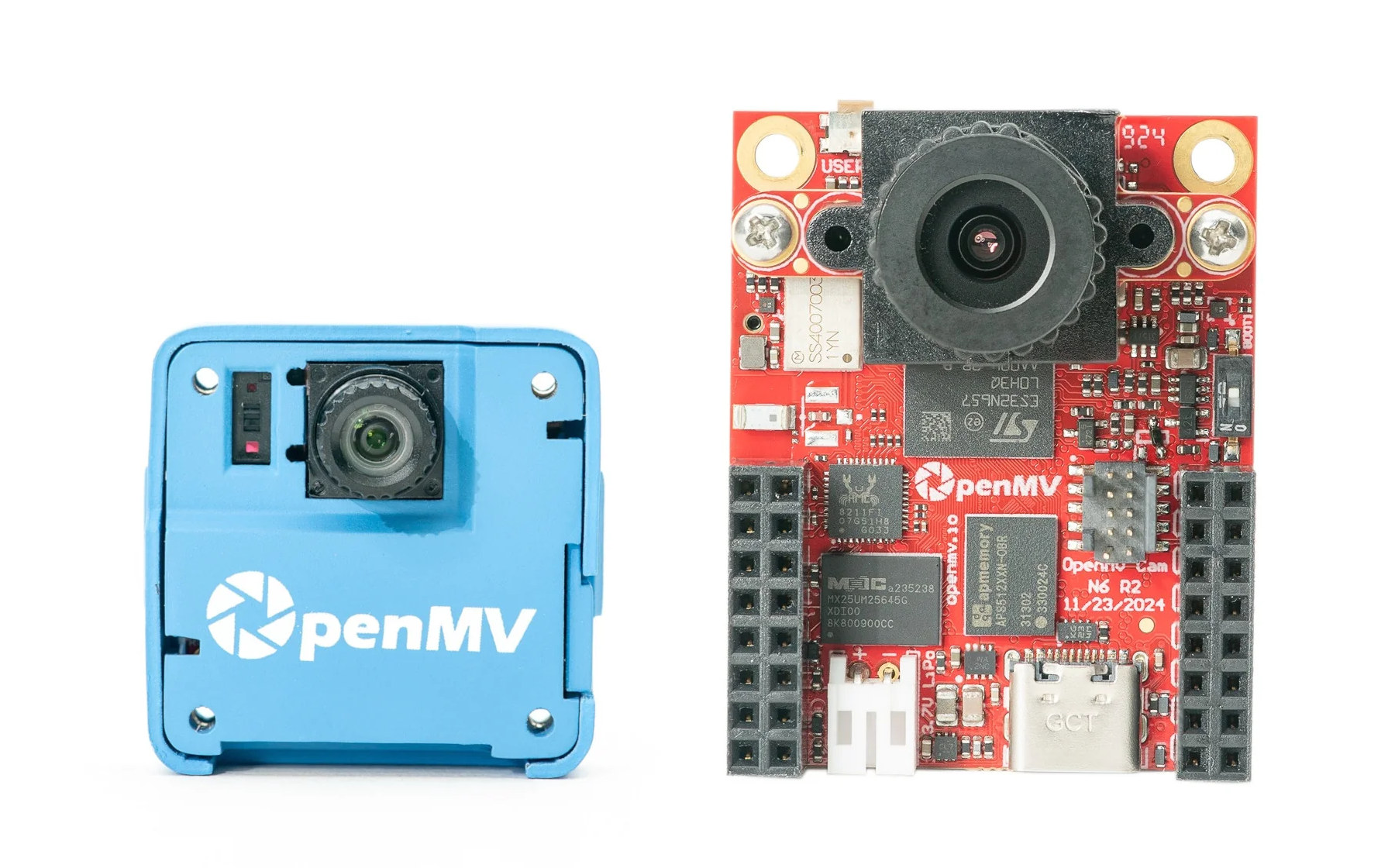

MicroPython-programmable OpenMV N6 and AE3 AI camera boards run on battery for years (Crowdfunding)

OpenMV has launched two new edge AI camera boards programmable with MicroPython: the OpenMV AE3 powered by an Alif Ensemble E3 dual Cortex-M55, dual Ethos-U55 micro NPU SoC, and the larger OpenMV N6 board based on an STMicro STM32N6 Cortex-M55 microcontroller with a 1 GHz Neural-ART AI/ML accelerator. Both can run machine vision workloads for several years on a single battery charge. The OpenMV team has made several MCU-based camera boards and corresponding OpenMV firmware for computer vision, and we first noticed the company when they launched the STM32F427-based OpenMV Cam back in 2015. A lot of progress has been made over the years in terms of hardware, firmware, and software, but the inclusion of AI accelerators inside microcontrollers provides a leap in performance, and the new OpenMV N6 and AE3 are more than 100x faster than previous OpenMV Cams for AI workloads. For example, users can now run object […]

IMDT V2N Renesas RZ/V2N SoM powers Edge AI SBC with HDMI, MIPI DSI, dual GbE, WiFi 4, and more

IMD Technologies has recently introduced the IMDT V2N SoM based on the newly launched Renesas RZ/V2N low-power AI MPU and its SBC carrier board designed for robotics, smart cities, industrial automation, and IoT applications. The SoM ships with 8GB RAM and 32GB eMMC flash, and supports various interfaces through B2B connectors including two 4-lane MIPI CSI-2 interfaces, a MIPI DSI display interface, and more. The SBC supports MIPI DSI or HDMI video output, dual GbE, WiFi 4 and Bluetooth 5.2, as well as expansion capabilities through M.2 sockets. IMDT V2N SBC specifications: IMDT V2N SoM SoC – Renesas RZ/V2N Application Processor – Quad-core Arm Cortex-A55 @ 1.8 GHz (0.9V) / 1.1 GHz (0.8V) L1 cache – 32KB I-cache (with parity) + 32KB D-cache (with ECC) per core L3 cache – 1MB (with ECC, max frequency 1.26 GHz) Neon, FPU, MMU, and cryptographic extension (for security models) Armv8-A architecture System Manager […]

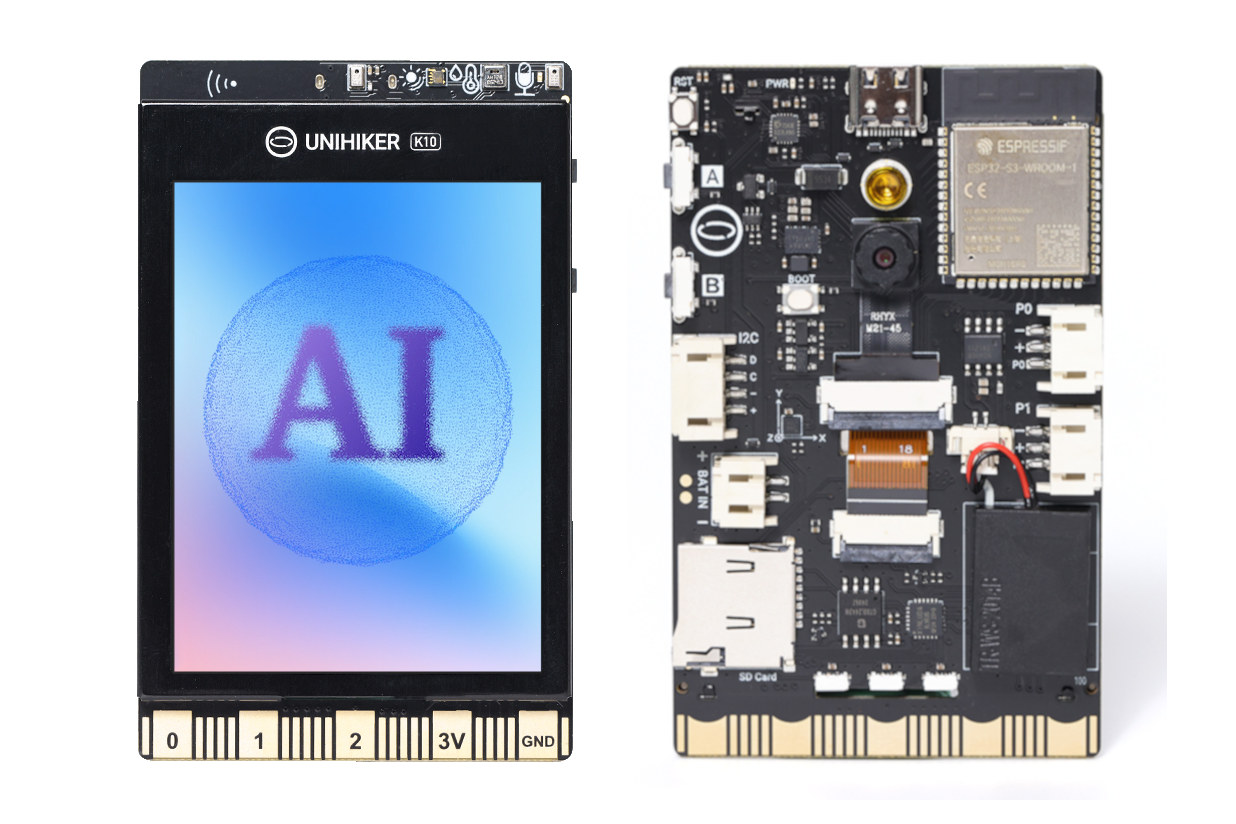

UNIHIKER K10 low-cost TinyML education platform supports image detection and voice recognition

UNIHIKER K10 is a low-cost STEM education platform for TinyML applications that leverages the ESP32-S3 wireless microcontroller with vector extensions for workloads such as image detection or voice recognition. The UNIHIKER K10 also features a built-in 2.8-inch color display, a camera, a speaker, a 2-microphone array, a few sensors, a microSD card slot, and a BBC Micro:bit-like edge connector for power signals and GPIOs. It’s a cost-optimized version of its Linux-based big brother – the UNIHIKER M10 – first unveiled in 2022. Arnon also reviewed the UNIHIKER in 2023, showing how to configure it, use the SIoT platform with MQTT messages, and program it with Jupyter Notebook, Python, or Visual Studio Code. Let’s have a closer look at the new ESP32-S3 variant. UNIHIKER K10 specifications: Core module – ESP32-S3-WROOM-1 MCU – ESP32-S3N16R8 dual-core Tensilica LX7 up to 240 MHz with 512KB SRAM, 8MB PSRAM, 16MB flash Wireless – WiFi 4 and […]

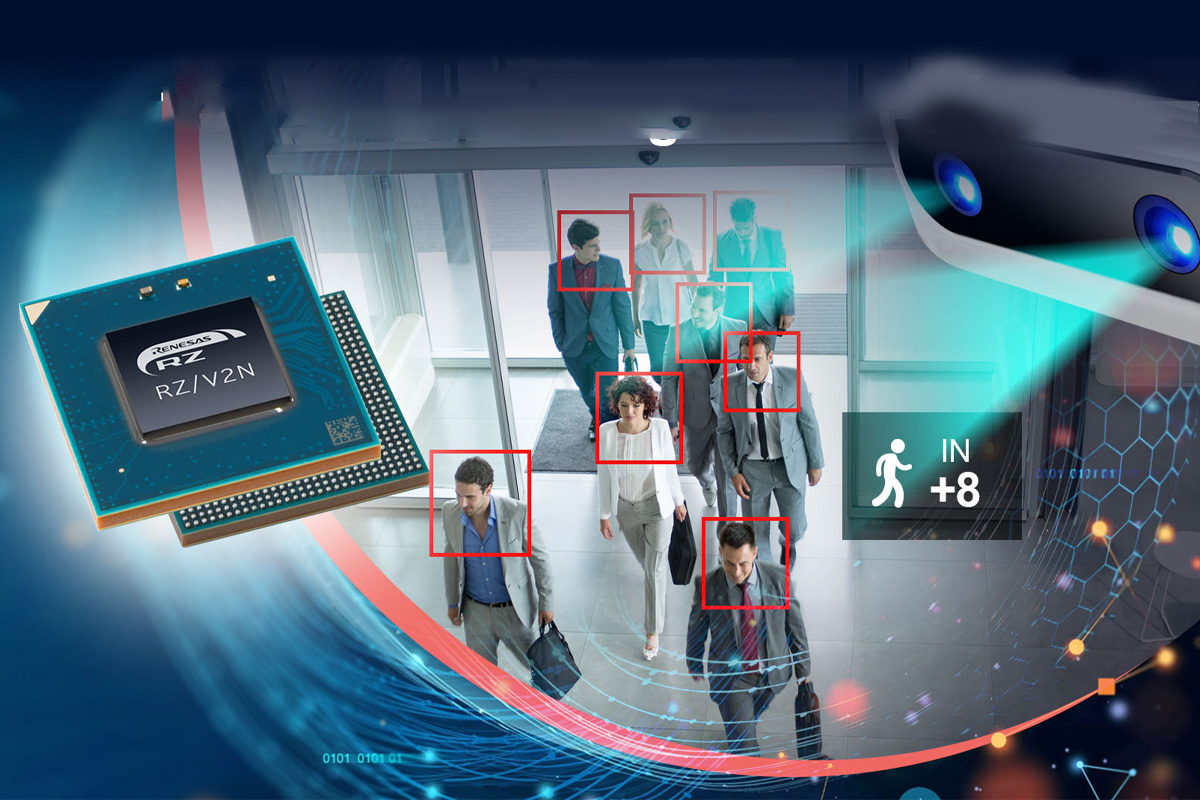

Renesas RZ/V2N low-power AI MPU integrates up to 15 TOPS AI power, Mali-C55 ISP, dual MIPI camera support

Renesas has recently introduced the RZ/V2N low-power Arm Cortex-A55/M33 microprocessor designed for machine learning (ML) and computer vision applications. It features the company’s DRP-AI3 coprocessor, delivering up to 15 TOPS of INT8 “pruned” compute performance at 10 TOPS/W efficiency, making it a lower-power alternative to the RZ/V2H. Built for mid-range AI workloads, it includes four Arm Cortex-A55 cores (1.8GHz), a Cortex-M33 sub-CPU (200MHz), an optional 4K image signal processor, H.264/H.265 hardware codecs, an optional Mali-G31 GPU, and a dual-channel four-lane MIPI CSI-2 interface. The chip is around 38% smaller than the RZ/V2H MPU and operates without active cooling. The RZ/V2N is suitable for applications like endpoint vision AI, robotics, and industrial automation. Renesas RZ/V2N specifications CPU Application Processor – Quad-core Arm Cortex-A55 @ 1.8 GHz (0.9V) / 1.1 GHz (0.8V) L1 cache – 32KB I-cache (with parity) + 32KB D-cache (with ECC) per core L3 cache – 1MB (with ECC, […]
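
As a back-of-the-envelope check on the two headline figures, the sketch below divides peak throughput by efficiency to get the implied accelerator power. It assumes both numbers apply at the same operating point, which vendor figures do not always guarantee, and the function name is purely illustrative.

```python
def implied_power_w(tops: float, tops_per_watt: float) -> float:
    """Power implied by a peak TOPS figure and a TOPS/W efficiency figure."""
    # Naive estimate: assumes peak throughput and peak efficiency coincide
    return tops / tops_per_watt


if __name__ == "__main__":
    # 15 TOPS (pruned INT8) at 10 TOPS/W, as quoted above
    print(f"Implied DRP-AI3 power: {implied_power_w(15, 10):.1f} W")
```

A figure in this range would be consistent with the claim that the chip operates without active cooling, but it only covers the AI coprocessor, not the CPU cores, GPU, or memory.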

Zant – An open-source Zig SDK for neural network deployment on microcontrollers

Zant is an open-source, cross-platform SDK written in Zig and designed to simplify deploying Neural Networks (NN) on microcontrollers. It comprises a suite of tools to import, optimize, and deploy NNs to low-end hardware. The developers behind the project developed Zant (formerly known as Zig-ant) after noticing many microcontrollers lacked robust deep learning libraries, and made sure it would run on various platforms such as ARM Cortex-M or RISC-V microcontrollers, or even x86 targets. Contrary to platforms like Edge Impulse that focus on network creation, Zant is about deployment and outputs a static, highly optimized library ready to be integrated into any existing work stack. Zant highlights: Optimized Performance – Supports quantization, pruning, and hardware acceleration techniques such as SIMD and GPU offloading. Low memory footprint – Zant employs memory pooling, static allocation, and buffer optimization to work on resource-constrained targets. Ease of Integration – With a modular design, clear APIs, […]

Edge video processing platform features NXP i.MX 8M Plus, i.MX 93, or i.MX 95 SoC, supports up to 23 camera types

DAB Embedded AquaEdge is a compact computer based on NXP i.MX 8M Plus, i.MX 93, or i.MX 95 SoC working as an edge video processing platform and supporting 23 types of vision cameras with resolutions from VGA up to 12MP, and global/rolling shutter. The small edge computer features a gigabit Ethernet RJ45 jack with PoE to power the device. It is also equipped with a single GMSL2 connector to connect a camera whose input can be processed by the built-in AI accelerator found in the selected NXP i.MX processors. Other external ports include a microSD card slot, a USB 3.0 Type-A port, and a mini HDMI port (for the NXP i.MX 8M Plus model only). DAB Embedded AquaEdge specifications: SoC / Memory / Storage options NXP i.MX 8M Plus CPU – Quad-core Cortex-A53 processor @ 1.8GHz, Arm Cortex-M7 real-time core AI accelerator – 2.3 TOPS NPU VPU Encoder up to […]