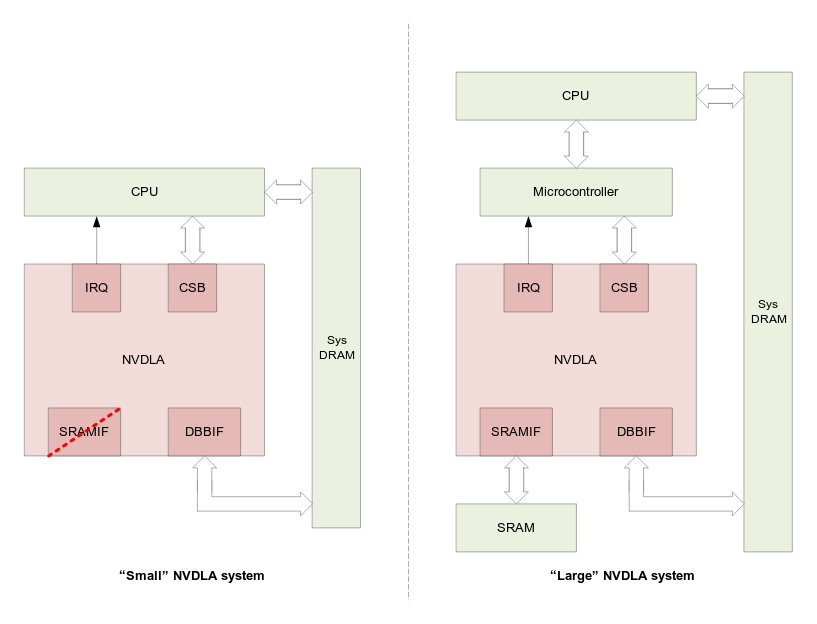

A large patchset adding a Direct Rendering Manager (DRM) driver for the NVIDIA NVDLA AI accelerator has been submitted to mainline Linux, accompanied by an open-source user mode driver. The NVDLA (NVIDIA Deep Learning Accelerator) can be found in recent Jetson modules such as the Jetson AGX Xavier and Jetson AGX Orin, and since NVDLA was made open-source hardware in 2017, it can also be integrated into third-party SoCs such as the StarFive JH7100 vision SoC and the Allwinner V831 processor. I had actually assumed everything was already open-source, since we were told that NVDLA was a “complete solution with Verilog and C-model for the chip, Linux drivers, test suites, kernel- and user-mode software, and software development tools all available on Github’s NVDLA account,” and the inference compiler was open-sourced in September 2019. But apparently not, as developer Cai Huoqing submitted a patchset with 23 files changed and 13,243 insertions, along with the following short description: The NVIDIA Deep […]

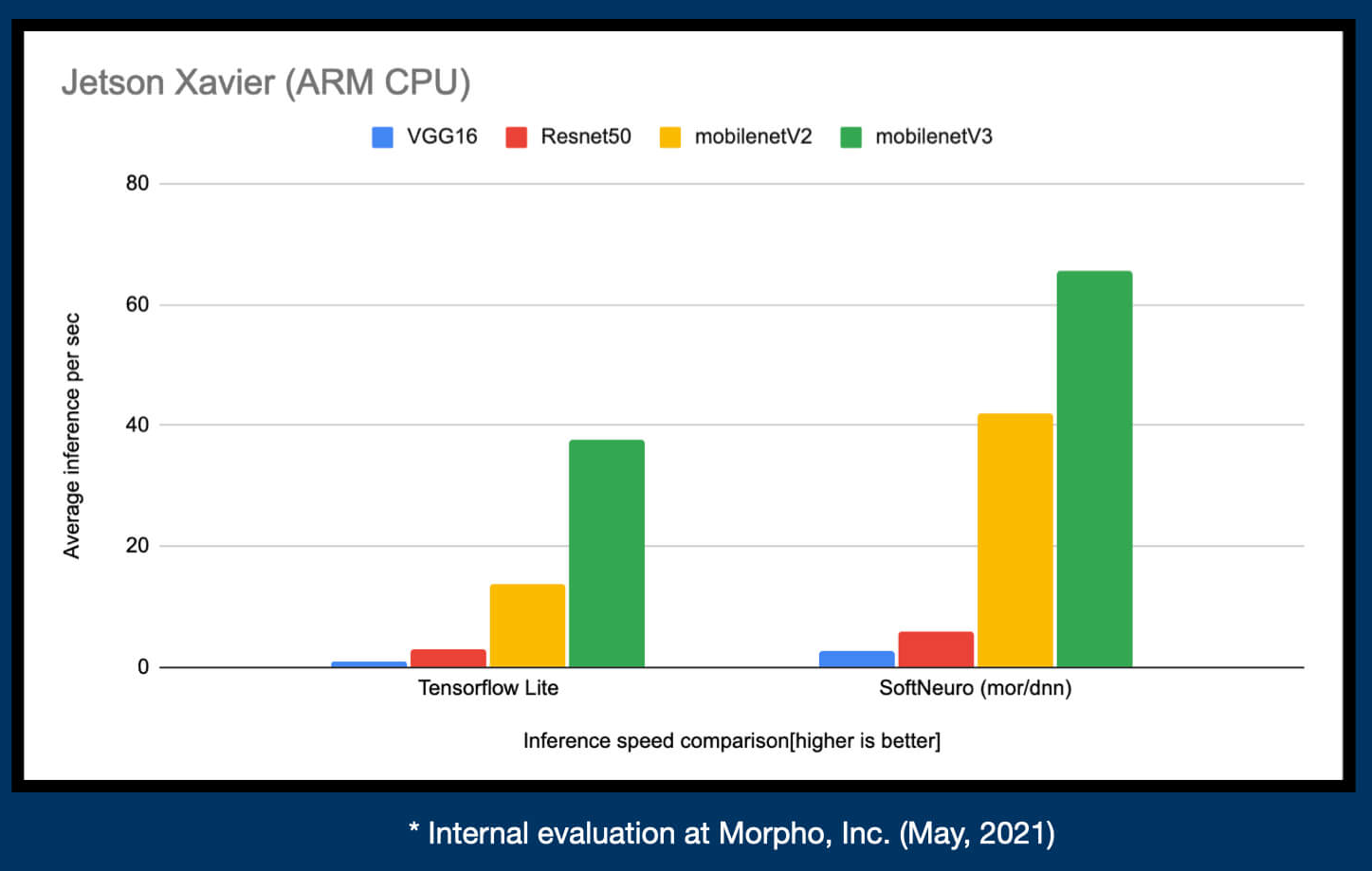

Download a free trial of the SoftNeuro Deep Learning SDK for Intel and Arm targets (Sponsored)

Morpho, a global research & development company established in Japan in 2004 and specializing in imaging technology, is now offering a free trial of the SoftNeuro deep learning SDK, which runs on Intel processors with AVX2 SIMD extensions and on 64-bit Arm targets, and can also leverage OpenCL and/or CUDA. SoftNeuro’s advantages include ease of use, even for those without any knowledge of deep learning; speed, thanks to the separation of the layers and their execution patterns; and portability, since the cross-platform framework runs on a range of hardware and operating systems. SoftNeuro relies on its own storage format (DNN format) to deliver these advantages, but you can still use models trained with any mainstream deep learning framework. TensorFlow and Keras models can be converted directly to the DNN format, while models from other frameworks can first be converted to the ONNX format and then to the […]

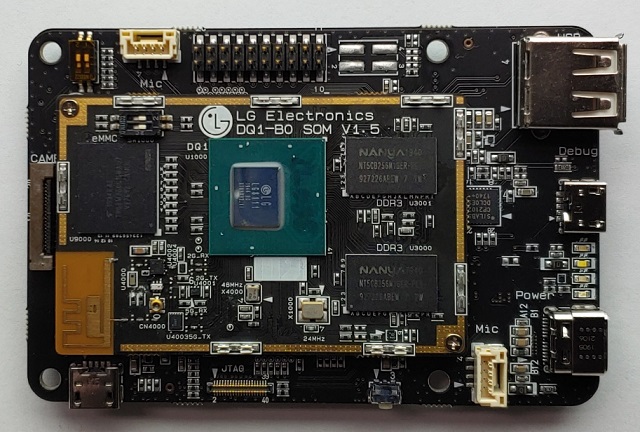

LG launches LG8111 AI SoC and development board for Edge AI processing

LG Electronics has designed the LG8111 AI SoC for on-device AI inference and introduced the Eris Reference Board based on the processor. The chip supports hardware processing of artificial intelligence functions such as video, voice, and control intelligence. The LG8111 AI development board can run neural networks for deep learning algorithms thanks to its integrated “LG-Specific AI Processor.” The chip’s low power consumption and low latency also enhance its self-learning capability, enabling products with the LG8111 AI chip to implement “On-Device AI.” Components and Features of the LG8111 AI SoC: the LG Neural Engine AI accelerator has an extensive architecture for “On-Device” Inference/Learning, with support for TensorFlow, TensorFlow Lite, and Caffe. The board’s CPU comes with four Arm Cortex-A53 cores clocked at 1.0 GHz, with an L1 cache size of 32KB and an L2 cache size of 1MB. The CPU also […]

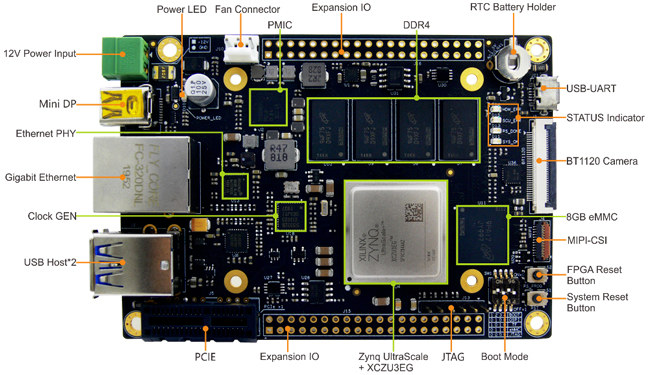

Zynq UltraScale+ Arm FPGA FZ3 Deep Learning Accelerator Card Supports Baidu Brain AI Tools

MYIR’s FZ3 card is a deep learning accelerator board powered by the Xilinx Zynq UltraScale+ ZU3EG Arm FPGA MPSoC, delivering up to 1.2 TOPS for artificial intelligence products based on the Baidu Brain AI open platform. The FZ3 card also features 4GB RAM, 8GB eMMC flash, USB 2.0 and USB 3.0 ports, Gigabit Ethernet, DisplayPort (DP) output, a PCIe interface, MIPI-CSI, and more. MYIR FZ3 card specifications:

- SoC – Xilinx Zynq UltraScale+ XCZU3EG-1SFVC784E (ZU3EG) MPSoC with quad-core Arm Cortex-A53 @ 1.2 GHz, dual-core Arm Cortex-R5 processor @ 600 MHz, Arm Mali-400MP2 GPU, and FPGA fabric
- System Memory – 4GB DDR4
- Storage – 8GB eMMC flash, 32MB QSPI flash, 32KB EEPROM, microSD card slot
- Video Output – 1x Mini DisplayPort up to 4Kp30
- Camera I/F – 1x MIPI-CSI interface (25-pin 0.3mm pitch FPC connector), 1x BT1120 camera interface (32-pin 0.5mm pitch FPC connector)
- Connectivity – 1x Gigabit Ethernet
- USB – 1x USB 2.0 host, 1x USB 3.0 host […]

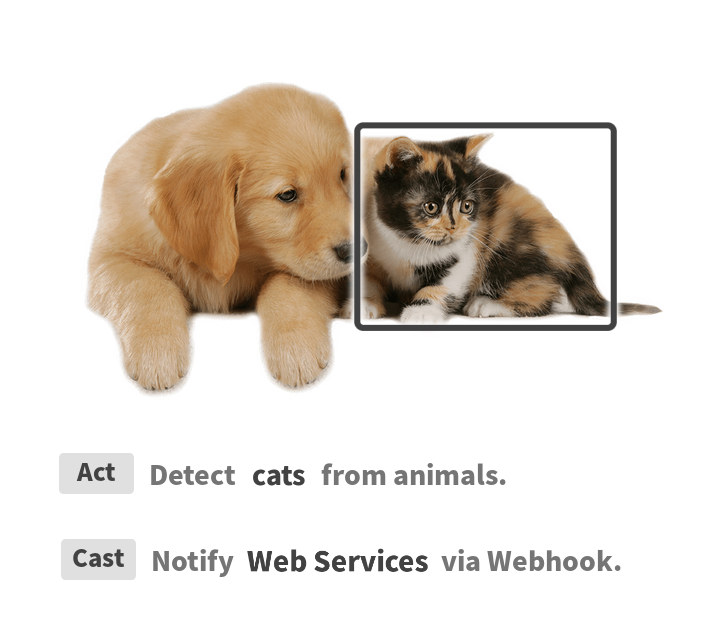

Actcast Combines IFTTT-like Service with AI and Raspberry Pi 3 / Zero

As reported on April 30, 2017, Idein had developed GPGPU-accelerated object recognition for the Raspberry Pi platform. That development led to the beta release of the Actcast IoT platform, announced on July 29, 2019, which uses deep learning algorithms for object and subject recognition. The platform is meant for IoT and AI applications, and the idea is to increase performance and link the system to the web for even more solutions. What it Does: Using physical-world information in IoT projects has many applications. For example, a doorbell that sees a person can recognize them, let the user know over the web via a smartphone that the person should be let in, and then unlock the door. So Actcast is a bit like IFTTT with artificial intelligence / computer vision capabilities. Edge Computing: Bringing the source of data closer to the […]

LG Unveils 8K, HDMI 2.1 Capable TVs Powered by Alpha 9 Gen 2 Processor

Last year, Samsung unveiled its first 8K TV right before CES 2018: an 85″ QLED model that can now be yours for a cool $15,000 and is capable of upscaling SD / HD content to 8K using artificial intelligence, since 8K content was not, and still is not, broadly available. One year later, LG has announced two new 8K TVs with HDMI 2.1 inputs just before CES 2019. Both the 88″ Z9 OLED TV and the 75″ SM99 8K LCD TV are powered by the company’s α9 (Alpha 9) Gen 2 processor, which runs deep learning algorithms to deliver enhanced picture and sound. The press release gives an overview of how AI works on both video and audio as follows: In addition to content source detection, the new processor finely adjusts the tone mapping curve in accordance with ambient conditions to offer optimized screen brightness, leveraging its ability […]

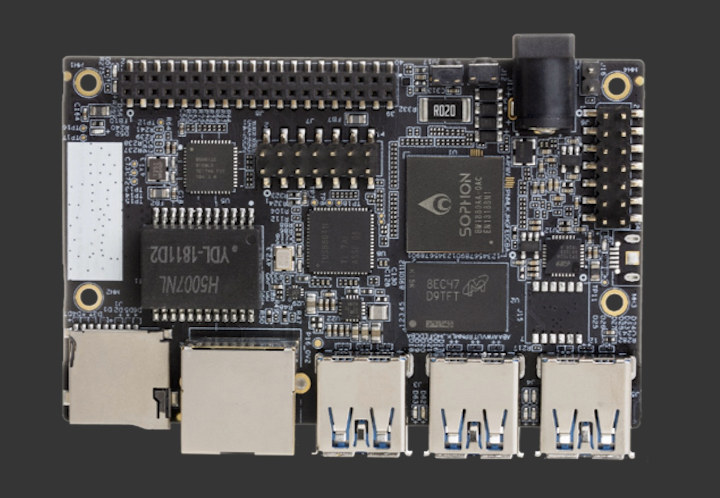

96Boards AI Sophon Edge Developer Board Features Bitmain BM1880 ASIC SoC

Bitmain, a company specializing in cryptocurrency, blockchain, and artificial intelligence (AI) applications, has just joined Linaro and announced the first 96Boards AI platform featuring an ASIC: the Sophon BM1880 Edge Development Board, often just referred to as “Sophon Edge”. The board conforms to the 96Boards CE specification and includes two Arm Cortex-A53 cores, a Bitmain Sophon Edge TPU delivering 1 TOPS on 8-bit integer operations, USB 3.0, and Gigabit Ethernet. Sophon Edge specifications:

- SoC ASIC – Sophon BM1880 with dual-core Cortex-A53 processor @ 1.5 GHz, single-core RISC-V processor @ 1 GHz, 2MB on-chip RAM, and a TPU (Tensor Processing Unit) that can provide 1 TOPS for INT8, and up to 2 TOPS by enabling Winograd convolution acceleration
- System Memory – 1GB LPDDR4 @ 3200 MHz
- Storage – 8GB eMMC flash + microSD card slot
- Video Processing – H.264 decoder, MJPEG encoder/decoder, 1x 1080p @ 60fps or 2x 1080p @ 30fps H.264 decoder, […]
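The “up to 2 TOPS by enabling Winograd convolution acceleration” figure is easier to picture with a small example. Winograd’s minimal filtering algorithm computes convolution outputs with fewer multiplications than the direct method: the 1D variant F(2,3) below produces two outputs of a 3-tap filter with 4 multiplications instead of 6, and the 2D F(2×2, 3×3) variant needs 16 instead of 36. This is a generic sketch in Python; the function names are hypothetical, and it makes no claim about how Bitmain’s TPU actually implements Winograd or where its 2 TOPS number comes from.

```python
from fractions import Fraction


def direct_conv_f23(d, g):
    """Direct 1D 'valid' correlation: 2 outputs of a 3-tap filter, 6 multiplications."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d, g):
    """Winograd F(2,3) (hypothetical helper): same 2 outputs using only 4 multiplications.

    d: 4 input samples, g: 3 filter taps.
    """
    half = Fraction(1, 2)  # exact arithmetic, so results match the direct method exactly
    # Filter transform: in a real kernel this is computed once per filter and reused.
    u = [g[0], (g[0] + g[1] + g[2]) * half, (g[0] - g[1] + g[2]) * half, g[2]]
    # Input transform: additions/subtractions only.
    v = [d[0] - d[2], d[1] + d[2], d[2] - d[1], d[1] - d[3]]
    # The only 4 multiplications per output tile.
    m = [u[i] * v[i] for i in range(4)]
    # Output transform: additions/subtractions only.
    return [m[0] + m[1] + m[2], m[1] - m[2] - m[3]]


if __name__ == "__main__":
    d, g = [1, 2, 3, 4], [1, 2, 3]
    print(direct_conv_f23(d, g) == winograd_f23(d, g))  # True
```

In a convolution layer, the per-tile cost is then the cheap additions of the input and output transforms plus the reduced number of multiplications, which is why hardware dominated by multiply-accumulate units can report a higher effective throughput with Winograd enabled.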

Embedded Linux Conference Europe & OpenIoT Summit Europe 2018 Schedule

The Embedded Linux Conference & OpenIoT Summit 2018 took place in March of this year in the US, and the European editions of the events will take place on October 21-24 in Edinburgh, UK. The schedule has already been released, so let’s make a virtual schedule to find out more about some of the interesting subjects covered at the conferences. The conference and summit only officially start on Monday, October 22, but there are a few talks on Sunday afternoon too.

Sunday, October 21

13:30 – 15:15 – Tutorial: Introduction to Quantum Computing Using Qiskit – Ali Javadi-Abhari, IBM

Qiskit is a comprehensive open-source tool for quantum computation. From simple demonstrations of quantum mechanical effects to complicated algorithms for solving problems in AI and chemistry, Qiskit allows users to build and run programs on quantum computers of today. Qiskit is built with modularity and extensibility […]