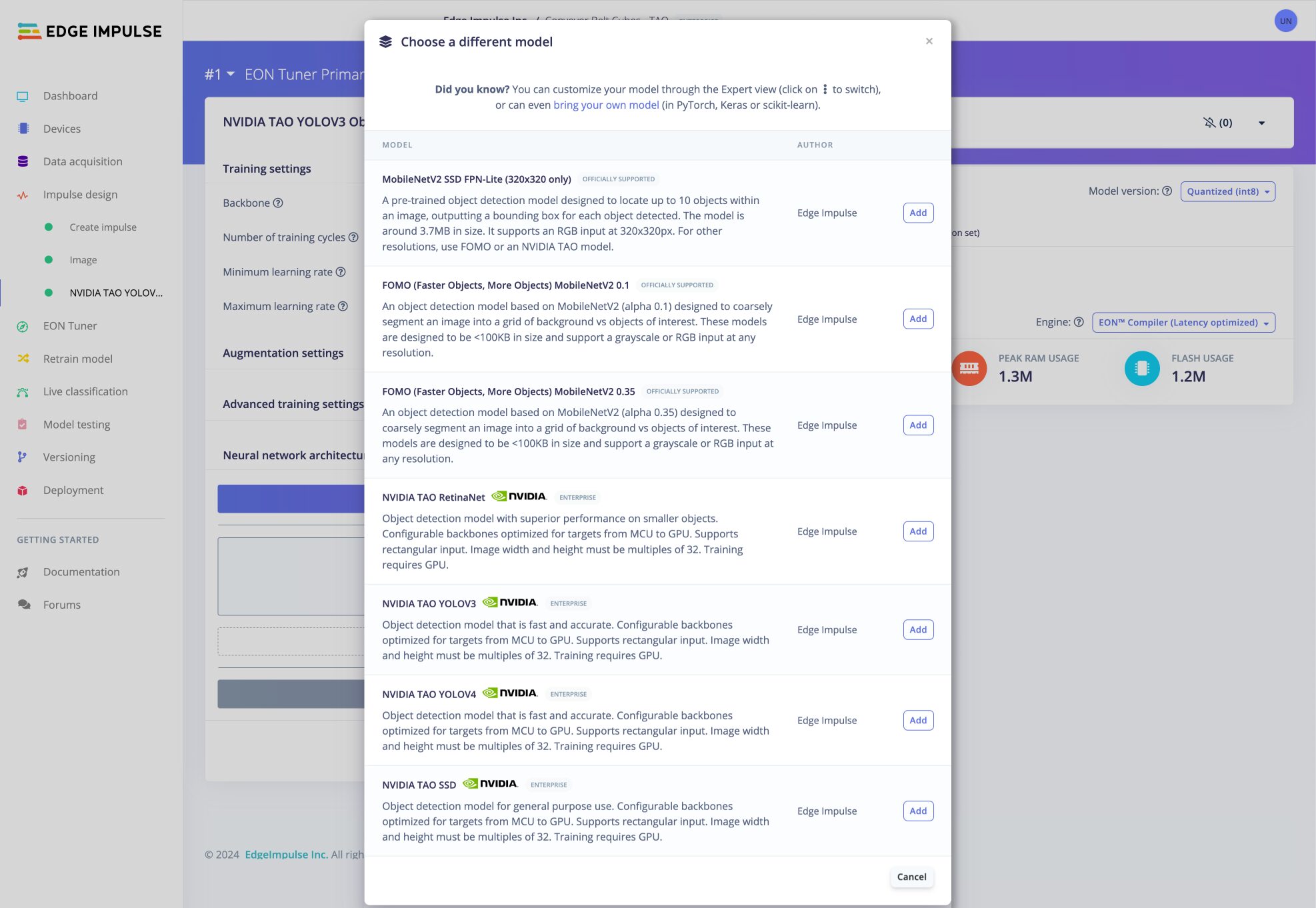

Edge Impulse, a machine learning platform for edge devices, has released a new suite of tools developed on NVIDIA TAO Toolkit and Omniverse that brings new AI models to entry-level hardware based on Arm Cortex-A processors, Arm Cortex-M microcontrollers, or Arm Ethos-U NPUs. By combining Edge Impulse and NVIDIA TAO Toolkit, engineers can create computer vision models that can be deployed to edge-optimized hardware such as NXP i.MX RT1170, Alif E3, STMicro STM32H747AI, and Renesas CK-RA8D1. The Edge Impulse platform allows users to combine their own custom data with GPU-trained NVIDIA TAO models such as YOLO and RetinaNet, and optimize them for deployment on edge devices with or without AI accelerators. NVIDIA and Edge Impulse claim this new solution enables the deployment of large-scale NVIDIA models to Arm-based devices, and right now the following object detection models and tasks are available: RetinaNet, YOLOv3, YOLOv4, SSD, and image classification. You can […]

Waveshare Jetson Nano powered mini-computer features a sturdy metal case

Waveshare has launched the Jetson Nano Mini Kit A, a mini-computer kit powered by Jetson Nano. This kit features the Jetson Nano Module, a cooling fan, and a WiFi module, all inside a sturdy metal case. The mini-computer is built around NVIDIA’s Jetson platform, housing the Jetson Nano module, and features multiple interfaces, including USB connectors, an Ethernet port, an HDMI port, CSI, GPIO, I2C, and RS485 interfaces. It also has an onboard M.2 B KEY slot for installing either a WiFi or 4G module and is compatible with TensorFlow and PyTorch, which makes it well-suited for various AI applications.

Waveshare Mini-Computer Specification:

- GPU – NVIDIA Maxwell architecture with 128 NVIDIA CUDA cores
- CPU – Quad-core ARM Cortex-A57 processor @ 1.43 GHz
- Memory – 4 GB 64-bit LPDDR4 1600 MHz; 25.6 GB/s bandwidth
- Storage – 16 GB eMMC 5.1 Flash Storage, microSD Card Slot
- Display Output – HDMI interface with […]
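The 25.6 GB/s memory bandwidth in the specifications follows from the other two figures on the same line. Below is a minimal sketch of that arithmetic, assuming the 1600 MHz value is the memory clock and that LPDDR4 transfers data twice per clock cycle (double data rate). The function name and parameters are illustrative and do not come from Waveshare or NVIDIA.

```python
def peak_bandwidth_gb_s(clock_mhz: float, bus_width_bits: int,
                        transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10^9 bytes).

    Assumes a double-data-rate interface by default, i.e. two
    transfers per clock cycle.
    """
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Jetson Nano figures from the spec list: 64-bit LPDDR4 at 1600 MHz
bandwidth = peak_bandwidth_gb_s(clock_mhz=1600, bus_width_bits=64)
print(f"{bandwidth:.1f} GB/s")
```

This is a theoretical peak: 3200 million transfers per second times 8 bytes per transfer. Sustained bandwidth in real workloads will be lower.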

Mixtile Core 3588E SoM review – Part 2: Ubuntu 22.04, hardware features, RK3588 AI samples, NVIDIA Jetson compatibility

We’ve already had a look at the Mixtile Core 3588E NVIDIA Jetson Nano/TX2 NX/Xavier NX/Orin Nano compatible Rockchip RK3588 SO-DIMM system-on-module in the first part of the review with an unboxing and first boot with an Ubuntu 22.04 OEM installation. I’ve now had more time to play with the devkit comprised of a Core 3588 module in 16GB/128GB configuration and a Leetop A206 carrier board. This second part covers low-level feature testing, some benchmarks, multimedia testing with 3D graphics acceleration and video playback, and some AI tests using the built-in 6 TOPS NPU and the RKNPU2 toolkit. Finally, I also tried out the system-on-module with the carrier board from an NVIDIA Jetson Nano developer kit.

Ubuntu 22.04 System info

We had already checked some of the system information in the first part of the Mixtile Core 3588E review, but here’s a reminder:

    jaufranc@Mixtile-RK3588E:~$ uname -a
    Linux Mixtile-RK3588E 5.10.160-rockchip #18 SMP Wed Dec 6 15:11:42 UTC 2023 aarch64 aarch64 aarch64 GNU/Linux
    jaufranc@Mixtile-RK3588E:~$ cat /etc/lsb-release
    DISTRIB_ID=Ubuntu
    DISTRIB_RELEASE=22.04
    DISTRIB_CODENAME=jammy
    DISTRIB_DESCRIPTION="Ubuntu 22.04.3 LTS"
    jaufranc@Mixtile-RK3588E:~$ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    tmpfs           1.6G  2.7M  1.6G   1% /run
    /dev/mmcblk0p2  113G  7.5G  101G   7% /
    tmpfs           7.7G     0  7.7G   0% /dev/shm
    tmpfs           5.0M  8.0K  5.0M   1% /run/lock
    tmpfs           4.0M     0  4.0M   0% /sys/fs/cgroup
    /dev/mmcblk0p1  512M  101M  411M  20% /boot/firmware
    tmpfs           1.6G   60K  1.6G   1% /run/user/0
    tmpfs           1.6G   76K  1.6G   1% /run/user/130
    tmpfs           1.6G   68K  1.6G   1% /run/user/1000
    jaufranc@Mixtile-RK3588E:~$ free -mh
                   total        used        free      shared  buff/cache   available
    Mem:            15Gi       601Mi        13Gi        47Mi       1.4Gi        14Gi
    Swap:          2.0Gi          0B       2.0Gi

I also ran inxi to check a few more details. […]

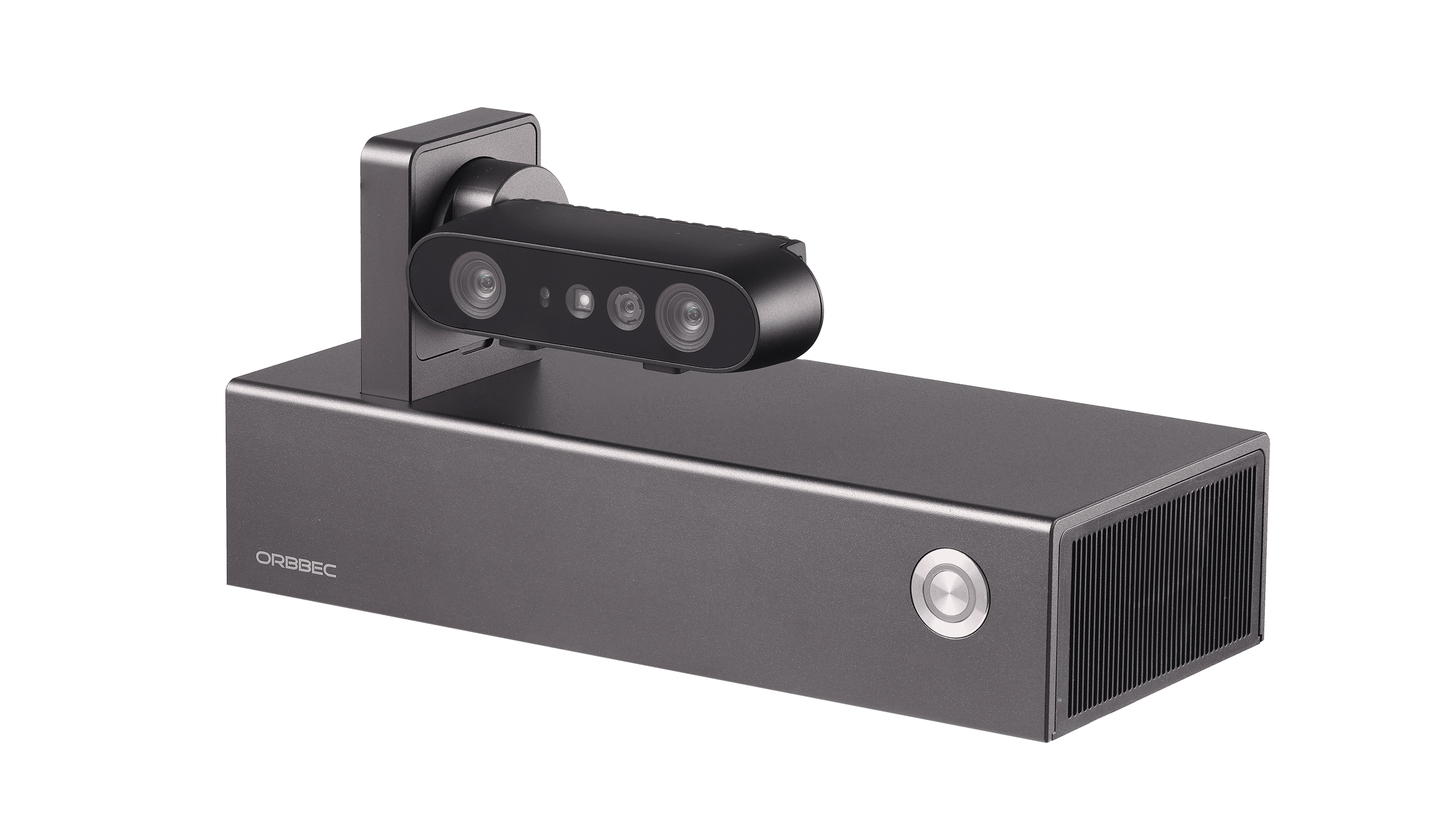

Orbbec Femto Mega 3D depth camera review – Part 1: Unboxing, teardown, and first try

Orbbec sent us a Femto Mega 4K RGB and 3D depth camera for review. The camera is powered by an NVIDIA Jetson Nano module, features Microsoft ToF technology, and outputs RGB, ToF, and IR data through a USB-C port or a gigabit Ethernet port. I’ll start the two-part review with an unboxing, a teardown, and a quick try with the OrbbecViewer program on Ubuntu 22.04, before checking out the software and SDK in more detail in the second part later on.

Femto Mega depth and RGB camera unboxing

I received the camera in a cardboard package showing the camera model and key features: “ORBBEC Femto Mega DEPTH+RGB CAMERA”. The package contains a USB-C cable to connect to the host, the camera, and a 12V/2A power adapter. The front side of the camera features the RGB camera sensor, the ToF (Time-of-Flight) […]

Persee N1 – A modular camera-computer based on the NVIDIA Jetson Nano

The Persee N1 is a modular camera-computer kit recently launched by 3D camera manufacturer, Orbbec. Not too long ago, we covered their 3D depth and RGB USB-C camera, the Femto Bolt. The Persee N1 was designed for 3D computer vision applications and is built on the Nvidia Jetson platform. It combines the quad-core processor of the Jetson Nano with the imaging capabilities of a stereo-vision camera. The Jetson Nano’s impressive GPU makes it particularly appropriate for edge machine learning and AI projects. The company also offers the Femto Mega, an advanced and more expensive alternative that uses the same Jetson Nano SoM. The Persee N1 camera-computer also features official support for the open-source computer vision library, OpenCV. The camera is suited for indoor and semi-outdoor operation and uses a custom application-specific integrated circuit (ASIC) for enhanced depth processing. It also provides advanced features like multi-camera synchronization and IMU (inertial measurement […]

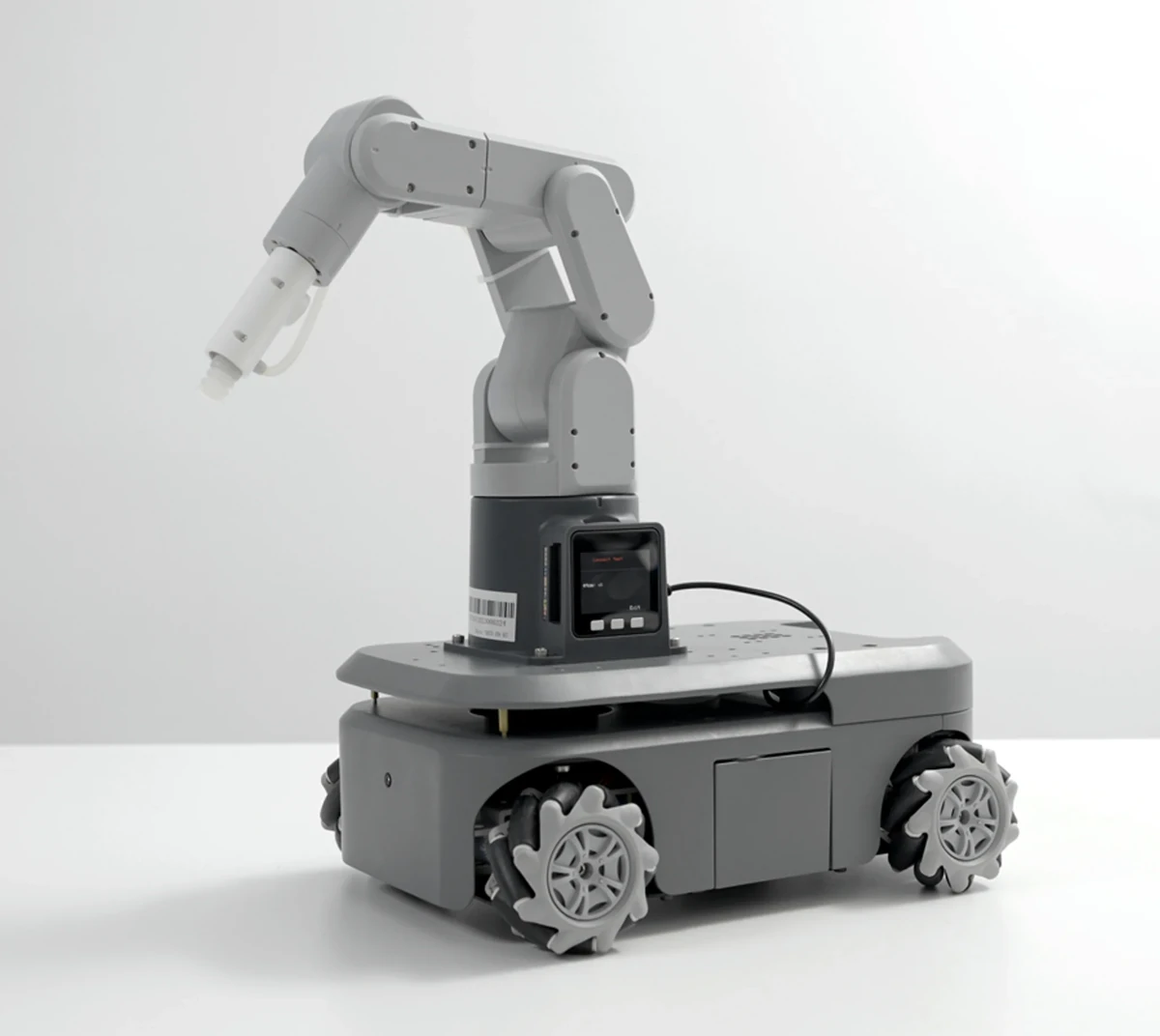

myAGV 2023 four-wheel mobile robot ships with Raspberry Pi 4 or Jetson Nano

Elephant Robotics myAGV 2023 is a 4-wheel mobile robot available with either a Raspberry Pi 4 Model B SBC or an NVIDIA Jetson Nano B01 developer kit, and it supports five different types of robotic arms to cater to various use cases. Compared to the previous generation myAGV robot, the 2023 model is fitted with high-performance planetary brushless DC motors, supports vacuum placement control, can take a backup battery, handles large payloads up to 5 kg, and integrates customizable LED lighting at the rear.

myAGV 2023 specifications:

- Control board
  - Pi model – Raspberry Pi 4B with 2GB RAM
  - Jetson Nano model – NVIDIA Jetson Nano B01 with 4GB RAM
- Wheels – 4x Mecanum wheels
- Motor – Planetary brushless DC motor
- Maximum linear speed – 0.9 m/s
- Maximum payload – 5 kg
- Video Output
  - Pi model – 2x micro HDMI ports
  - Jetson Nano model – HDMI and DisplayPort video outputs
- Camera
  - Pi model – […]

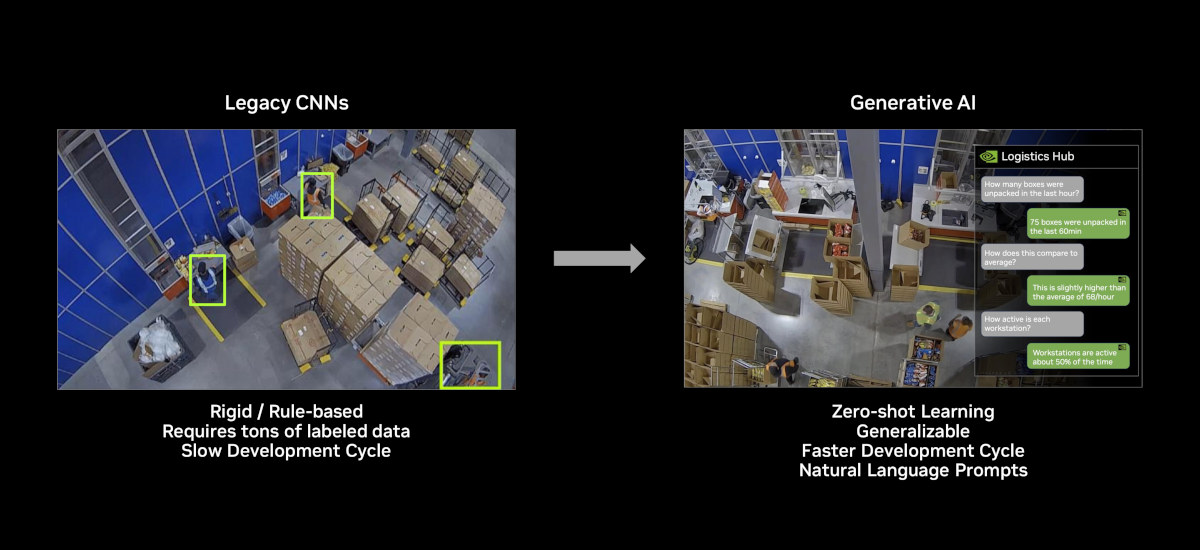

Generative AI on NVIDIA Jetson Orin, Jetpack 6 SDK to support multiple OSes

NVIDIA made several announcements related to robotics and embedded systems at ROSCon 2023. Highlights include generative AI on the NVIDIA Jetson Orin module and the Jetpack 6 SDK, which will be released next month (November 2023) with support for Ubuntu as usual, but also for other operating systems and platforms such as Debian, Yocto, Wind River, Redhawk RTOS, and Balena.

Generative AI on NVIDIA Jetson Orin

There’s been a lot of hype in the last year about generative AI thanks to services such as ChatGPT, Google Bard, or Microsoft Bing Chat. But those rely on closed-source software that runs on powerful servers in the cloud. As we noted in our article about the “AI in a box” offline LLM solution, there are some open-source projects such as the Whisper speech-to-text model and Llama2 language models that could be run on embedded hardware at the edge, but as noted by some readers platforms […]

Linux 6.5 release – Notable changes, Arm, RISC-V and MIPS architectures

Linus Torvalds has just announced the release of Linux 6.5 on the Linux Kernel Mailing List (LKML):

> So nothing particularly odd or scary happened this last week, so there is no excuse to delay the 6.5 release.
>
> I still have this nagging feeling that a lot of people are on vacation and that things have been quiet partly due to that. But this release has been going smoothly, so that’s probably just me being paranoid. The biggest patches this last week were literally just to our selftests.
>
> The shortlog below is obviously not the 6.5 release log, it’s purely just the last week since rc7.
>
> Anyway, this obviously means that the merge window for 6.6 starts tomorrow. I already have ~20 pull requests pending and ready to go, but before we start the next merge frenzy, please give this final release one last round of testing, ok?
>
> Linus

The earlier […]