Aetina has launched fanless edge AI embedded box PCs powered by NVIDIA Jetson Orin system-on-modules (SoM), namely the AIE-CN31/41, AIE-CO21/31, AIE-PN32/42, and AIE-PO22/32 models featuring the Orin NX or Orin Nano modules, and the AIE-PX11/12/21/22 and AIE-PX13/23 embedded systems fitted with the more powerful Jetson AGX Orin module. All the new fanless embedded computers run Ubuntu 20.04, are suitable for AI-powered applications, and work in an operating temperature range of -25°C to +55°C. When I first read the email press release, I thought it was a refresh of an old PR since I had written about Aetina NVIDIA Jetson Orin Nano and Orin NX edge embedded systems last January. But these are new models with similar specifications housed in a fanless enclosure with a large heatsink, while the variants unveiled at CES 2023 were all actively cooled. Let’s have a closer look at the AIE-CO21, AIE-CO31, AIE-CN31, and AIE-CN41 models with some […]

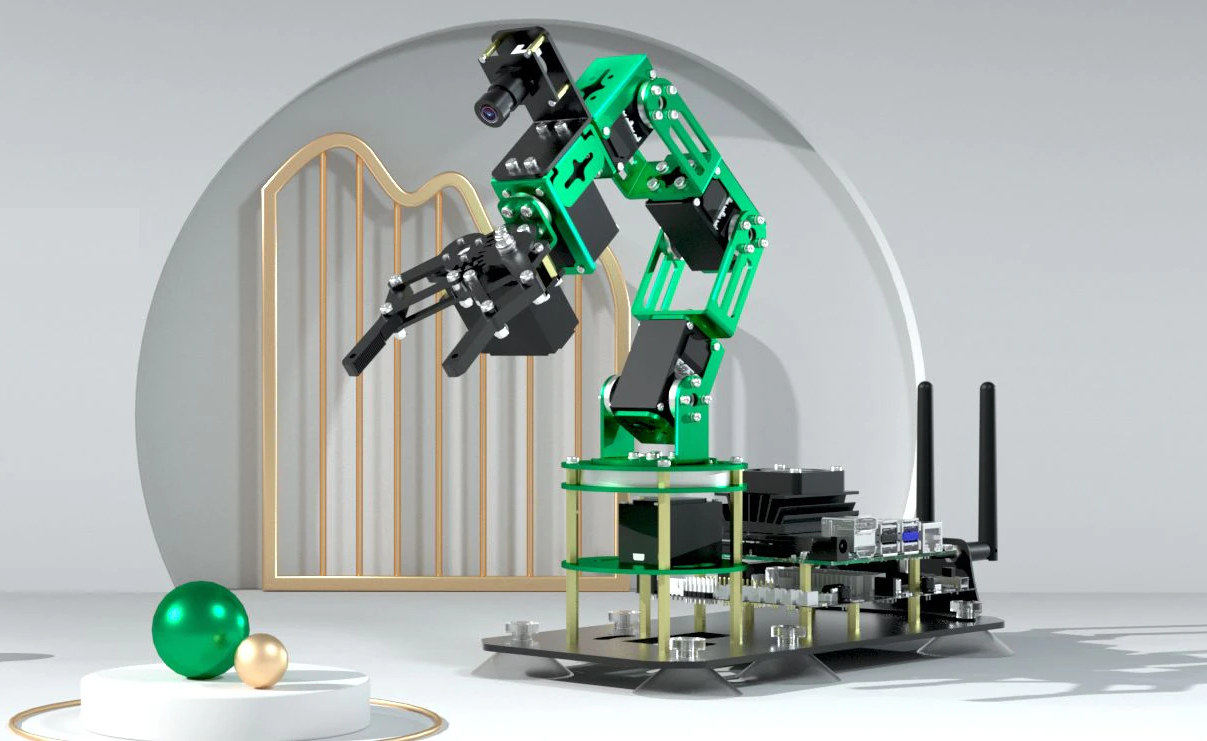

Yahboom DOFBOT 6 DoF AI Vision robotic arm for Jetson Nano sells for $289 and up

Robotic arms can be expensive, especially if you want one with AI Vision support, but the Yahboom DOFBOT robotic arm designed for NVIDIA Jetson Nano offers a lower-cost alternative, as the 6 DoF robot arm sells for about $289 with a VGA camera, or $481 with the Jetson Nano SBC included. We previously published a review of the myCobot 280 Pi robotic arm from Elephant Robotics, and while it works well, supports computer vision through the Raspberry Pi, and is nicely packaged, it sells for around $800 and up depending on the accessories, and one reader complained the “price tag is still way too high for exploration”. The DOFBOT robotic arm looks more like a DIY build, but its price may make it more suitable for education and hobbyists.

DOFBOT robotic arm main components and specifications: SBC – NVIDIA Jetson Nano B01 developer kit recommended, but Raspberry Pi, Arduino, […]

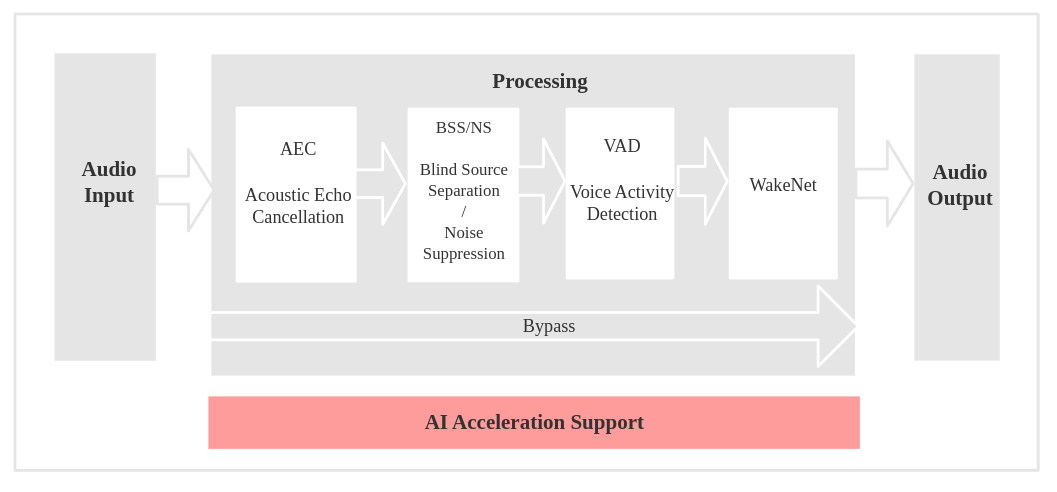

Espressif ESP-SR enables on-device speech recognition framework on ESP32-S3 and ESP32 WiSoCs

Espressif ESP-SR is a speech recognition framework enabling on-device speech recognition on ESP32 and ESP32-S3 wireless microcontrollers, with the latter being recommended due to its vector extension for AI acceleration and larger, high-speed octal SPI PSRAM. The ESP-SR framework was first released on December 17, 2021 with version 1.0, before the v1.20 update was introduced in March of this year, but I only found out about the ESP-SR offline speech recognition solution through a tweet by John Lee showing an ESP-SR demo video by @ThatProject.

> Comrades of the world, liberate your hands from the chains of typing and touching germy switches! Embrace the revolutionary power of speech recognition with ESP32-S3 + ESP-SR. Let your words flow freely, for the proletariat shall not be silenced by keyboards or bourgeois input… pic.twitter.com/bm3udteB3o
>
> John Lee (@EspressifSystem), July 15, 2023

I was initially confused since ESP32 boards have supported speech recognition for years using […]

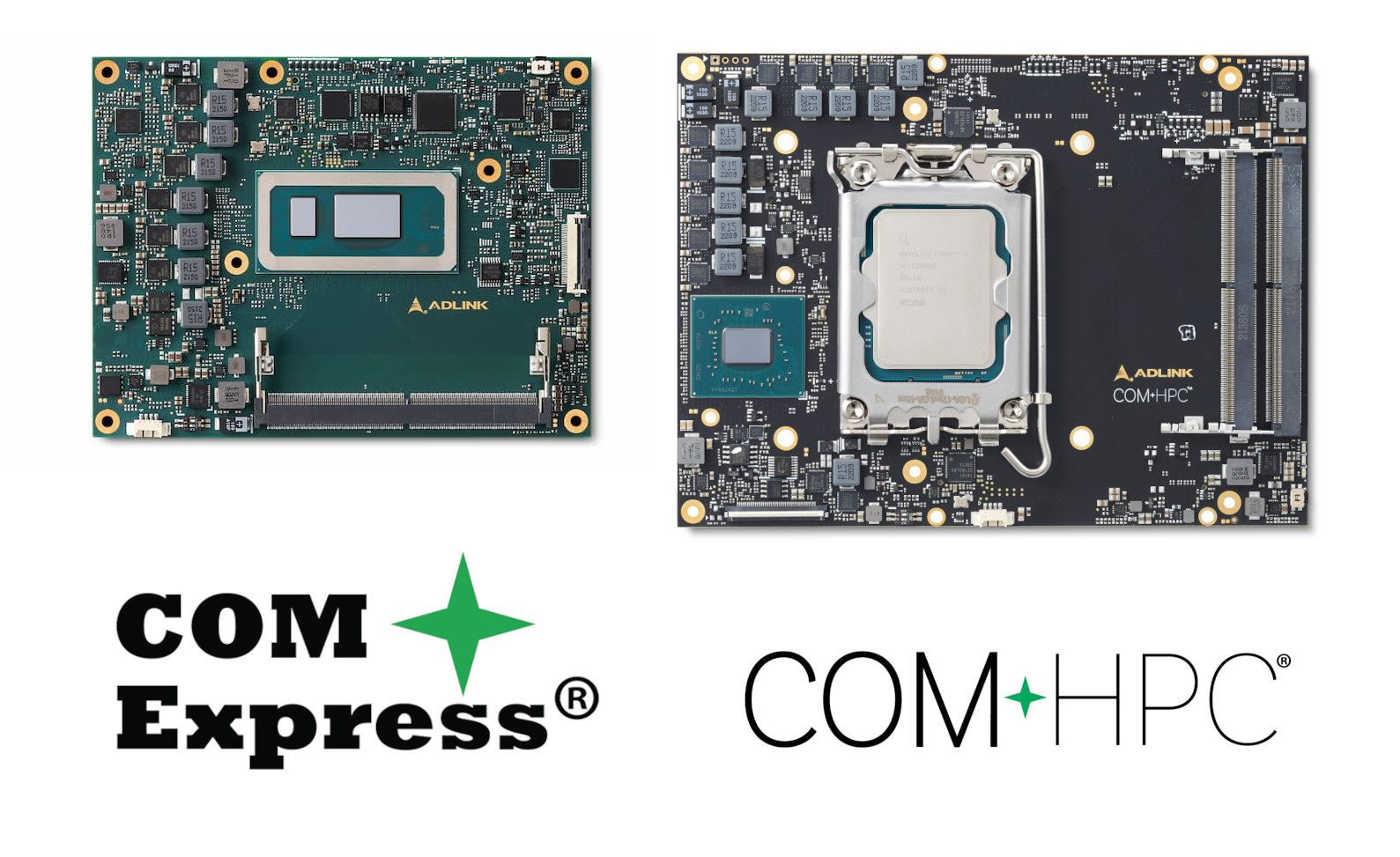

Selecting Raptor Lake COM-HPC or COM Express modules for your application (Sponsored)

ADLINK Technology introduced the COM-HPC-cRLS (Raptor Lake-S) COM-HPC size C module and the Express-RLP (Raptor Lake-P) COM Express Type 6 module at the beginning of the year. We covered the specifications for both in detail at the time of the announcement, so in this post we’ll compare the advantages and benefits of the two types of 13th generation Raptor Lake modules, as well as 12th Gen Alder Lake-S and 13th Gen Raptor Lake-S processor performance, to help potential buyers select the right one for their applications.

Raptor Lake COM-HPC vs COM Express modules

The table above provides a high-level comparison between the COM-HPC-cRLS (Raptor Lake-S) and the Express-RLP (Raptor Lake-P) modules. The Raptor Lake-S socketed processor family found in the COM-HPC module provides more processing power and supports up to 128GB DDR5, and the COM-HPC standard adds support for PCIe Gen5, which is not possible with the COM Express standard. The Raptor […]

UniHiker review – A Linux-based STEM education platform with IoT and AI support, Micro:bit edge connector

DFRobot’s UniHiker is a STEM educational platform that was originally launched in China, but is now available worldwide through the DFRobot shop. The company has sent us a UniHiker sample for review, so let’s unpack the kit and learn how to use the UniHiker platform. The main component of the kit is the Linux-powered UniHiker board, which features a 2.8-inch resistive touchscreen display and a BBC Micro:bit edge connector, so we can use expansion boards designed for the Micro:bit board. Let’s start unboxing it together.

UniHiker unboxing

DFRobot sent us the UniHiker platform by DHL. The package is a familiar-looking orange DFRobot box and comes with a plastic box to safely store the UniHiker board and accessories after use. The plastic box contains another plastic box with the board, some 3-pin and 4-pin cables for Gravity ports, and a USB Type-C cable. The UniHiker is like a […]

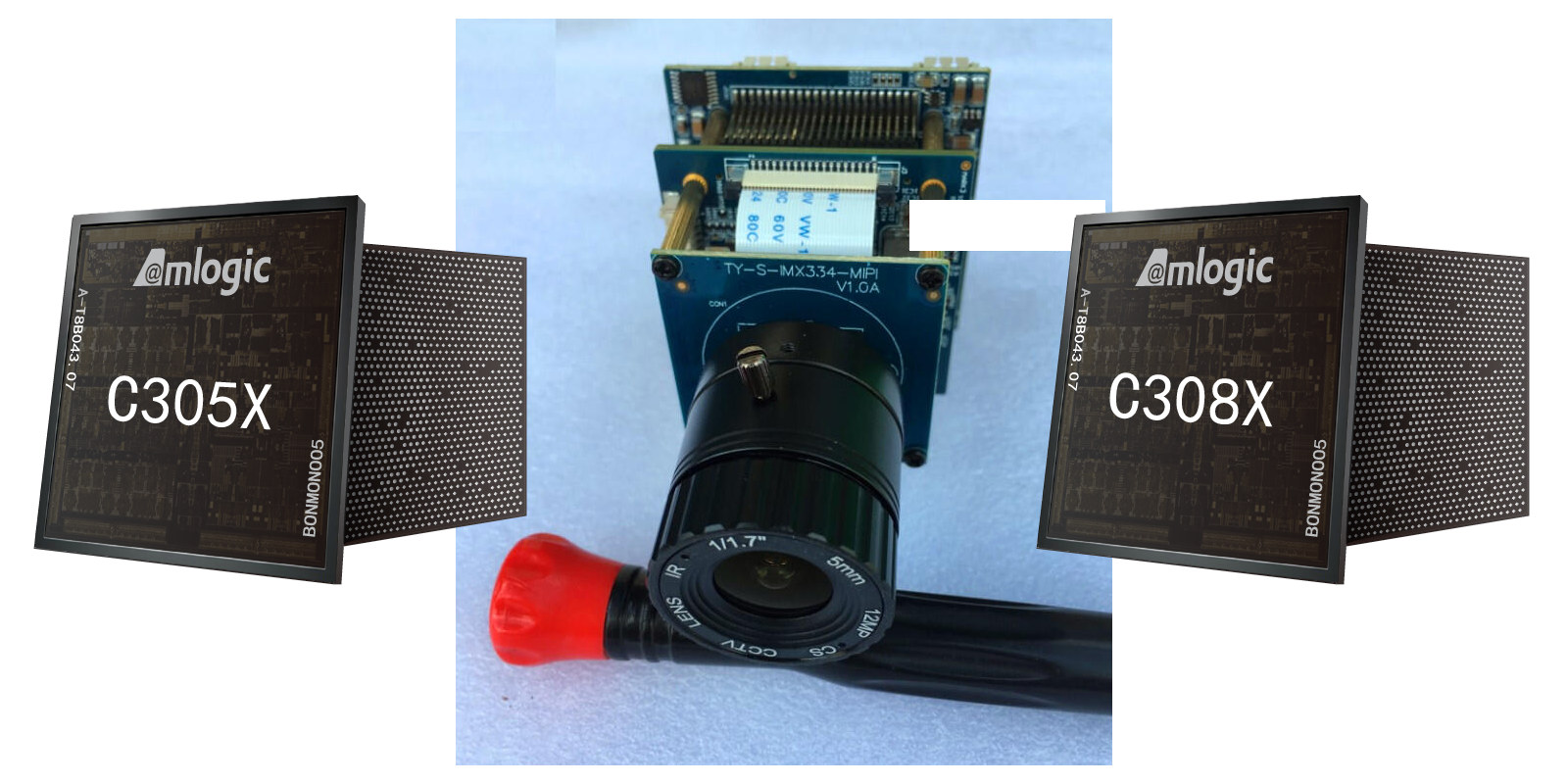

Amlogic C302X, C305X, and C308X Arm SoCs target Smart IP cameras

Amlogic C302X and C305X are dual-core Arm Cortex-A35 processors, while the C308X is a dual-core Cortex-A55 processor, with all three SoCs designed for Smart IP cameras and integrating an AI accelerator delivering up to 4 TOPS and 1080p30 H.264/H.265 video encoding. I was first informed about Amlogic C302/C305/C308 SoCs for Smart cameras as far back as June 2019, or about four years ago, but there wasn’t enough information to write anything about those at the time. I’m now seeing more details about the camera SoCs, now named C302X, C305X, and C308X, so it’s a good time to have a closer look.

Amlogic C302X

Amlogic does not have a product page for this model, so I need to rely on a recent commit in the Linux Kernel mainline list to extract some information. The Amlogic C302X is a dual-core Cortex-A35 processor found in the Amlogic AW409 development board (likely internal […]

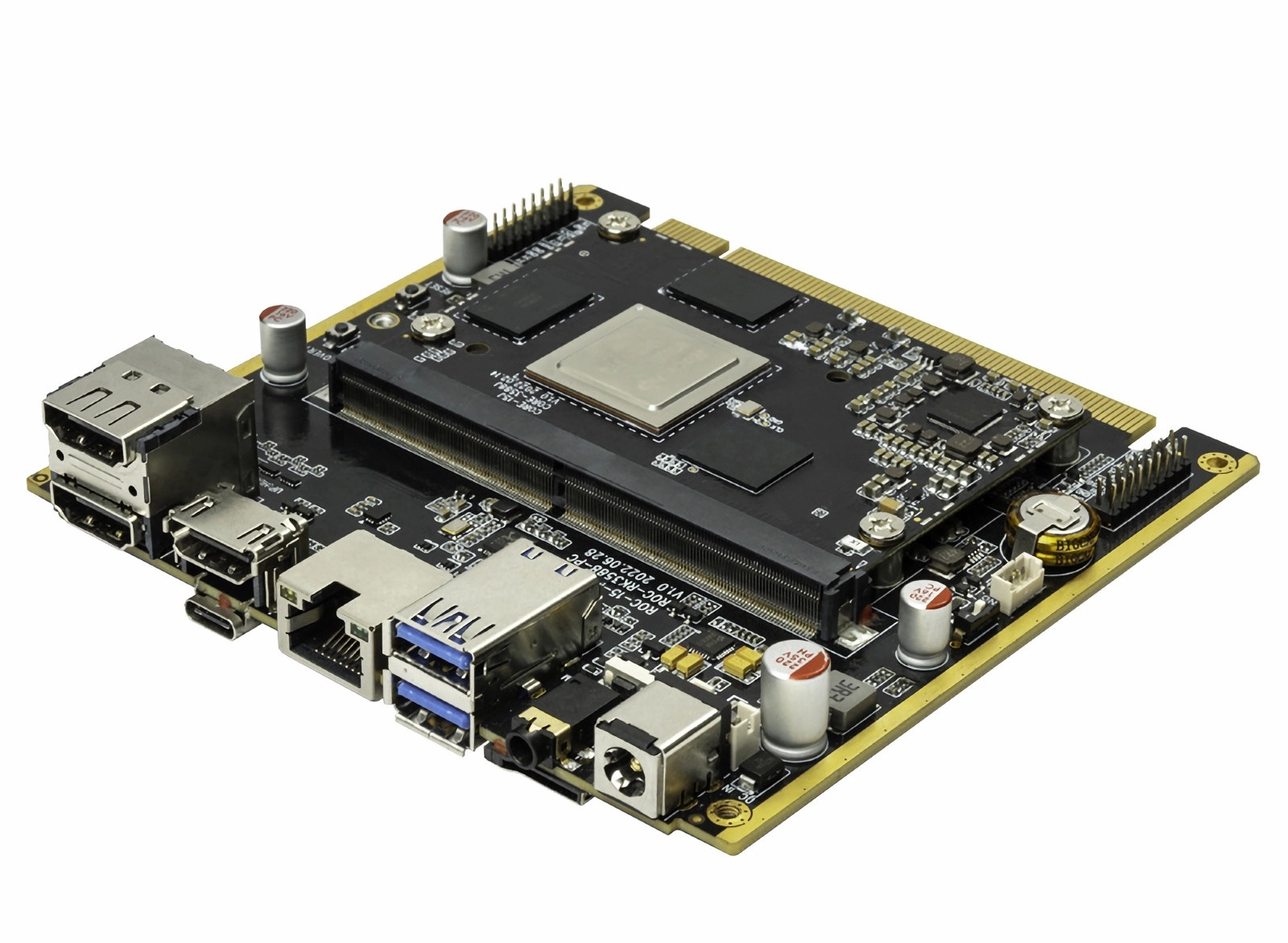

Firefly’s Rockchip RK3588 SBCs are now available with 32GB RAM

The Rockchip RK3588 datasheet clearly states the processor supports up to 32 GB RAM, and many vendors claimed their boards supported up to 32GB RAM at launch, but none were to be found once you accessed a shop, and the maximum has been 16GB RAM so far, likely due to the availability and high price of the two 16GB RAM chips needed to get 32GB of RAM. But Firefly has now discreetly started to sell boards with 32GB RAM, including the ROC-RK3588-PC, ROC-RK3588S-PC, and ITX-3588J mini-ITX motherboard. I’ve just noticed I never covered the former, so I’ll have a closer look at it today.

Firefly ROC-RK3588S-PC specifications: SoM – Core-3588J system-on-module SoC – Rockchip RK3588 octa-core processor with four Cortex-A76 cores @ up to 2.4 GHz, four Cortex-A55 cores, Arm Mali-G610 MP4 quad-core GPU with OpenGL ES3.2 / OpenCL 2.2 / Vulkan 1.1 support, 6 TOPS NPU, and an 8Kp60 […]

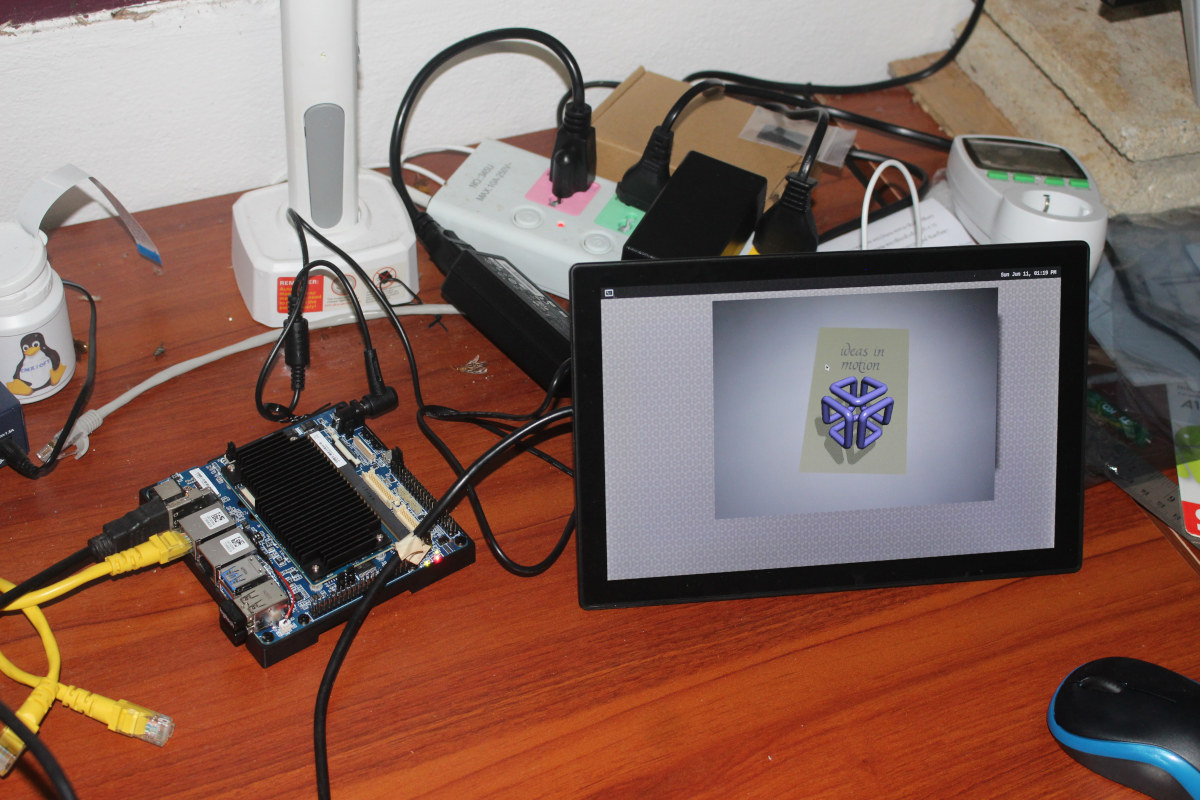

i-Pi SMARC 1200 (MediaTek Genio 1200) devkit tested with a Yocto Linux image

Last weekend I received ADLINK’s i-Pi SMARC 1200 development kit powered by the MediaTek Genio 1200 octa-core Cortex-A78/A55 AIoT processor and checked out the hardware. I wanted to install the Yocto Linux image, but stopped in my tracks because it looked like I had to install Ubuntu 18.04 first in a virtual machine or on another computer. The documentation has finally been updated to clarify that “Ubuntu 18.04 or greater” is required, and I had no problem flashing the image from an Ubuntu 22.04 laptop after installing dependencies and tools as follows:

```bash
# Install the Android platform tools (adb and fastboot)
$ sudo apt install android-tools-adb android-tools-fastboot
# Add udev rules so the board's USB and GPIO devices are accessible without root
$ echo 'SUBSYSTEM=="usb", ATTR{idVendor}=="0e8d", ATTR{idProduct}=="201c", MODE="0660", GROUP="plugdev"' | sudo tee -a /etc/udev/rules.d/96-rity.rules
$ echo -n 'SUBSYSTEM=="usb", ATTR{idVendor}=="0e8d", ATTR{idProduct}=="201c", MODE="0660", TAG+="uaccess"
SUBSYSTEM=="usb", ATTR{idVendor}=="0e8d", ATTR{idProduct}=="0003", MODE="0660", TAG+="uaccess"
SUBSYSTEM=="usb", ATTR{idVendor}=="0403", MODE="0660", TAG+="uaccess"
SUBSYSTEM=="gpio", MODE="0660", TAG+="uaccess"
' | sudo tee /etc/udev/rules.d/72-aiot.rules
# Reload the udev rules and re-trigger device events
$ sudo udevadm control --reload-rules
$ sudo udevadm trigger
# Add the current user to the plugdev group
$ sudo usermod -a -G plugdev $USER
# Install the MediaTek AIoT tools
$ pip3 install -U -e "git+https://gitlab.com/mediatek/aiot/bsp/aiot-tools.git#egg=aiot-tools"
```
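Before flashing, it can help to confirm that the setup above actually took effect. The following is only a minimal sketch, not part of ADLINK's or MediaTek's documentation: the helper names `check_cmd` and `has_group` are made up for illustration, and the script only checks that `adb` and `fastboot` are on the PATH and that the current user is in the `plugdev` group.

```shell
#!/bin/sh
# Hypothetical sanity check for the host setup (helper names are illustrative).

# Print whether a command is available on the PATH.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok: $1"
    else
        echo "missing: $1"
    fi
}

# Return 0 if group $1 appears in the space-separated group list $2.
has_group() {
    case " $2 " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# adb and fastboot come from the apt packages installed earlier.
for tool in adb fastboot; do
    check_cmd "$tool"
done

# id -nG lists the group names of the current login session.
if has_group plugdev "$(id -nG)"; then
    echo "ok: user is in plugdev"
else
    echo "not in plugdev yet (log out and back in after usermod)"
fi
```

Group membership added with `usermod` only applies to new login sessions, so a "not in plugdev yet" message right after running the commands above usually just means you need to log out and back in.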

That’s it for the tools. Eventually, the development kit will support three images: Yocto Linux, Android 13 (July 2023), and Ubuntu 20.04 (Q3 2023). So that means only the Yocto Linux image is available from the download page at this time, and that’s what I’ll be using today. We’ll need to connect the micro USB to USB cable between the […]