Hardkernel teased us with the ODROID-HC1 Home Cloud server and the ODROID-MC1 cluster last August, both based on a cost-down version of the ODROID-XU4 board powered by the Samsung Exynos 5422 octa-core Cortex-A15/A7 processor. The ODROID-HC1 Home Cloud server launched shortly after, in September, for $49.

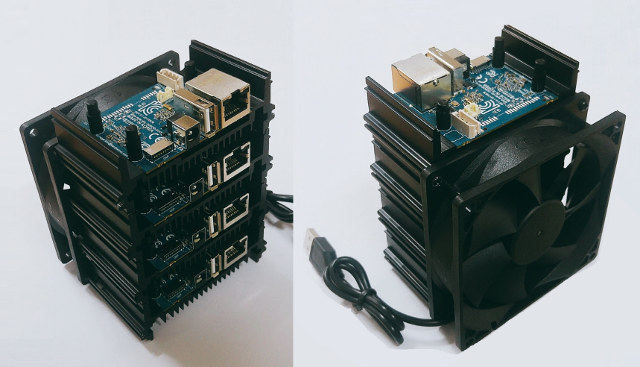

It took a little longer than expected for the cluster to launch, but ODROID-MC1 (My Cluster One) is finally here with four ODROID-XU4S boards and a metal case with a cooling fan. The solution sells for 264,000 won in South Korea, and $220 in the rest of the world.

ODROID-MC1 cluster specifications:

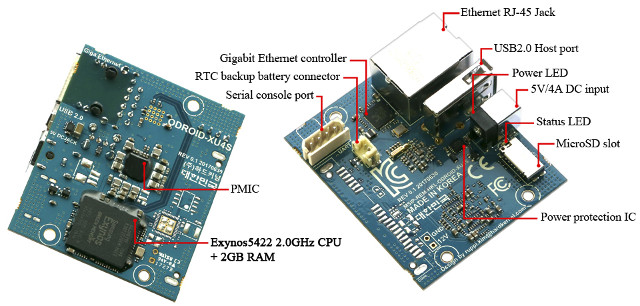

- Four ODROID-XU4S boards with

- SoC – Samsung Exynos 5422 with quad core ARM Cortex-A15 @ 2.0GHz + quad core ARM Cortex-A7 @ 1.4GHz, and Mali-T628 MP6 GPU supporting OpenGL ES 3.0 / 2.0 / 1.1 and OpenCL 1.1 Full profile

- System Memory – 2GB LPDDR3 RAM PoP

- Network Connectivity – 10/100/1000Mbps Ethernet (via Realtek RTL8153 USB 3.0 to Ethernet bridge)

- USB – 1x USB 2.0 port

- Misc – Power LED, OS status LED, Ethernet LEDs, UART for serial console, RTC backup battery connector

- Power Supply – 5V/4A via 5.5/2.1mm DC jack; Samsung S2MPS11 PMIC, Onsemi NCP380 USB load switch and TI TPS25925 over-voltage, over-current protection IC

- Dimensions – ~ 112 x 93 x 72 mm

ODROID-XU4S is software compatible with ODROID-XU4 board, so you could just use the Ubuntu images (Linux 4.9 or Linux 4.14), and instructions from the XU4 Wiki, but to make things easier, they’ve provided several tutorials specific to the cluster use case:

- Docker Swarm Getting Started Guide to automate installation on all boards in the cluster.

- Build Farm setup example with distcc to build the Linux kernel

- Java Parallel programming – An environment ready for experimenting with MPJ Express, a reference implementation of the mpiJava 1.2 API.

- PXE remote booting setup

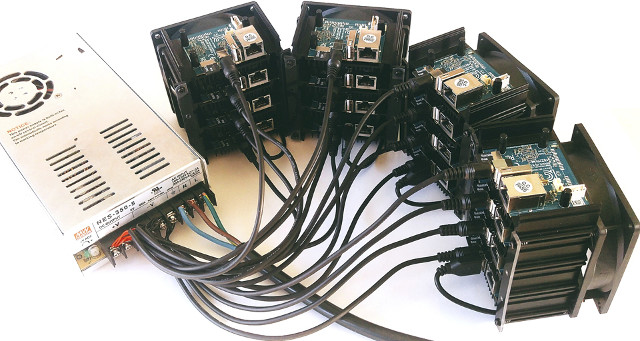

Note that the cluster is not sold with accessories by default, so you’ll need to make sure you also get a Gigabit switch with at least 5 ports, five Ethernet cables, four micro SD cards (8GB or greater), and four 5V/4A power supplies (or other 80W+ power supply arrangement as shown below).
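The accessory list above boils down to simple arithmetic. Here is a minimal sketch, assuming each board is budgeted at the full 5V/4A rating of its supply (a worst-case figure, not a measured draw) and that one switch port is kept for the uplink. The function names are only illustrative.

```python
# Rough accessory budget for one ODROID-MC1 (four boards).
# Assumption: each board is budgeted at the 5V/4A supply rating,
# which is a worst-case figure rather than a measured power draw.
NODES = 4
VOLTS = 5.0
AMPS_PER_NODE = 4.0


def supply_watts(nodes: int = NODES, volts: float = VOLTS,
                 amps: float = AMPS_PER_NODE) -> float:
    """Wattage a single shared power supply should be rated for."""
    return nodes * volts * amps


def switch_ports_needed(nodes: int = NODES, uplinks: int = 1) -> int:
    """One switch port per board, plus the uplink(s) to the rest of the network."""
    return nodes + uplinks


print(supply_watts())         # 80.0
print(switch_ports_needed())  # 5
```

This matches the 80W+ and 5-port figures quoted above. A second cluster on the same switch would only add four ports, since the uplink is shared.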

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

The ODROID-XU4S PCB seems to be the HC1 one, just without the JMS578 and the SATA connector soldered on. Which is a bit sad since the Exynos features 2 pretty fast USB3 ports and the SuperSpeed data lines of one of them are not exposed now (only available on the PCB where the JMS578 is usually placed).

@tkaiser

Yes, it’s the same board.

@cnxsoft

Hmm… I was thinking about use cases where the individual cluster nodes could benefit from fast local storage since the combination of JMS578 and the Exynos (without USB3 hub in between) with recent kernel and appropriate settings does not perform that bad (any more):

I was thinking about mSATA or M.2 SSDs with a slightly larger cluster device, but in case Hardkernel also sells the fan as an add-on to be combined with 4 HC1, users who need such fast storage can simply add normal SATA SSDs and build their cluster nodes with HC1 instead of MC1 🙂

@tkaiser

Well, if it is the same board, the USB3 lanes will be accessible. But unfortunately in the XU4 design the Gigabit NIC is connected to the USB3 OTG port… Else you would be able to use them as 4 fine USB3 devices with a lot of computing power.

I’ve been thinking about ordering one to see if it runs my build farm faster than my MiQi-based cluster. But I’m hesitating. I find the cluster a bit expensive for what it is: there’s no local storage so you have to add some. In the end it’ll be around $60 per board. Also I’ll have to buy a new, larger PSU, as the 4 MiQi and the switch only require a single 60W PSU to run stably.

In fact the board is a stripped down version of the HC1 (no SATA controller, smaller heatsink making it more likely to heat and make the fan spin), and buying 4 HC1 would be cheaper than a single MC1. I still find it a bit surprising.

And I’m still really uncertain it will build as fast as my MiQi-based cluster :-/ I’ll probably wait for other people buying one and reporting their findings.

Oh, I didn’t notice that each node is $5 more expensive than the HC1, which for sure provides better heat dissipation. Based on my tests, I would assume your use case will run at ~1.8 GHz on the A15 cores (w/o fan) on a bunch of HC1 lying next to each other and not stacked up. With a totally silent fan (a good 12V thingie for 10 bucks on the 5V rail) and the boards stacked, 2.0 GHz might be possible as a compile farm.

To somewhat justify the higher costs IMO they should at least ship with 4 SD cards flashed with PXE boot ready u-boot using a reasonable config falling back to a system test Linux buildroot thingie doing an extensive stress test (ideally using the great OpenBLAS Linpack which might be even able to detect underpowering issues), signalling with blue led when passed/failed and providing the test log at port 80 to be fetched automatically by a cluster master node (bonus: accepting on port 80 then PXE boot config as POST request and automatically adjusting u-boot config, rebooting afterwards). This would most probably ease a huge cluster bring-up a lot.

@willy

Hardkernel has some numbers in the build farm tutorial for the time it takes to build the Linux kernel using distcc with 2 to 8 nodes.

@cnxsoft

Yes I’ve already seen them but they just compare to their build node that they do not describe so it’s not easy to have a reference.

A standalone x86 machine with VT-d & PCI-E pass-through will beat this kind of cluster if you just want to try some new technology like Docker, Kubernetes & Hadoop. They should make it much smaller than an x86 machine. For example, use SPI flash to do PXE boot, and use PoE to draw power from the switch directly.

IIRC the Exynos 5422 can only boot from MMC and you would need PoE+ (802.3at) since 15.4W per node aren’t sufficient with demanding tasks 🙂
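The PoE remark can be checked with a quick sketch. It assumes the node is budgeted at its 5V/4A supply rating (20W, a worst case rather than a measurement) and uses the standard PoE figures: 15.4W at the switch port and 12.95W at the device for 802.3af, 30W and 25.5W for 802.3at. The dictionary and function names are only illustrative.

```python
# Per-standard PoE power in watts: (at the switch port, guaranteed at the device).
POE_WATTS = {
    "802.3af (PoE)": (15.4, 12.95),
    "802.3at (PoE+)": (30.0, 25.5),
}

# Assumption: budget one node at its 5V/4A supply rating (worst case).
NODE_SUPPLY_WATTS = 5.0 * 4.0


def standards_covering(load_watts: float) -> list[str]:
    """Return the PoE standards whose device-side power covers the load."""
    return [name for name, (_, at_device) in POE_WATTS.items()
            if at_device >= load_watts]


print(standards_covering(NODE_SUPPLY_WATTS))  # ['802.3at (PoE+)']
```

Real boards would also lose a little power in the PoE-to-5V conversion, which this sketch ignores.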

Why not create a list of tests and results you would like from Odroid and send it to them?

Not at this price. To put it in rough numbers, you have 16 2GHz cores, 4 DDR3-1866 channels and 8 GB of RAM for $220. For massively scalable workloads (and there are not that many), it can definitely beat an x86. My home build farm made of 6 MiQi boards (4 A17 at 1.8 GHz each) builds my kernels 2.5 times faster than my quad-core Skylake at 4.4 GHz. Building any single file however is much slower, it’s globally faster because 24 files are built in parallel instead of 4, with a per-core performance loss ratio much lower than 6 🙂
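To make the "much lower than 6" remark concrete, here is a small sketch using only the numbers quoted above: 6 boards with 4 cores each against 4 parallel jobs on the Skylake, and an observed 2.5x faster build. The function name is hypothetical.

```python
def per_core_ratio(cluster_cores: int, desktop_cores: int,
                   observed_speedup: float) -> float:
    """How many times slower one cluster core is than one desktop core,
    given how much faster the whole cluster finishes the build."""
    core_ratio = cluster_cores / desktop_cores
    return core_ratio / observed_speedup


miqi_cores = 6 * 4   # six MiQi boards, four Cortex-A17 cores each
skylake_jobs = 4     # files built in parallel on the quad-core Skylake

print(miqi_cores / skylake_jobs)                      # 6.0
print(per_core_ratio(miqi_cores, skylake_jobs, 2.5))  # 2.4
```

So with six times as many cores, each one only needs to be less than 6 times slower for the cluster to win. Here the implied per-core gap is about 2.4x, distcc overhead included.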

@willy

I think you missed @fan‘s ‘if you want just try some new technology like Docker, Kubernetes & Hadoop’ clause?

Anyway: Since I had to mess around today with too many cables I thought about the MC1 setup too. IMO way too many cables and switch ports are needed (for a 200 node cluster you would need to waste 5 rack units for switches alone). Maybe Hardkernel could make use of an el cheapo switch IC like Realtek’s RTL8370 providing 8 GbE PHYs and then create one cluster module using a huge 44x400mm metal heatsink with 7 Exynos 5422, 7 RTL8153 and some DC-DC circuitry to allow the thing to be powered with an appropriate external voltage. 2 JMS578 for the outer Exynos providing one mSATA and one M.2 2280 slot for the master node’s ‘local storage’ if needed.

I’m still impressed by HC1 heat dissipation –> the whole thing gets heated by the Exynos and can be cooled down with rather minimal efforts. So such a 7 node cluster module with 28 A15 and 28 A7 cores will need some ventilation to work at interesting clockspeeds but based on my experiences the needed fan can be rather silent if both fan and metal surface are large enough.

Now imagine a 3 RU enclosure with 3 x 120mm fans on each side, 3 rows with 9 such modules each and you have 756 A15 and 756 A7 cores in 3 rack units needing only 27 switch ports (hooray, 28 port switches exist 😉 ). Yeah, stupid idea but would be interesting 🙂
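The density numbers in this thought experiment can be reproduced with a few lines. This sketch assumes, as described above, 7 SoCs per module behind one 8-port switch IC, so each module needs a single uplink port. All names are made up for illustration.

```python
# Hypothetical 3 RU enclosure from the comment above.
ROWS = 3
MODULES_PER_ROW = 9
SOCS_PER_MODULE = 7       # 7 Exynos 5422 behind one 8-port switch IC
A15_PER_SOC = 4
A7_PER_SOC = 4
UPLINKS_PER_MODULE = 1    # the 8th switch port goes to the external switch

modules = ROWS * MODULES_PER_ROW
nodes = modules * SOCS_PER_MODULE

print(modules)                       # 27
print(nodes)                         # 189
print(nodes * A15_PER_SOC)           # 756
print(nodes * A7_PER_SOC)            # 756
print(modules * UPLINKS_PER_MODULE)  # 27
```

Compare that with 189 switch ports if every node were cabled individually, as on the MC1.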

BTW: Just looked through my data from when I tested the HC1 a while back. IMO the nicest DVFS OPP for cluster use cases is somewhere around 1.5GHz since energy consumption and heat emissions increase a lot above that. So if Hardkernel’s offer were a bit more competitive (maybe with such a module as above? 😉 ), throwing in more cores at a lower clockspeed would increase the performance/watt ratio significantly compared to trying to run everything at 2.0 GHz.

I was reading an old 2016 article about a Pi 2 cluster and thought I would share it in case others find anything of interest in the article

https://medium.com/@pieterjan_m/erlang-pi2-arm-cluster-vs-xeon-vm-40871d35d356

Also these

A Feasible Architecture for ARM-Based Microserver Systems Considering Energy Efficiency

http://ieeexplore.ieee.org/document/7831394/?reload=true

Denser, Cooler, Faster, Stronger: PHP On ARM Microservers

https://medium.com/@jezhalford/denser-cooler-faster-stronger-php-on-arm-microservers-eb9d0cb74226

The issue with the MC1 and the HC1 is the same. When you add import tax and transportation costs, that same $220 device suddenly costs 325 Euro. My single HC1 with shipping and import tax skyrocketed to almost 90 Euro.

That price gap is a problem in comparison with, let’s say, an AMD Ryzen 1600 with its 12 threads and massive speed difference (I have been running tests on test setups comparing the HC1 and the 1600). So even four HC1s or one MC1 have an issue with price vs performance.

The moment you try to get the same performance with maybe 8 or 9 HC1s (or two full MC1s!), the power draw increases massively, to the point that it actually loses to the AMD 1600. And while the Samsung ARM chip can be clocked at 1500 MHz for the best performance vs power, the same can be done by downclocking the AMD 1600, while still providing the performance boost.

For a long time I looked at ARM-based systems as a cheap hosting alternative to an AMD Ryzen and frankly, the current offerings fall short. Mostly because of import costs, the added transportation cost, the need for an extra 8-port switch (those things also suck power, people forget about that), the extra 8-socket power supply, the extra network cables etc …

The current offerings are either cheap but too slow to compete, or faster but too expensive.

In my personal opinion, the MC1 is useless. Anybody is better off buying 4 HC1 ($20 cheaper, bigger heatsink, SATA capable if you need it) and buying a fan of their choice in the EU.

If the MC1 had an internal network switch, or there was a board where you can plug the bare units in that provides power and network capability for $220 (including the 4 Samsung sockets), then things would have a better chance (still too expensive for the power when dealing with import and transportation, but better than this).

I find the HC1 and MC1 a missed opportunity ( for the EU anyway, if you can import in the US without tax, that may be a different issue ).

@Benny

Buying from EU distributors should be a little cheaper. For example, MC1 is sold for 264 Euros including VAT @ https://www.odroid.co.uk/ODROID-MC1

Shipping (to France for example) adds 13.50 Euros.

Check the list of distributors @ http://www.hardkernel.com/main/distributor.php