JPEG lossy compression is still used on most photos in the Internet, while PNG is still the preferred format for lossless compressions. Back in 2010, Google unveiled WebP to improve on both, but that’s only very recently that I started to see a few webp image on the Internet.

The company has been working on yet another image for with Pik lossy/lossless image format designed for high quality and fast decoding.

Some of the features enabling high quality:

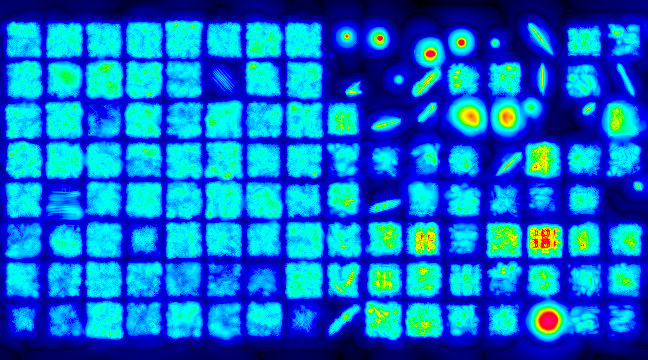

- Built-in support for psychovisual modeling via adaptive quantization and XYB color space

- 4×4..32×32 DCT, AC/DC predictors, chroma from luma, nonlinear loop filter, enhanced DC precision

- Full-precision (32-bit float) processing, plus support for wide gamut and high dynamic range

Features allowing faster decoding over 1 GB/s multi-threaded:

- Parallel processing of large images

- SIMD/GPU-friendly, benefits from SSE4 or AVX2

- Cache-friendly layout

- Fast and effective entropy coding: context modeling with clustering, rANS

Google Pik is royalty-free, and is said to achieve perceptually lossless encodings at about 40% of the JPEG bitrate, and store fully lossless at about 75% of 8-bit PNG size, or 60% of 16-bit PNG size.

The SIMD readme explains Pik leverages SSE4, AVX2, and ARMv8 instructions to improve performance.

You can give it a try by checkout the source code on Github. It should be noted that it’s pretty hard to change standards on the Internet, as shown by WebP, HEIF, and FLIF projects all of which are said to be technically superior to JPEG.

Via WorksonArm

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress. We also use affiliate links in articles to earn commissions if you make a purchase after clicking on those links.

> Google Pil is royalty-free

Typo.

And for those looking for a way to get smaller file sizes for images on the internet without (too much) visual harm look at https://tinypng.com — the methods how they shrink PNG and JPEG images are explained there (for JPEG at tinyjpg.com).

Re: tinypng and tinyjpg

Their methods are very rudimentary yet still very slooow.

PNG – They appear to be using color reduction — combining similar colors.

JPG – They appear to be simply removing metadata that a typical camera/phone places in image files. Aside from this, they utilize a very flowery description of what is already inherent in the JPEG algorithm.

At the same time, they lack the ability to re-scale images which is crucial for images on the internet.

An image file from your phone has millions of pixels — which makes sense if you intend to print a high quality photo. It makes no sense if you’re just texting a selfie to a friend, posting on Facecrook or inserting a snapshot into a web page. Re-scaling to a more sensible size for display on a computer screen typically saves a lot of storage space.

imageoptimizer.net offers more capability, flexibility and speed.

They remove metadata (which can be quite huge with images dropping out of Photoshop for example) but with PNG they do not ‘reduce’ color but switch to an optimized indexed color space. Wrt JPEG it’s explained what they do (JPEG allows for different compression schemes in different ‘parts’ of the image).

As for imageoptimizer.net I would believe the only benefit here is stripping (some) metadata and downscaling and reapplying different compression levels. Also it seems it doesn’t support PNG transparency (back to IE6 ‘capabilities’? Seriously?!)

At least for screenshots I really don’t want downscaling and a quick test reveals that imageoptimizer.net even increases file size for a typical macOS screenshot while tinypng (or switching to an indexed colorspace) reduces file size drastically (check metadata analysis at the ix.io link generated):

I guess it depends on the use case. With downscaling and decreasing image quality in mind imageoptimizer.net is an interesting option especially if users rely on Windows and download the program (me being a macOS users able to automate everything through ‘services’ fortunately don’t need to download apps)

For reference, I’m using EWWW Image Optimizer plugin on this site https://wordpress.org/plugins/ewww-image-optimizer/ that optimize PNG and JPG images that I upload. They also have a (paid) cloud-based solution @ https://ewww.io/

Also, there’s this nice simd ISA matrix in the simd seciton of the project that I find quite useful: https://github.com/google/pik/blob/master/pik/simd/instruction_matrix.pdf

Is that for their wrapper implementation though? For example, you can definitely do pairwise addition between 8-bit values with one instruction on x86 (pmaddubsw), but the table indicates that it takes 9. Another example is pairwise subtraction on ARM – it definitely doesn’t take 9 instructions to do.

I do see a lot of “9”s on the page – maybe that just means that it’s not implemented in SIMD at all?

It looks better if you ignore the “9”s, but still seems questionable, e.g. integer negation on x86 (8/16/32-bit) is one instruction (psign*), but listed as two on the table.

Yes, it appears to be for their wrapper. And yes, it’s a fuzzy table indeed, where ‘9’ appears to indicate ‘a few’. I haven’t gone over all cells, but those that I’ve glanced at seemed ok. BTW, pmaddubsw does 16-bit saturation of the pairwise results, so it’s not exactly one instruction that does the 8-bit pairwise job, like addp on asimd2 does. Re int negation in ssse3 — it appears they count the mask load as a separate op.

> BTW, pmaddubsw does 16-bit saturation of the pairwise results

8-bit addition cannot exceed the range of a 16-bit signed int, so saturation doesn’t apply here. The semantics are different to ARM’s addp in that it widens the result – it’s more like *addlp, though I’m sure emulating addp wouldn’t take 9 instructions.

> Re int negation in ssse3 — it appears they count the mask load as a separate op.

I suppose that’s a fair point, although it rarely applies in practice (since you can hold a register of all 1’s and just re-use that).

Though by that definition, I can’t think of any way to do int compare LE/GE/!= in 2 “instructions” on x86, unless there’s some magical way to do it I haven’t thought of.

movmskb (presumably they mean high bit of each element, not byte) emulation on ARM: I’d imagine different element sizes would be different in terms of speed (where smaller elements would be slower). Certainly, their implementation seems that way: https://github.com/google/pik/blob/e4b2c7ac71da9b35a9e45a107077f9d33a228012/pik/simd/arm64_neon.h#L2563

A lot of the table seems to be fair, but it’s also questionable in many places, I’d argue.

> 8-bit addition cannot exceed the range of a 16-bit signed int, so saturation doesn’t apply here. The semantics are different to ARM’s addp in that it widens the result – it’s more like *addlp, though I’m sure emulating addp wouldn’t take 9 instructions.

Saturation doesn’t apply, but the widening does, so it’s not a single op, was my point. To get the effect of asimd2’s addp you’d need something along:

pmaddubsw v0, v_ones

pmaddubsw v1, v_ones

pand v0, v_low_bytes_mask

pand v1, v_low_bytes_mask

packuswb v0, v1

which even without the multiplier/mask loading is still a far cry from a single op. Add to the fact how the table seems to count const loads as extra ops, and that operation goes beyond ‘5’ and into the ‘9’ category.

> I suppose that’s a fair point, although it rarely applies in practice (since you can hold a register of all 1’s and just re-use that).

True, but it seems these are the rules of the table /shrug

> Though by that definition, I can’t think of any way to do int compare LE/GE/!= in 2 “instructions” on x86, unless there’s some magical way to do it I haven’t thought of.

For ints the natural LE/GE solution is exactly two ops: LE == GT + not. For uints a two-op solution is not so intuitive — it’s umax + EQ.

> movmskb (presumably they mean high bit of each element, not byte) emulation on ARM: I’d imagine different element sizes would be different in terms of speed (where smaller elements would be slower).

Their implementation is indeed slower, but it does not need to be as slow, IMO. They can cut down on some of the pairwise ops by use of scalar regs, which would also likely co-issue better.

> A lot of the table seems to be fair, but it’s also questionable in many places, I’d argue.

I think that as long as the categories are taken as ‘1’ — native op, ‘2’ through ‘5’ — ‘about that count’, and ‘9’ as ‘clearly beyond 5’, it all fits together rather nicely.

> Add to the fact how the table seems to count const loads as extra ops, and that operation goes beyond ‘5’ and into the ‘9’ category.

I count 7 from your implementation, so I was right about it definitely not being 9.

> For ints the natural LE/GE solution is exactly two ops: LE == GT + not

According to the table, GT is 1 op, NOT is 2 ops, so GT+NOT = 3 ops, not 2.

You do bring up a good point with MAX+EQ though – I did not consider that.

Can you think of a 2 operation !=?

> I think that as long as the categories are taken as ‘1’ — native op, ‘2’ through ‘5’ — ‘about that count’, and ‘9’ as ‘clearly beyond 5’, it all fits together rather nicely.

I do find plenty other things questionable, e.g.

broadcast any lane 8/16-bit on x86: load indicies + pshufb (2 ops)

abs 64-bit x86: 5 ops

pxor xmm0, xmm0

vpcmpgtq xmm0, xmm0, v

pxor v, xmm0

psrlq xmm0, 63

paddq v, xmm0

BlendV with full bit mask: I guess they’re referring to ARM’s vbsl instruction, but x86’s pblendvb uses MSB of bytes, so arguably, there’s no native x86 operation here

I’m sure I could find more.

Then again, if you’re just arbitrarily going to assign meaning to it, well, I don’t have much to say since you’ll always be able to arbitrarily interpret it in a way that suits you.

> I count 7 from your implementation, so I was right about it definitely not being 9.

Many of the ops there are not 9 ops in either x86 or arm. I believe I’ve already explained my interpretation of the table, to which you disagree. So we agree to disagree. I’ll just comment on a couple of things below.

> According to the table, GT is 1 op, NOT is 2 ops, so GT+NOT = 3 ops, not 2.

You’re right — with the const load that would indeed account to 3 (as in the not case accounting to 2, which I missed).

> broadcast any lane 8/16-bit on x86: load indicies + pshufb (2 ops)

That’s a valid criticism — no idea what they meant there — likely a bug.

> abs 64-bit x86: 5 ops

You _really_ don’t wont to mix sse and avx ops on an amd64. Here’s a proper sse4.2 version:

pxor v_zeros, v_zeros

movdqa mask, v

pcmpgtq v_zeros, mask

pxor v, mask

psubq v, mask

avx would save the move, naturally. Perhaps the authors came up with a tad less optimal solution /shrug

> Then again, if you’re just arbitrarily going to assign meaning to it, well, I don’t have much to say since you’ll always be able to arbitrarily interpret it in a way that suits you.

I’m not “arguably assigning” meanings — clearly (aside from honest mistakes) the table contains loose rather than exact counts, ‘9’ being a very clear example of that — none of the implementations we’ve discussed so far accounts to exactly 9 ops, and I believe we’d be hard-pressed to find one that maps exactly to 9. If you think of it, ‘1’, ‘2’, ‘3’, ‘5’ and ‘9’ are rather odd mappings — something somewhere could map to 4, 6, 7, 8 or more than 9, no?

Could the table have been made exact? By all means. Nobody claimed it was precise in its current form, too boot — I surely didn’t, and the authors say nothing on the subject either. The very notion of counting ops is already an approximation in its own. But it’s the only table that I’ve seen so far where somebody actually bothered to provide even a loose estimate of mapping basic ops to various popular simd ISAs, and low and behold, most of those are not far off (bar from bugs) — if one could shave off an op — great.

> You _really_ don’t wont to mix sse and avx ops on an amd64

It was mostly to save typing a third parameter, since you get the idea anyway and the compiler would never mix the two. Though I really see no problem with it since there’s no transition penalty between 128b instructions, and SSE encodings are often shorter than VEX encodings.

> avx would save the move

I derped when I thought of the implementation, but the move isn’t necessary for SSE:

pxor xmm0, xmm0

pcmpgtq xmm0, v

paddq v, xmm0

pxor v, xmm0

So 4 ops (assuming you count the first “pxor” as an op), a far cry from 9, 7, >5 or whatever “9” means (unless it now means “4”, in which case 9 the table contains loose rather than exact counts

I see no indication on the table of such. “9” means 9, it doesn’t mean 7 or 2, or “about 6” – any 5 year old can tell you that. If they meant “more than 5”, then they could’ve written “>5” or “5+”, or if they weren’t sure, perhaps “~9”, or even a footnote somewhere like “Note 9 is used to refer to anything beyond 5”.

Claiming that the counts are loose is something you’ve just made up to suit your narrative, from what I can tell at least.

At the very best, the information is misleading.

> Nobody claimed it was precise in its current form

I claim that 1+2=2. I make no claims in regards to the precision of the answer. Do you agree?

> bar from bugs

Of which there seem to be many, but then, I’m sure you’ll just shrug them all off if I point them out.

My narrative? I’ve explained my ‘narrative’ about three posts ago. Judging by your last response, you’re yet to catch up with that.

> I derped when I thought of the implementation, but the move isn’t necessary for SSE:

Congratulations on shaving off the move from the sane implementation. Perhaps the authors have derped as well in some of their mappings? It’s funny when we hold others to high standards, when we can just derp and come around on a high horse later.

> I claim that 1+2=2. I make no claims in regards to the precision of the answer. Do you agree?

I do. What’s there to disagree about — approximation is at the very foundations of computer science and engineering in general, as some grown-ups could tell you.

I see the table bugs you a lot. You could point out any bugs/inconsistencies to the authors. Instead you’d rather lower the conversation to the numerical understanding of 5-year olds. I see no point in carrying on with this conversation, as I’ve said all there was to say from my POV. Have a productive day!

> I see the table bugs you a lot

It actually doesn’t.

Your claim that it’s useful is what bugs me a lot, even when a number of mistakes were pointed out. Or rather, the endorsement promoting misleading information being disseminated is what bugs me a lot.

Wrong information is not useful in my book. “1+2=2” is wrong, and hence not a useful statement in my book. Clearly you think otherwise, which is fine I suppose, but methinks that you may be alone with that view.

(note that *accurate* statements don’t have to be precise: “1+2 is between 2 and 4” is accurate, and hence, fine, although less _useful_ than the more precise answer)

> I’ve said all there was to say from my POV. Have a productive day!

Same to you. Thank you for sharing your opinions and responses.

PIK is merely a research project. PIK, avif and jpeg have joined together to make the Jpeg XL image format which will be ready later this year.