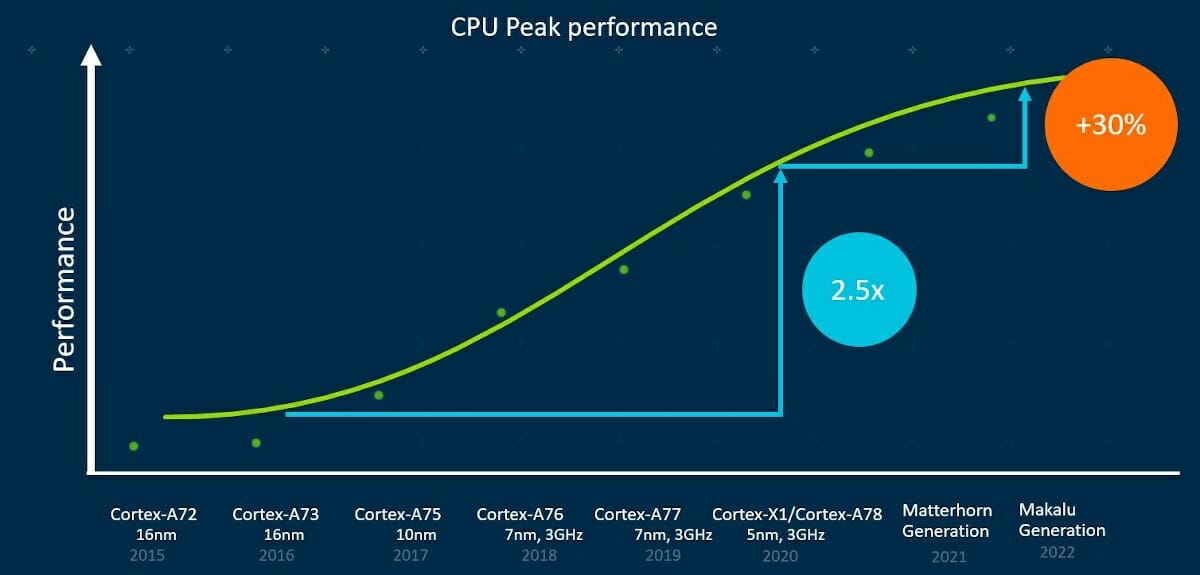

The Arm DevSummit 2020, previously known as Arm TechCon, is taking place virtually this week until Friday 9th, and besides some expected discussions about NVIDIA’s purchase of Arm, there have been some technical announcements, notably a high-performance CPU roadmap for the next two years, which will see Matterhorn (Cortex-A79?) in 2021, and Makalu (Cortex-A80?), the first 64-bit only Arm processor, in 2022.

The company did not provide many details about the new cores, but it expects a peak performance uplift of up to 30% from the Cortex-A78 to the future Makalu generation. It should be noted that while performance keeps improving, the curve has flattened a bit.

But the main announcement is that starting from 2022, all high-end Arm CPU cores (i.e. the “big” cores) will be 64-bit only. So far, most Cortex-A cores have supported both the 32-bit (AArch32) and 64-bit (AArch64) execution states, and as we noted four years ago, the latter not only makes it possible to address more memory, but its 64-bit instructions can also boost performance by 15 to 30% compared to 32-bit instructions.

Arm explains it made the move because complex digital immersion experiences and compute-intensive workloads from future AI, XR (AR and VR), and high-fidelity mobile gaming require 64-bit for improved performance and compute capabilities. The move to 64-bit only will also lower the cost and time-to-market of mobile apps, since developers of apps for high-end devices will be able to focus on 64-bit development only.

https://twitter.com/ArmMobile/status/1313864805615370241

Since most phones will likely ship with DynamIQ SoCs combining 64-bit-only big cores with 32-bit/64-bit LITTLE Arm cores, “legacy” 32-bit apps should still be able to run on those phones, but only on the LITTLE cores. What’s a little confusing is that Arm talks about “64-bit only mobile devices expected to arrive by 2023”, implying 32-bit apps will not be supported at all. We’ll have to wait a little longer to understand the implications of the move.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


SBC, TV, and TV box manufacturers can always work on better cooling to add more GHz to their designs, something GPU manufacturers have more experience with.

That would help with the flattening performance curve.

Are the Cortex-A53 and A55 cores in lower-end phones 64-bit?

All Cortex A7x/A5x are 64-bit, but they also support 32-bit instructions. Makalu will not support 32-bit instructions at all.

Yes, I follow that, but with lower-end cores still around, mid-range to budget products that run both 32-bit and 64-bit code could be viable for several years to come.

Unless Google says that’s it and removes all 32-bit APKs from Android.

What about TVs, TV boxes, etc.?

Is the Amazon Fire TV 64-bit only, or 64-bit and 32-bit?

What is Roku OS: 32-bit, 64-bit, or both?

I think that a (painful) model where the small cores support 32-bit but not the large ones will appear and last a long time. While most modern OSes have no problem with 64-bit and will trivially switch (at the cost of 30% code size inflation and 50% data size inflation), other mainstream OSes like Windows have a much more painful migration path due to being LLP64. For those not used to this, it means that a “long” remains 32-bit and can no longer hold a pointer once you switch to 64-bit. You have to store it in a long long but…
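To make the LP64/LLP64 difference concrete, here is a minimal C sketch, assuming a C99 compiler with `<stdint.h>`. The helper names (`data_model`, `ptr_to_int`, `int_to_ptr`, `long_truncates_pointers`) are hypothetical. It classifies the target from `sizeof` values and round-trips a pointer through `uintptr_t`, the usual portable alternative to storing a pointer in a `long` (technically optional in C99, but provided by mainstream toolchains).

```c
#include <stdint.h>

/* Classify the C data model of the current target from type sizes.
 * ILP32: int, long and pointers are all 32-bit.
 * LP64:  long and pointers are 64-bit (64-bit Linux/Android, macOS).
 * LLP64: pointers and long long are 64-bit, long stays 32-bit
 *        (64-bit Windows). */
const char *data_model(void)
{
    if (sizeof(int) == 4 && sizeof(long) == 4 && sizeof(void *) == 4)
        return "ILP32";
    if (sizeof(int) == 4 && sizeof(long) == 8 && sizeof(void *) == 8)
        return "LP64";
    if (sizeof(int) == 4 && sizeof(long) == 4 && sizeof(void *) == 8)
        return "LLP64";
    return "other";
}

/* uintptr_t is wide enough to hold a pointer on ILP32, LP64 and LLP64
 * alike, unlike long. */
uintptr_t ptr_to_int(void *p)
{
    return (uintptr_t)p;
}

void *int_to_ptr(uintptr_t v)
{
    return (void *)v;
}

/* Returns 1 when storing a pointer in a long would truncate it,
 * which is the case on LLP64 targets. */
int long_truncates_pointers(void)
{
    return sizeof(long) < sizeof(void *);
}
```

On 64-bit Linux or Android `data_model()` would typically report LP64, while on 64-bit Windows it reports LLP64 and `long_truncates_pointers()` returns 1.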

The cut-off is usually the amount of memory: on machines with less than 1GB, one would definitely want to run 32-bit code, while on machines with more than 2GB, one would definitely want to run 64-bit code. In the middle it could be either one, often depending on other factors like existing software. This means that any machine with a lot of RAM tends to have a 64-bit core (A35/A53/A55/A7x) out of necessity, while low-end machines tend to run exclusively 32-bit code even if they have 64-bit-capable cores. All the current Amazon Fire tablets fall into this…

Hi Arnd! I generally agree with your point above, but there are two exceptions: even with less than 1GB you may be interested in 64-bit to get the new instructions that come with it by default (e.g. idiv) or optionally (crypto, crc32, etc.). And even with more than 2GB you may be interested in a more compact and more efficient instruction set that often provides higher performance in userland thanks to better L1I cache hits, as long as your application does not need more than 2GB per process. That’s where the compatibility mode is interesting. I’ve been doing that…
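As an illustration of the optional CRC instructions mentioned above, the sketch below computes a standard CRC-32, assuming GCC or Clang with Arm ACLE support. When the target enables the CRC extension (e.g. `-march=armv8-a+crc`), the compiler defines `__ARM_FEATURE_CRC32` and the `__crc32b()` intrinsic from `<arm_acle.h>` is used; on any other target a bitwise software loop computes the same result. The function name `crc32_ieee` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#if defined(__ARM_FEATURE_CRC32)
#include <arm_acle.h>
#endif

/* Standard CRC-32 (reflected polynomial 0xEDB88320), the variant used
 * by zlib and Ethernet. */
uint32_t crc32_ieee(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;                 /* initial value */
    for (size_t i = 0; i < len; i++) {
#if defined(__ARM_FEATURE_CRC32)
        /* Armv8 CRC extension: one CRC32B instruction per byte. */
        crc = __crc32b(crc, data[i]);
#else
        /* Portable fallback: fold in the byte one bit at a time. */
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
#endif
    }
    return ~crc;                                /* final inversion */
}
```

The well-known check value for the ASCII string "123456789" is 0xCBF43926, which makes it easy to confirm that both code paths agree.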

I’m guessing there are a good three to five years of life left for low-priced 32-bit/64-bit products. People still buy the Raspberry Pi Zero.

Right, I was oversimplifying. When you have a specific application you are optimizing for, there are clearly additional factors and you may end up going the other way, as you describe. On the specific example of compilers, I have done some experiments as well with 32-bit binaries on x86. What I found there was that x32 compiler binaries tend to be the fastest by a good margin, but the complexity of maintaining them doesn’t seem worth the effort. 64-bit compilers appear to suffer mostly from the additional memory and cache usage of larger pointers within the internal data structures, while…
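To put a number on the pointer overhead described above, the sketch below compares a hypothetical tree node holding two child pointers with the same node using 32-bit array indices, a common trick for getting ILP32-like density on a 64-bit ABI. It assumes a typical LP64 target and is only an illustration, not a description of how any particular compiler stores its internal data; `ptr_node`, `idx_node`, `IDX_NONE`, and `idx_tree_sum` are made-up names.

```c
#include <stddef.h>
#include <stdint.h>

/* Pointer-based node: on a typical LP64 target this is 24 bytes
 * (8 + 8 + 4, padded to pointer alignment); on ILP32 it is 12. */
struct ptr_node {
    struct ptr_node *left;
    struct ptr_node *right;
    int32_t value;
};

/* Marker for "no child" in the index-based layout. */
#define IDX_NONE UINT32_MAX

/* Index-based node: children are positions in a node array, so the
 * node stays 12 bytes regardless of pointer width. */
struct idx_node {
    uint32_t left;   /* index of left child, or IDX_NONE */
    uint32_t right;  /* index of right child, or IDX_NONE */
    int32_t value;
};

/* Sum all values in a tree stored as an array of idx_node. */
int64_t idx_tree_sum(const struct idx_node *nodes, uint32_t root)
{
    if (root == IDX_NONE)
        return 0;
    return nodes[root].value
         + idx_tree_sum(nodes, nodes[root].left)
         + idx_tree_sum(nodes, nodes[root].right);
}
```

With 24-byte versus 12-byte nodes, roughly twice as many index-based nodes fit in each cache line on LP64, which is the same kind of saving x32 or ILP32 provides without changing the source.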

You have not misread your numbers; I made the exact same observations. I’m seeing about a 30% performance loss when building 64-bit binaries with a 32-bit compiler, making armv7 less interesting than armv8 for cross-compiling to x86_64. Like you, I concluded that the heavy 64-bit calculations on 32-bit were the culprit. I also gave up on x32 given that nobody uses it, some issues remain in the configure scripts, and it’s a bit of a pain to set up. Do you know if there’s anything equivalent in the ARM world? I’ve tried armv8l which…
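The cost of “heavy 64-bit calculations on 32-bit” shows up clearly when writing out what a single `uint64_t` multiplication becomes when only 32-bit multiplies are available. The sketch below is a minimal C illustration; `mul64_from_32` is a hypothetical name, and real compilers emit their own sequence (on Armv7, typically a UMULL for the low product plus MUL/MLA for the cross terms), versus a single MUL on AArch64.

```c
#include <stdint.h>

/* Low 64 bits of a 64x64-bit product built only from 32-bit pieces:
 *   a = ah*2^32 + al,  b = bh*2^32 + bl
 *   a*b mod 2^64 = al*bl + ((ah*bl + al*bh) mod 2^32) * 2^32
 * The ah*bh term only affects bits 64 and up, so it is dropped. */
uint64_t mul64_from_32(uint64_t a, uint64_t b)
{
    uint32_t al = (uint32_t)a, ah = (uint32_t)(a >> 32);
    uint32_t bl = (uint32_t)b, bh = (uint32_t)(b >> 32);

    uint64_t low = (uint64_t)al * bl;   /* 32x32 -> 64-bit product */
    uint32_t cross = ah * bl + al * bh; /* two 32-bit products, wrapping mod 2^32 */
    return low + ((uint64_t)cross << 32);
}
```

Division is worse still: 32-bit Arm has no 64-bit divide instruction, so compilers call a runtime helper for it.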

There is an equivalent of x32 for arm64, but we never merged it into mainline because the experience with x32 (and MIPS n32) showed that there just isn’t enough demand compared to the work involved in properly supporting an extra ABI in distros. The arm64 ILP32 patches for the kernel and glibc are cleanly implemented and I believe still have a small number of users, and compiler support is all there, but the current approach of continuing to support AArch32 on the “little” Arm cores while moving the “big” cores to 64-bit only is a better outcome in my opinion.…

Interesting issues about choosing between 32-bit and 64-bit mode. Back when the i386 was new, I wanted to support both 16-bit and 32-bit code on UNIX. This would allow the majority of programs to be compiled compactly, with only the few that would benefit from 32 bits compiled as 32-bit. But the 16-bit mode was so inferior that the UNIX distribution (System V Release 4) didn’t want to support 16-bit. With x86 and ARM, it would be a lot easier to pull off this 32/64-bit dual mode. It used to be the case that Sun SPARC kernels…