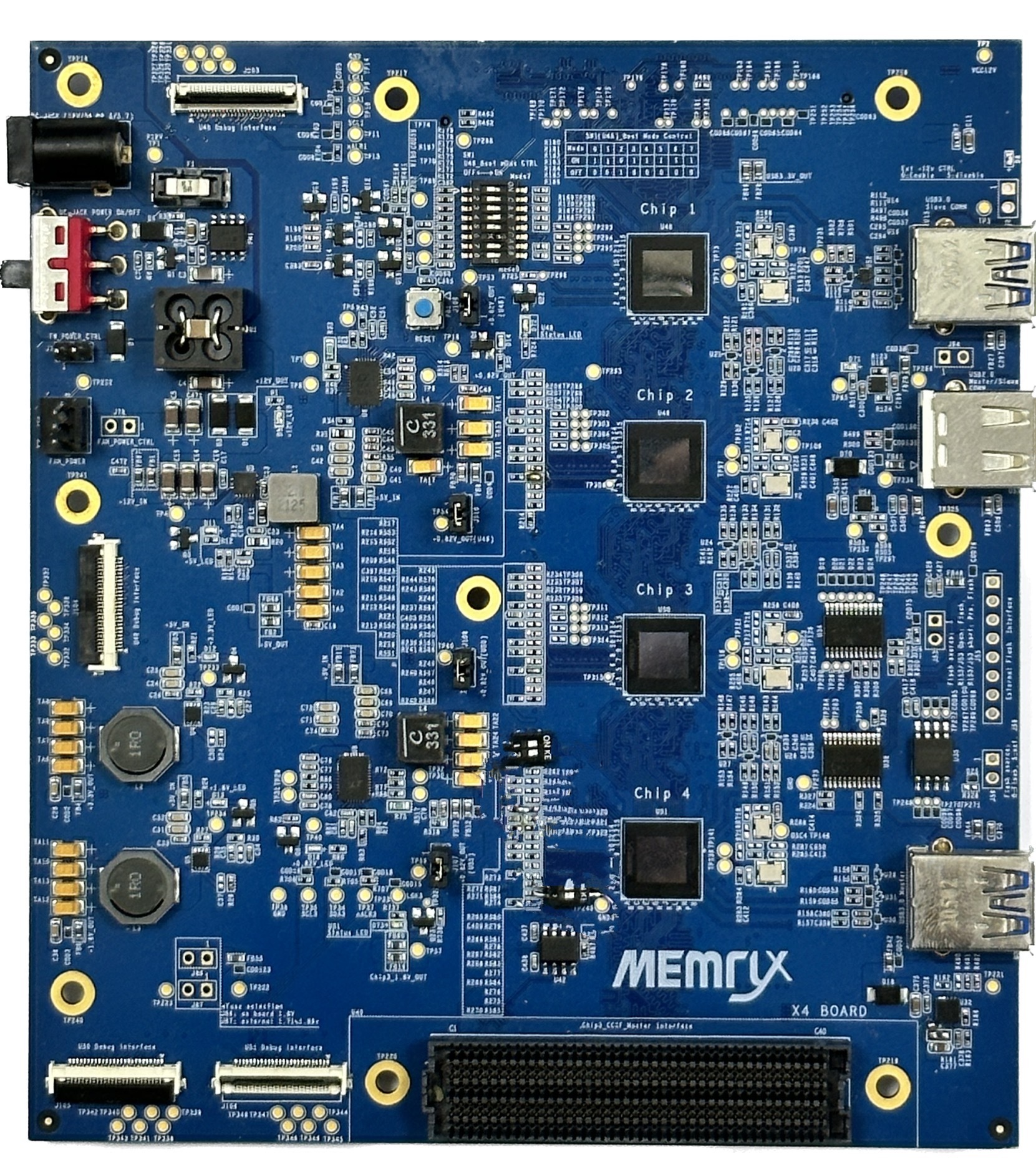

Jean-Luc noted the MemryX MX3 edge AI accelerator module while covering the DeGirum ORCA M.2 and USB Edge AI accelerators last month, so today, we’ll have a look at this AI chip and the corresponding modules that run computer vision neural networks using common frameworks such as TensorFlow, TensorFlow Lite, ONNX, PyTorch, and Keras.

MemryX MX3 Specifications

MemryX hasn’t disclosed many performance stats about this chip. All we know is that it offers more than 5 TFLOPS. The listed specifications include:

- Bfloat16 activations
- Batch = 1
- Weights: 4, 8, and 16-bit
- ~10M parameters stored on-die
- Host interfaces – PCIe Gen 3 I/O and/or USB 2.0/3.x
- Power consumption – ~1.0W
- 1-click compilation for the MX-SDK when mapping neural networks that have multiple layers

Under the hood, the MX3 features MemryX Compute Engines (MCE) which are tightly coupled with at-memory computing. This design creates a native, proprietary dataflow architecture that utilizes up to 70% […]
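To put the "~10M parameters stored on-die" figure in perspective, here is a rough back-of-the-envelope sketch of how much storage 10 million weights would need at each of the listed weight widths. The excerpt does not say which width the ~10M figure refers to, and the helper name `weight_storage_bytes` is made up for this illustration; the calculation also ignores any packing or metadata overhead.

```python
def weight_storage_bytes(num_params: int, bits_per_weight: int) -> int:
    """Return the bytes needed to store num_params weights, rounded up.

    Illustrative arithmetic only: real on-die layouts may add overhead.
    """
    total_bits = num_params * bits_per_weight
    return (total_bits + 7) // 8  # round up to whole bytes


# Assumption: exactly 10 million parameters, taken from the "~10M" in the specs.
NUM_PARAMS = 10_000_000

for bits in (4, 8, 16):
    size_mb = weight_storage_bytes(NUM_PARAMS, bits) / 1_000_000
    print(f"{bits:>2}-bit weights: {size_mb:.0f} MB")
```

In other words, the same parameter count takes four times more storage with 16-bit weights than with 4-bit weights, which is why the supported weight widths matter for what fits on-die.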

QEMU 9.0 released with Raspberry Pi 4 support and LoongArch KVM acceleration

The QEMU 9.0 open-source emulator was released a few days ago, and it brings major updates and improvements to Arm, RISC-V, HPPA, LoongArch, and s390x emulation. The most notable updates are in Arm and LoongArch emulation. QEMU 9.0 now supports the Raspberry Pi 4 Model B, meaning you can run the 64-bit Raspberry Pi OS for testing applications without owning the hardware. However, QEMU 9.0 has some limitations since Ethernet and PCIe are not supported for the Raspberry Pi board. According to the developers, these features will be added in a future release. For now, the emulator supports SPI and I2C (BSC) controllers. Still on Arm, QEMU 9.0 provides board support for the mps3-an536 (MPS3 dev board + AN536 firmware) and B-L475E-IOT01A IoT node, plus architectural feature support for Nested Virtualization, Enhanced Counter Virtualization, and Enhanced Nested Virtualization. If you develop applications for the LoongArch […]
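As a rough illustration of the Raspberry Pi 4 support mentioned above, the sketch below assembles a `qemu-system-aarch64` command line for the `raspi4b` machine type. The helper `raspi4b_command`, the file names, and the kernel command line are all assumptions made for this example and will need adjusting for your own Raspberry Pi OS image; the script only prints the command and does not launch QEMU.

```python
import shlex


def raspi4b_command(kernel: str, dtb: str, sd_image: str, kernel_cmdline: str) -> list[str]:
    """Build an argument list that boots a kernel on QEMU's raspi4b machine."""
    return [
        "qemu-system-aarch64",
        "-M", "raspi4b",                                # Raspberry Pi 4 Model B machine
        "-kernel", kernel,                              # 64-bit kernel extracted from the image
        "-dtb", dtb,                                    # device tree for the board
        "-drive", f"file={sd_image},if=sd,format=raw",  # OS image attached as an SD card
        "-append", kernel_cmdline,                      # kernel command line
        "-nographic",                                   # serial console on the terminal
    ]


# All paths and the kernel command line below are placeholders (assumptions).
cmd = raspi4b_command(
    kernel="kernel8.img",
    dtb="bcm2711-rpi-4-b.dtb",
    sd_image="raspios-arm64.img",
    kernel_cmdline="console=ttyAMA0 root=/dev/mmcblk1p2 rootwait",
)
print(shlex.join(cmd))
```

Since Ethernet and PCIe are not emulated yet for this board, a guest started this way should not be expected to have networking.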

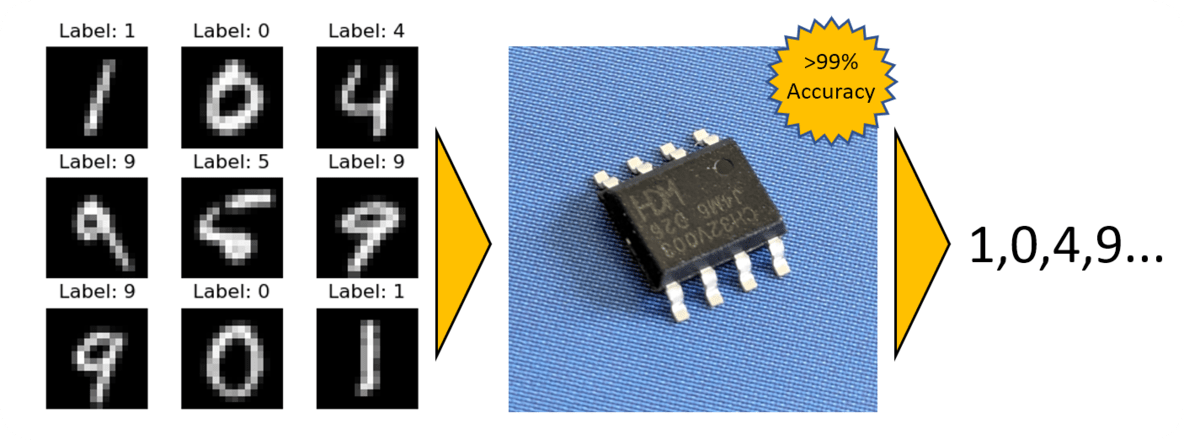

BitNetMCU project enables Machine Learning on CH32V003 RISC-V MCU

Neural networks and other machine learning processes are often associated with powerful processors and GPUs. However, as we’ve seen on this site, AI is also moving to the very edge, and the BitNetMCU open-source project further shows that it is possible to run low-bit quantized neural networks on low-end RISC-V microcontrollers such as the inexpensive CH32V003. As a reminder, the CH32V003 is based on the QingKe 32-bit RISC-V2A processor, which supports two levels of interrupt nesting. It is a compact, low-power, general-purpose 48MHz microcontroller with 2KB of SRAM and 16KB of flash. The chip comes in a TSSOP20, QFN20, SOP16, or SOP8 package. To run machine learning on the CH32V003 microcontroller, the BitNetMCU project uses Quantization Aware Training (QAT) and fine-tunes the inference code and model structure, which makes it possible to surpass 99% test accuracy on a 16×16 MNIST dataset without using any multiplication instructions. This performance is impressive, considering […]
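To give an idea of how inference can work "without using any multiplication instructions", here is a minimal sketch of a dot product where each low-bit weight is applied with shifts and additions only. This is a generic shift-and-add illustration, not BitNetMCU's actual code or weight encoding (the excerpt does not describe those), and the function names and the 4-bit magnitude limit are assumptions for the example.

```python
def shift_add_mul(activation: int, weight: int, bits: int = 4) -> int:
    """Multiply activation by a small signed weight using only shifts and adds."""
    magnitude = -weight if weight < 0 else weight
    if magnitude >= (1 << bits):
        raise ValueError(f"weight magnitude does not fit in {bits} bits")
    acc = 0
    for bit in range(bits):
        # For every set bit in the weight, add the activation shifted by that bit.
        if (magnitude >> bit) & 1:
            acc += activation << bit
    return -acc if weight < 0 else acc


def dot_no_mul(activations: list[int], weights: list[int], bits: int = 4) -> int:
    """Dot product of integer activations and low-bit weights, with no '*' operator."""
    total = 0
    for a, w in zip(activations, weights):
        total += shift_add_mul(a, w, bits)
    return total


activations = [3, -2, 5, 0]
weights = [1, -3, 2, 7]
print(dot_no_mul(activations, weights))  # 3 + 6 + 10 + 0 = 19
```

The fewer bits a weight has, the fewer shift-and-add steps each "multiplication" needs, which is one reason low-bit quantization suits a core without a hardware multiplier.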

Android no longer supports RISC-V, for now…

Google dropped RISC-V support from Android’s Generic Kernel Image (GKI) in recently merged patches. Filed under the name “Remove ACK’s support for riscv64,” the patches with the description “support for riscv64 GKI kernels is discontinued” on the AOSP tracker removed RISC-V kernel support, RISC-V kernel build support, and RISC-V emulator support. In simple terms, the next Android OS implementation that uses the latest GKI release won’t work on devices powered by RISC-V chips. Therefore, companies wanting to compile a RISC-V Android build will have to create and maintain their own branch from the Linux kernel (ACK RISC-V patches). These abbreviations can be confusing, so let’s go through them, starting with ACK. There’s the official Linux kernel, and Google does not certify Android devices that ship with this mainline Linux kernel. Google only maintains and certifies the Android Common Kernels (ACKs), which are downstream branches of the official Linux kernel. One of the main ACK branches is the android-mainline […]

Ambarella CV75S AI SoC brings Vision Language Models (VLM) and Vision Transformer Networks to cameras

Ambarella has been expanding its AI SoC portfolio, and the latest addition is the CV75S family of 5nm chips. The company claims this family is the most cost- and power-efficient SoC option for running the latest AI-based image processing, such as vision language models (VLMs) and vision transformer networks, in security, robotics, conferencing, and sports cameras. The CV75S family is the first in Ambarella’s lineup to integrate the latest CVflow 3.0 AI engine, which delivers 3 times the performance of the previous generation. CVflow 3.0 is a chip architecture designed based on a deep understanding of the core computer vision algorithms. It features a dedicated vision-processing engine that Ambarella has programmed using a high-level algorithm description and that works with TensorFlow, Caffe, and PyTorch. This engine enables the SoC to perform trillions of operations each second at a fraction of the power consumption of leading GPUs and general-purpose CPU solutions. These chips also […]