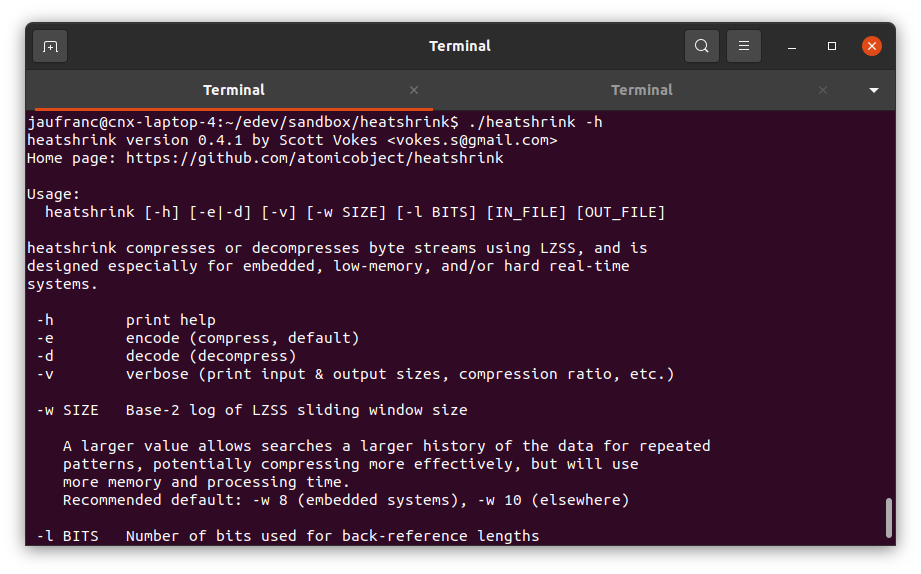

When I wrote about the Bangle.js 2 JavaScript smartwatch yesterday, I noticed it used “Heatshrink compression” in the Espruino firmware. I can’t remember ever reading about Heatshrink before, and indeed a search on CNX Software returns no results. Heatshrink is an open-source data compression library designed for resource-constrained embedded systems that works with as little as 50 bytes of RAM. That’s impressive, so let’s investigate. The library is written in C and was released about 8 years ago on GitHub with the following key features: low memory usage (as low as 50 bytes with specific parameters, and usually under 300 bytes); incremental, bounded CPU use (input data is processed in tiny bites); static or dynamic memory allocation; and an ISC license, which allows you to use the library freely, even in commercial products. The internal workings of the library are explained as follows: Heatshrink is […]

Google Introduces Draco Open Source 3D Mesh Compression Tool

Specific compression and/or encoding algorithms are used for video, audio, and files, and each time one watches a video, listens to music, or downloads a file from the Internet, the amount of data has likely been reduced thanks to the implementation of one of those algorithms. Google has been involved in the development of some algorithms and their implementation, such as the VP8/VP9/VP10 video codecs and brotli file compression. With the emergence of virtual and augmented reality applications and accompanying 3D mesh data, the company has also worked on 3D data compression, and just unveiled Draco. A simple web search showed me some other 3D mesh compression tools are already available, including Open3DGC and OpenCTM, but Google decided to compare Draco to GZIP instead, and it indeed offers much better compression than this general-purpose file compression tool. Encoding and decoding also appear to be fairly fast, although Google did not compare […]

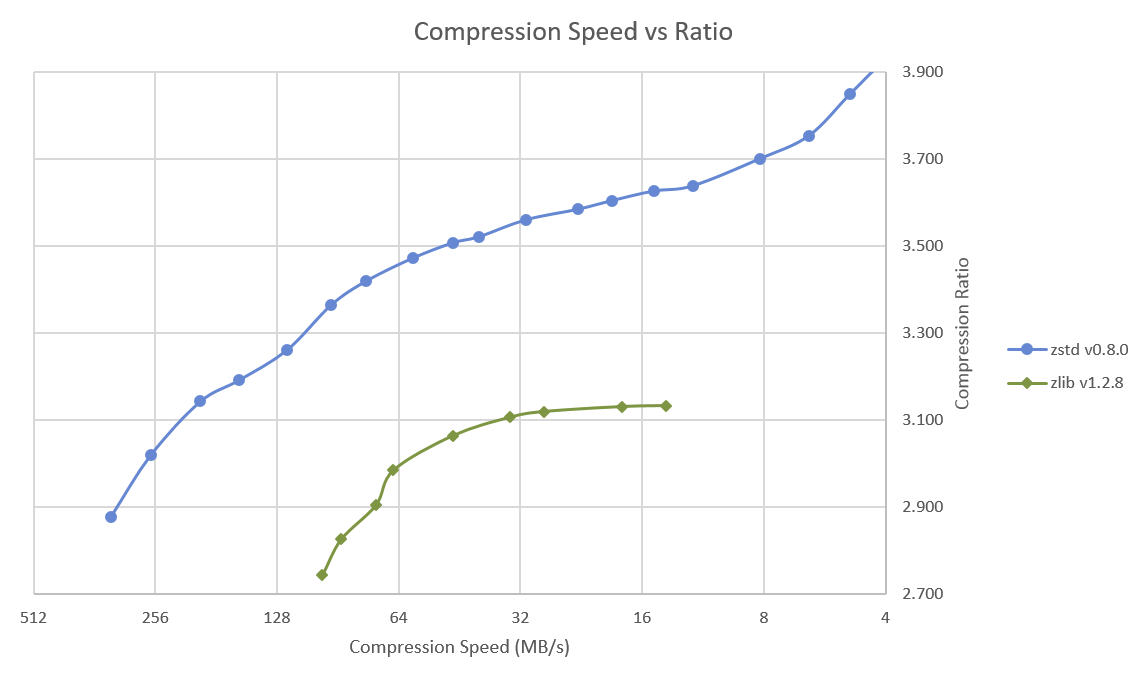

Facebook Zstandard “zstd” & “pzstd” Data Compression Tools Deliver High Performance & Efficiency

Ubuntu 16.04 and – I assume – other recent operating systems still use single-threaded versions of file and data compression utilities such as bzip2 or gzip by default, but I’ve recently learned that compatible multi-threaded compression tools such as lbzip2, pigz or pixz have been around for a while, and you can replace the default tools with them for much faster compression and decompression on multi-core systems. This post led to further discussion about Facebook’s Zstandard 1.0, which promises both smaller output and faster compression. The implementation is open source, released under a BSD license, and offers both the single-threaded zstd tool and the multi-threaded pzstd tool. So we all started to do our own little tests and were impressed by the results. Some concerns were raised about patents, and development is still a work in progress with a few bugs here and there, including pzstd segfaulting on ARM. Zlib has 9 levels of […]

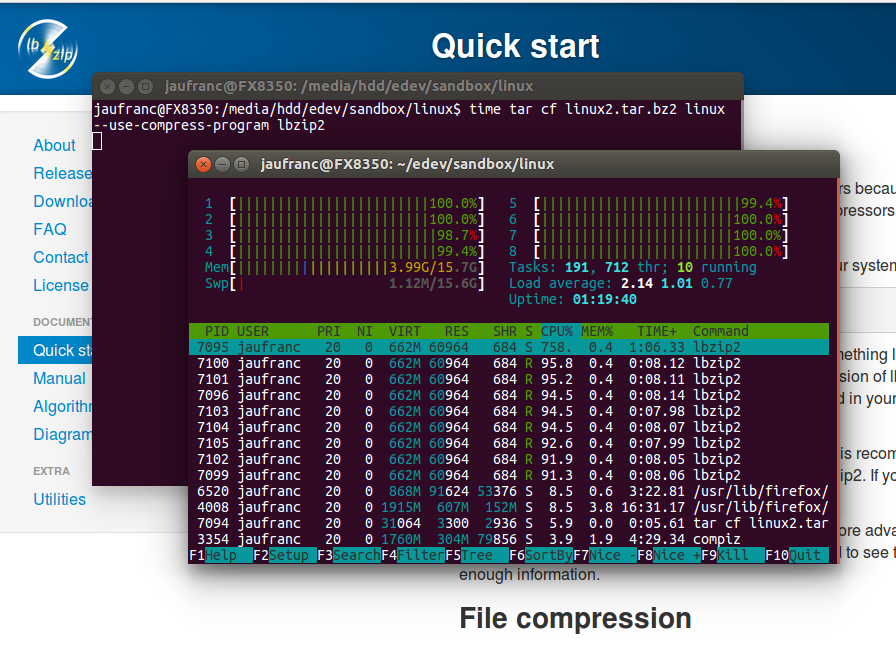

Compress & Decompress Files Faster with lbzip2 multi-threaded version of bzip2

Bzip2 is still one of the most commonly used compression tools in Linux, but it only works with a single thread, and I’ve been made aware that lbzip2 allows multi-threaded bzip2 compression, which should lead to much better performance on multi-core systems. lbzip2 was not installed by default on my Ubuntu 16.04 machine, but it’s easy enough to install:

```
sudo apt install lbzip2
```

I have cloned the mainline Linux repository on my machine, so let’s see how long it takes to compress the directory with bzip2 (single-core compression):

```
time tar cjf linux.tar.bz2 linux

real 9m22.131s
user 7m42.712s
sys 0m19.280s
```

9 minutes and 22 seconds. Now let’s repeat the test with lbzip2 using all 8 cores from my AMD FX8350 processor:

```
time tar cf linux2.tar.bz2 linux --use-compress-program=lbzip2

real 2m32.660s
user 7m4.072s
sys 0m17.824s
```

2 minutes 32 seconds. About 3.7 times faster, not bad at all. It’s not 8 times faster because you have to take I/O into account, and at the beginning the system is scanning the drive, using all 8 cores but not at full throttle. […]
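The speedup can be checked directly from the two `time` outputs above. The snippet below is a small sketch that converts the reported durations to seconds and divides them; the helper names `to_seconds` and `ratio` are made up for this illustration and are not part of any tool mentioned here.

```shell
# Convert a `time`-style duration such as 9m22.131s to seconds.
to_seconds() {
    echo "$1" | awk -F'[ms]' '{ printf "%.3f\n", $1 * 60 + $2 }'
}

# Divide two numbers and print the result with two decimals.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# "real" (wall-clock) times of the bzip2 and lbzip2 runs.
bzip2_real=$(to_seconds 9m22.131s)
lbzip2_real=$(to_seconds 2m32.660s)

# CPU time (user + sys) consumed by the lbzip2 run.
lbzip2_cpu=$(awk -v u="$(to_seconds 7m4.072s)" -v s="$(to_seconds 0m17.824s)" \
    'BEGIN { print u + s }')

echo "wall-clock speedup: $(ratio "$bzip2_real" "$lbzip2_real")x"
echo "average busy cores with lbzip2: $(ratio "$lbzip2_cpu" "$lbzip2_real")"
```

With the numbers from this test, the wall-clock speedup comes out at about 3.68x, and CPU time divided by wall-clock time suggests that only around 2.89 of the 8 cores were busy on average, which is consistent with part of the run being limited by I/O.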

Lepton Image Compression Achieves 22% Lossless Compression of JPEG Images (on Average)

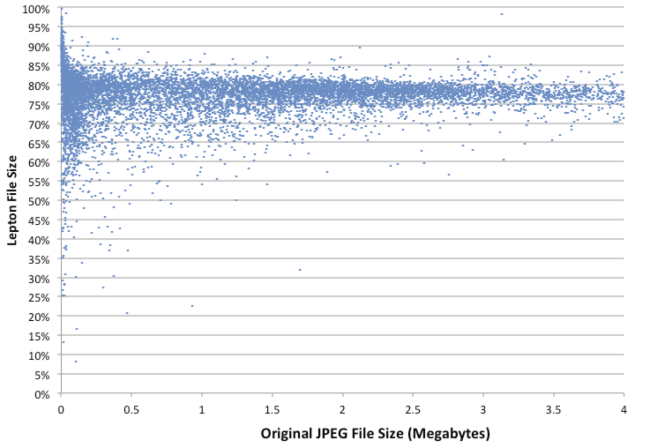

Dropbox stores billions of images on its servers, most of them JPEGs, so reducing the size of pictures can have a big impact on its storage requirements. The company therefore developed Lepton image compression, which – on average – achieved 22% lossless compression on the images stored in its cloud. Compression and decompression speed is also important, since files are compressed when uploaded and decompressed on the fly when downloaded, so that the complete process is transparent to end users, who only see JPEG photos. The company claims 5MB/s compression and 15MB/s decompression, again on average. The good news is that the company released the Lepton implementation on GitHub, so in theory it could also be used to increase the capacity of a NAS that contains lots of pictures. So I’ve given it a try in a terminal window in Ubuntu 14.04, but it […]

Brotli Compression Algorithm Combines High Compression Ratio, and Fast Decompression

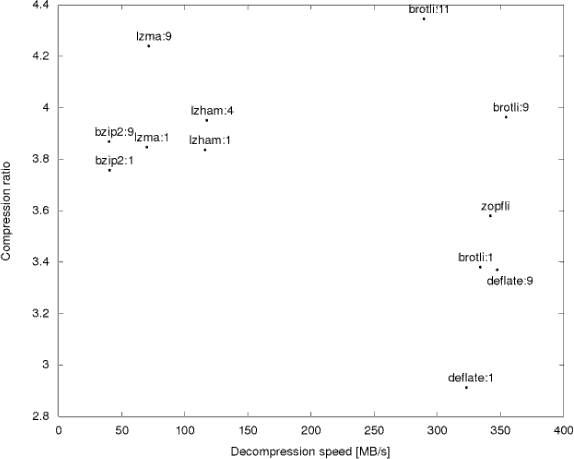

After Zopfli, Google has now announced and released Brotli, a new compression algorithm to make the web faster, with a compression ratio similar to LZMA but much faster decompression, making it ideal for low-power mobile devices. Contrary to Zopfli, which is deflate compatible, Brotli is a completely new format, and combines “2nd order context modeling, re-use of entropy codes, larger memory window of past data and joint distribution codes” to achieve higher compression ratios. Google published some benchmark results comparing Brotli to other common algorithms. Since the company aims to make the web faster, the target is to decrease both download time (high compression ratio) and rendering time (fast decompression speed), and Brotli with quality set to 11 is much better than its competitors once both parameters are taken into account. As you’d expect, the source code can also be pulled from GitHub. So I gave it a […]

Linux 3.9 Release

Linus Torvalds has announced the release of Linux Kernel 3.9: So the last week was much quieter than the preceding ones, which makes me suspect that one reason -rc7 was bigger than I liked was that people were gaming the system and had timed some of their pull requests for just before the release, explaining why -rc7 was big enough that I didn’t actually want to do a final release last week. Please don’t do that. Anyway. Whatever the reason, this week has been very quiet, which makes me much more comfortable doing the final 3.9 release, so I guess the last -rc8 ended up working. Because not only aren’t there very many commits here, even the ones that made it really are tiny and pretty obscure and not very interesting. Also, this obviously means that the merge window is open. I won’t be merging anything today, but if […]

Zopfli Library Improves Zlib Compression by 3 to 8%

Google developers have released a new compression library called Zopfli. This library, written in C, is compatible with zlib, yet provides better compression, with output 3 to 8% smaller according to Google. It can be used on servers to save bandwidth and deliver web pages faster. Since it’s fully compatible with zlib, web browsers do not need to be changed. The only drawback is that it’s several orders of magnitude slower than zlib, so it’s better suited to static content that is compressed once and sent over the Internet many times, and it may not be a good choice for dynamic content. The source code is available at https://code.google.com/p/zopfli/, so let’s try it. Get the code and build zopfli:

```
git clone https://code.google.com/p/zopfli/
cd zopfli
make
```

Different levels of compression are available:

```
./zopfli -h
Usage: zopfli [OPTION]... FILE
  -h          gives this help
  -c          write the result on standard output, instead of disk filename + '.gz'
  -v          verbose mode
  --gzip      output to gzip format (default)
  --deflate   output to deflate format instead of gzip
  --zlib      output to zlib format instead of gzip
  --i5        less compression, but faster
  --i10       less compression, but faster
  --i15       default compression, 15 iterations
  --i25       more compression, but slower
  --i50       more compression, but slower
  --i100      more compression, but slower
  --i250      more compression, but slower
  --i500      more compression, but slower
  --i1000     more compression, but slower
```

For testing purposes, I’ve just saved this blog as one HTML file (test.html – 67275 bytes) […]
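A size comparison of this kind could be sketched along the following lines. This is only an illustration, not the exact commands used in the test: the `gzip_size` helper is a made-up name, the input defaults to a local `test.html` that is assumed to exist, and zopfli is only invoked if a `zopfli` binary happens to be on the PATH, using the `-c` option from the help output above.

```shell
# Input file to compress; defaults to test.html (assumed to exist locally).
input=${1:-test.html}

# Print the size in bytes of the gzip output for file $1 at level $2 (1-9).
gzip_size() {
    gzip -c "-$2" "$1" | wc -c | tr -d ' '
}

if [ -f "$input" ]; then
    echo "original: $(wc -c < "$input" | tr -d ' ') bytes"
    echo "gzip -9:  $(gzip_size "$input" 9) bytes"
    # zopfli -c writes the compressed result to standard output.
    if command -v zopfli >/dev/null 2>&1; then
        echo "zopfli:   $(zopfli -c "$input" | wc -c | tr -d ' ') bytes"
    fi
fi
```

Piping the compressed stream into `wc -c` avoids leaving `.gz` files on disk, so the same input can be measured repeatedly with different settings.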