Google has been working one several front to make data and images smaller, hence faster to load from the Internet, with project such as Zopfli algorithm producing smalled PNG & gzip files, or WebP image compression algorithm that provides better lossless compression compare to PNG, and better lossy compression compared to JPEG, but requires updated support from clients such as web browsers. Google has now released Guetzli algorithm that improve on the latter, as it can create JPEG files that are 20 to 35% smaller compared to libjpeg with similar quality, and still compatible with the JPEG format.

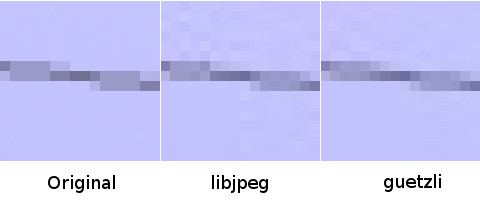

The image above shows a close up on a phone line with the original picture, the JPEG picture compressed with libjpeg with the artifacts around the line, and a smaller JPEG picture compressed with Guetzli with less artifacts.

The image above shows a close up on a phone line with the original picture, the JPEG picture compressed with libjpeg with the artifacts around the line, and a smaller JPEG picture compressed with Guetzli with less artifacts.

You can find out more about the algorithm in the paper entitled “Guetzli: Perceptually Guided JPEG Encoder“, or read the abstract below:

Guetzli is a new JPEG encoder that aims to produce visually indistinguishable images at a lower bit-rate than other common JPEG encoders. It optimizes both the JPEG global quantization tables and the DCT coefficient values in each JPEG block using a closed-loop optimizer. Guetzli uses Butteraugli, our perceptual distance metric, as the source of feedback in its optimization process. We reach a 29-45% reduction in data size for a given perceptual distance, according to Butteraugli, in comparison to other compressors we tried. Guetzli’s computation is currently extremely slow, which limits its applicability to compressing static content and serving as a proof- of-concept that we can achieve significant reductions in size by combining advanced psychovisual models with lossy compression techniques.

The compression is quite slower than with libjpeg or libjpeg-turbo, but considering that on the Internet a file is usually compressed once, and decompressed many times by visitors, this does not matter so much. Another limitation is that it does not support progressive JPEG encoding.

You can try Guetzli by yourserlf as the code was released on github. It did the following to build the tool on Ubuntu 16.04:

|

1 2 3 4 |

sudo apt install libpng-dev libgflags-dev git build-essential git clone https://github.com/google/guetzli/ cd guetzli make |

You’ll the executable in bin/release directory, and you can run it to list all options:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 |

./bin/Release/guetzli guetzli: Guetzli JPEG compressor. Usage: guetzli [flags] input_filename output_filename Flags from /build/gflags-YYnfS9/gflags-2.1.2/src/gflags.cc: -flagfile (load flags from file) type: string default: "" -fromenv (set flags from the environment [use 'export FLAGS_flag1=value']) type: string default: "" -tryfromenv (set flags from the environment if present) type: string default: "" -undefok (comma-separated list of flag names that it is okay to specify on the command line even if the program does not define a flag with that name. IMPORTANT: flags in this list that have arguments MUST use the flag=value format) type: string default: "" Flags from /build/gflags-YYnfS9/gflags-2.1.2/src/gflags_completions.cc: -tab_completion_columns (Number of columns to use in output for tab completion) type: int32 default: 80 -tab_completion_word (If non-empty, HandleCommandLineCompletions() will hijack the process and attempt to do bash-style command line flag completion on this value.) type: string default: "" Flags from /build/gflags-YYnfS9/gflags-2.1.2/src/gflags_reporting.cc: -help (show help on all flags [tip: all flags can have two dashes]) type: bool default: false -helpfull (show help on all flags -- same as -help) type: bool default: false -helpmatch (show help on modules whose name contains the specified substr) type: string default: "" -helpon (show help on the modules named by this flag value) type: string default: "" -helppackage (show help on all modules in the main package) type: bool default: false -helpshort (show help on only the main module for this program) type: bool default: false -helpxml (produce an xml version of help) type: bool default: false -version (show version and build info and exit) type: bool default: false Flags from guetzli/guetzli.cc: -quality (Visual quality to aim for, expressed as a JPEG quality value.) type: double default: 95 -verbose (Print a verbose trace of all attempts to standard output.) type: bool default: false |

Ideally you should have a raw or losslesly compressed image, but I tried a photo taken from my camera first:

|

1 2 3 |

./bin/Release/guetzli IMG_4354.JPG IMG_4354-Guetzli.JPG Invalid input JPEG file Guetzli processing failed |

But it reported my JPEG file was invalid, so I tried another file (1920×1080 PNG file):

|

1 2 3 4 5 6 |

time ./bin/Release/guetzli MINIX-Launcher3.png MINIX-Launcher3-guetzli.jpg real 2m58.594s user 2m55.840s sys 0m2.616s |

It’s a single threaded process, and it takes an awful lot of time (about 3 minutes on an AMD FX8350 processor), at least with the current implementation. You may want to run it with “verbose” option to make sure it’s not dead.

I repeated the test with convert using quality 95, as it is the default option in Guetzli:

|

1 2 3 4 5 |

time convert -quality 95 MINIX-Launcher3.png MINIX-Launcher3-convert.jpg real 0m0.128s user 0m0.112s sys 0m0.012s |

The file compressed with Guetzli is indeed about 15% smaller, and should have similar quality:

|

1 2 3 4 |

ls -lh MINIX-Launcher3* -rw-rw-r-- 1 jaufranc jaufranc 534K Mar 17 11:45 MINIX-Launcher3-convert.jpg -rw-rw-r-- 1 jaufranc jaufranc 453K Mar 17 11:41 MINIX-Launcher3-Guetzli.jpg -rw-rw-r-- 1 jaufranc jaufranc 1.5M Mar 17 11:35 MINIX-Launcher3.png |

It’s just currently about 1,400 times slower on my machine.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress. We also use affiliate links in articles to earn commissions if you make a purchase after clicking on those links.

Insane, that amount of development work and wasted CPU cycles for a problem already solved long ago: Wavelet compression (JPEG2000) is the way better alternative to solve this problem but due to ignorance was never widely adopted (AFAIK only OS X had full internal support for it from the beginning including Safari browser support for image/jp2 MIME type).

https://petapixel.com/2015/09/12/jpeg-2000-the-better-alternative-to-jpeg-that-never-made-it-big/

Anyway: time to test Guetzli for use with steganography/Digimarc (for a few customers we generated optimized ‘image pipelines’ and sometimes invisible watermarks have to be embedded that survive further compression/save actions)

I’ve run both files through jpegoptim just to make sure no further optimization was possible:

Any comparison with mozjpeg? https://github.com/mozilla/mozjpeg

@cnxsoft

Another ‘problem’ when trying to reduce file size for web optimizations is ‘image metadata’. The relevant 2 JPEG file formats (JFIF and EXIF) allow metadata to be embedded. Some is technical (eg. resolution metadata: is it ‘lines per centimeter’ or ‘per inch’) but some is just to describe contents. In EXIF formats there can be embedded a lot (especially since Adobe started to promote and use XMP throughout their whole Creative Suite) and now you end up with some technical metadata, some contents described and huge amounts of junk (when the target is ‘web’).

This is the same small picture:

The 1st version is with metadata stripped (I use the appropriate save options and in image pipelines usually ‘jhead -de -di -dx’), the 2nd contains all the junk including the file’s work history:

This metadata is sometimes great: we use it in inhouse production to do a lot of fancy automated stuff since image metadata gets also embedded into layout documents (InDesign) and later in exported PDF (where you then track image usage and post-process the PDF). But if you have such a JPEG as above which is 15.4KB in size while the real image data is only 6.3KB then obviously you save a lot more space in less time by throwing away 9KB metadata instead of optimizing the picture representation in the data part 🙂

Recommendation to deal with all sorts of embedded metadata: Phil Harvey’s exiftool (it’s the best, it’s free, runs everywhere and is an example for advanced Perl wizardry)

@–

Just a quick test. Built from github source, and then:

Results:

The file compressed with cjpeg is much smaller. Are the quality settings equivalent to libjpeg?

@cnxsoft

Due to the various ‘optimization’ processes involved with JPEG ‘quality’ switch settings can’t be compared (same value, different optimizations, sometimes also different interpretation of levels) so you always have to compare later. With ImageMagick/GraphicsMagick it’s not ‘[gm] compare’ but ‘[gm] composite -compose difference’ instead.

So if you spend much more time optimising compression you get a small improvement in size. What a time to be alive!

@JM

It’s worse than that: using JPEG in 2017 is insanely stupid since for the task of ‘compress images lossy’ better alternatives exist since decades: wavelet based algorithms beat DCT — discrete cosine transform — easily.

The whole reason smart people waste their time with improving JPEG is the fact that innovations or better alternatives don’t get adopted widely. We still use JPEG today since we used it already 20 years ago. That’s the only and pretty lame reason 🙁

I’m not sure whether wavelets are universally considered superior to DCT. From my understanding, most modern image codecs (eg WebP, BPG etc) use DCT based transforms.

Here’s an article by someone more knowledgeable than myself, if interested: http://archive.is/K7jKA

Quote from above article:

> JPEG2000 is a classic example of wavelet failure: despite having more advanced entropy coding, being designed much later than JPEG, being much more computationally intensive, and having much better PSNR, comparisons have consistently shown it to be visually worse than JPEG at sane filesizes.

@–

Just visit #18 in the comments section there and check the link. The visual examples there and the link to Mozilla Bug 36351 (‘Add JPEG2000 support’, opened 17 years ago and proudly ignored by Mozilla since then) should be obvious regarding both Wavelet compression advantages for still images and the source of the problem: ignorance.

@tkaiser

The fun thing is that I worked on Wavelet video encoding back in the year 1999 with a PhD student and professor at the University of Bath. I even get my name listed in an IEEE paper in 2000 @ http://ieeexplore.ieee.org/document/899326/

I don’t think it went anywhere after that.

@tkaiser

IMO the main problem for all JPEG “alternatives” is patents.

BTW you may also have a look at http://bellard.org/bpg/ (which is great, but it has the same issue : HEVC is patent-encumbered…)

I think the patent kerfuffle around JPEG2000 scared a lot of adoption at the time (and possibly even now), so am not surprised that it’s hardly used.

Whilst wavelets are particularly problematic for video codecs, I don’t believe that they are able to match state of the art DCT based coders for still images (again, modern image codecs are usually based off DCT, kinda says something). Issues like blocking from block based codecs can be mitigated with deblocking filters (something JPEG doesn’t have), and this has been fairly well studied.

We developed an online tool that automatically does the job for free, based on Guetzli algorithm. We’d be glad it you give it a try… thanks! 🙂

Here it is: http://www.ishrinker.com