Debian 11 “Bullseye” was released in August 2021, and I was expecting Raspberry Pi OS to be upgraded to the new version soon, especially since last time around, in 2019, Raspbian Buster was released even before the official Debian 10 “Buster” release, although that was due to the Raspberry Pi 4 launch.

This time around it took longer, but the good news is that Raspberry Pi OS has just been upgraded to Debian 11, meaning it benefits from new features such as driverless printing, an in-kernel exFAT module, “yescrypt” password hashing, and more recent package versions.
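One way to see the yescrypt change for yourself is to look at the prefix of an account's hash in /etc/shadow: yescrypt hashes start with `$y$`, while the SHA-512 crypt hashes Debian used by default before start with `$6$`. The helper below is a hypothetical sketch (`hash_scheme` is our name, not a system tool) that classifies a single shadow line. Existing hashes are not rewritten until the password is changed, so a system upgraded in place may still show `$6$`.

```bash
# Hypothetical helper: classify the hash scheme of one /etc/shadow line.
hash_scheme() {
  local hash
  hash=$(printf '%s\n' "$1" | cut -d: -f2)   # field 2 of a shadow line holds the hash
  case "$hash" in
    '$y$'*)       echo yescrypt ;;
    '$6$'*)       echo sha512crypt ;;
    '$5$'*)       echo sha256crypt ;;
    '$1$'*)       echo md5crypt ;;
    '!'*|'*'*|'') echo "locked or empty" ;;
    *)            echo unknown ;;
  esac
}
```

Usage: `hash_scheme "$(sudo grep '^pi:' /etc/shadow)"`.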

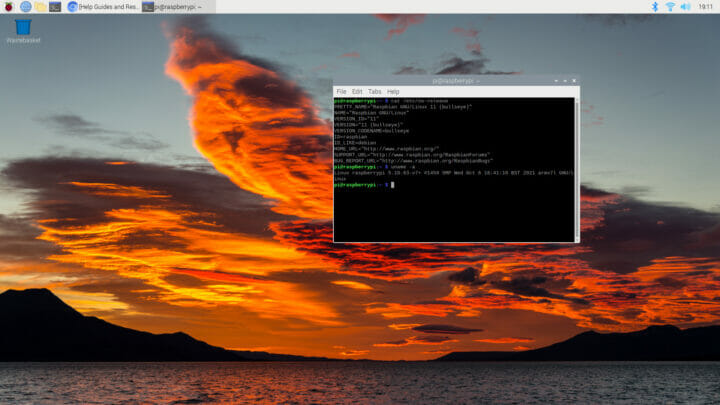

The Raspberry Pi Foundation goes into more detail about what changed in the new Raspberry Pi OS release: the GTK+ 3 user interface toolkit, the Mutter window manager replacing Openbox on boards with 2GB RAM or more, new KMS video and camera drivers, and more. Raspberry Pi OS “Bullseye” can be downloaded from the usual place, and I installed it on my Raspberry Pi Zero 2 W.

```
pi@raspberrypi:~ $ cat /etc/issue
Raspbian GNU/Linux 11 \n \l

pi@raspberrypi:~ $ uname -a
Linux raspberrypi 5.10.63-v7+ #1459 SMP Wed Oct 6 16:41:10 BST 2021 armv7l GNU/Linux
pi@raspberrypi:~ $ cat /proc/cpuinfo
processor : 0
model name : ARMv7 Processor rev 4 (v7l)
BogoMIPS : 64.00
Features : half thumb fastmult vfp edsp neon vfpv3 tls vfpv4 idiva idivt vfpd32 lpae evtstrm crc32
CPU implementer : 0x41
CPU architecture: 7
CPU variant : 0x0
CPU part : 0xd03
CPU revision : 4
...
Hardware : BCM2835
Revision : 902120
Serial : 00000000e51cb671
Model : Raspberry Pi Zero 2 Rev 1.0
```

The Linux kernel is 5.10.63, bumped from Linux 5.10.17 in the “Buster” image.
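To compare images like this without scrolling through /proc/cpuinfo, the release codename and kernel version can be pulled from /etc/os-release and `uname -r`. A minimal sketch, assuming the image's /etc/os-release carries a `VERSION_CODENAME` line as Debian-based releases normally do; `release_summary` is a hypothetical helper name, and the file argument exists only so it can be tested.

```bash
# Hypothetical helper: print "<codename> <kernel release>" for the running system.
release_summary() {
  local osr="${1:-/etc/os-release}" codename
  # VERSION_CODENAME=bullseye -> bullseye (quotes stripped if present)
  codename=$(sed -n 's/^VERSION_CODENAME=//p' "$osr" | tr -d '"')
  printf '%s %s\n' "${codename:-unknown}" "$(uname -r)"
}
```

On the board above, this would be expected to print the codename followed by `5.10.63-v7+`.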

```
$ inxi -Fc0
System:
  Host: raspberrypi Kernel: 5.10.63-v7+ armv7l bits: 32 Console: tty 1
  Distro: Raspbian GNU/Linux 11 (bullseye)
Machine:
  Type: ARM Device System: Raspberry Pi Zero 2 Rev 1.0 details: BCM2835
  rev: 902120 serial: 00000000e51cb671
CPU:
  Info: Quad Core model: ARMv7 v7l variant: cortex-a53 bits: 32 type: MCP
  Speed: 1000 MHz min/max: 600/1000 MHz Core speeds (MHz): 1: 1000 2: 1000
  3: 1000 4: 1000
Graphics:
  Device-1: bcm2835-hdmi driver: vc4_hdmi v: N/A
  Device-2: bcm2835-vc4 driver: vc4_drm v: N/A
  Display: server: X.org 1.20.11 driver: loaded: modesetting tty: 80x24
  Message: Advanced graphics data unavailable in console. Try -G --display
Audio:
  Device-1: bcm2835-hdmi driver: vc4_hdmi
  Device-2: bcm2835-audio driver: bcm2835_audio
  Sound Server: ALSA v: k5.10.63-v7+
Network:
  Message: No ARM data found for this feature.
  IF-ID-1: wlan0 state: up mac: e4:5f:01:10:88:f4
Drives:
  Local Storage: total: 14.84 GiB used: 7.32 GiB (49.3%)
  ID-1: /dev/mmcblk0 model: SC16G size: 14.84 GiB
Partition:
  ID-1: / size: 14.29 GiB used: 7.27 GiB (50.9%) fs: ext4 dev: /dev/mmcblk0p2
  ID-2: /boot size: 252 MiB used: 48.1 MiB (19.1%) fs: vfat dev: /dev/mmcblk0p1
Swap:
  ID-1: swap-1 type: file size: 100 MiB used: 99.7 MiB (99.7%) file: /var/swap
Sensors:
  System Temperatures: cpu: 47.2 C mobo: N/A
  Fan Speeds (RPM): N/A
Info:
  Processes: 165 Uptime: 25m Memory: 491.6 MiB used: 356.6 MiB (72.5%)
  gpu: 64 MiB Init: systemd runlevel: 5 Shell: Bash inxi: 3.3.01
```

Some changes appear in the Graphics part, with “Buster” showing:

```
Graphics:
  Device-1: bcm2708-fb driver: bcm2708_fb v: kernel
  Device-2: bcm2835-hdmi driver: N/A
  Display: tty server: X.org 1.20.4 driver: fbturbo tty: 80x24
  Message: Advanced graphics data unavailable in console. Try -G --display
```

and “Bullseye”:

```
Graphics:
  Device-1: bcm2835-hdmi driver: vc4_hdmi v: N/A
  Device-2: bcm2835-vc4 driver: vc4_drm v: N/A
  Display: server: X.org 1.20.11 driver: loaded: modesetting tty: 80x24
  Message: Advanced graphics data unavailable in console. Try -G --display
```

That should be because of the switch from the Raspberry Pi-specific video driver to a standard KMS video driver.
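Whether a board uses the KMS driver is decided by a `dtoverlay` line in the firmware config file, /boot/config.txt on this release. The sketch below assumes the overlay names `vc4-kms-v3d` (full KMS) and `vc4-fkms-v3d` (the older "fake" KMS); `graphics_mode` is a hypothetical helper, and its "legacy" label only means that neither overlay is enabled.

```bash
# Hypothetical helper: report which display driver mode config.txt enables.
graphics_mode() {
  local cfg="${1:-/boot/config.txt}"
  # Commented-out lines start with '#', so they never match these patterns
  if grep -Eq '^[[:space:]]*dtoverlay=vc4-kms-v3d' "$cfg"; then
    echo "full KMS (vc4-kms-v3d)"
  elif grep -Eq '^[[:space:]]*dtoverlay=vc4-fkms-v3d' "$cfg"; then
    echo "fake KMS (vc4-fkms-v3d)"
  else
    echo "legacy firmware framebuffer"
  fi
}
```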

There was no swap in the Debian 10 image, but 100MB of swap is enabled in the Debian 11 one. Close to 100% of it is in use, which may explain why windows felt a bit sluggish in the desktop environment. This should not be an issue on Raspberry Pi boards equipped with more memory.
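To check swap pressure on a board and, if needed, enlarge the swap file, a small sketch follows. It assumes the swap file is managed by dphys-swapfile with its size set by `CONF_SWAPSIZE` in /etc/dphys-swapfile, as on Raspberry Pi OS; `swap_usage` and `set_swap_size` are hypothetical helpers.

```bash
# Swap usage from a meminfo-style file (defaults to /proc/meminfo).
swap_usage() {
  awk '/^SwapTotal:/ { t = $2 }
       /^SwapFree:/  { f = $2 }
       END { if (t == 0) print "no swap"; else printf "%.1f%% of %.0f MiB used\n", (t - f) * 100 / t, t / 1024 }' \
    "${1:-/proc/meminfo}"
}

# Set CONF_SWAPSIZE (in MB) in a dphys-swapfile config, uncommenting it if needed.
set_swap_size() {
  sed -i "s/^#\?CONF_SWAPSIZE=.*/CONF_SWAPSIZE=$2/" "$1"
}
```

As root, a resize would then be `dphys-swapfile swapoff`, `set_swap_size /etc/dphys-swapfile 512`, `dphys-swapfile setup` and `dphys-swapfile swapon`. The 512 MB value is only an example, and a larger swap file on an SD card also means more writes to the card.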

I’ve also run the SBC bench benchmark from an SSH terminal to check for any potential regressions and/or improvements:

```
sudo /bin/bash ./sbc-bench.sh -c
sbc-bench v0.8.3

Installing needed tools. This may take some time...
```

It got stuck there seemingly forever, so I tried to access the desktop, without luck, and I was unable to open another SSH session or terminate sbc-bench.sh. I power cycled the board, tried again, and got exactly the same result.

So I would not recommend upgrading a production machine right now, or at least you’d better perform some testing first. Note that it’s not possible to upgrade Raspberry Pi OS Buster to Bullseye from the command line just yet, so this time the only solution is to flash the image to a microSD card.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


They still don’t support upgrading systems without nuking them and reinstalling. 🙁

I was actually thinking that I could just run apt dist-upgrade and get the new version. It’s not a problem for me, as I am only using it on my RPi Zero 2 W and I need it working right now (I also just set it up last week). I will postpone updating it until I see more about the new OS version.

If you want to try upgrading instead of nuking, here’s their guide: forums.raspberrypi.com/viewtopic.php?t=323279
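For reference, the core of such an in-place upgrade is switching the APT suite names and then running a full upgrade. The sketch below only covers the source-list edit; it assumes the default Raspberry Pi OS files /etc/apt/sources.list and /etc/apt/sources.list.d/raspi.list, and `switch_to_bullseye` is a hypothetical helper. The linked guide has further steps that are not reproduced here, so follow it rather than this sketch on a real system, and make a backup first.

```bash
# Sketch only: switch APT sources from buster to bullseye in the given files.
# Debian renamed its security suite from "buster/updates" to "bullseye-security",
# so that substitution must run before the generic buster -> bullseye one.
switch_to_bullseye() {
  local f
  for f in "$@"; do
    [ -f "$f" ] || continue   # skip files that do not exist
    sed -i -e 's#buster/updates#bullseye-security#g' -e 's/buster/bullseye/g' "$f"
  done
}
```

After running it as root on both files, the upgrade itself would be `sudo apt update && sudo apt full-upgrade`.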

Edited: Oh, tkaiser mentioned this already.

I found out that there is an article on Tom’s Hardware that does the same thing: an in-place update to Debian 11 on the RPi. The list of commands looks a little different from the one in the forum topic. I can live with the current installation for now; I don’t have enough time to fiddle with it, and I just finished setting it up.

Being able to retain system customizations is one of the advantages of the distros which do support in place major version upgrades. The other advantage which means more to me is to be able to upgrade a machine in place. I don’t want to go to where the machine is and swap out a uSD card. I want to log into it remotely and issue some commands.

They’re starting to stand out by not offering this ability when so many other distros do support it.

> I want to log into it remotely and issue some commands.

Just do it with their Debian fork or whatever userland runs on your RPi.

As long as you keep in mind that you’re always dealing with two OSes on these things, and how stuff needs to be organised on the FAT partition for ThreadX to work and for their kernel and ThreadX-related (firmware) packages to update flawlessly, you’re absolutely fine.

BTW: to have a look at Write Amplification when doing an upgrade from Buster to Bullseye, I simply tried it. Not with Raspbian but with Armbian, and with the OS on a SATA SSD so I could query the Total_LBAs_Written SMART attribute before and after.

On ext4 this resulted in ~2140 MB written at the flash layer, and with btrfs (with compress-lzo) even ~13150 MB. Package count: 476 before and 506 after.

A Raspbian minimal image ships with ~100 packages more.

According to tune2fs the rootfs shows: ‘Lifetime writes: 2554 MB’. This includes transferring the rootfs from SD card (~1.2GB), a small update of 34 packages in Buster and then the distro upgrade to Bullseye. So with this Samsung SSD controller we’re talking about Write Amplification of ~2.
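The arithmetic behind this is simple: Write Amplification is the amount written at the flash layer divided by what the filesystem asked to write. A minimal sketch, assuming the drive reports Total_LBAs_Written in 512-byte units (common, but check your drive's documentation) and that both inputs of `write_amp` use the same unit; both helper names are hypothetical.

```bash
# Convert two SMART Total_LBAs_Written readings into MB written in between.
# Assumes 512-byte units; some drives count differently.
lbas_to_mb() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.0f\n", (after - before) * 512 / 1000000 }'
}

# Write Amplification = data written to flash / data written by the filesystem.
# Both arguments must use the same unit (e.g. MB).
write_amp() {
  awk -v flash="$1" -v host="$2" 'BEGIN { printf "%.2f\n", flash / host }'
}
```

The readings come from `sudo smartctl -A /dev/sda` (Total_LBAs_Written) and `sudo tune2fs -l /dev/sda1` (Lifetime writes), taken before and after the upgrade; /dev/sda and /dev/sda1 are placeholders.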

At this point I realised that I had only wasted my time with this experiment, since the flash translation layer in an el cheapo SD card is most probably way more primitive, and Write Amplification there for the same task could easily be 10 times higher.

The problem remains: a fat OS like Debian, which ends up writing 1000+ MB at the filesystem layer, is not made for in-place version upgrades on crappy flash storage like SD cards.

You make a good point. But your basic premise was that Raspbian was intentionally designed to be upgraded as an image instead of on a per-package basis because the Foundation was concerned with flash life. You’ve proven that part, but what contradicts that is their failure to tune it to decrease all of the small incidental writes that OSs generate as they go about their business. I would assert that’s the primary mechanism by which Raspbian wears out cheap uSD cards.

Additionally, I’m curious how much WA you get from even an image write of a cheap uSD card. Since they lack TRIM, there’s no way to tell them to consider themselves blank before writing a new image. So, each sector written will still cause a reallocation, no? I know there are some SD utilities (at least under windows) which allow you to do sort of a secure erase, but I don’t assume that everyone does that every time they upgrade a system.

Thank you for doing all of the testing that you have done. I appreciate the effort you’ve put into this.

> your basic premise was that Raspbian was intentionally designed

Nope, Raspbian is a mess when it comes to SD cards. No log2ram but swap on SD card and default ext4 commit interval will result in SD cards wearing out way faster than necessary.
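Of these, the ext4 commit interval is the easiest to change: ext4 flushes its journal every 5 seconds by default, and a larger `commit=` value batches more writes together at the cost of losing up to that many seconds of data on a power cut. A sketch, assuming a hypothetical 600-second interval; `add_commit_option` is a hypothetical helper that prints a modified fstab for review instead of editing it in place.

```bash
# Print fstab with commit=<secs> appended to the root filesystem's mount options.
add_commit_option() {
  local fstab="${1:-/etc/fstab}" secs="${2:-600}"
  awk -v secs="$secs" '
    # Only the root entry, skipping comments and entries that already set commit=
    $1 !~ /^#/ && $2 == "/" && $4 !~ /commit=/ { $4 = $4 ",commit=" secs }
    { print }
  ' "$fstab"
}
```

Review the output of `add_commit_option /etc/fstab`, then copy it into place as root. On Raspberry Pi OS swap is handled by the dphys-swapfile service, which can be turned off with `sudo dphys-swapfile swapoff` and kept off with `sudo systemctl disable dphys-swapfile`.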

Just wanted to hint that you can do a distro upgrade on Raspberries (regardless of the userland) and that the very same process is bad for SD cards with such a fat OS like Debian or Ubuntu which involves way too much fs activity when upgrading.

> I know there are some SD utilities (at least under windows) which allow you to do sort of a secure erase

It’s block erase, and the SD Card Formatter tool can do this on macOS or Windows. For SBCs other than Raspberries that’s the only useful task this tool does (on Raspberries using NOOBS it also helps reformat larger SD cards from exFAT to FAT, since ‘good old’ VideoCore can’t deal with anything newer).

I replied to the wrong post before, sorry. There is a command called blkdiscard. I tried it on a card and…

Simple/dumb test. I ran blkdiscard on a Samsung Pro+ 32GB uSD card. It took a few seconds. I then performed the following full drive ‘dd’ operations:

- Read: 50.6MB/s
- Write: 84.8MB/s
- Read: 92.4MB/s
- Write: 84.8MB/s
- Read: 92.3MB/s

That seems pretty consistent and shows us that the command does do *something*. It did erase the card as I verified with a separate operation. I wanted to do a rewrite of the whole card to see if the overwrite would be as fast as the original write of the ‘blanked’ card. The write speeds were near identical. One took 377.495 seconds and the other 377.631. I also did a second read of the blanked card and saw the same read speed, so reading the blanked card didn’t make the card do anything visible.
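The comment doesn't give the exact commands, so here is one way to reproduce this kind of test, as a sketch: /dev/sdX is a placeholder, the dd options are an assumption, and `mb_per_s` is a hypothetical helper for turning a byte count and elapsed seconds into the decimal MB/s figures dd prints. The commented commands destroy all data on the card.

```bash
# Decimal MB/s (as dd reports it) from a byte count and elapsed seconds.
mb_per_s() {
  awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", bytes / secs / 1000000 }'
}

# Example sequence (DESTROYS ALL DATA on /dev/sdX, a placeholder):
#   sudo blockdev --getsize64 /dev/sdX                                     # card size in bytes
#   sudo blkdiscard /dev/sdX                                               # discard every block
#   sudo dd if=/dev/sdX of=/dev/null bs=4M iflag=direct status=progress   # full read
#   sudo dd if=/dev/zero of=/dev/sdX bs=4M oflag=direct status=progress   # full write
```

As a sanity check on the numbers above, 84.8 MB/s over 377.495 s corresponds to about 32 GB, consistent with the card's capacity.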

This tells us nothing about write amplification, though. I was hoping for a different rewrite speed, since one of the writes had to perform an erase on blocks as it wrote and the other didn’t. Possibly the firmware is smart enough to erase one block while programming another so it can hide the erase cost. It’s a high-end Samsung card, so that’s not impossible. I’ll try some other brands of cards.

Tried another card. This one is an Adata Premier uSD 32GB 100MB/s (AUSDH32GUICL10A1-RA1). These were supposedly identical to many other cards of the same type I had purchased, but they had a slightly different capacity and my initial simple read speed tests showed poor performance. I suspected that they had changed vendors or something, as the writing on the back of the card was different, but it was still MIK as I’d prefer. With the results of the previous test in mind, I picked one of them for this testing because *I had a hunch*.

Okay, tests:

- read: 30.4 MB/s
- write: 94.5 MB/s
- read: 95.3 MB/s ******

Now I blkdiscard it.

- read: 94.8 MB/s
- write: 30.5 MB/s
- read: 95.0 MB/s
- write: 30.4 MB/s
- read: 95.0 MB/s

So this one behaved differently from the Samsung. When *new*, the card appeared to behave like the blkdiscarded Samsung card: read speed improved greatly after it had been fully written. But blkdiscard didn’t seem to cause the read speed to regress. I did verify that blkdiscard erases the Adata, but it does not make the initial read speed drop like it does on the Samsung.

I guess blkdiscard doesn’t put the Adata card into the same kind of state as it does the Samsung.

I documented what to do when running Raspberry Pi OS and trying to extend the SD card’s lifespan: https://github.com/ThomasKaiser/Knowledge/blob/master/articles/Quick_Review_of_RPi_Zero_2_W.md#sd-card-endurance

Upgrading their Debian flavour is possible and it works the usual way. But they know their customers well enough to realize that the vast majority of them are overwhelmed by a 10-step sequence of copy&paste shell commands, and as such they officially state ‘not possible’.

Better think about who the users are: if you run Linux on a PC you’re most probably a Linux expert able to deal with minor or even major Linux issues (and there are tons of them). If you run a Desktop Linux on an RPi most probably you were just after cheap hardware?

And then there’s the ‘crappy storage’ dilemma as well: while in 2021 some SBC users might have heard of random IO vs. sequential IO and know they should look for A1-rated quality SD cards… the majority still have no clue.

Writing a new OS image to an SD card is only sequential IO with Write Amplification close to 1. Doing a distro upgrade on an SD card is pure random IO with Write Amplification magnitudes higher. On slow/crappy SD cards this process can take ages and there’s a good chance the card will die of this.
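A back-of-envelope comparison makes the point. An A1-rated card only has to guarantee 500 random 4 KiB write IOPS and 10 MB/s sustained sequential writes. The sketch below assumes every write of the upgrade is a 4 KiB random I/O, a simplification that ignores Write Amplification and mixed I/O sizes; both helper names are hypothetical.

```bash
# Seconds to write <mb> MB as 4 KiB random writes at <iops> I/O operations per second.
random_write_secs() {
  awk -v mb="$1" -v iops="$2" 'BEGIN { printf "%.0f\n", mb * 1000000 / 4096 / iops }'
}

# Seconds to write <mb> MB sequentially at <rate> MB/s.
seq_write_secs() {
  awk -v mb="$1" -v rate="$2" 'BEGIN { printf "%.0f\n", mb / rate }'
}
```

With those A1 minimums, 1000 MB takes about 100 s sequentially but roughly 488 s as 4 KiB random writes, and a card without the A1 rating may be much slower still.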

It looks like there’s a Linux tool called blkdiscard. I’m trying it on a card now.


So interesting. I am very interested in reading the News post in detail; I have only glanced at it so far. I have never used LXDE without Openbox before…

I had long been thinking the stop* in LXDE development would be a challenge for rPi. Anything with only 512MB memory needs ideally <100MB used once you get to desktop. So is this the start of a “forced fork” of the DE by rPi developers? (It is a lot of work …)

*Not total stop. Github still active…

> kernel is 5.10.63, bumped from Linux 5.10.17

If you recently did an ‘apt upgrade’ on Buster you were on 5.10.63 too. The Bullseye image also ships the same ThreadX version, and (integer) performance is more or less the same: ix.io/3EgS vs. ix.io/3EpV

One difference is the shipped GCC version (and I would assume all packages were built with that version): 8.3 vs. 10.2.

> I was unable to initiate another SSH terminal or terminate sbc-bench.sh

Your board locked up while compiling cpuminer most probably due to underpowering (overheating is close to impossible). On my Zero 2 W same performance numbers as with former Buster installation: ix.io/3Eq3
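Under-voltage can be confirmed on the board itself: `vcgencmd get_throttled` prints a bit mask such as `throttled=0x50005`, where bits 0 to 3 describe the current state (under-voltage, ARM frequency capped, throttled, soft temperature limit) and bits 16 to 19 record whether each has happened since boot. A sketch of a decoder; `decode_throttled` is a hypothetical helper.

```bash
# Decode the bit mask printed by `vcgencmd get_throttled`.
decode_throttled() {
  local raw="${1#throttled=}"   # accept "throttled=0x50005" or plain "0x50005"
  local val=$(( raw ))          # bash arithmetic understands the 0x prefix
  local -a names=(
    [0]="under-voltage now"
    [1]="ARM frequency capped now"
    [2]="throttled now"
    [3]="soft temperature limit now"
    [16]="under-voltage occurred"
    [17]="ARM frequency capping occurred"
    [18]="throttling occurred"
    [19]="soft temperature limit occurred"
  )
  local bit found=0
  for bit in 0 1 2 3 16 17 18 19; do
    if (( val & (1 << bit) )); then
      echo "${names[$bit]}"
      found=1
    fi
  done
  (( found )) || echo "no throttling or under-voltage recorded"
}
```

Usage: `decode_throttled "$(vcgencmd get_throttled)"`. A value of `0x50005` decodes to current under-voltage and throttling, plus both having occurred since boot.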

BTW: cpuminer doesn’t build with armhf userland anyway 🙂

It seems running the Bullseye image significantly increases temperatures and consumption, especially when overclocking/overvolting is at play: https://github.com/ThomasKaiser/Knowledge/blob/master/articles/Quick_Review_of_RPi_Zero_2_W.md#performance-and-consumption
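To watch this during a benchmark, the SoC temperature can be sampled from sysfs, which reports millidegrees Celsius, or from `vcgencmd measure_temp`. A minimal sketch with hypothetical helper names; the sysfs path is the usual one on Raspberry Pi OS but is an assumption for other boards.

```bash
# SoC temperature in degrees Celsius from a sysfs thermal file (millidegrees).
soc_temp() {
  awk '{ printf "%.1f\n", $1 / 1000 }' "${1:-/sys/class/thermal/thermal_zone0/temp}"
}

# Extract the number from `vcgencmd measure_temp` output, e.g. "temp=47.2'C".
parse_measure_temp() {
  printf '%s\n' "$1" | sed -n "s/^temp=\([0-9.]*\)'C\$/\1/p"
}
```

For example, `while sleep 5; do echo "$(date +%T) $(soc_temp) C"; done` logs a reading every five seconds.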

There is an upgrade description which deals with Buster -> Bullseye and covers some additional changes for the transition:

https://dietpi.com/blog/?p=811

Although it has some specialities for DietPi (a Debian based lightweight distribution) it may give some hints where to look at other distributions when upgrading.

Where does this obsession with net.ifnames=0 come from?

Mostly because our scripts currently count on the legacy interface names, and because as far as I could see, the simple identifying names “eth” and “wlan” are still preferred by most users over the more cryptic predictable enpNsM/wlpNsM-style ones. I haven’t heard of any DietPi system where the network interfaces really got different classic indices on every boot, hence the reason for “predictable” interface names seems to be a rare case, probably large network switches or server racks, not sure.
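On Raspberry Pi OS Bullseye that option goes on the kernel command line in /boot/cmdline.txt, which must stay a single line. A sketch of an idempotent way to add it; `add_kernel_param` is a hypothetical helper, not how DietPi's scripts actually do it.

```bash
# Append a parameter to a single-line kernel command line file, unless already present.
add_kernel_param() {
  local file="$1" param="$2" line word
  local -a words=()
  read -r -a words < "$file" || true        # split the one line into tokens
  for word in "${words[@]}"; do
    if [ "$word" = "$param" ]; then
      return 0                              # already there: leave the file untouched
    fi
  done
  line=$(head -n 1 "$file")
  line="${line%"${line##*[![:space:]]}"}"   # drop trailing whitespace
  printf '%s %s\n' "$line" "$param" > "$file"
}
```

Usage, as root: `add_kernel_param /boot/cmdline.txt net.ifnames=0`, then reboot.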

One of the next releases will have the network config script reworked, which will then support predictable interface names as well. But this doesn’t necessarily mean that we ship them as default.

> simple identifying names “eth” and “wlan” are still preferred by most users

IMO a system where end users have to deal with the device names of network interfaces is somewhat broken or at least not ‘user friendly’ at all 🙂

Apart from that, problems already appear with USB Ethernet adapters, not just in ‘large network switch or server racks’. At least as long as the network is managed with anachronisms…

You don’t need to deal with interface names, at least not when setting up a common Internet network connection via WiFi or Ethernet, or a WiFi access point with a shared Ethernet Internet connection. But like on any other non-GUI Linux distribution, for a more complex network setup you need to create/edit your own configs, and then of course one needs to deal with those. We plan to extend the abilities of our network setup script, as mentioned, but until then, for cases other than those mentioned, /etc/network/interfaces.d can be used for custom ifupdown configs, or any other network stack or GUI tool of choice (Webmin or whatever else) can be installed and used. We cannot and do not aim to provide everything for every use case with our scripts, which isn’t possible anyway, but they offer the selected set of features which we were able to implement until now. I know you are working on another project with some overlap, so you know what I am talking about :).

> IMO a system where end users have to deal with the device names of network interfaces is somewhat broken or at least not ‘user friendly’ at all

For me it’s the opposite. My activities consist of using network interfaces all the time. “ip” and “ethtool” are commands I can use a hundred times a day. The newer atrocious names are totally unusable and make me set the wrong interface very frequently. It’s very easy to remember “eth12”, but “en63s2p6f0y7” is not an interface name but a password. I was actually very happy when I found this setting that I could add to the boot loader of machines suffering from this stuff.

In addition, for production servers, the cryptic names even prevent one from writing portable configurations. Having a network application bind to eth0 is easy. Having it bind to en63s2p6f0y7 on one machine and en36s1 on another one is a big problem. Usually admins face this when they buy new servers with a slightly different motherboard where the name doesn’t match anymore.

I can’t even imagine how the absurd names are called “predictable”, because for me the only predictable ones are the sequential ones. The other ones force you to type “ip -br a” first to get a listing of your interface names to know what to work with. That’s a real pain in network environments!

I don’t think you qualify for an ‘end user’ role 😉

And even for your type of work (administrator, system integrator?) at least I would prefer to have predictable names where after HW changes device names disappear and new ones appear compared to a ‘silent renaming’ of this eth stuff.

Sounds like fun when after some minor HW changes the NICs come up in different order and now firewall rules for one segment are applied to another and vice versa since the same eth names now are associated with other NICs 🙂

> I don’t think you qualify for an ‘end user’ role

Well, I have yet to meet a single person who finds them useful to anything. For pure end-users, this brings nothing at all, and for admins it’s a nightmare.

> at least I would prefer to have predictable names

Please, these are *not* “predictable”. “predictable” means “someone remote can guess the name prior to connecting to the machine”. “eth0” is predictable. These are “stable names” at best.

> where after HW changes device names disappear and new ones appear compared to a ‘silent renaming’ of this eth stuff

That’s not that often true, because by the time a NIC dies and you have to replace it, you get a slightly different model that gets completely different names. With regular names, if you replace a single-port NIC with a dual-port one (classical case with 10G NICs for example), and the old one was called “eth1”, you can be sure that one port will be “eth1” and the other port “eth2”. With the funny naming the ports will completely change with an “f0” or “f1” being appended as the NIC exposes two functions.

This mechanism was built by people who anticipated a problem they have never faced themselves, and they tried to address it in a way that absolutely does not match reality in the field, and in practice requires modifying configs everywhere on the FS or even in centralized repos to accommodate an almost transparent change.

And I’ve had the problem you mention happen with firewalls in the past. But it was extremely rare. Nowadays it’s systematic 🙁

Also it’s fun to see that someone who just moves a NIC by one slot to arrange cables at the back gets a different config afterwards 🙁

> For pure end-users, this brings nothing at all

My point above was that end users should never need to know NIC device names anyway (something that can be achieved by using network-manager on Linux for example).

> by the time a NIC dies and you have to replace it, you get a slightly different model that gets completely different names.

As long as you put the new one in the same PCIe slot why should the name change?

> one port will be “eth1” and the other port “eth2”

Yeah, and the former eth2 now being eth3 or something else since order of brought up interfaces changes due to different drivers now…

> As long as you put the new one in the same PCIe slot why should the name change?

Just because the new one comes with a slightly different chip providing two functions instead of one, or because the new one is located behind a transparent PCIe bridge that eases production of NIC variants. The flawed assumption behind this concept is that you will replace one NIC with *exactly* the same one. But that’s not the reality in the field. NICs rarely die, but they have to be upgraded for driver reasons, to get better optimizations, or higher bandwidth (e.g. upgrade 10G to 25G). And now the name even depends on the driver :-/

> > one port will be “eth1” and the other port “eth2”

> Yeah, and the former eth2 now being eth3 or something else since order of brought up interfaces changes due to different drivers now…

Sure but at least you can anticipate what changes and how to reorder your ports because they all continue to exist. Many times I’ve unplugged and replugged ports in firewalls or load balancers without trouble. With the new scheme you have to revisit your config and run sed on them… or get into trouble when the config is centralized and the names change depending on the machines. So you quickly end up fixing the trouble with the boot option!

We’re really getting off-topic here especially below a blog post dealing with an OS for an SBC where the old naming scheme definitely doesn’t work at all (think of multiple USB Ethernet or wireless dongles).

Anyway: the ‘boot option’ is not a fix but a fight against a more modern and flexible system. If now something called udev tries to create predictable interface names and your ‘style’ of configuration depends on the old naming scheme… this is just asking for troubles.

If you want those names to be really predictable in a way you control it’s always up to you to define the relevant rules (e.g. based on MAC addresses) and drop them into /etc/udev/rules.d/ on the target machine.

If you don’t know the new NIC’s MAC addresses yet or want to know the other criteria available to base rules on then it’s time to run udevadm info -a -p /sys/class/net/enX (maybe in another PC prior to final deployment of the card)
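As a sketch of that approach, the helper below prints one rule per interface found in /sys/class/net, matching on the MAC address. `gen_net_rules`, the `lan` prefix and the rules file name in the usage note are assumptions. Pick names outside the kernel's own eth*/wlan* namespace, since reusing them can race with the kernel's names at boot, and review the output before installing it (bridges and other virtual interfaces have MAC addresses too).

```bash
# Hypothetical helper: print one udev rule per NIC, pinning a name to its MAC address.
# The sysfs root is a parameter only so the function can be tested against a fake tree.
gen_net_rules() {
  local sysfs="${1:-/sys/class/net}" prefix="${2:-lan}" dev name mac i=0
  for dev in "$sysfs"/*; do
    name=${dev##*/}
    if [ "$name" = lo ] || [ ! -r "$dev/address" ]; then
      continue                              # skip loopback and entries without a MAC
    fi
    mac=$(cat "$dev/address")
    printf 'SUBSYSTEM=="net", ACTION=="add", ATTR{address}=="%s", NAME="%s%d"\n' "$mac" "$prefix" "$i"
    i=$((i + 1))
  done
}
```

Usage: `gen_net_rules | sudo tee /etc/udev/rules.d/70-net-names.rules`, then reboot.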

> If you want those names to be really predictable in a way you control it’s always up to you to define the relevant rules

Yes, as usual with so called “modern” distros, it’s up to the users to write configs to undo the stuff that gets in their way and that breaks by default simple things that normally work out of the box 🙁

But I agree we’re getting off-topic. It’s just that you were the one asking where this “obsession” comes from and I gave you my point of view based on my painful experience of this misfeature.

I was asking a guy working on a distro targeted at end users why they continue to still use a broken scheme (just try it out: use 2 USB Ethernet dongles, boot, check names, shutdown, change USB ports, boot again et voilà).

The answer: outdated scripts that fail to cope with OS reality and user expectations. The latter I do understand since the searchable web is a huge pile of crap full of outdated stuff that gets copy&pasted over and over again by another blog post, gist or whatever. Linux users are trained to look at ethX and wlanX and as such they create support efforts if you switch to something else.

For your use case the amount of time this useless discussion takes is probably higher than accepting reality and simply switching from ‘random names that most of the times work as expected’ (net.ifnames=0) to really predictable names by simply using MAC addresses.