The NVIDIA Tegra X1 octa-core Arm processor with a 256-core Maxwell GPU was introduced in 2015. The processor powers the popular NVIDIA Shield Android TV box and is found in the Jetson TX1 development board, which still costs $500 and is approaching end-of-life.

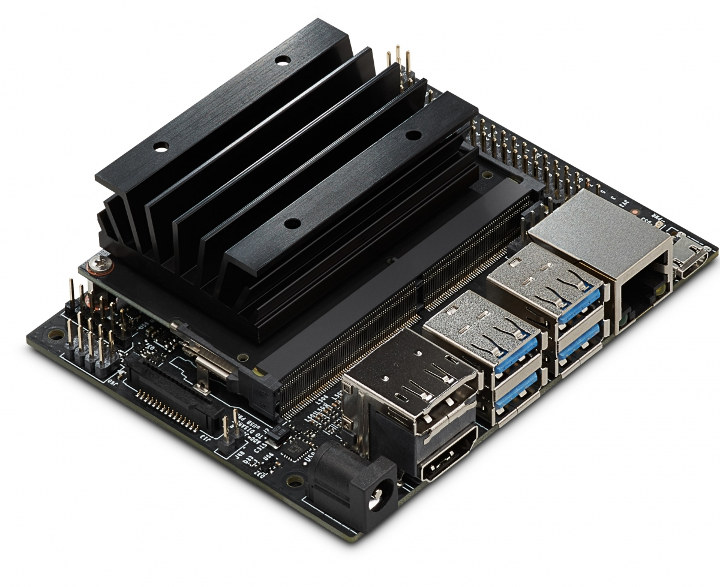

NVIDIA has now introduced a much cheaper board: the Jetson Nano Developer Kit, offered for just $99. It’s not exactly powered by Tegra X1, however, but by what appears to be a cost-down version of the processor with four Arm Cortex-A57 cores clocked at 1.43 GHz and a 128-core Maxwell GPU.

Jetson Nano developer kit specifications:

- Jetson Nano CPU Module

- 128-core Maxwell GPU

- Quad-core Arm A57 processor @ 1.43 GHz

- System Memory – 4GB 64-bit LPDDR4 @ 25.6 GB/s

- Storage – microSD card slot (devkit) or 16GB eMMC flash (production)

- Video Encode – 4K @ 30 | 4x 1080p @ 30 | 9x 720p @ 30 (H.264/H.265)

- Video Decode – 4K @ 60 | 2x 4K @ 30 | 8x 1080p @ 30 | 18x 720p @ 30 (H.264/H.265)

- Dimensions – 70 x 45 mm

- Baseboard

- 260-pin SO-DIMM connector for Jetson Nano module.

- Video Output – HDMI 2.0 and eDP 1.4 (video only)

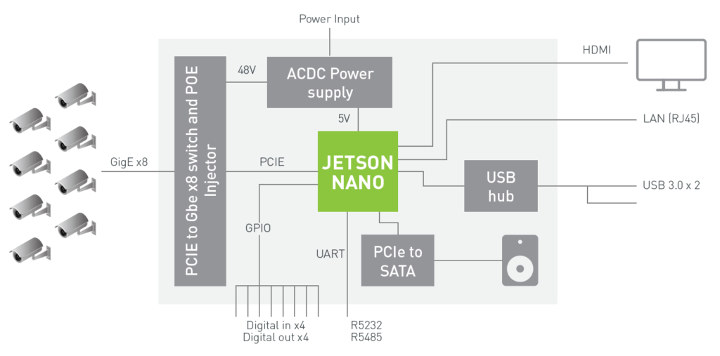

- Connectivity – Gigabit Ethernet (RJ45) + 4-pin PoE header

- USB – 4x USB 3.0 ports, 1x USB 2.0 Micro-B port for power or device mode

- Camera I/F – 1x MIPI CSI-2 DPHY lanes compatible with Leopard Imaging LI-IMX219-MIPI-FF-NANO camera module and Raspberry Pi Camera Module V2

- Expansion

- M.2 Key E socket (PCIe x1, USB 2.0, UART, I2S, and I2C) for wireless networking cards

- 40-pin expansion header with GPIO, I2C, I2S, SPI, UART signals

- 8-pin button header with system power, reset, and force recovery related signals

- Misc – Power LED, 4-pin fan header

- Power Supply – 5V/4A via power barrel or 5V/2A via micro USB port; optional PoE support

- Dimensions – 100 x 80 x 29 mm
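One way to read the video encode and decode lines is in terms of total pixel throughput. The sketch below assumes “4K” means UHD 3840x2160 (the spec list doesn’t say) and simply multiplies streams × width × height × frame rate for each listed configuration.

```python
# Compare the pixel throughput of the encode/decode configurations in the spec list.
# Assumption: "4K" means UHD 3840x2160; 1080p is 1920x1080; 720p is 1280x720.
RESOLUTIONS = {"4K": (3840, 2160), "1080p": (1920, 1080), "720p": (1280, 720)}

# (number of streams, resolution, frames per second)
ENCODE = [(1, "4K", 30), (4, "1080p", 30), (9, "720p", 30)]
DECODE = [(1, "4K", 60), (2, "4K", 30), (8, "1080p", 30), (18, "720p", 30)]


def pixel_rate(streams: int, resolution: str, fps: int) -> int:
    """Total pixels per second for `streams` streams at the given resolution and frame rate."""
    width, height = RESOLUTIONS[resolution]
    return streams * width * height * fps


if __name__ == "__main__":
    for label, configs in (("encode", ENCODE), ("decode", DECODE)):
        for streams, resolution, fps in configs:
            rate = pixel_rate(streams, resolution, fps)
            print(f"{label}: {streams}x {resolution} @ {fps} fps -> {rate / 1e6:.1f} Mpixel/s")
```

Every encode row works out to about 248.8 Mpixel/s and every decode row to about 497.7 Mpixel/s, which suggests the listed modes are different ways of slicing the same fixed pixel budget rather than independent limits.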

Jetson Nano runs full desktop Ubuntu out of the box and is supported by JetPack 4.2, together with Jetson AGX Xavier and Jetson TX2. You’ll find hardware and software documentation on the developer page, and some AI benchmarks as well as other details in the blog post announcing the launch of the kit and module.

NVIDIA explains that its new low-cost “AI computer delivers 472 GFLOPS of compute performance for running modern AI workloads and is highly power-efficient, consuming as little as 5 watts”.

While the Jetson Nano module found in the development kit only supports microSD card storage, NVIDIA will also offer a production-ready Jetson Nano module with 16GB eMMC flash, which will start selling for $129 in June.

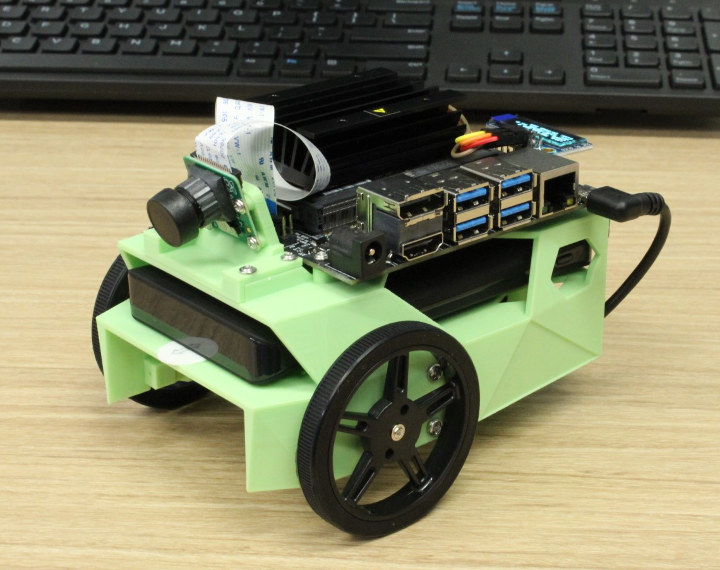

NVIDIA Jetson Nano targets AI projects such as mobile robots and drones, digital assistants, automated appliances, and more. To make it even easier to get started with robotics, NVIDIA also designed JetBot, an educational open-source AI robotics platform. All resources will be available in a dedicated GitHub repo by the end of this month.

NVIDIA Jetson Nano developer kit is up for pre-order on Seeed Studio, Arrow, and other websites for $99, and shipping is currently scheduled for April 12, 2019. JetBot will be sold for $249 including the Jetson Nano developer kit.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


TX1 also powers the Nintendo Switch. BTW, the first paragraph says CA53, but the detailed specs further down say CA57.

Also, the NVIDIA website lists “1 x1/2/4 PCIe” for the compute module, not “PCIe x1”. The M.2 Key E socket could be restricted to that, but I can’t imagine why NVIDIA would do that.

The kit is limited to x1, the module is not.

Interesting pricing. Now if Nvidia would just sell the chip on its own as well…

You’re right. I missed the blog entry which has a more detailed listing. Same, still a nice piece of kit though.

Yes, but that would be a 4x A57 + 4x A53 + 256 CUDA-core GPU configuration; here it’s 4x A57 + 128 CUDA cores.

Does anyone know how much faster the A57 is realistically vs. a decent A53?

I’m really interested in getting a new board now, and it’s either this Jetson Nano or the Odroid N2 plus the Google Edge TPU USB board. The NVIDIA benchmarks say the Jetson Nano is about as fast as, or slightly faster than, the Google Edge TPU dev board, but the Odroid should have a better CPU and may be faster overall if you have some CPU workload as well?

> Does anyone know how much faster the A57 is realistically vs. a decent A53?

Significantly: https://www.anandtech.com/show/7995/arm-shares-updated-cortex-a53a57-performance-expectations

But the S922X should be able to outperform the Jetson Nano easily if it’s only about CPU workloads.

It should indeed be roughly the same CPU performance as an Odroid XU4/MC1 where the A15 runs at 2 GHz. The wider memory bus should give it an advantage.

Ah, good point: the N2 has a 32-bit memory bus and the Jetson Nano a 64-bit one, with DDR4 on the N2 and LPDDR4 on the Nano.
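The bus-width point can be made concrete with the theoretical peak bandwidth formula: bytes per transfer × transfers per second. The 25.6 GB/s in the spec list is consistent with a 64-bit bus at 3200 MT/s, so this sketch assumes that data rate; the 32-bit line is a hypothetical bus at the same rate, not the N2’s actual memory configuration, and real-world bandwidth is always below the theoretical peak.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits // 8
    # bytes per transfer * mega-transfers per second = MB/s; divide by 1000 for GB/s
    return bytes_per_transfer * transfer_rate_mts / 1000


# Assumption: 3200 MT/s, the data rate implied by 25.6 GB/s on a 64-bit bus.
RATE_MTS = 3200

if __name__ == "__main__":
    print(f"64-bit bus: {peak_bandwidth_gbs(64, RATE_MTS):.1f} GB/s")
    print(f"32-bit bus (hypothetical, same rate): {peak_bandwidth_gbs(32, RATE_MTS):.1f} GB/s")
```

At equal data rates, halving the bus width halves the peak bandwidth, which is the advantage the comment refers to.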

CPU-wise, the S922X should beat this decisively. GPU-wise, though, a 128-core Maxwell is still a formidable piece of equipment. The catch? It’s CUDA-only.

PS: my Switch reference was in relation to the Shield TV’s TX1.

What do you mean by CUDA-only? To me it’s a Maxwell-generation GPU with the same encoder and decoder as the TX1.

By CUDA-only I mean no OpenCL (OCL).

Very interesting board. At this price it could indeed be a strong Odroid N2 competitor.

It seems a lot of information can be found in the NVIDIA docs under “Jetson Nano Software Features”:

https://docs.nvidia.com/jetson/l4t/index.html#page/Tegra%2520Linux%2520Driver%2520Package%2520Development%2520Guide%2Fsoftware_features_jetson_nano.html

Edit: for example, 2 PCIe controllers, graphics APIs, video encoder/decoder capabilities, etc.

...and its GLES is 2.0-only. Why, NVIDIA, why?

Wait, elsewhere it says 3.2. I hope so.

This is a Maxwell GPU. I think Nvidia even provides X11 Linux drivers for ARM, just like the desktop version. That is certainly some kind of typo.

I’m aware of the device’s capabilities, hence my reaction. The one place where they refer to GLES2 had better be a documentation oversight :)

BTW, the quoted 472 GFLOPS are for fp16 (half-precision floats), which runs at double throughput compared to fp32 (single-precision floats).

This is quite good, though – the Nvidia GT1030, the smallest desktop card, comes out at 1,127 GFLOPS at FP32, so the Jetson is about 1/5th of the performance if I calculate correctly. However, that is a 30W card and you can’t really add it to any small device. I think it’s quite remarkable. Just adding some info to your comment – I do not think that you meant to criticize it 😉
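Both numbers in this exchange can be checked with the usual peak-throughput formula, cores × clock × FLOPs per core per cycle. The sketch assumes a 921.6 MHz maximum GPU clock (our assumption; the article doesn’t state it), counts one fused multiply-add as two FLOPs, and applies the double FP16 rate mentioned in the comment above.

```python
CUDA_CORES = 128            # from the spec list
GPU_CLOCK_MHZ = 921.6       # assumption: maximum GPU clock, not stated in the article
GT1030_FP32_GFLOPS = 1127   # figure quoted in the comment above


def peak_gflops(cores: int, clock_mhz: float, flops_per_core_per_cycle: int) -> float:
    """Theoretical peak throughput in GFLOPS (MHz * FLOPs / 1000 = GFLOPS)."""
    return cores * clock_mhz * flops_per_core_per_cycle / 1000


# FP32: one FMA per core per cycle = 2 FLOPs.
# FP16: two half-precision values processed per operation, doubling that to 4.
fp32 = peak_gflops(CUDA_CORES, GPU_CLOCK_MHZ, 2)
fp16 = peak_gflops(CUDA_CORES, GPU_CLOCK_MHZ, 4)

if __name__ == "__main__":
    print(f"FP32: {fp32:.1f} GFLOPS")
    print(f"FP16: {fp16:.1f} GFLOPS")
    print(f"FP32 ratio vs GT1030: {fp32 / GT1030_FP32_GFLOPS:.2f}")
```

Under these assumptions FP16 comes out at roughly 472 GFLOPS, matching NVIDIA’s figure, and FP32 at roughly 236 GFLOPS, about 0.21 of the GT1030, which agrees with the “about 1/5th” estimate.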

True, I didn’t mean to criticize it in any way, just clarified the number.

Good for a media center and retro gaming?

The heat sink is a good consideration, but it also suggests the board is not intended for continuous heavy loads over long periods. Maybe a lifespan of 1-3 years.

The heat sink has pre-drilled holes and there’s a PWM fan connector, so it should not be so hard to add an active fan if you so wish. There seem to be two power modes, 5W and 10W, and I guess 5W can be passively cooled, but 10W probably not.

> There seem to be two power modes, 5W and 10W, and I guess 5W can be passively cooled, but 10W probably not

Seems to be adjustable to one’s own needs (e.g. preferring higher single-threaded performance over throttling with all cores active): https://docs.nvidia.com/jetson/l4t/index.html#page/Tegra%2520Linux%2520Driver%2520Package%2520Development%2520Guide%2Fpower_management_nano.html%23wwpID0E0YI0HA
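Whether 5W (or 10W) can be passively cooled is easy to check empirically by watching temperatures under load. The sketch below is a generic reader for the standard Linux thermal sysfs interface (`/sys/class/thermal/thermal_zone*`, with `temp` in millidegrees Celsius); it is not an NVIDIA tool, and we don’t assume any particular zone names on the Jetson Nano.

```python
from pathlib import Path


def read_thermal_zones(base: str = "/sys/class/thermal") -> dict:
    """Return {zone type: temperature in degrees C} for every thermal_zone* under `base`.

    The Linux thermal sysfs interface reports `temp` in millidegrees Celsius.
    If two zones report the same type, the later one overwrites the earlier one.
    """
    temps = {}
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            zone_type = (zone / "type").read_text().strip()
            millidegrees = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # unreadable zone or non-integer reading: skip it
        temps[zone_type] = millidegrees / 1000
    return temps


if __name__ == "__main__":
    for zone_type, celsius in read_thermal_zones().items():
        print(f"{zone_type}: {celsius:.1f} °C")
```

Running this periodically while the board is busy in each power mode would show whether temperatures level off or keep climbing toward throttling.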

To be honest, the board design is pretty raw compared to other boards, and if the vendor already attaches a massive heat sink, it means heat issues are present. For now they don’t seem that interested in stability or design, even though we are talking about NVIDIA here, which is known for beautiful cards. It suggests this is just a side project; they may not provide much support and just want feedback from the market.

The CPU has 4x A53 + 4x A57, but the A53s are not accessible, so it’s effectively 4x A57.

At 1.4 GHz, I actually doubt it equals the XU4 in CPU performance (yes, I’ve seen the Phoronix tests). The A57 is the immediate successor of the A15, and I doubt it’s 50% faster, so I think the XU4 is better in raw CPU. Also, like the XU4, it really needs a fan; at least the TX1 (@ 1.9 GHz) does.

Compared to the N2, it’s definitely slower, even per clock, so maybe 40% slower overall considering the N2’s A73 cores run 400 MHz faster and it has 2 extra A53 cores. Also, it needs a fan or it will throttle. GPU-wise, I can’t really say, but NVIDIA does support OpenGL, and Vulkan drivers are probably available. As for the NPU, I won’t say anything for now 😀

For I/O, NVIDIA wins. Too bad they didn’t keep the PCIe slot or the SATA port they had on the TX1…

I like how NVIDIA thought it worth making a comparison chart against an RPi 3. It’s like comparing a hoop and stick to a Ferrari!

They’re comparing it mainly to TPUs (e.g. Pi + Intel TPU); the ‘bare-naked’ Pi3 appears just as a curiosity, ‘what if one had no TPU’, IMO.

Wrong post

Would you look at that: an affordable ARM64 machine with a kernel that didn’t come out while dinosaurs still roamed the earth, publicly available GPU (etc.) drivers, and a design that looks like it won’t overheat until it fails. I wonder if USB 3.0 etc. actually works reliably enough to drive a LimeSDR for longer than 10 minutes.

It’s kernel 4.9, like the upcoming N2 🙂

4.9 is LTS and recent enough. That’s a massive step up from all the boards out there that only really work fully with a 3.reallyold vendor kernel. I’d go for this over the N2 because of the NVIDIA GPU.

Would this be usable as a main desktop?

We had a similar conversation on this forum not long ago — it really depends what your ‘desktop’ needs are.

For instance, this SBC can be a no-brainer devbox for a GPU/ML developer (it really trounces most other offers in terms of price/performance/features, the lack of OpenCL notwithstanding), but a tough sell for a build-farm enthusiast (like @willy) due to the not-particularly-good power/performance of the CA57s in there; for that use, an Odroid N2 would be a better pick.

This looks like an excellent vision platform for hacking. At $129 for the module, it is likely not going to be used in any production items sold in volume. But if you want to make a robot vision system, this board is hard to beat; it beats the Lindenis V5 board. First question: does it support binocular depth mapping? It supports two cameras, but I don’t see anything about depth mapping.

Note that you will still want to train on desktop level hardware with an RTX class card.

From my understanding the Jetson software has some packages for such tasks.

https://developer.nvidia.com/embedded/develop/software

There it links to the Isaac SDK:

https://developer.nvidia.com/isaac-sdk

There it lists a “Stereo Depth Estimation” feature. Is that what you mean? I think the advantage of these packages is that they are built for the Jetson platform’s hardware, i.e. they make use of the CUDA cores wherever possible.
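For context on what stereo depth estimation ultimately computes: once the two camera images are rectified and a disparity map has been found (the expensive matching step, which is where GPU acceleration helps), depth follows from the standard pinhole-stereo relation Z = f·B/d. This is generic geometry, not the Isaac SDK or ZED SDK API, and the focal length and baseline below are made-up illustration values.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z (metres) of a point seen by a rectified stereo pair: Z = f * B / d.

    disparity_px: horizontal pixel offset of the point between left and right images
    focal_px:     focal length expressed in pixels (from camera calibration)
    baseline_m:   distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means the point is at infinity")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical calibration values, for illustration only.
    FOCAL_PX = 640.0
    BASELINE_M = 0.125
    for d in (80.0, 40.0, 20.0):
        z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
        print(f"disparity {d:>4.0f} px -> depth {z:.2f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the depth, so a one-pixel matching error matters much more for distant objects than for nearby ones.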

Well, NVIDIA is aiming at in-car use, and in one video they show the module displaying feeds from 8 cameras at once. So maybe it can?

Yes, it can, with a ZED camera and the ZED SDK:

https://www.stereolabs.com/blog/announcing-zed-sdk-for-jetson-nano/

If it helps?

Can this GPU handle 4K@60Hz OpenGL ES graphics? (Not 4K video, just a 4K OpenGL ES Qt/QML application.)

Without having seen the device caps, I’d say it should. My MediaTek Chromebook drives a 4K@30Hz desktop and GLES without issues, and the 30Hz limitation is due to the sink link, not to performance.

“Jetbox”??? Do you mean JetBot?