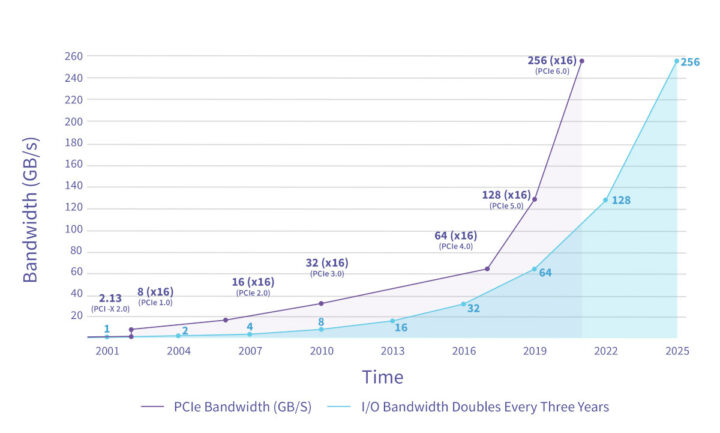

The PCI-SIG has just released the PCIe 6.0 specification, which reaches 64 GT/s transfer speeds, or 256 GB/s, doubling the data rate of the PCIe 5.0 specification. It is aimed at Big Data applications in the data center, artificial intelligence/machine learning, HPC, automotive, IoT, and military/aerospace sectors.

PCIe 6.0 follows PCIe 5.0, announced in 2019, and achieves the 256 GB/s data rate in a 16-lane configuration, with the Tx and Rx directions added together. Implementation will take time, as even PCIe 5.0 is not widely used yet. In the embedded space, I have only found PCIe 5.0 in the recently announced Alder Lake-S Desktop IoT processors, while the Alder Lake Mobile IoT processors are still limited to PCIe 4.0.

- 64 GT/s raw data rate and up to 256 GB/s via x16 configuration

- Pulse Amplitude Modulation with 4 levels (PAM4) signaling, leveraging existing PAM4 already available in the industry

- Lightweight Forward Error Correction (FEC) and Cyclic Redundancy Check (CRC) mitigate the bit error rate increase associated with PAM4 signaling

- Flit (flow control unit) based encoding supports PAM4 modulation and works in conjunction with FEC and CRC to deliver the doubled bandwidth

- Updated packet layout used in Flit Mode to provide additional functionality and simplify processing

- Low latency and reduced bandwidth overhead

- Maintains backward compatibility with all previous generations of PCIe technology
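For readers who have not met PAM4 before, here is a minimal Python sketch of the idea: each symbol carries two bits on one of four amplitude levels, so PCIe 6.0 can double the bit rate of PCIe 5.0's 32 GT/s NRZ signaling without raising the symbol rate. The Gray-coded level table and the helper names are assumptions for illustration, not taken from the PCIe 6.0 specification. Gray coding is shown because a receiver that mistakes a symbol for a neighboring level then corrupts only one bit.

```python
# Minimal PAM4 sketch: bits are packed two at a time into one of four levels.
# ASSUMPTION: this Gray-coded level table is illustrative, not copied from
# the PCIe 6.0 specification. Adjacent levels differ by exactly one bit.
GRAY_PAM4 = {
    (0, 0): -3,
    (0, 1): -1,
    (1, 1): +1,
    (1, 0): +3,
}
LEVEL_TO_BITS = {level: bits for bits, level in GRAY_PAM4.items()}


def pam4_encode(bits: list[int]) -> list[int]:
    """Map an even-length bit list to PAM4 levels (2 bits per symbol)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols: list[int]) -> list[int]:
    """Inverse mapping: each level expands back into its two bits."""
    out: list[int] = []
    for level in symbols:
        out.extend(LEVEL_TO_BITS[level])
    return out


def symbol_rate_gbaud(transfer_rate_gt_s: float, bits_per_symbol: int) -> float:
    """Symbol rate needed to carry a given raw bit rate."""
    return transfer_rate_gt_s / bits_per_symbol


if __name__ == "__main__":
    data = [1, 0, 0, 0, 1, 1, 0, 1]
    symbols = pam4_encode(data)
    print(symbols)  # [3, -3, 1, -1]
    assert pam4_decode(symbols) == data
    # NRZ (1 bit/symbol) at 32 GT/s vs PAM4 (2 bits/symbol) at 64 GT/s
    print(symbol_rate_gbaud(32, 1), symbol_rate_gbaud(64, 2))  # 32.0 32.0
```

Both generations end up at the same symbol rate; the price is a smaller gap between levels, which is why PCIe 6.0 adds FEC.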

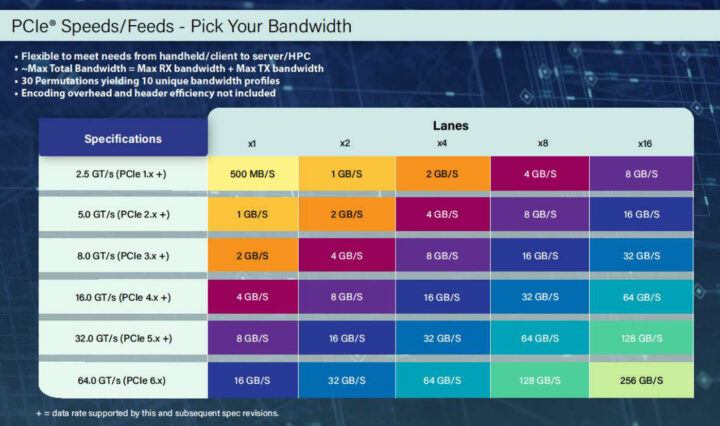

I’m glad I found the handy table above, as I’m always confused about the speeds delivered by specific versions of PCIe and the number of lanes. I don’t expect to write much about PCIe 6.0 any time soon, though. Most Arm processors we cover here only started to come with a PCIe 2.1 interface a few years ago, starting with the Allwinner H6 in 2017, and that PCIe implementation did not work that well. The Rockchip RK3588 octa-core processor that should become available this year features a much faster 4-lane PCIe 3.0 interface that should deliver up to 8GB/s (Tx and Rx combined, as in the table). PCIe 3.0 was introduced in 2010, so if it takes as long for the new PCIe specification to make it into mid-range processors, I can’t wait to write about the latest Rockchip RK3988 processor with PCIe 6.0 once it comes out in 2032!

You’ll find more details about PCIe 6.0 on the PCI-SIG website.

Via Liliputing and Anandtech

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


> I’d glad I found he handy table above

For anyone wondering how PCIe Gen2 speeds with 5 GT/s can result in 1 GB/s per lane while Gen3 operation achieves 2 GB/s throughput with only 8 GT/s instead of 10 GT/s…

That’s because Gen1/Gen2 use 8b/10b encoding, while Gen3 adopted the far more efficient 128b/130b encoding. So it’s not really 2 GB/s with Gen3 but approx. 1.969 GB/s 😉

And that’s without counting the 24-28 bytes of framing overhead on top of each 1514-byte packet (~3.4% in total) 🙂
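To make the 8b/10b versus 128b/130b point concrete, a short Python sketch can compute per-lane throughput from the raw transfer rate and the line-code efficiency. It uses decimal gigabytes and, like the table, adds both directions together by default; the dictionary and function names are illustrative, and framing overhead is not included.

```python
# Per-lane PCIe payload rate after line coding, in decimal GB/s (10**9 bytes).
# Like the article's table, the default adds the Tx and Rx directions together.
LINE_CODE_EFFICIENCY = {
    "8b/10b": 8 / 10,        # PCIe 1.x and 2.x: 2 of every 10 bits are overhead
    "128b/130b": 128 / 130,  # PCIe 3.0 to 5.0: 2 of every 130 bits are overhead
}


def lane_gbytes_per_s(gt_per_s: float, code: str, both_directions: bool = True) -> float:
    """Payload GB/s for one lane, assuming 1 bit per transfer (NRZ)."""
    one_way = gt_per_s * LINE_CODE_EFFICIENCY[code] / 8  # Gbit/s -> GB/s
    return 2 * one_way if both_directions else one_way


for gen, rate, code in [
    ("Gen1", 2.5, "8b/10b"),
    ("Gen2", 5.0, "8b/10b"),
    ("Gen3", 8.0, "128b/130b"),
]:
    print(gen, round(lane_gbytes_per_s(rate, code), 3))
# Gen1 0.5
# Gen2 1.0
# Gen3 1.969
```

Passing `both_directions=False` gives the per-direction figure, e.g. about 0.985 GB/s for a Gen3 lane.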

Thanks for that info and the wiki link. It brings back memories of my university years studying Information Theory, like channel coding.

Also from that wiki a nice history:

“64b/66b encoding, introduced for 10 Gigabit Ethernet’s 10GBASE-R Physical Medium Dependent (PMD) interfaces, is a lower-overhead alternative to 8b/10b encoding, having a two-bit overhead per 64 bits (instead of eight bits) of encoded data. This scheme is considerably different in design from 8b/10b encoding, and does not explicitly guarantee DC balance, short run length, and transition density (these features are achieved statistically via scrambling). 64b/66b encoding has been extended to the 128b/130b and 128b/132b encoding variants for PCI Express 3.0 and USB 3.1, respectively, replacing the 8b/10b encoding in earlier revisions of each standard”
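The quote notes that 64b/66b gets its DC balance and transition density statistically, via scrambling. Below is a minimal Python sketch of a self-synchronizing scrambler using the 10GBASE-R polynomial 1 + x^39 + x^58; the function names are hypothetical, and PCIe 3.0's 128b/130b uses its own, different scrambler. It shows that a long run of zeros picks up transitions, and that a receiver starting from the wrong state recovers after 58 bits.

```python
# Self-synchronizing scrambler sketch, polynomial G(x) = 1 + x^39 + x^58
# (as used by 10GBASE-R). Only the 64-bit payload of each 66-bit block is
# scrambled; the 2-bit sync header ("01" data, "10" control) is sent as-is.
MASK58 = (1 << 58) - 1


def scramble(bits: list[int], state: int) -> tuple[list[int], int]:
    """Return (scrambled bits, new state); state holds the last 58 output bits."""
    out = []
    for b in bits:
        s = b ^ ((state >> 38) & 1) ^ ((state >> 57) & 1)  # taps at n-39, n-58
        state = ((state << 1) | s) & MASK58
        out.append(s)
    return out, state


def descramble(bits: list[int], state: int) -> tuple[list[int], int]:
    """Inverse operation: the *received* bits are shifted into the register."""
    out = []
    for s in bits:
        out.append(s ^ ((state >> 38) & 1) ^ ((state >> 57) & 1))
        state = ((state << 1) | s) & MASK58
    return out, state


if __name__ == "__main__":
    payload = [0] * 64 + [1, 0, 1, 1] * 16  # starts with a long run of zeros
    tx, _ = scramble(payload, state=1)       # arbitrary non-zero seed
    assert 1 in tx[:64]                      # the zero run now has transitions
    rx, _ = descramble(tx, state=0)          # receiver starts out of sync
    assert rx[58:] == payload[58:]           # in sync after 58 received bits
```

The self-synchronizing design avoids any explicit seed exchange, at the cost of error multiplication: one flipped bit on the wire corrupts up to three descrambled bits.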

Thanks for the info, Jean-Luc. Just to clarify the table, all bandwidths need to be halved since they sum Rx and Tx. So PCIe 6.0 x16 is 128GB/s (or 1 Tbps) in each direction. This should be enough to support dual-400Gbps NICs, for example.
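The per-direction arithmetic in the comment above can be checked in a few lines of Python. This is a raw-rate sketch only: it treats 64 GT/s as 64 Gbit/s of raw data per lane and direction, and ignores Flit-mode, protocol and NIC overheads, so real throughput will be somewhat lower.

```python
# Raw PCIe 6.0 x16 bandwidth per direction vs. a dual-port 400 Gbps NIC.
# Assumption: 64 GT/s = 64 Gbit/s of raw data per lane and direction;
# Flit-mode, protocol and NIC overheads are ignored.
GT_PER_S = 64   # PCIe 6.0 per-lane rate
LANES = 16

per_direction_gbit = GT_PER_S * LANES            # 1024 Gbit/s each way
per_direction_gbyte = per_direction_gbit / 8     # 128.0 GB/s each way
both_directions_gbyte = 2 * per_direction_gbyte  # 256.0 GB/s, the table's figure

nic_gbit = 2 * 400                               # two 400 Gbps ports
headroom_gbit = per_direction_gbit - nic_gbit    # 224 Gbit/s left over

print(per_direction_gbyte, both_directions_gbyte, headroom_gbit)
# 128.0 256.0 224
```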

Thanks for the news! Looking forward to your review of the new Rockchip CPU in 2032

“The PCI-SIG has just released the PCIe 6.0 specification reaching 64 GB/s”

… Should have been 64GT/s, not 64GB/s

Maximum number of Bytes per second: 252061538432 excl. overhead.

That means we’d only get 234.7 GB/sec (or only 252 Marketing-GB/sec) before the PCIe protocol overhead tax is paid.

… Anyway, I think the “handy table” is slightly misleading.

As far as I understand, the calculations for PCIe 1.0 are:

2.5 GT/s = 2.5GT transmitted and 2.5GT received per second.

That is a total of 5 GT/s, but that requires a sustained bidirectional transfer.

So per lane, you’d have …

2500000000 bits per second = 250000000 Bytes per second (because a byte in this case occupies 10 bits, since it’s using 8b/10b encoding)

250000000 Bytes per second / 1024 / 1024 = 238.4185 MB/sec.

So 1 lane can transfer 238.4 MB in each direction.

This is advertised as 250 MB per second.

For PCIe 3.0, things get worse; it’s not twice the speed of PCIe 2.0.

In PCIe 3.0, we have 8GT/s at 128b/130b encoding, resulting in …

8GT/s = 8GT transmitted and 8GT received per second.

8000000000 transfers per second = 8000000000 bits per second.

8000000000 bits per second * 128 / 130 / 8 = 984615384 Bytes per second

984615384 Bytes per second / 1024 / 1024 = 939.0024 MB/s

Compared with doubling PCIe 2.0’s 476.837 MB/s to 953.674 MB/sec, PCIe 3.0 does not quite deliver twice the speed of PCIe 2.0…

-Don’t even get me started on USB – I’d like everyone to think they get what they pay for, rather than getting “up to, but excluding what they pay for”!
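To check the arithmetic in the comment above, here is a small Python sketch that reproduces its per-lane, per-direction figures. It divides by 1024 * 1024 exactly as the comment does (binary megabytes, strictly MiB), and the helper name `lane_mib_per_s` is only for illustration.

```python
# Per-lane, per-direction PCIe payload rate in binary megabytes (MiB/s),
# dividing by 1024 * 1024 the same way the comment above does.
MIB = 1024 * 1024


def lane_mib_per_s(gt_per_s: float, payload_bits: int, coded_bits: int) -> float:
    """One lane, one direction: raw bits -> payload bits -> bytes -> MiB."""
    bytes_per_s = gt_per_s * 1e9 * payload_bits / coded_bits / 8
    return bytes_per_s / MIB


gen1 = lane_mib_per_s(2.5, 8, 10)      # 8b/10b
gen2 = lane_mib_per_s(5.0, 8, 10)      # 8b/10b
gen3 = lane_mib_per_s(8.0, 128, 130)   # 128b/130b

print(f"{gen1:.3f} {gen2:.3f} {2 * gen2:.3f} {gen3:.3f}")
# 238.419 476.837 953.674 939.002
print(f"Gen3 / Gen2 = {gen3 / gen2:.3f}")
# Gen3 / Gen2 = 1.969
```

The ratio works out to exactly 2 × 128/130, so the shortfall from a true doubling is precisely the 2-in-130 overhead of 128b/130b encoding (about 1.5%).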