Whenever I ran out of RAM on a Linux system, I used to enable swap on the storage device to provide a bit of extra memory. The main advantage is that it does not require extra hardware, but it comes at the cost of much slower access and potential issues such as wear and tear, unless you only use it temporarily.

This weekend, I compiled Arm Compute Library on an ODROID-XU4Q board. The first attempt crashed because the system ran out of memory, so I enabled swap on the eMMC flash module, restarted the build, and completed it successfully. However, I was told it would have been better to enable ZRAM instead.

So what is ZRAM? Wikipedia explains:

> zram, formerly called compcache, is a Linux kernel module for creating a compressed block device in RAM, i.e. a RAM disk, but with on-the-fly “disk” compression.

So it’s similar to swap, except it operates in RAM and compresses memory. It’s also possible to combine it with swap, but if you have to go that route, it may be worth considering upgrading the memory, or changing to a more powerful hardware platform. ZRAM support has been considered stable since Linux 3.14 released in early 2014.

Before showing how to use ZRAM, let’s check the memory in my board.

```
free -m
              total        used        free      shared  buff/cache   available
Mem:           1994         275        1216          30         503        1632
Swap:             0           0           0
```

That’s about 2GB RAM, and swap is disabled. In theory, enabling ZRAM in Ubuntu or Debian should be one simple step:

```
sudo apt install zram-config
```

Installation went just fine, but it did not enable ZRAM. The first thing to check is whether ZRAM is enabled in the Linux kernel by checking out /proc/config or /proc/config.gz:

```
zcat /proc/config.gz | grep ZRAM
CONFIG_ZRAM=m
CONFIG_ZRAM_WRITEBACK=y
```
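The same check can be scripted. The sketch below is only an illustration: zram_config_state is a made-up helper name, and it assumes the kernel exposes its configuration as text in the usual CONFIG_NAME=value form.

```shell
# Classify how zram support is configured, reading kernel config text on stdin.
# Prints "builtin", "module", or "absent". (Hypothetical helper name.)
zram_config_state() {
    awk -F= '
        $1 == "CONFIG_ZRAM" {
            state = ($2 == "y") ? "builtin" : (($2 == "m") ? "module" : "absent")
        }
        END { print (state == "" ? "absent" : state) }
    '
}

# Typical use on a kernel that exposes its configuration:
#   zcat /proc/config.gz | zram_config_state
# When the answer is "module", the module can be loaded by hand (needs root):
#   sudo modprobe zram
#   lsmod | grep zram
```

Loading the module by hand only makes the zram devices available; whether the distribution's service then sets them up as swap is a separate question.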

ZRAM is built as a module. I tried different things to enable it and check, but I was not sure where to go at this stage, as it was almost time to go to bed. What’s usually the best course of action in this case? Stay awake and work overnight to fix the issue? Nope! Rookie mistake. Years of experience have taught me that you just turn off your equipment and have a good night’s sleep.

Morning time, breakfast, walk to office, turn on computer and board, et voilà:

```
odroid@odroid:~$ free -m
              total        used        free      shared  buff/cache   available
Mem:           1994         281        1222          22         490        1634
Swap:           997           0         997
odroid@odroid:~$ cat /proc/swaps
Filename        Type            Size    Used    Priority
/dev/zram0      partition       127648  0       5
/dev/zram1      partition       127648  0       5
/dev/zram2      partition       127648  0       5
/dev/zram3      partition       127648  0       5
/dev/zram4      partition       127648  0       5
/dev/zram5      partition       127648  0       5
/dev/zram6      partition       127648  0       5
/dev/zram7      partition       127648  0       5
```

Success! So I would just have had to reboot the board to make it work the previous day… We now have 1GB of ZRAM swap enabled across 8 block devices. I assume those are used as needed to avoid consuming RAM unnecessarily.
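To double-check that the eight devices really add up to the 997 MB reported by free, the Size column of /proc/swaps can be summed. This is a small sketch; swap_total_mib is a made-up helper name, and it assumes the sizes are in KiB as in the listing above.

```shell
# Sum the Size column (KiB) of /proc/swaps-style text on stdin and print
# the total in whole MiB. The first line is the header and is skipped.
swap_total_mib() {
    awk 'NR > 1 { total += $3 } END { printf "%d\n", total / 1024 }'
}

# On a live system:
#   swap_total_mib < /proc/swaps
```

With eight devices of 127648 KiB each, the total is 1021184 KiB, which truncates to 997 MiB.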

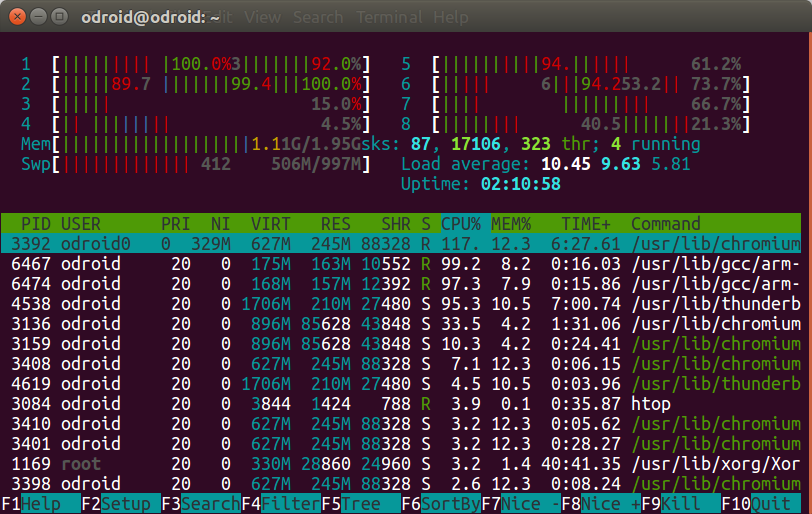

So let’s try to use that swap by making things a bit more challenging than just building the Arm Compute Library, running a few programs like Chromium and Thunderbird, and monitoring RAM usage at the same time with htop.

At the final stage we can see 506 MB “swap” is used, with 1.11GB memory, probably since the rest of the RAM is used for ZRAM. I was however wrong in my initial assumption that ZRAM block devices would be used one by one as needed, as all eight devices were shown to hold about the same amount of data:

```
cat /proc/swaps
Filename        Type            Size    Used    Priority
/dev/zram0      partition       127648  48156   5
/dev/zram1      partition       127648  48452   5
/dev/zram2      partition       127648  47688   5
/dev/zram3      partition       127648  46884   5
/dev/zram4      partition       127648  47780   5
/dev/zram5      partition       127648  47320   5
/dev/zram6      partition       127648  48212   5
/dev/zram7      partition       127648  46948   5
```

That’s the output of free for reference:

```
free -m
              total        used        free      shared  buff/cache   available
Mem:           1994         877         813          47         303        1104
Swap:           997         368         628
```

The good news is that the build could complete just fine with ZRAM, even with Chromium and Firefox running in the background.

ZRAM requires compressing and decompressing data constantly, and reduces the amount of uncompressed RAM your system can access, so it may actually decrease system performance. However, if you run out of RAM frequently or for a specific application it may be worth enabling it. I just needed ZRAM for a single build, so I could disable it now by removing it:

```
sudo apt remove zram-config
```

However, you may also consider tweaking it using the zramctl utility:

```
zramctl --help

Usage:
 zramctl [options] <device>
 zramctl -r <device> [...]
 zramctl [options] -f | <device> -s <size>

Set up and control zram devices.

Options:
 -a, --algorithm lzo|lz4   compression algorithm to use
 -b, --bytes               print sizes in bytes rather than in human readable format
 -f, --find                find a free device
 -n, --noheadings          don't print headings
 -o, --output <list>       columns to use for status output
     --raw                 use raw status output format
 -r, --reset               reset all specified devices
 -s, --size <size>         device size
 -t, --streams <number>    number of compression streams

 -h, --help     display this help
 -V, --version  display version

Available output columns:
        NAME  zram device name
    DISKSIZE  limit on the uncompressed amount of data
        DATA  uncompressed size of stored data
       COMPR  compressed size of stored data
   ALGORITHM  the selected compression algorithm
     STREAMS  number of concurrent compress operations
  ZERO-PAGES  empty pages with no allocated memory
       TOTAL  all memory including allocator fragmentation and metadata overhead
   MEM-LIMIT  memory limit used to store compressed data
    MEM-USED  memory zram have been consumed to store compressed data
    MIGRATED  number of objects migrated by compaction
  MOUNTPOINT  where the device is mounted
```

Running zramctl without parameters allows us to see how much compressed and uncompressed data is actually held by each block device:

```
zramctl
NAME       ALGORITHM DISKSIZE  DATA  COMPR  TOTAL STREAMS MOUNTPOINT
/dev/zram7 lzo         124.7M 29.8M   9.7M    13M       8 [SWAP]
/dev/zram6 lzo         124.7M 30.6M  10.1M  13.5M       8 [SWAP]
/dev/zram5 lzo         124.7M 30.5M   9.6M  12.8M       8 [SWAP]
/dev/zram4 lzo         124.7M 29.7M   9.9M  10.6M       8 [SWAP]
/dev/zram3 lzo         124.7M 29.6M  10.2M  10.9M       8 [SWAP]
/dev/zram2 lzo         124.7M   30M   9.6M  10.3M       8 [SWAP]
/dev/zram1 lzo         124.7M 30.7M  10.2M  13.4M       8 [SWAP]
/dev/zram0 lzo         124.7M 30.4M   9.5M  12.7M       8 [SWAP]
```
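The DATA and COMPR columns make it easy to work out the overall compression ratio. The sketch below relies only on the --bytes, --noheadings and --output options listed in the help text above; zram_ratio is a made-up helper name.

```shell
# Read "DATA COMPR" pairs on stdin, one zram device per line, and print the
# overall ratio of uncompressed to compressed data.
zram_ratio() {
    LC_ALL=C awk '
        { data += $1; compr += $2 }
        END { if (compr > 0) printf "%.2f\n", data / compr; else print "n/a" }
    '
}

# On a live system:
#   zramctl --bytes --noheadings --output DATA,COMPR | zram_ratio
```

Feeding it the figures from the listing above (241.3M of data stored in 78.8M) gives a ratio of roughly 3:1.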

The ZRAM swap service is handled by systemd in Ubuntu 18.04, and you can check out /etc/systemd/system/multi-user.target.wants/zram-config.service to understand how it is set up and to control it at boot time too. For example, we can see one block device is set per processor core. Exynos 5422 is an eight-core processor, and that’s why we have 8 block devices here. Any other tips are appreciated in comments.
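The 127648 KiB device size seen earlier is consistent with half of the RAM being split evenly between one device per core, which is also how a commenter below describes zram-config. Here is a sketch of that arithmetic; zram_device_kib is a made-up helper name, and the 2042368 KiB total in the usage line is a hypothetical MemTotal back-computed from the device size, not a value taken from the board.

```shell
# Per-device size in KiB when half of total memory is split evenly across
# one zram device per CPU core. Arguments: total memory in KiB, core count.
zram_device_kib() {
    echo $(( $1 / 2 / $2 ))
}

# On a live system, both inputs can be read from the kernel:
#   mem_kib=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
#   zram_device_kib "$mem_kib" "$(nproc)"
```

For example, zram_device_kib 2042368 8 prints 127648.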

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Switching to lz4 from lzo when possible is always a good idea, since compression is slightly faster and decompression is magnitudes faster. Also limiting zram devices to the count of big cores on a big.LITTLE system could be a good idea. In my tests an A15 at 2 GHz was almost three times faster than an A7 at 1.4 GHz.

On the other hand these zram devices are just used as normal swap devices and the kernel treats these devices as being slow as hell since historically swap ends up on HDDs that show a horribly low random IO performance. So the kernel will take care that pages that end up swapped out (be it to disk or to such a zram device) will only be accessed again if really needed. That’s also what can be seen on your system with 506 MB used for swap while only utilizing 1.11 GB out of 1.95 GB.

Is there a reason why zram is not enabled by default on Armbian based on Debian stretch? I think it will be very useful especially for my OrangePi One with 512 MB RAM running OpenHab/InfluxDB/Grafana at same time.

It’s only active on recent Ubuntu Xenial builds (since on Ubuntu it’s simply adding the zram-config package to default package list and you’re done). I added a generic zram activation handler but other devs objected for reasons I partially forgot in the meantime.

But all you need is to edit line 422 in /etc/init.d/armhwinfo, remove the ‘#’ sign there and reboot:

https://github.com/armbian/build/blob/23a81c22a28264abb627c590ce6e29776a22b091/packages/bsp/common/etc/init.d/armhwinfo#L422

Thanks a lot for explanation.

Just as feedback as I tried your tweak and it worked but unfortunately the command of changing the compression algorithm (echo lz4 >/sys/block/zram${i}/comp_algorithm 2>/dev/null) is not working:

zram: Can’t change algorithm for initialized device

When I played with zramctl, I had to turn off ZRAM first, before running the command. So you may have to disable it first with something like swapoff /dev/zram${i}.

If it is in an initialization script, that’s even easier: just run your command before ZRAM is enabled with swapon.

Thanks for reply but this error pops up from dmesg during the creation of zram devices done by the script armhwinfo tkaiser has pointed out. And in the script the creation of the devices is definitely after the algorithm selection. Anyway I’m glad that currently I have almost half of the memory free but I’m not sure if the kernel does not limit the caching to RAM assuming that the swap is slow which can lead to slower response of the system (this is not so critical as my processes are not so fast changing)

Unfortunately most of this zram stuff is kernel dependent (with Armbian we try to support +30 different kernels for the various boards but with some of them some things simply don’t work or work differently when trying to adjust things from userspace).

Two important notes wrt activating zram on Armbian (when not using Armbian’s Ubuntu variant where zram is enabled by default now).

1) It’s important to remove/comment the last line in /etc/sysctl.conf that reads ‘vm.swappiness=0’. This will restore default vm.swappiness (60) which is important to get a performant system when swapping to zram occurs.

2) At least with mainline kernel ‘comp_algorithm’ has to be defined prior to ‘disksize’ otherwise lzo remains active.
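Putting the two notes and the earlier swapoff advice together, the order of operations for switching one device to lz4 could look like the sketch below. It only prints the commands instead of running them, since they need root and a real zram device. The function name, the 128M size and the priority of 5 are illustrative assumptions; the sysfs attributes (reset, comp_algorithm, disksize) are the ones described in the kernel's zram documentation.

```shell
# Print (not run) the steps to re-create one zram swap device with a given
# algorithm. Arguments: device index, algorithm, size (e.g. 128M), priority.
zram_recreate_plan() {
    dev="zram$1"
    echo "swapoff /dev/$dev"                         # device must not be in use
    echo "echo 1 > /sys/block/$dev/reset"            # back to uninitialized state
    echo "echo $2 > /sys/block/$dev/comp_algorithm"  # algorithm first...
    echo "echo $3 > /sys/block/$dev/disksize"        # ...then the size
    echo "mkswap /dev/$dev"
    echo "swapon -p $4 /dev/$dev"
}

zram_recreate_plan 0 lz4 128M 5
```

The current swappiness can be checked with sysctl vm.swappiness, and reading comp_algorithm back afterwards shows whether the kernel accepted the change. As noted above, behaviour differs between kernels, so treat this as a starting point.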

Ha, I just found this out by accident. I was about to start compiling large packages and thought zram would help but wouldn’t you know it, it’s installed by default on whichever armbian version I flashed! Thanks tkaiser, that’s allowed me to run two Make threads comfortably rather than one saving unknown but large amounts of time.

Agreed on limiting the number of zram devices. It is usually enough to use half the cores and I would not go further than 2, so as not to impact latency when ZRAM is doing its thing.

I’ve used 1/2 A15 and for Ivy Bridge and been very happy thus far.

Nice info! Thanks!

The most common misunderstanding wrt zram is SBC users thinking zram would automagically reduce the amount of RAM available. People see half of the RAM assigned as swap via zram (as above, that’s Ubuntu’s default) and think now they could only use half of the RAM any more.

It’s not how this works. In the above example still 1.95 GB RAM is available. At one point in time 1.11 GB is used while 506 MB are reported as being swapped out. By looking at the zramctl output we see that there’s an actual 1:3 compression ratio so zram uses ~170 MB of the available and used DRAM to store the 506 MB pages that are swapped out.

So yes, zram is eating up available RAM when situations with higher ‘memory pressure’ occur, but the kernel can deal with that pretty fine and you end up being able to run more memory intensive applications at the same time than without zram and this without the usual swapping slowdowns caused by ultra slow storage access with traditional swap on HDD (or SD cards / eMMC on SBC). There’s a reason zram is default on billions of devices (Android thingies).

To make it really efficient a few more tunables exist of course. E.g. on systems that only use zram and no traditional swap on spinning rust changing the default swap behaviour is a good idea (by default 8 pages are handled at the same time since HDDs are bad at accessing small chunks of data due to their nature). The following would tell the kernel to switch from 8 to just 1 page which is pretty fine with zram (and also swap on SD card and eMMC that also do not suffer from HDD behaviour):
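The command that followed this comment is missing from the text as preserved here. Assuming the tunable meant is vm.page-cluster, which is a power-of-two exponent whose default of 3 corresponds to the 8 pages mentioned, a sketch would be the following (pages_per_swap_read is a made-up helper that just shows the arithmetic):

```shell
# vm.page-cluster is an exponent: the kernel reads up to 2^value consecutive
# pages from swap in a single attempt.
pages_per_swap_read() {
    echo $(( 1 << $1 ))
}

pages_per_swap_read 3   # kernel default
pages_per_swap_read 0   # one page at a time, as suggested for zram

# Applying the setting (needs root):
#   sudo sysctl vm.page-cluster=0
```

The two calls print 8 and 1 respectively. To keep the setting across reboots it would normally go into /etc/sysctl.conf or a file under /etc/sysctl.d/.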

note:

cat file.gz | grep something

may be shortened to

zgrep something myfile.gz

Every time there is a “needless use of cat”, the brain of a kitten implodes.

ZRAM is great, I have been using it for over a year on a 1 GB VPS and several SBCs. I confirm that lz4 works better than lzo for swapping. I also found that there is no need for several zram devices; that advice is a remnant from the times when zram code was not multithreaded. I create ZRAM swap alongside disk swap but give it higher priority and set vm.swappiness=100 to encourage its use. The kernel switches to disk swap when no more zram can be filled. I set its size to 3x of RAM, as my measurements also show 3x compression ratios and the overhead is negligible. One can also keep logs and tmp on it.

I thought you might fancy some stats:

1 GB VPS hosting ~10 websites with nginx, mysql and php uses 732 MB of ZRAM swap that is compressed to 195 MB (3.75x ratio). Reading ~70 KB/sec and writing ~12 KB/sec to ZRAM does not impact CPU at all. The system still has ~150 MB of disk cache and does ~200 read op/sec from SSD for ~30 sql queries/sec
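A setup along the lines described in this comment (a single lz4 zram device sized at a multiple of RAM, preferred over disk swap, with swappiness raised) might be sketched as follows. This is not the commenter's actual configuration: the function name, the priorities 100 and 10, and the /swapfile path are all assumptions for illustration, and the commands are printed rather than run because they need root.

```shell
# Print (not run) the commands for one zram swap device that is preferred
# over an existing disk swap area.
# Arguments: RAM in MiB, size multiplier, path of the disk swap (hypothetical).
zram_priority_plan() {
    echo "modprobe zram"
    echo "zramctl --algorithm lz4 --size $(( $1 * $2 ))M /dev/zram0"
    echo "mkswap /dev/zram0"
    echo "swapon -p 100 /dev/zram0"   # higher priority: filled first
    echo "swapon -p 10 $3"            # lower priority: used once zram is full
    echo "sysctl vm.swappiness=100"
}

zram_priority_plan 1024 3 /swapfile
```

If the disk swap is already active (for instance through /etc/fstab), it would have to be turned off with swapoff before it can be re-enabled with a different priority.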

But you didn’t show your syntax for changing lzo to lz4 or setting a higher priority. You also didn’t say how you changed to or grouped (in my case 4 instances of zram [4 core CPU]) into only one instance.

Yes, that would be too much for a comment. Basically it is all in manuals and searchable on the web. Do not be lazy 😉

It’s not about laziness, it’s about completeness. I am bookmarking this article to share with others and it would be nice to say “Everything is explained” instead of bookmarking all those other places and linking them to here for people to run all over the web. I’m a firm believer in KISS and not giving people a trail to follow where they get lost or give up before reaching the end. 🙂

Here is a good start: https://gist.github.com/sultanqasim/79799883c6b81c710e36a38008dfa374

Tune it to your liking. I still believe that the only way to comprehension is independent research 🙂

@Tesla said: “Yes, that would be too much for a comment. Basically it is all in manuals and searchable on the web. Do not be lazy… I still believe that the only way to comprehension is independent research.”

Yeah, now everyone else has to waste his or her time to duplicate the work you already did while you hide the solution because you think you know what is best for us. Or maybe you were just lazy and didn’t properly document your work, and this is a way for you to avoid admitting it. I sure hope it’s the latter because truly believing you are helping people by hiding things from them has no place in an Open Source community of Linux users!

Anyway, thanks for the one 2-year old GitHub link you provided, it’s better than nothing.

this person’s posted a comment on a website, they’re not writing up a how-to, they’re sharing anecdotal information.

if that were true, the author should not have included the hashtags How-to and Tutorial on this post at all.

Rocket you have to remember the clique here have contempt for users.

Aaah, thread over everyone, this person’s a regular troll on how-tos if this comment didn’t make it easy enough to tell:

modernromantix.com/2016/04/02/help-my-boyfriend-is-an-internet-troll-part-1/#comment-207

Also by this user, “You forgot to mention sudo is required”:

tecmint.com/cheat-command-line-cheat-sheet-for-linux-users/comment-page-3/#comment-753513

And “This guide about virtual machines, you didn’t mention virtual machines!”:

brianlinkletter.com/installing-debian-linux-in-a-virtualbox-virtual-machine/#comment-74904

> I also found that there is no need for several zram devices, that advise is a remnant from the times when zram code was not multithreaded.

Problem is you can never know which ancient version of ZRAM code will be running in those pesky vendor kernels so it is always a good idea to be on the safe side and create several zram devices.

Couple things to note about ZRAM:

1) as you use more, there’s less and less room to keep inode cache and demand-paged shared files (mostly libraries and shared copies of forked daemons) in memory, so those demand-paged files are being evicted more and more often, resulting in increased read bandwidth to the system disk.

2) if you enable both ZRAM swap and disk swap, generally people apply the ZRAM swap at a higher priority so it gets used first. The problem is that the disk swap will only get used AFTER the ZRAM is full, and then the disk swap will become progressively less stale than the ZRAM – the ZRAM will become full of static paged-out content – which would make much more sense to page out to disk and free that RAM.

3) some configurations come with a certain amount of disk swap at a higher priority than the ZRAM swap. See LineageOS for example. The idea is that the disk swap will then fill with static paged-out content and performance will improve as the ZRAM gets better and better locality. But of course this is bad for startup performance.

In my own experience it’s best to allocate about 8 ZRAM disks with total size equal to system RAM, and then allocate disk swap the same or larger than the ZRAM disks. Then set up a cron job that watches for the system to be idle, ie load level 1.0 is idle for a 4-way SMP system. When that happens, and if the ZRAM is at least 75% full, then swapoff half the ZRAM disks. That will evict some of the least-often-used blocks to the disk swap. Then swapon the ZRAM disks again. Next time swapoff the other half of the ZRAM disks. Eventually the blocks that are never loaded will end up on disk and the ZRAM’s locality will approach the ideal, which will give you performance similar to actually having twice the RAM.
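The decision part of such a cron job could be sketched like this. It only covers the "is zram at least 75% full" test; the idle-load check and the choice of which half of the devices to cycle are left out, and zram_needs_rotation is a made-up helper name.

```shell
# Decide whether the zram swap devices listed in /proc/swaps-style text on
# stdin are full enough to rotate. Argument: threshold in percent.
# Exit status 0 means "rotate", 1 means "leave alone".
zram_needs_rotation() {
    awk -v limit="$1" '
        $1 ~ /^\/dev\/zram/ { size += $3; used += $4 }
        END { exit !(size > 0 && used * 100 >= size * limit) }
    '
}

# Sketch of the cron job body (needs root, device names are examples):
#   if zram_needs_rotation 75 < /proc/swaps; then
#       swapoff /dev/zram0 /dev/zram1 /dev/zram2 /dev/zram3
#       swapon -p 5 /dev/zram0 /dev/zram1 /dev/zram2 /dev/zram3
#   fi
```

Non-zram swap areas in the input are ignored, so the percentage reflects the zram devices only.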

As for LZ4 vs LZO… I’m more excited about seeing Zstd enabled soon, which will give both better throughput and better ratio than LZO.

While feeling scheduler priority settings (time dependency) for ram organisation is just some kind of work around for not knowing what files are used intensively, it seems more interesting having a top like statistics tool, that would show locally file TYPES accessed and their time share of usage?

This knowledge followed by automation should improve swap prefetch (advanced anticipatory paging) and (configurable) page routing to ram or swap?

Since I never saw any benchmark comparing swap on zram vs. physical storage… I did one myself on an SBC platform: https://forum.armbian.com/topic/5565-zram-vs-swap/?do=findComment&comment=54487

NanoPi Fire3, most probably the most powerful, inexpensive and small SBC available today (octa-core A53 at 1.6 GHz for the same price you are charged for a lousy Raspberry Pi).

8 CPU cores, just 1 GB DRAM and cnxsoft’s try to build the ‘Arm Compute Library’ with OpenCL Samples.

Zram finishes the job in 47 minutes while swap on HDD takes 9.5 hours. The ‘average SD card’ shows even crappier random IO performance so in such situations compiling with swap on SD card could take days…

Simple conclusion: Use or at least evaluate zram. If you know what you’re doing and want to improve things explore using zram combined with traditional storage (you need to know that sequential and random IO performance are two different things — otherwise you’re only fooling yourself). Then zcache can be an interesting alternative to zram and the insights William Barath shared already.

This also shows the extra RAM and eMMC flash really matter in that case. ODROID-XU4Q completes the build in about 12 minutes.

12 minutes with both zram and swap on eMMC?

Well, the eMMC modules sold by Hardkernel are really fast especially when it’s about random I/O performance but the ODROID-XU4 also has a SoC consisting of 4 fast A15 cores combined with 4 slow A7 ones while the S5P6818 SoC on my Fire3 only has 8 slow A53 cores. I also realized now that I ran with just 1.4 GHz and not 1.6 GHz as I thought so 12 minutes vs. 47 minutes is also the result of the 4 fast A15 cores running at up to 2 GHz compared to slow A53 cores.

Also according to tinymembench RAM access is slower on the Fire3 and I had to build differently (arch=arm64-v8a vs. arch=armv7a).

If you have the time it would be really interesting to repeat your build test with 2.5 GB zram and mem=1G added to cmdline parameter in boot.ini to get an idea how much zram bottlenecks in such a situation.

That’s the time I remember from when I rebuilt it with zram only.

That’s by doing a clean before making:

But I think the difference on ODROID-XU4 will be marginal, because I managed to build the Arm Compute Library without any zram nor swap later on.

> I think the difference on ODROID-XU4 will be marginal, because I managed to build the Arm Compute Library without any zram nor swap later on.

Right, I almost forgot that running a 64-bit userland requires much much more RAM compared to 32-bit. At the time when I observed 2.3 GB swap being used RAM usage was reported as 950 MB. Given an average compression ratio of 3.5:1 as my few samples showed then these 2.3 GB zram swap would’ve used 650 MB of physical RAM so the compilation needed 2.6 GB in total at that time while your 32-bit build was able to cope with less than 2 GB.
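The estimate in this comment can be reproduced with a few lines of arithmetic. The sketch below uses the figures quoted above (950 MB of RAM in use, 2.3 GB swapped to zram, a 3.5:1 ratio); zram_total_demand_mb is a made-up helper name.

```shell
# Estimate the total memory demand of a workload that swaps to zram.
# Arguments: RAM in use (MB), data swapped to zram (MB), compression ratio.
zram_total_demand_mb() {
    LC_ALL=C awk -v ram="$1" -v swapped="$2" -v ratio="$3" 'BEGIN {
        zram_cost = swapped / ratio           # physical RAM held by zram
        printf "%d\n", ram - zram_cost + swapped
    }'
}

zram_total_demand_mb 950 2300 3.5
```

The 2300 MB of swapped pages occupy about 657 MB of physical RAM once compressed, so only about 293 MB of the 950 MB in use holds uncompressed data. Adding the 2300 MB back gives roughly 2.6 GB.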

I just asked a Vim2 owner to repeat the 64-bit test since the board features 3 GB DRAM and the S912 SoC is also an octa-core A53 design (with one cluster artificially limited to 1 GHz for whatever stupid reasons while the other is allowed to clock up to 1.4GHz — Amlogic still cheating on us!)

http://forum.khadas.com/t/s912-limited-to-1200-mhz-with-multithreaded-loads/

“compcache” by Wheeler && team could be a source for other benchmark done before zram grew into mainline kernel. Zram has been available since more than 10 yrs now. So users are to blame for their steadiness, to some extent, but not only, like single board computers did a huge performance step during last 5 yrs and cpu power is needed for compression and decompression. Thx for Your numbers.

Maybe this will support a more appropriate (configurable) ram organisation, so that least updated pages could be swapped to lower bandwidth storage if necessary.

https://en.wikipedia.org/wiki/Page_replacement_algorithm#Least_recently_used

zram-config-0.5 is broke and totally illogical.

It overwrites any previous config, as I found out with log2ram, but then just goes from bad to worse in its implementation.

For the sys-admin, mem_limit is the control of actual memory usage, whilst disksize is just the estimated uncompressed size and has a memory overhead of 0.1% of disksize, so just don’t be too optimistic about your compression ratio.

But the whole dividing into small partitions and setting drive size to 50% total mem / cores is fubar to say the least.

I started by only wanting to have log2ram with a zram backing and now know far more about zram than I ever wished purely because the more I delved into zram_config the more apparent it was that its totally broke.

I ended up writing my own as you just can not use that if you want to load up a zram drive for logs as I do.

https://github.com/StuartIanNaylor/zram-swap-config seriously suggest that as a better alternative.

Also finally got my log2ram with zram backing https://github.com/StuartIanNaylor/log2ram

But for me it’s WTF zram_config, as I am totally bemused by how little correlation there is to https://www.kernel.org/doc/Documentation/blockdev/zram.txt but not if you shackle it with zram_config.

zram-config is an optional Ubuntu package from 7 years ago that went mostly unmaintained since then. Back in 2012 there was no support for multiple streams with one zram device and as such this simple script creates as many zram devices as CPU cores (see also @mehmet’s remark above). Same goes with all the other ‘logic’ therein. It represents what was possible with zram in 2012.

Things evolve and as such setting up zram has to be adjusted. That’s why I just added to Armbian’s zram setup the optional possibility to specify a backing device since as per the documentation ‘zram can write idle/incompressible page to backing storage rather than keeping it in memory’.

The zram writeback idle cache is something I left out as was mainly aiming at IoT / Pi devices to use zram as an alternative to flash because of block wear whilst gaining its lower latency higher speed advantages.

I had a look at the write back and you can provide a daily limit but in the application in mind writing back out to flash seemed counter intuitive to the reason to employ especially because of swappiness it hardly ever seems to fill.

I have been hitting the ubuntu package pages hard and stating that this package is massively outdated as zram turned up in Linux 3.14 and streams 3.15.

Ubuntu updated from zram-config-01 to zram-config-05 in xenial for all I can tell, and they have yet to change the approach from what fit the initial 3.14 release.

When I installed Linux Mint (19.3 Tricia, 64-bit), I turned off the swap, but I still see it in my second screenshot 🙂 Is it a real swap created on the SSD, or is it zram renamed to “swap”? http://pix.toile-libre.org/upload/original/1592641170.png (“swap” is shown in green in the sysinfo app… :P)

Yes, the ZRAM will show as swap together with the actual swap file if you have one when running “free” command.

merci / thanks

When you did “sudo apt install zram-config” you just installed the script, to make it run you have to do “sudo systemctl start zram-config” and it will start immediately, the script runs every time you reboot your system, that’s why it ran after.

There’s a tool to benchmark several compression algorithms on your system, “lzbench”. Keep in mind that you also want fast compression and decompression even if the compression ratio is not the best.

Didn’t work on Ubuntu 21.10