Sometimes hardware blocks get put to work on tasks they were not initially designed to handle. For example, AI inference used to be mostly offloaded to the GPU before neural network accelerators became common in SoCs.

Qualcomm AI Research has now showcased a software-based neural video decoder that leverages both the CPU and the AI engine in the Snapdragon 888 processor to decode 1280×704 HD video at over 30 fps without any help from the hardware video decoding unit.
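To put that target in perspective, the throughput the decoder must sustain follows directly from the resolution and frame rate. The short Python sketch below only does that arithmetic; the `decode_budget` function name is illustrative and not part of any Qualcomm tool.

```python
def decode_budget(width: int, height: int, fps: float) -> tuple[int, float, float]:
    """Return pixels per frame, time budget per frame in ms, and pixels per second."""
    pixels_per_frame = width * height
    frame_budget_ms = 1000.0 / fps  # each frame must be decoded within this window
    pixels_per_second = pixels_per_frame * fps
    return pixels_per_frame, frame_budget_ms, pixels_per_second


pixels, budget_ms, rate = decode_budget(1280, 704, 30)
print(f"{pixels} pixels/frame, {budget_ms:.1f} ms/frame, {rate / 1e6:.1f} Mpixel/s")
# prints "901120 pixels/frame, 33.3 ms/frame, 27.0 Mpixel/s"
```

In other words, the CPU and AI engine together have roughly 33 ms to reconstruct about 0.9 million pixels for every frame.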

The neural video decoder is still a work in progress: it currently supports only intra-frame decoding, and inter-frame decoding is being worked on. This means each frame is decoded independently, without exploiting the small changes between consecutive frames as mainstream video codecs such as H.264 and H.265 do.
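The difference between the two modes can be illustrated with a toy model in which a frame is just a list of pixel values. This is a deliberately simplified sketch, not how Qualcomm's decoder or any real codec is implemented: intra-only decoding reconstructs every frame from its own data, while inter decoding rebuilds a frame by adding a residual to the previously decoded frame (real codecs also apply motion compensation, which is omitted here). The function names are hypothetical.

```python
from typing import List

Frame = List[int]


def decode_intra(coded_frames: List[Frame]) -> List[Frame]:
    """Intra-only: each frame is carried in full and decoded independently."""
    return [list(frame) for frame in coded_frames]


def decode_inter(key_frame: Frame, residuals: List[Frame]) -> List[Frame]:
    """Inter: after the first (key) frame, each frame = previous frame + residual."""
    decoded = [list(key_frame)]
    for residual in residuals:
        previous = decoded[-1]
        decoded.append([p + r for p, r in zip(previous, residual)])
    return decoded


# Three 4-pixel frames in which only one pixel changes from frame to frame.
frames = [[10, 10, 10, 10], [10, 12, 10, 10], [10, 12, 10, 11]]

intra = decode_intra(frames)
inter = decode_inter(frames[0], [[0, 2, 0, 0], [0, 0, 0, 1]])
print(intra == inter)  # prints "True"
```

Both paths yield the same frames, but the inter path only has to transmit residuals that are mostly zeros, which is why inter-frame coding needs far fewer bits for typical video.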

The CPU handles parallel entropy decoding, while the decoder network is accelerated on the 6th-generation Qualcomm AI Engine found in the Snapdragon 888 mobile platform. Part of this relies on the open-source AI Model Efficiency Toolkit (AIMET) library, which can be found on GitHub.
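The division of labor described above, with entropy decoding spread across CPU cores and the network running on a separate accelerator, can be sketched as a two-stage pipeline. In this Python sketch, `entropy_decode_tile` and `run_decoder_network` are hypothetical stand-ins (a trivial byte-to-integer conversion and a concatenation), the bitstream is assumed to be split into independently decodable tiles, and a thread pool stands in for the CPU cores. It shows only the structure, not Qualcomm's implementation or any AIMET API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def entropy_decode_tile(tile: bytes) -> List[int]:
    """Hypothetical stand-in for entropy decoding one independently coded tile."""
    return list(tile)


def run_decoder_network(latents: List[List[int]]) -> List[int]:
    """Hypothetical stand-in for the decoder network (would run on the AI engine)."""
    return [value for tile in latents for value in tile]


def decode_frame(tiles: List[bytes], workers: int = 4) -> List[int]:
    # Stage 1 (CPU): tiles are assumed independent, so they are decoded in parallel.
    # pool.map returns results in input order, so tile order is preserved.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latents = list(pool.map(entropy_decode_tile, tiles))
    # Stage 2 (accelerator): the gathered latents go through the decoder network.
    return run_decoder_network(latents)


frame = decode_frame([b"\x01\x02", b"\x03", b"\x04\x05"])
print(frame)  # prints [1, 2, 3, 4, 5]
```

In a real decoder the entropy stage would be native code running on several cores; in CPython, a thread pool over pure-Python functions only illustrates the structure, not an actual speedup.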

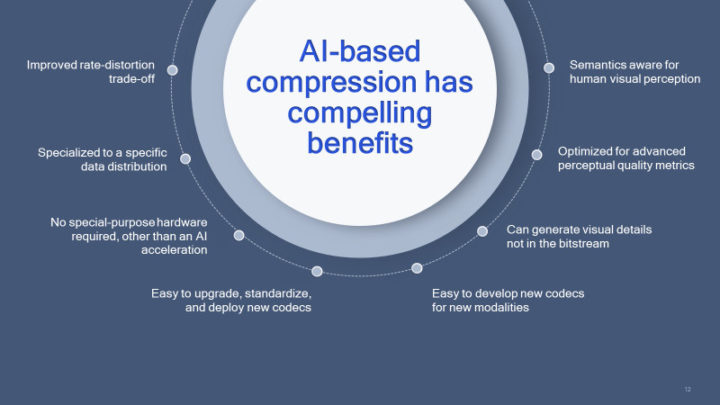

Decoding 1280×704 video in software is not really an impressive feat on a Snapdragon 888, as the CPU could certainly handle H.264 or H.265 at that resolution and 30 fps without issue. But we have to think about the potential future benefits of neural video decoding, which include:

- Direct optimization of bitrate and perceptual quality metrics

- Simplified codec development

- Intrinsic massive parallelism

- Efficient execution and ability to update on deployed hardware

- Downloadable codec updates
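The first benefit refers to how learned codecs are typically trained: the networks are optimized end to end on a loss that trades off rate (bits) against distortion, L = R + λ·D. The sketch below simply evaluates that objective. Measuring rate in bits per pixel and distortion as mean squared error are assumptions for illustration (a perceptual metric could replace MSE), and `rate_distortion_loss` is a hypothetical name.

```python
from typing import Sequence


def rate_distortion_loss(
    num_bits: float,
    original: Sequence[float],
    reconstructed: Sequence[float],
    lam: float,
) -> float:
    """Return R + lam * D with R in bits per pixel and D as mean squared error."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    rate_bpp = num_bits / len(original)
    mse = sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)
    return rate_bpp + lam * mse


# A 4-pixel "image" coded with 8 bits: R = 2 bpp, D = (0 + 1 + 0 + 1) / 4 = 0.5.
loss = rate_distortion_loss(8, [10, 20, 30, 40], [10, 21, 30, 39], lam=0.1)
print(loss)
```

A larger `lam` penalizes distortion more heavily, so a model trained with it spends more bits to achieve higher quality; a smaller `lam` favors lower bitrates.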

More information can be found in the press release.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


Cool stuff, but just how hot does the phone get, and how quickly does the battery drain compared to a codec natively supported by the hardware?

And the software may be open source, but how easily can it be ported to a competitor’s AI engine? E.g., are Google likely to adopt this for Android on Whitechapel…

Given enough training material it can also make up new wildlife videos: “This wildlife documentary does not exist”.

In all fairness, it is an interesting technology, but I think encoding will take a lot of time. It may require training a neural network per scene and transmitting that to the mobile phone.

Though this may reduce the total required data rate, it may actually increase the power required for encoding and (maybe) decoding. So what you save in data, you may lose in power consumption.

I don’t see the advantages materializing anytime soon, when these companies can drop in a 4K 120 Hz AV1 hardware decoder in their next SoC.

After multiple generations of improvements to the NPUs, maybe. But I wouldn’t bet on it happening before AV2 is released.