We’ve previously seen that it’s possible to connect an eGPU to a mini PC through a PCIe x16 to M.2 NVMe adapter or a Thunderbolt 3 port. That works fine on a desk for gaming or AI application development, but since the eGPU is larger than most mini PCs, it’s a little too big to integrate into products and potentially inconvenient to carry around.

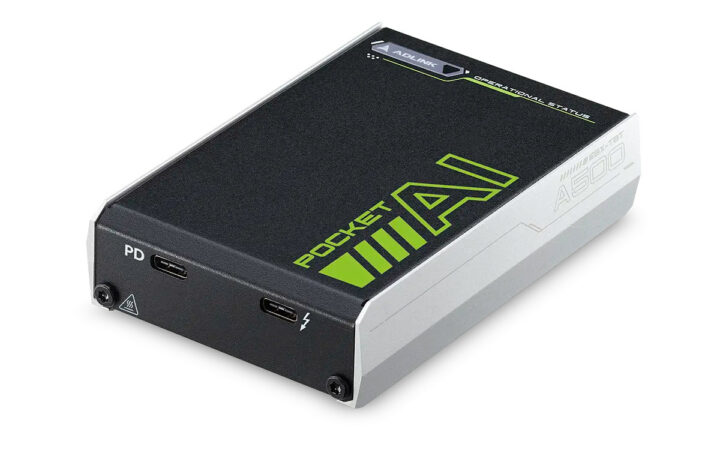

The ADLINK Pocket AI portable eGPU changes that with an NVIDIA RTX A500 GPU housed in a 106 x 72 x 25mm box, about the size of a typical power bank, that connects to a host through a Thunderbolt 3 connector. The company says the upcoming eGPU is mostly designed for AI developers, professional graphics users, and embedded industrial applications, but it can also be used for gaming.

Pocket AI specifications:

- GPU – NVIDIA RTX A500

- Architecture – NVIDIA Ampere GA107

- Clocks – 435 MHz base, 1335 MHz boost

- 2,048x CUDA Cores

- 64x NVIDIA Tensor Cores

- 16x NVIDIA RT Cores

- Performance

  - 100 TOPS (INT8) inference

  - 6.54 TFLOPS single-precision floating point

- 1x NVENC, 2x NVDEC

- Memory – 4 GB GDDR6 @ 6001 MHz, 112 GB/s

- TGP – 25 W

- Host Interface – Thunderbolt 3.0 (PCI Express 3.0 x4)

- Power Supply – USB Power Delivery 3.0+ via Type-C connector, 15V at 40W or more

- Dimensions – 106 x 72 x 25mm

- Weight – 250 grams

- Temperature Range – 0 to 40°C
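To put the host interface figure in perspective, here is a rough back-of-the-envelope sketch comparing an ideal PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding) against the 112 GB/s memory bandwidth listed above. It assumes the Thunderbolt 3 tunnel delivers the full theoretical PCIe 3.0 x4 rate, which is optimistic, and the constant and function names are illustrative only.

```python
# Back-of-the-envelope comparison of the eGPU's host link and its on-board memory.
# Assumption: the Thunderbolt 3 tunnel is treated as an ideal PCIe 3.0 x4 link;
# real-world Thunderbolt throughput is typically lower.

PCIE3_GT_PER_S = 8.0          # PCIe 3.0 signalling rate per lane (GT/s)
PCIE3_ENCODING = 128 / 130    # 128b/130b line-code efficiency
LANES = 4                     # Host interface from the spec sheet: PCIe 3.0 x4
VRAM_GB_PER_S = 112.0         # GDDR6 bandwidth from the spec sheet


def pcie3_bandwidth_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes / 8  # bits -> bytes


link = pcie3_bandwidth_gb_per_s(LANES)
ratio = VRAM_GB_PER_S / link
print(f"Host link: ~{link:.2f} GB/s, VRAM: {VRAM_GB_PER_S:.0f} GB/s, ratio ~{ratio:.0f}x")
```

That works out to roughly 3.9 GB/s for the link, about 28 times less than the on-board GDDR6, which suggests workloads run best when the model and its data fit within the 4 GB of VRAM rather than being shuffled back and forth over Thunderbolt.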

The portable eGPU can be connected to a laptop or mini PC with a Thunderbolt 3.0 port running Windows 10, Windows 11, or Linux, although hot plugging is not supported on Linux. You’ll also need a 40W+ USB-PD power adapter to power the device.
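Since hot plugging is not supported on Linux, the eGPU would presumably need to be attached before booting, after which one way to confirm that the NVIDIA driver sees it is to query `nvidia-smi`. The Python sketch below is a hypothetical helper, not an ADLINK tool: it assumes the NVIDIA driver and the `nvidia-smi` CLI are installed, and uses its `--query-gpu` and `--format=csv,noheader,nounits` options to list each GPU's name and total memory in MiB.

```python
import subprocess

# Hypothetical helper (not part of any ADLINK software): lists GPUs visible to the
# NVIDIA driver. Assumes the driver and the nvidia-smi CLI are installed.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def list_nvidia_gpus(smi_output=None):
    """Return a list of (name, total_memory_mib) tuples.

    If smi_output is None, nvidia-smi is run; otherwise the given text is parsed,
    which makes the parser easy to test on a machine without a GPU.
    """
    if smi_output is None:
        smi_output = subprocess.run(QUERY, capture_output=True, text=True,
                                    check=True).stdout
    gpus = []
    for line in smi_output.strip().splitlines():
        # Split on the last comma so a comma inside the GPU name cannot break parsing.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem)))
    return gpus
```

On a host with the eGPU attached, `list_nvidia_gpus()` should include an entry with roughly 4 GB of memory; an exception from `subprocess.run` would suggest that `nvidia-smi` is missing or cannot see the card.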

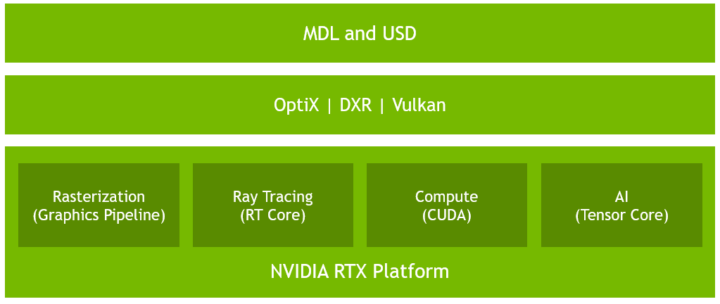

ADLINK says both the NVIDIA CUDA-X GPU-accelerated libraries for AI and HPC and the NVIDIA RTX platform are supported, the latter offering support for ray tracing (OptiX, Microsoft DXR, Vulkan), AI-accelerated features (NGX), rasterization (Advanced Shaders), simulation (CUDA 10, PhysX, Flex), and asset interchange formats (USD, MDL). I can also see they are running a YOLOv4 demo from the NVIDIA TAO Toolkit.

NVIDIA Jetson platforms are still better suited for designing embedded AI systems from the ground up, especially when low power consumption is required, but the small Pocket AI RTX eGPU looks great for developers, or for adding higher-end AI capabilities to existing x86 systems with a Thunderbolt 3 port. Embedded industrial applications may benefit from 3D rendering, AI-enhanced image processing, and sensor fusion when the eGPU is paired with a mobile workstation.

ADLINK has not disclosed pricing yet but says the Pocket AI will provide a route to GPU acceleration at a fraction of the cost of a laptop with equivalent GPU power. Pre-orders will start this month, and shipping is scheduled to begin in June 2023. Further details can be found on the product page.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


Needs more VRAM for AI

Stable Diffusion and some other things can work with as little as 4 GB. But yeah, that should go up if they take another swing at it. The A500 is a professional laptop GPU with a TGP range of 20-60 W. RTX A2000 (Mobile) is the next step up with 8 GB. Maybe ADLINK got a good deal on unused A500 GPUs which are a year old and fairly weak.

Some models you can squeeze into 4GB and run, but for training it's pretty meh.

Even as an ‘RTX 3050’, it’s considerably short of the desktop 3060, which also has 8GB, and the mobile version’s performance is much lower.

I don’t really get it: with a squeeze it can run some pretty hefty models, but it’s aimed at being a dev device, and for training the opposite is true with 4GB?

It depends on the models, and more VRAM will also make it work much quicker, as it can load more.

Agreed, 64 GB VRAM looks like the perfect config.

? 4GB is about right, as it’s just not that great a GPU. It’s something close to a GTX 1650, which also has about 4GB, and there’s not much point in more on that level of card.

Really need at least 16GB VRAM, or better yet 24GB VRAM, for picture and video AI inference.

Honestly, an option like this one but for gaming would be great.

Lots of light laptops have a USB4/TB3 port, and having an RTX 3050-like eGPU for some casual gaming at home would be great.

All eGPUs are huge. This one would be great even for traveling.