Firefly AIBOX-1684X is a compact AI Box based on the SOPHON BM1684X octa-core Arm Cortex-A53 processor with a 32 TOPS AI accelerator. It is suitable for large language models (LLMs) such as Llama 2, Stable Diffusion image generation, and traditional CNN and RNN neural network architectures.

Firefly had already released several designs based on the SOPHON BM1684X AI processor, including the full-featured Firefly EC-A1684XJD4 FD Edge AI computer and the AIO-1684XQ motherboard. The AIBOX-1684X AI Box offers the same level of performance, just with fewer interfaces, in a compact enclosure measuring only 90.6 x 84.4 x 48.5 mm.

AIBOX-1684X AI box specifications:

- SoC – SOPHGO SOPHON BM1684X

- CPU – Octa-core Arm Cortex-A53 processor @ up to 2.3 GHz

- TPU – Up to 32TOPS (INT8), 16 TFLOPS (FP16/BF16), 2 TFLOPS (FP32)

- VPU

- Up to 32-channel H.265/H.264 1080p25 video decoding

- Up to 32-channel 1080p25 HD video processing (decoding + AI analysis)

- Up to 12-channel H.265/H.264 1080p25fps video encoding

- System Memory – 8GB, 12GB, or 16GB LPDDR4/LPDDR4X

- Storage

- 32GB, 64GB, or 128GB eMMC flash

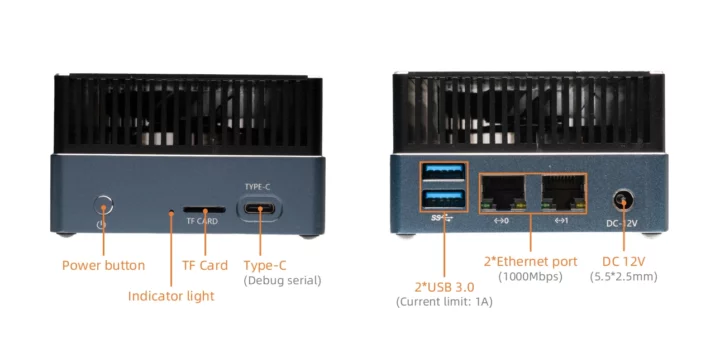

- MicroSD card slot

- Networking – 2x Gigabit Ethernet RJ45 ports

- USB – 2x USB 3.0 ports, 1x USB-C serial debug port

- Misc – Power button, LED

- Power Supply – 12V/4A via 5.5/2.5mm power barrel jack

- Dimensions – 90.6 x 84.4 x 48.5 mm

- Weight – 420 grams

- Temperature Range – Operating: -20°C to 60°C; storage: -20°C to 70°C

- Humidity – 10%90% (non-condensing)

Firefly has yet to provide documentation for the new AIBOX-1684X AI box, but the wiki for one of the earlier BM1684X systems shows it runs Ubuntu 20.04 with a Linux 5.4 LTS kernel (image released in July 2023), and Docker also appears to be preinstalled in the image.
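Anyone with one of the earlier BM1684X systems (or, later, an AIBOX-1684X) at hand could check whether its image matches what the wiki describes with a minimal Python sketch like the one below. It is not Firefly tooling: it simply parses the standard `/etc/os-release` file, reads the running kernel version with `platform.release()`, and checks whether a `docker` executable is on the PATH. The helper names `parse_os_release` and `describe_system` are hypothetical, and the code avoids newer standard-library helpers so it should also run on the Python 3.8 that Ubuntu 20.04 ships.

```python
import platform
import shutil
from pathlib import Path


def parse_os_release(text: str) -> dict:
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Values are often quoted, e.g. PRETTY_NAME="Ubuntu 20.04 LTS".
        info[key] = value.strip().strip("\"'")
    return info


def describe_system(os_release_path: str = "/etc/os-release") -> dict:
    """Report the OS release, kernel version, and Docker availability."""
    path = Path(os_release_path)
    release = parse_os_release(path.read_text()) if path.exists() else {}
    return {
        "os": release.get("PRETTY_NAME", "unknown"),
        # The wiki describes a Linux 5.4 LTS kernel for the BM1684X image.
        "kernel": platform.release(),
        # True if a docker binary is found on the PATH.
        "docker": shutil.which("docker") is not None,
    }


if __name__ == "__main__":
    for key, value in describe_system().items():
        print(f"{key}: {value}")
```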

The company also explains the AIBOX-1684X is suitable for the local deployment of “ultra-large-scale parameter models under the Transformer architecture”, including large language models (LLMs) such as Llama 2, ChatGLM, and Qwen, as well as large vision models like ViT, Grounding DINO, and SAM. The Stable Diffusion v1.5 image generation model is also supported, and so are traditional network architectures such as CNN, RNN, and LSTM (long short-term memory). Users can rely on popular frameworks such as TensorFlow, PyTorch, MXNet, PaddlePaddle, ONNX, and Darknet for pre-trained or custom models. More details about the software can be found in the SOPHON SDK section of the wiki.

Firefly is not the only company to launch an AI box based on a SOPHON processor: the Radxa Fogwise Airbox has just been unveiled with a SOPHON SG2300X processor that looks almost identical to the BM1684X, except it delivers (only) 24 TOPS. It seems odd to have two similar processors doing more or less the same thing, so I asked, and I was told the SG2300X supports “open-source” generative AI, while the BM1684X does not. I’ll be able to find out more and test it myself soon since I expect to receive a sample of the Fogwise Airbox by the end of the month or in early May.

In the meantime, you can try the just-released Llama 3 8B model on the Radxa Fogwise Airbox, as I did when asking “What do you know about CNX Software?”. Some details were incorrect, but the text was generated relatively fast.

The Firefly AIBOX-1684X is available now, but only in China (Taobao), for 2,499 CNY or about $344 US. However, Firefly told CNX Software they’d also sell it on their online shop “very soon”, so anybody will be able to get it. [Update April 28, 2024: The AIBOX-1684X with 16GB RAM and 64GB flash is now sold for $369] For reference, the Radxa Fogwise Airbox, which I’ll cover in more detail once I get a sample, can be pre-ordered for $321 and up on Arace Tech. Additional information about the Firefly AIBOX-1684X can be found on the product page.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


For running LLMs, the AI accelerator there is mostly pointless, since token generation is essentially limited by memory bandwidth. I’m seeing that Radxa’s device has a 128-bit memory bus, which is wider than what is commonly found on Arm devices, and that’s what really helps here. The real benefit of the accelerator is to limit power draw. Consider that I’m running Llama 3 quantized to Q5 in pure software at 12 tok/s using only 16 cores of an Ampere Altra at 2.6 GHz. Optimal performance is reached at 32 cores with 18 tok/s, and beyond 40 cores it decreases again. The machine has 6 memory channels populated with 2933 MT/s memory, hence just twice the total bandwidth of Radxa’s machine. This shows that, without more memory bandwidth, computation is not the limiting factor at all. I think the smallest dual-channel DDR5 PC with higher-frequency RAM would cost less and deliver a bit more. For example, I remember reaching Altra-level performance with an AMD 7700X and DDR5-4800 memory.
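The bandwidth argument in this comment can be checked with some back-of-the-envelope arithmetic. The Python sketch below computes theoretical peak DRAM bandwidth as channels × bus width in bytes × transfer rate, then divides it by the model size to get a ceiling on tokens per second, assuming every weight is read from memory once per generated token. The ~5.7 GB size for a Q5-quantized Llama 3 8B is an assumption (it varies with the exact quantization scheme), and Radxa’s bandwidth is simply taken as half the Altra’s figure, as the comment states, rather than from a datasheet.

```python
def peak_bandwidth_gbs(channels: int, bus_bits_per_channel: int, mts: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = channels * bus_bits_per_channel / 8
    return bytes_per_transfer * mts * 1e6 / 1e9


def max_tokens_per_second(bandwidth_gbs: float, model_gb: float) -> float:
    """Upper bound on decode speed if all weights are read once per token."""
    return bandwidth_gbs / model_gb


# Ampere Altra as described in the comment: 6 channels x 64-bit at 2933 MT/s.
altra = peak_bandwidth_gbs(channels=6, bus_bits_per_channel=64, mts=2933)

# The comment puts Radxa's machine at about half the Altra's bandwidth.
radxa_estimate = altra / 2

# ASSUMPTION: a Q5-quantized Llama 3 8B model weighs roughly 5.7 GB.
MODEL_GB = 5.7

for name, bw in [("Altra", altra), ("Radxa (est.)", radxa_estimate)]:
    ceiling = max_tokens_per_second(bw, MODEL_GB)
    print(f"{name}: {bw:.1f} GB/s -> at most {ceiling:.1f} tok/s")
```

Under these assumptions the script reports about 140.8 GB/s and a ceiling of roughly 24.7 tok/s for the Altra, and about 12.3 tok/s for the Radxa estimate. The 18 tok/s measured on the Altra sits below its ceiling, which is consistent with decoding being memory-bound rather than compute-bound.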