We’ve already seen Neural Processing Units (NPUs) added to Arm-based processors such as Huawei Kirin 970 or Rockchip RK3399Pro in order to handle the tasks required by machine learning & artificial intelligence in a faster or more power-efficient way.

Arm has now announced Project Trillium, which offers two A.I. processors, one ML (Machine Learning) processor and one OD (Object Detection) processor, along with open source Arm NN (Neural Network) software that leverages the ML processor as well as Arm CPUs and GPUs.

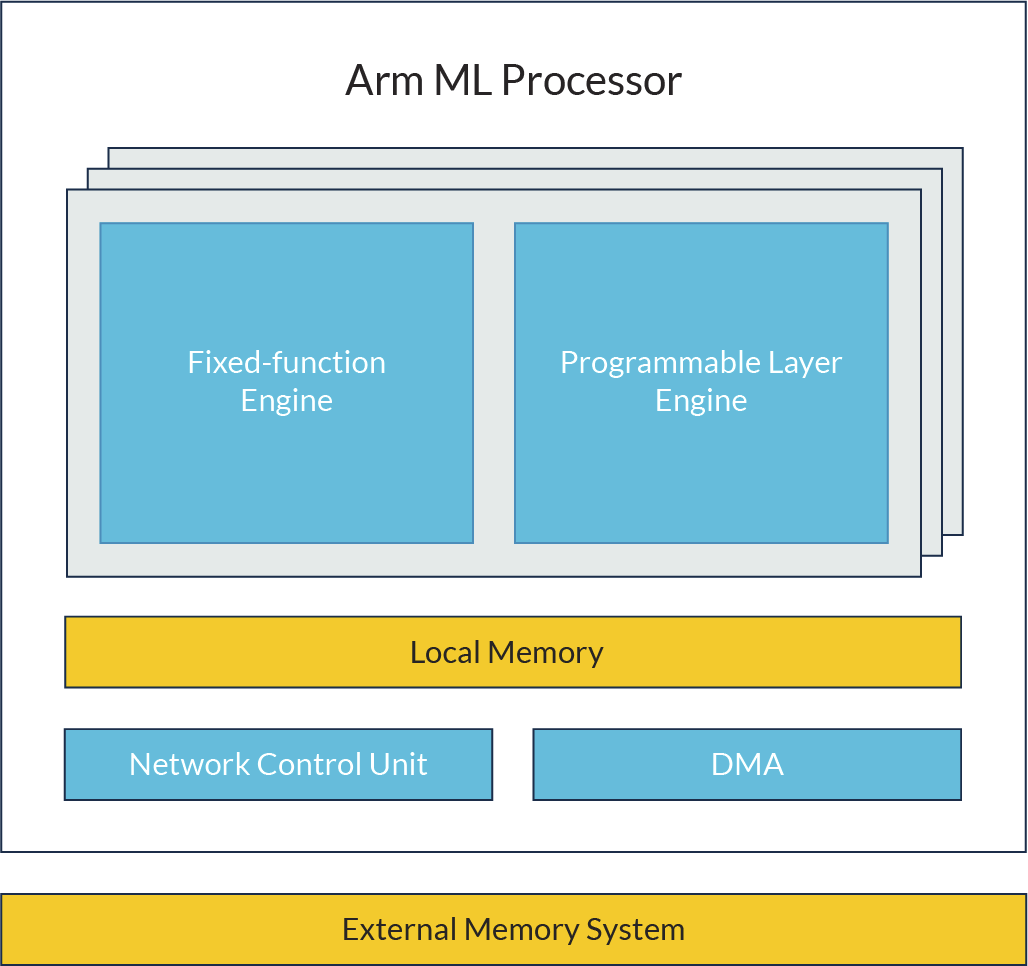

Arm ML processor key features and performance:

- Fixed function engine for the best performance & efficiency for current solutions

- Programmable layer engine for futureproofing the design

- Tuned for advanced geometry implementations

- On-board memory to reduce external memory traffic

- Performance / Efficiency – 4.6 TOPS with an efficiency of 3 TOPS/W for mobile devices and smart IP cameras

- Scalable design that covers lower-requirement IoT (20 GOPS) and mobile (2 to 5 TOPS) applications, up to more demanding server and networking loads (up to 150 TOPS)
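To put the performance and efficiency figures in perspective, here is a rough back-of-the-envelope calculation. It assumes the 4.6 TOPS and 3 TOPS/W numbers refer to the same operating point, which Arm's announcement does not confirm, so treat the result as an estimate only. The function name is my own.

```python
# Rough estimate only: assumes peak throughput and efficiency
# are quoted for the same operating point (not confirmed by Arm).

def power_watts(throughput_tops: float, efficiency_tops_per_watt: float) -> float:
    """Implied power draw in watts for a given throughput and efficiency."""
    return throughput_tops / efficiency_tops_per_watt

# Mobile / smart IP camera figures quoted above
ml_power = power_watts(4.6, 3.0)

# Span of the scalable design: 20 GOPS (IoT) up to 150 TOPS (server)
iot_ops = 20e9
server_ops = 150e12
scaling_ratio = server_ops / iot_ops

print(f"Implied power at 4.6 TOPS: {ml_power:.2f} W")
print(f"Server-to-IoT throughput ratio: {scaling_ratio:.0f}x")
```

Under that assumption the mobile configuration would draw roughly 1.5 W, and the top-end configuration delivers 7,500 times the throughput of the smallest IoT one.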

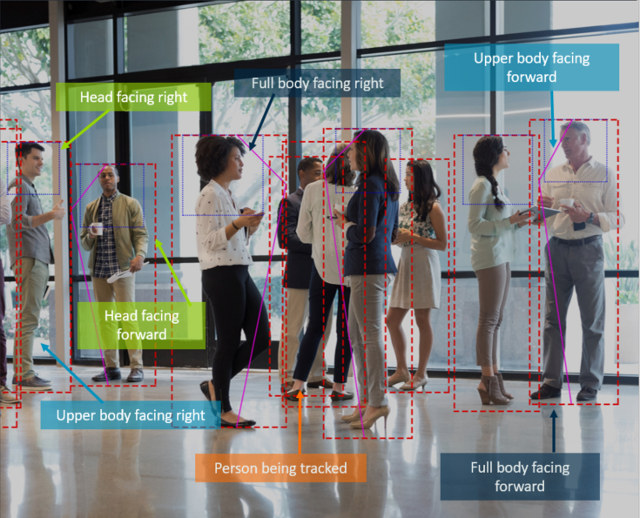

Arm OD processor main features:

- Detects objects in real time at Full HD (1920×1080) resolution and 60 fps (no dropped frames)

- Object sizes – 50×60 pixels to full screen

- Virtually unlimited objects per frame

- Image Input – Raw input from camera or from ISP

- Latency – 4 frames

- Can be combined with CPUs, GPUs or the Arm ML processor for additional local processing, reducing overall compute requirement
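The OD processor numbers can be converted into more familiar units with a little arithmetic. This is only a sketch: it assumes the 4-frame latency is measured at the 60 fps frame rate quoted above, and the variable names are mine.

```python
# Figures taken from the feature list above.
WIDTH, HEIGHT, FPS = 1920, 1080, 60
LATENCY_FRAMES = 4
MIN_OBJECT_W, MIN_OBJECT_H = 50, 60

# Time between two consecutive frames, in milliseconds
frame_period_ms = 1000 / FPS

# Assumption: latency is counted in frames at the 60 fps input rate
latency_ms = LATENCY_FRAMES * frame_period_ms

# Pixels the processor has to ingest every second
pixels_per_second = WIDTH * HEIGHT * FPS

# Share of a Full HD frame covered by the smallest detectable object
min_object_fraction = (MIN_OBJECT_W * MIN_OBJECT_H) / (WIDTH * HEIGHT)

print(f"Frame period: {frame_period_ms:.2f} ms")
print(f"Latency: {latency_ms:.1f} ms")
print(f"Pixel rate: {pixels_per_second / 1e6:.1f} Mpixels/s")
print(f"Smallest object: {min_object_fraction:.3%} of the frame")
```

So a 4-frame latency works out to about 67 ms at 60 fps, and the smallest 50×60 object covers well under 1% of a Full HD frame.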

The company provides drivers, a detailed people model with rich metadata allowing the detection of direction, trajectory, pose and gesture, an object tracking library, and sample applications. I could not find any of those online, and it appears you have to contact the company for details if you plan to integrate this into your chip.

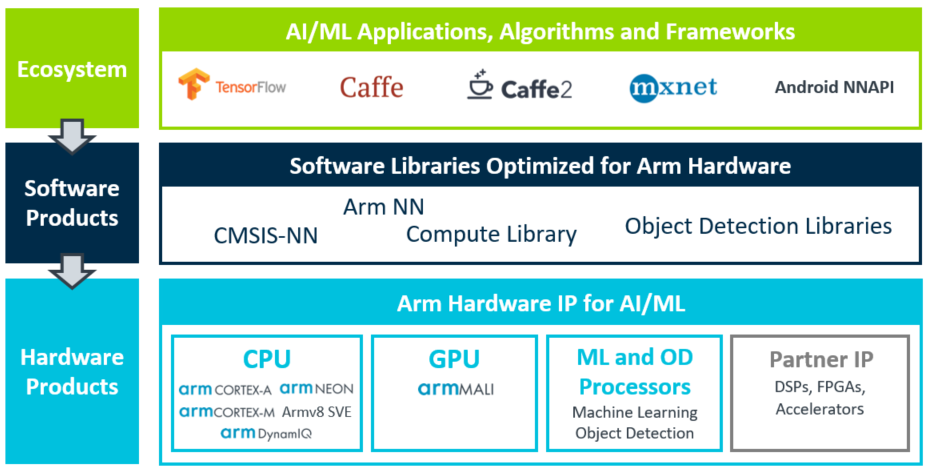

Arm will be more open about their Arm NN software development kit, which bridges existing neural network frameworks like TensorFlow, Caffe(2), or MXNet to the underlying processing hardware, including Arm Cortex CPUs, Arm Mali GPUs, or the new ML processor. Arm NN will focus on inference, which happens on the target device (aka at the edge), rather than training, which happens on powerful servers.

Arm NN utilizes the Compute Library to target Cortex-A CPUs, Mali GPUs, and the Arm ML processor, and CMSIS-NN for Cortex-M CPUs. The first release will support Caffe, with downloads, resources, and documentation becoming available in March 2018. TensorFlow will come next, and other neural network frameworks will subsequently be added to the SDK. There also seems to be an Arm NN release for Android 8.1 (NNAPI) that currently works with CPU and GPU.
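The split between the two libraries can be summarized as a simple lookup. To be clear, this is a purely illustrative sketch of the mapping described above: the table and the `library_for` function are hypothetical and are not part of the Arm NN SDK or its API.

```python
# Hypothetical lookup table mirroring the paragraph above.
# None of these names come from the Arm NN SDK itself.
BACKEND_LIBRARY = {
    "Cortex-A": "Compute Library",
    "Mali": "Compute Library",
    "Arm ML processor": "Compute Library",
    "Cortex-M": "CMSIS-NN",
}

def library_for(target: str) -> str:
    """Return the library Arm NN is described as using for a target."""
    try:
        return BACKEND_LIBRARY[target]
    except KeyError:
        raise ValueError(f"unknown target: {target!r}") from None

for target in BACKEND_LIBRARY:
    print(f"{target} -> {library_for(target)}")
```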

Visit Arm’s machine learning page for more details about Project Trillium, the ML & OD processors, and the Arm NN SDK. You may also be interested in a few related blog posts on the Arm community.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
