We’ve previously covered networked hard drive enclosures with Ethernet and/or WiFi running OpenWrt or Ubuntu that allow you to easily and fairly cheaply connect SATA drives to your local network, with models such as the Blueendless X3.

NODE has done something similar with a DIY project featuring a Raspberry Pi 3 Model B+. NODE Mini Server V2 connects the popular SBC to a 2.5″ SATA hard drive over USB and is designed to build out the physical infrastructure for the decentralized web (e.g. IPFS) that would allow users to replace remote servers with nodes that they themselves own and operate. That said, nothing would prevent you from using it as a simple NAS, although performance will not be as good as with the aforementioned products due to the lack of SATA or USB 3.0 interfaces, and to the “Gigabit” Ethernet being limited to 300 Mbps. The design could also easily be adapted to one of the Raspberry Pi alternatives with the same form factor but with “full” Gigabit Ethernet and USB 3.0 ports, such as the Rock64 or Rock Pi 4 SBCs.

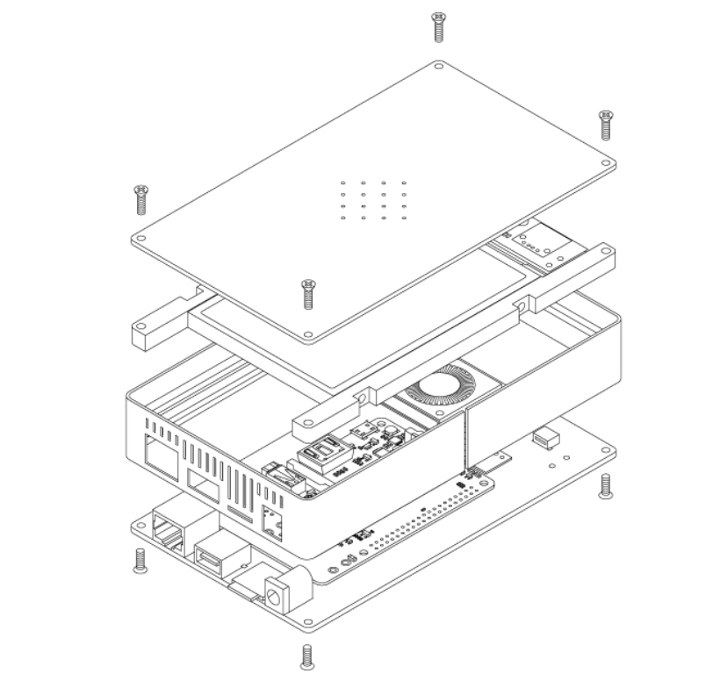

Main parts used in Node Mini Server V2:

- 3D printed case and HDD/SSD frame

- Raspberry Pi 3B+ board stripped of all USB ports, the Ethernet port, and the GPIO headers

- Four custom PCBs

    - Bottom PCB with 1x USB port, 1x Ethernet port, microSD slot, and DC power jack

    - Top “PCB” acting as a heatsink (optionally aluminum based)

    - Micro SD Card adapter port

    - USB to SATA adapter PCB

- 2.5″ HDD or SSD with 7mm thickness

- Various screws and nuts, as well as self-adhesive rubber feet

- Various FPC cables

- 1x S8050 Transistor

- 30x10x10mm 5V Blower Fan

Putting everything together requires a fair amount of work, as you need to desolder connectors from the Raspberry Pi, solder the boards together, and carry out the other assembly tasks. Currently, you’d also have to manufacture your own PCBs and print the 3D parts yourself using the PCB Gerber and STL files available here.

NODE may open a limited preorder for NODE Mini Server V2 depending on the response from the community, but he’s also open to others making them, so you may not have to build it all from scratch. You’ll find the build instructions in the video below at the 4:50 mark, or if you prefer to read a transcript, you’ll find it on NODE’s website.

Via Liliputing and MiniMachines

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.

Just why? Buy an ODROID HC1 or even better HC2 and you’re done. Less expensive, way more powerful, zero time wasted for questionable hardware modifications (an annoying fan? Seriously?!).

And if you care more about reliability than performance, then get an Olimex A20-Lime2, where you can attach an SSD or 2.5″ HDD using native SATA (without any USB storage hassles) and add a LiPo battery to get full UPS functionality, which also lets you run the disk on battery.

> having “Gigabit” Ethernet limited to 300 Mbps

With every use case that also requires storage access at the same time, it’s even less on the RPi 3 B+, since both storage and the network interface hang off the same single USB2 connection, which is the real bottleneck here and is responsible for throughput dropping below 20MB/s.

How is the new A20 SATA driver keeping up? Are you still stress testing it? If yes, I think I’d prefer a Lime2 over my currently planned toy NAS with my old Atom 330.

One more question: would you suggest btrfs for such a task? It’s confusing, as everyone has an opinion or two about it…

Not testing any longer since it makes no sense with my limited capabilities (more volunteers would’ve been needed but no idea whether anyone else tested and how long).

And of course I would use btrfs for data shares. At least on ARM, with x86 we mostly use ZFS instead. And it’s not that much about ‘opinions’ but about a lot of FUD and BS spread, people loving to shoot the messenger (btrfs/ZFS being the bad guys when reporting silent bit rot but ext4 being fine since… no data corruption reported) and people not able to weigh features. A CoW filesystem will always perform rather poorly in some kitchen-sink storage benchmarks but those people whining about ‘low btrfs performance’ usually do not think a single second about use cases and whether they’re affected by kitchen-sink benchmarks or not.

Some more ranting: https://github.com/openmediavault/openmediavault/issues/101#issuecomment-466787160

I’m not at all worried about benchmarks, more about data loss. And so far I thought bitrot belonged to the kingdom of urban myths… I was probably wrong to trust average IT…

Bitrot is a real issue, citing an old study: ‘During the 41-month period covered by our data we observe a total of about 400,000 checksum mismatches. Of the total sample of 1.53 million disks, 3855 disks developed checksum mismatches – 3088 of the 358,000 nearline disks (0.86%) and 767 of the 1.17 million enterprise class disks (0.065%)’

0.86% is not that much but we (and the storage stack in our computers) naively expect 0%. Also please keep in mind that the study was published over 11 years ago, and back then we measured disk sizes in GB and not in TB as today.
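As a quick check of the percentages in the quote, the sketch below recomputes them from the disk counts given there. Note that they are shares of disks that developed at least one checksum mismatch, not shares of flipped bits. The variable names are illustrative only, and because the quoted totals are rounded, the results may differ from the study's figures in the last digit.

```python
# Disk counts as quoted from the study in the comment above.
nearline_total = 358_000
nearline_bad = 3088
enterprise_total = 1_170_000
enterprise_bad = 767


def percent(bad: int, total: int) -> float:
    """Share of disks with at least one checksum mismatch, in percent."""
    return 100.0 * bad / total


nearline_pct = percent(nearline_bad, nearline_total)
enterprise_pct = percent(enterprise_bad, enterprise_total)
# Overall rate across both disk classes, using the rounded totals.
overall_pct = percent(nearline_bad + enterprise_bad,
                      nearline_total + enterprise_total)

print(f"nearline:   {nearline_pct:.2f}%")
print(f"enterprise: {enterprise_pct:.3f}%")
print(f"overall:    {overall_pct:.2f}%")
```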

Aside from fighting bit rot, modern storage approaches like ZFS and btrfs provide other benefits too:

* flexible dataset/subvolume handling overcoming partition limitations

* transparent filesystem compression

* snapshots (freezing a filesystem in a consistent state)

* ability to efficiently send snapshots to another disk/host (backup functionality)

* immune to power failures due to CoW (Copy on Write)

With the last feature in mind, it’s important that the drive in question supports correct write barrier semantics otherwise a power loss can result in data loss. See my link in the comment above. This broken write barrier behavior is an issue especially with SBC and random USB enclosures so using toy grade hardware like an RPi is a really bad idea if you love your data.

Hmmm how can I find out if the write barrier will work before a purchase? Would a lime2 work? Any average pc hw? UAS USB enclosures? Some HD? Intel or WD SSDs?

Also is btrfs/zfs in a single drive setup only reporting bitrot or can it also correct it?

I had the impression with dm-integrity you would have to use two volumes to scrub bitrot away.

With Allwinner SATA and today’s drives there shouldn’t be any issues (this was different back then when ZFS was new and people used commodity hardware to build up RAIDz arrays and lost whole pools due to broken write barrier behavior caused by weird behaving disks as well as weird IDE and SATA controllers). We’ve a bunch of such A20 Lime2 servers in production and never encountered any issues with btrfs (starting as early as kernel 4.7 IIRC).

And yes, for bitrot correction you need redundancy (at least a zmirror or a btrfs raid1). But since there always should be a backup, being able to detect data corruption already helps since then you can restore damaged files from backup.
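The difference between detecting and correcting corruption can be illustrated with a toy model. This is only a sketch and not how btrfs or ZFS are implemented: it uses CRC32 from Python's standard library as a stand-in checksum, and `flip_bit` and `read_block` are hypothetical helpers made up for the illustration.

```python
import zlib


def checksum(block: bytes) -> int:
    # CRC32 from the standard library, used here only as a stand-in checksum.
    return zlib.crc32(block)


def flip_bit(block: bytes, bit_index: int) -> bytes:
    """Return a copy of block with one bit inverted, simulating silent corruption."""
    corrupted = bytearray(block)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


def read_block(copies: list[bytes], stored_checksum: int) -> bytes:
    """Return the first copy whose checksum matches; raise if none does."""
    for copy in copies:
        if checksum(copy) == stored_checksum:
            return copy
    raise IOError("checksum mismatch on every copy: restore from backup")


original = b"holiday-photo.jpg contents"
stored = checksum(original)
damaged = flip_bit(original, 5)

# Single drive: the corruption is detected but cannot be repaired.
try:
    read_block([damaged], stored)
    single_copy_result = "ok"
except IOError:
    single_copy_result = "detected, not repairable"

# Mirror: the damaged copy is skipped and the intact second copy is returned.
mirror_result = read_block([damaged, original], stored)

print(single_copy_result)
print(mirror_result == original)
```

With a single copy, the best possible outcome is an error that tells you to go to the backup. With a second copy, the read can be served from the one that still matches its checksum.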

On the other hand, what’s on today’s HDDs is mostly junk anyway (movies, TV series and such stuff), and the majority of media file formats are pretty robust when it comes to internal data corruption (speaking of some visual artifacts if bits flipped inside the file). As such, simply being able to get an idea whether there’s data corruption or not can help you with decisions (good practice is to run scrubs regularly, and if the count of detected checksum mismatches increases you know this disk is about to fail soon).

My use case is mostly JPEGs and I doubt 0.86% of flipped bits is already deadly, so I’ll think about it some more, but I’ve already decided that, as much as I don’t want to invest in disks anymore, I’d better go for an HGST for now.

Mainly I’m disappointed that our perceived 0.0%, as stated in your post above, is not true.

RPi is good enough for most people out there. Say you’re streaming ripped or downloaded pirate movies. The playback only requires 1 to 10 Mbps. 20 MB/s is actually 160 Mbps. So the network can easily support up to 16x rewind speed or multiple frontends for pirate videos. Many users have RPi both as a backend and as a Kodi frontend. Works just fine and provides excellent quality 1080p video with full HDMI CEC controls.
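The unit conversion behind that estimate is easy to check: one byte is eight bits, so 20 MB/s corresponds to 160 Mbps. The sketch below assumes the 10 Mbps upper bound per stream mentioned in the comment; the function name is illustrative.

```python
def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (1 byte = 8 bits)."""
    return mb_per_s * 8


throughput_mbps = mbytes_to_mbits(20)

# Number of 10 Mbps streams (or the playback speed multiple for one stream)
# that fit into that throughput, ignoring protocol overhead.
streams = int(throughput_mbps // 10)

print(throughput_mbps, streams)
```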

HDMI CEC isn’t implicitly supported. For example Allwinners seem to require fairly recent 4.14 to 5.2 kernel. LTS kernel users depend on 4.4, 4.9, 4.14, or 4.19. Some competing hardware like ODROID C1 had broken hardware so they released C1+ with real CEC support. Some more recent hardware got decent support only recently. So plenty of reasons to pick even the oldest RPi instead of its competition.

This is getting fun, our RPi fanboy is now promoting piracy and suggesting that many RPi users probably are like him. On this point he might be right, as clueless people also tend not to respect laws nor copyright and to find this normal. Poor Jerry.

ARM11 instead of Allwinner? Seriously Jerry?!

Better to buy this, while on sale at £56.57 inc p&p. Beats RPI Tart.

https://www.geekbuying.com/item/A95X-MAX-S905X2-Android-8-1-4GB-64GB-TV-Box-411536.html

How is the 4k playback, 4GB memory, 64GB ROM and Android on the RPI tart?

64GB isn’t a lot in 2019. Raspberries (like other boards with SDHC support) can handle even 1TB microSD. If you really need a lot of space, e.g. when hosting complex Docker compose scenarios, these large SD cards might suffice.

> Raspberries (like other boards with SDHC support) can handle even 1TB microSD

SDHC is limited to 32GB, most probably you’re talking about SDXC instead (up to 2TB). Doesn’t matter that much since all Raspberries only support 3.3V supply voltage and therefore the so called ‘High Speed’ (HS) mode limiting SD card access to 50MHz which limits sequential transfer speeds to 25 MB/s and also ruins random I/O performance.

Superior SBC able to switch voltage from 3.3V to 1.8V if necessary are UHS-I/SDR104 capable and allow for much higher SD card performance (both sequential and random I/O).
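The 25 MB/s ceiling follows from the bus clock if one assumes the usual 4-bit SD data bus with one transfer per clock cycle. Both the bus width and the 208 MHz clock for SDR104 are assumptions taken from the SD specification rather than from the comment above, and the results are theoretical interface limits, not measured card speeds.

```python
def sd_bus_mb_per_s(clock_mhz: float, bus_width_bits: int = 4) -> float:
    """Theoretical SD bus throughput in MB/s, one transfer per clock cycle."""
    return clock_mhz * bus_width_bits / 8


# High Speed mode at 3.3V signalling, as on the Raspberry Pi boards discussed.
high_speed = sd_bus_mb_per_s(50)

# UHS-I SDR104 at 1.8V signalling; 208 MHz is assumed from the SD specification.
sdr104 = sd_bus_mb_per_s(208)

print(high_speed, sdr104)
```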

Rule of thumb: using any Raspberry Pi for anything related to storage access or networking is a mistake. There are so many far better SBC variants out there.

Yep, HC2 does it pretty well. I have 3 of them serving 10 TB each over Samba. The first one is the “default” one. The two others are CC backups syncing with rsync via cron. Each consistently provides 100+ MB/s when transferring large files (e.g. VMware images). 3 HC2 and disks dying at the same time? Nope, short of natural disaster.

As long as you don’t have them hooked to the same power supply, …

UPS(es).