The challenge with TinyML is to extract the maximum performance/efficiency at the lowest footprint for AI workloads on microcontroller-class hardware. The TinyML-CAM pipeline, developed by a team of machine learning researchers in Europe, demonstrates what’s possible to achieve on relatively low-end hardware with a camera.

More specifically, they reached over 80 FPS image recognition on the sub-$10 ESP32-CAM board, with the open-source TinyML-CAM pipeline taking only about 1 kB of RAM. It should work on other MCU boards with a camera, and training does not seem complex since we are told it takes around 30 minutes to implement a customized task.

The researchers note that solutions like TensorFlow Lite for Microcontrollers and Edge Impulse already enable the execution of ML workloads on MCU boards using Neural Networks (NNs). However, those usually need quite a lot of memory, between 50 and 500 kB of RAM, and take 100 to 600 ms for inference, forcing developers to select low-complexity and/or low-accuracy NNs.
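To put those numbers side by side, the short sketch below converts between frame rate and per-frame latency. It only uses the figures quoted in this article (80 FPS, 100 to 600 ms); the helper names are made up for illustration, and the two figures may not cover exactly the same processing stages, so treat the comparison as a rough guide.

```python
def ms_per_frame(fps):
    """Time budget per frame, in milliseconds, for a given frame rate."""
    return 1000.0 / fps


def fps_from_ms(latency_ms):
    """Upper bound on frame rate if each frame takes latency_ms to process."""
    return 1000.0 / latency_ms


print(ms_per_frame(80))             # 12.5 (ms per frame at 80 FPS)
print(fps_from_ms(100))             # 10.0 (FPS at 100 ms per inference)
print(round(fps_from_ms(600), 2))   # 1.67 (FPS at 600 ms per inference)
```

In other words, 80 FPS leaves about 12.5 ms per frame, while a 100 to 600 ms inference caps the rate at roughly 1.7 to 10 FPS.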

So the researchers decided to use more efficient non-NN algorithms (such as Decision Tree and SVM), leveraging their “Eloquent Arduino” libraries, an alternative to TFLite for Microcontrollers, to design the TinyML-CAM pipeline, which works in four stages:

- Data collection through a camera server (160×120 resolution) and MjpegCollector, which asks users for a class name and collects image frames for a given amount of time until the user exits

- Feature Extraction in four steps:

  - Convert the collected images from RGB to gray

  - Run the Histogram of Oriented Gradients (HOG) feature extractor to output feature vectors (uses lower-resolution 40×30 images to speed up the task)

  - Run Pairplot Visualization to get a visual understanding of how informative the extracted features are

  - Run the Uniform Manifold Approximation and Projection (UMAP) dimensionality reduction algorithm to take a feature vector and compress it to length 2

- Classifier Training

- Porting to C++: convert the HOG component and the classifier (Pairplot) to C++ code, or more exactly a header file (.h)
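To give a feel for the first two feature-extraction steps, here is a minimal pure-Python sketch of grayscale conversion followed by a simplified HOG. It is not the researchers' code or the Eloquent Arduino implementation: the function names, the 10-pixel cell size, the 9 orientation bins, and the per-cell normalisation (instead of HOG's usual block normalisation) are all assumptions chosen to keep the example short.

```python
import math


def rgb_to_gray(pixels):
    """Convert rows of (r, g, b) tuples to rows of luma values (BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in pixels]


def hog_features(gray, cell=10, bins=9):
    """Simplified HOG: one unsigned-orientation histogram per cell, L2-normalised per cell."""
    h, w = len(gray), len(gray[0])
    features = []
    for cy in range(0, h - cell + 1, cell):
        for cx in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for y in range(cy, cy + cell):
                for x in range(cx, cx + cell):
                    # Central differences, clamped at the image borders
                    gx = gray[y][min(x + 1, w - 1)] - gray[y][max(x - 1, 0)]
                    gy = gray[min(y + 1, h - 1)][x] - gray[max(y - 1, 0)][x]
                    mag = math.hypot(gx, gy)
                    # Unsigned orientation in [0, 180) degrees
                    angle = math.degrees(math.atan2(gy, gx)) % 180.0
                    hist[int(angle / (180.0 / bins)) % bins] += mag
            # Avoid dividing by zero for completely flat cells
            norm = math.sqrt(sum(v * v for v in hist)) or 1.0
            features.extend(v / norm for v in hist)
    return features


# Synthetic 40x30 frame: left half black, right half white (one vertical edge)
frame = [[(0, 0, 0) if x < 20 else (255, 255, 255) for x in range(40)] for _ in range(30)]
features = hog_features(rgb_to_gray(frame))
print(len(features))  # 12 cells x 9 bins = 108
```

At 40×30 pixels the feature vector stays short (108 values with these assumed settings), which is consistent with the idea of working on downsized images to keep the task fast.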

The header file can then be added to an Arduino sketch, and the program compiled and run on the board. You’ll find the source code, instructions to install it on ESP32 hardware, and a demo video on GitHub.
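The idea of turning a trained classifier into a header file can be illustrated with a small generator. The sketch below is an assumption-laden illustration, not the exporter used by the researchers: the dictionary-based tree format, the `tree_to_cpp` and `make_header` helpers, and the hand-written example tree are all hypothetical.

```python
def tree_to_cpp(node, indent=1):
    """Recursively emit nested if/else C++ for a decision tree stored as dicts."""
    pad = "    " * indent
    if "label" in node:  # leaf node: return the class index
        return f"{pad}return {node['label']};\n"
    threshold = repr(float(node["threshold"]))  # e.g. 0.5 -> '0.5'
    return (
        f"{pad}if (x[{node['feature']}] <= {threshold}f) {{\n"
        + tree_to_cpp(node["left"], indent + 1)
        + f"{pad}}} else {{\n"
        + tree_to_cpp(node["right"], indent + 1)
        + f"{pad}}}\n"
    )


def make_header(tree, name="predict"):
    """Wrap the generated branches in a header-only inline C++ function."""
    return (
        "#pragma once\n\n"
        "// Auto-generated classifier: maps a feature vector to a class index\n"
        f"inline int {name}(const float *x) {{\n"
        + tree_to_cpp(tree)
        + "}\n"
    )


# Hypothetical hand-written tree over two features and three classes
tree = {
    "feature": 0, "threshold": 0.5,
    "left": {"label": 0},
    "right": {
        "feature": 1, "threshold": 0.25,
        "left": {"label": 1},
        "right": {"label": 2},
    },
}

header = make_header(tree)
print(header)
```

Because the output is plain nested `if`/`else` statements with no runtime or tensor arena, a classifier exported this way needs very little RAM, which helps explain how a non-NN pipeline can fit in about 1 kB.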

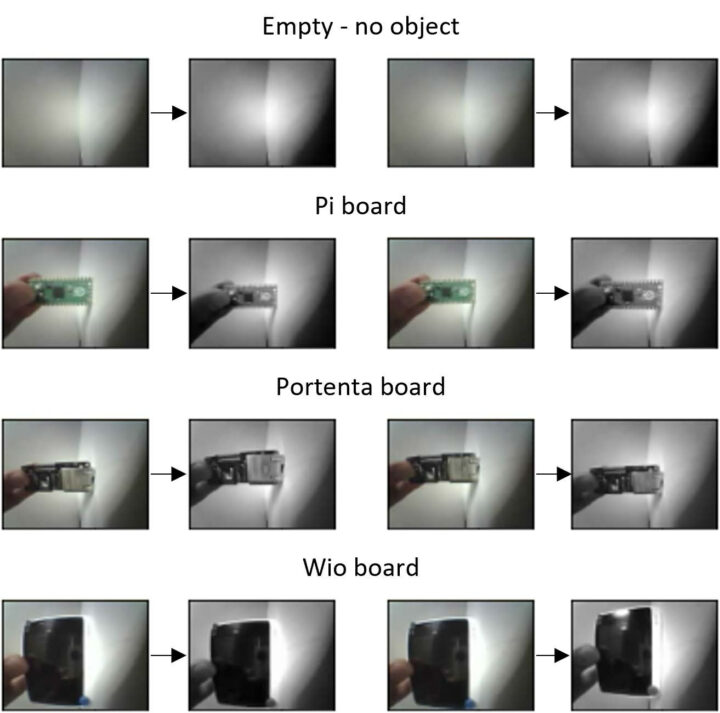

To test the solution, they trained the ESP32-CAM to recognize a Raspberry Pi Pico, an Arduino Portenta H7, or a Wio Terminal. Performance is great for this type of board, and the footprint is really small, but the researchers note that the Portenta and Pi Pico boards were often mislabelled during the Pairplot analysis, and expect this issue to be rectified by improving the dataset quality.

Besides having a look at the source code on GitHub, you’ll also find additional information in a short 4-page research paper.

Via Hackster.io

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Any machine-learning/data-scientist experts out there? I’m having trouble wrapping my head around the basics. For example, for image classification, how is machine learning preferred/different from OpenCV? Are there certain applications/data-sets where certain tools would work better than others? For example, is it true nearly all the popular libraries use supervised learning (correct input/output pairs must be collected), and none use unsupervised learning (raw data with no classification)? And does the training data always have to be captured on the device that will run the algorithm? Also, I understand you have to feed the collected data to a library (YOLOv5, EloquentTinyML, Node-Red, tensorflow…

I’m not a data scientist either, but the only frameworks I’m aware of are C/C++, with convenient bindings to Python and others. OpenCV can make use of a variety of detection algorithms – not just ML – and that gives it good performance. As for your answer re: output, you get a set of likely answers with float weights usually in a range of around 0.4 to 0.99, and you filter that for categories you were interested in. For example you might give it a photo of a car lot. It might return Car 0.9 Road 0.9 Window 0.9 Truck…

OpenCV uses predefined features/algorithms, so you are supposed to have a known model (the one you set up using OpenCV). Machine learning is a black box: you put data (your images) in it, and it outputs something (your classification vector: is it a cat or a dog or …). It learns because you tell it that image A is the image of a dog and image B is a cat…. Inside, groups of neurons get specialized in specific features during training. But you can never know which neuron will fire for which feature (and you don’t care). Basically, if you…