EdgeCortix has just announced its SAKURA-II Edge AI accelerator, built on the company's second-generation Dynamic Neural Accelerator (DNA) architecture. It delivers up to 60 TOPS (INT8) in an 8 W power envelope and is suitable for complex generative AI tasks such as Large Language Models (LLMs), Large Vision Models (LVMs), and multi-modal transformer-based applications at the edge.

Besides the AI accelerator itself, the company designed a range of M.2 modules and PCIe cards with one or two SAKURA-II chips, delivering up to 120 TOPS with INT8 and 60 TFLOPS with BF16, to enable generative AI in legacy hardware with a spare M.2 2280 socket or PCIe x8/x16 slot.

SAKURA-II Edge AI accelerator

SAKURA-II key specifications:

- Neural Processing Engine – DNA-II second-generation Dynamic Neural Accelerator (DNA) architecture

- Performance

  - 60 TOPS (INT8)

  - 30 TFLOPS (BF16)

- DRAM – Dual 64-bit LPDDR4x (8GB, 16GB, or 32GB on board)

- DRAM Bandwidth – 68 GB/sec

- On-chip SRAM – 20MB

- Compute Efficiency – Up to 90% utilization

- Power Consumption – 8W (typical)

- Package – 19mm x 19mm BGA

- Temperature Range – -40°C to 85°C
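
As a rough sanity check on the figures above, the sketch below derives the power efficiency, the throughput at the quoted utilization, and the peak DRAM bandwidth implied by a dual 64-bit interface. The 4266 MT/s transfer rate is an assumption (a common LPDDR4x speed grade), not a figure from EdgeCortix; the other inputs come from the list above.

```python
# Back-of-the-envelope checks on the SAKURA-II spec sheet.
INT8_TOPS = 60.0          # peak INT8 throughput (from the spec list)
TYPICAL_POWER_W = 8.0     # typical chip power (from the spec list)
UTILIZATION = 0.90        # "up to 90%" compute utilization (from the spec list)
BUS_WIDTH_BITS = 2 * 64   # dual 64-bit LPDDR4x interface (from the spec list)
ASSUMED_MTPS = 4266       # ASSUMPTION: LPDDR4x-4266 speed grade


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by typical power."""
    return tops / watts


def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: float) -> float:
    """Bytes moved per transfer times transfers per second, in GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s / 1000  # MB/s -> GB/s


efficiency = tops_per_watt(INT8_TOPS, TYPICAL_POWER_W)
effective_tops = INT8_TOPS * UTILIZATION
bandwidth = peak_bandwidth_gb_s(BUS_WIDTH_BITS, ASSUMED_MTPS)

print(f"{efficiency:.1f} TOPS/W peak")
print(f"{effective_tops:.0f} TOPS at 90% utilization")
print(f"{bandwidth:.1f} GB/s peak DRAM bandwidth")
```

Under that speed-grade assumption, the computed bandwidth lands close to the quoted 68 GB/sec.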

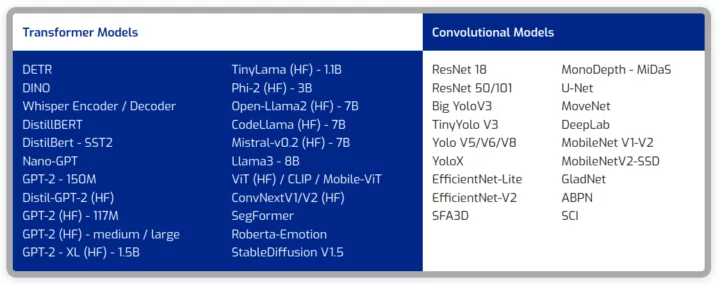

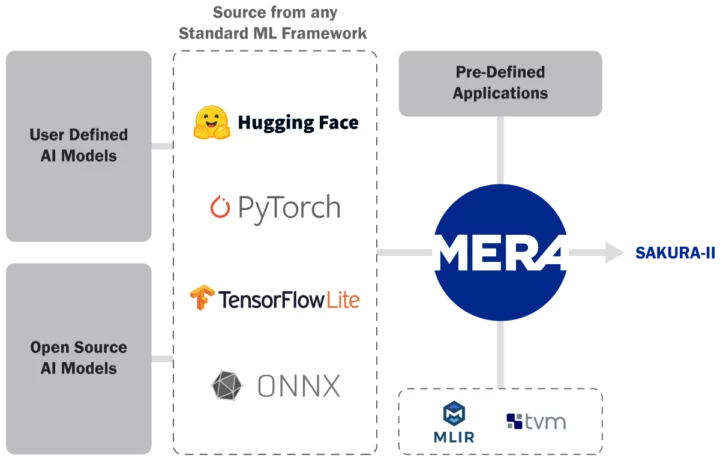

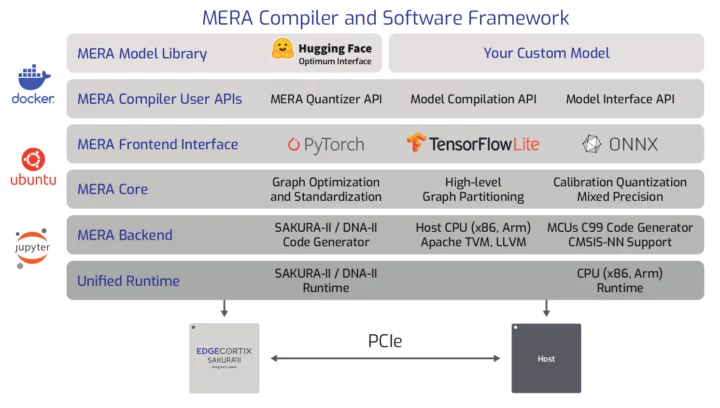

The SAKURA-II platform is programmable with the MERA software suite featuring a heterogeneous compiler platform, advanced quantization, and model calibration capabilities. The software suite natively supports development frameworks such as PyTorch, TensorFlow Lite, and ONNX. It also integrates with the MERA Model Library, interfacing with Hugging Face Optimum, to offer a large range of the latest transformer models such as Llama-2 or Stable Diffusion, and convolutional models such as Yolo V8.
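
To give a feel for what quantization with calibration means in general, here is a minimal sketch of symmetric per-tensor INT8 quantization using max-abs calibration. It is a generic textbook scheme written in plain Python, not the MERA API or EdgeCortix's algorithm; the function names and sample values are hypothetical.

```python
# Generic symmetric per-tensor INT8 quantization with max-abs calibration.
# ASSUMPTION: illustrative only; this is not the MERA API or its algorithm.
from typing import Iterable, List


def calibrate_scale(calibration_batches: Iterable[List[float]]) -> float:
    """Pick a scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(x) for batch in calibration_batches for x in batch)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: List[float], scale: float) -> List[int]:
    """Round to the nearest INT8 step and clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q_values: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [q * scale for q in q_values]


# Hypothetical activation samples used only for calibration
calibration_data = [[0.5, -1.27, 0.02], [1.0, -0.3, 0.64]]
scale = calibrate_scale(calibration_data)

# 2.0 lies outside the calibrated range, so it saturates at the clamp limit
q = quantize([0.5, -1.27, 2.0], scale)
restored = dequantize(q, scale)
print(scale, q, restored)
```

The saturated value shows why calibration data should be representative: anything beyond the observed range is clipped.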

SAKURA-II M.2 and PCIe accelerators

EdgeCortix can provide the SAKURA-II as a standalone device as described above, but the company has also been working on two M.2 modules with a single chip and 8GB or 16GB DRAM capacity, and single and dual-device low-profile PCIe cards.

M.2 SAKURA-II modules’ key features:

- DRAM

  - 8GB (2x banks of 4GB LPDDR4) OR

  - 16GB (2x banks of 8GB LPDDR4)

- Host Interface – PCIe Gen 3.0 x4

- Peak Performance – 60 TOPS with INT8, 30 TFLOPS with BF16

- Module Power – 10W (typical)

- Dimensions – M.2 Key M 2280 module (22mm x 80mm)

Both the 8GB and 16GB models have the same performance and typical power consumption, so selecting one over the other is just a matter of finding out whether the model will fit into 8GB of RAM or requires more.
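
A first-order way to make that choice is to estimate weight storage from the parameter count and data type. The sketch below does this under loud assumptions: it counts weights only, and the 20% headroom for activations, KV cache, and runtime buffers is a placeholder rather than a measured figure. The function names are hypothetical.

```python
# Rough check of whether a model's weights fit in module DRAM.
# ASSUMPTIONS: weights only; the 20% headroom for activations, KV cache,
# and runtime buffers is a placeholder, not a measured figure.
BYTES_PER_PARAM = {"int8": 1, "bf16": 2}
MODULE_DRAM_GB = (8, 16)  # the two M.2 options


def weights_gb(params_billions: float, dtype: str) -> float:
    """Weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def smallest_module_gb(params_billions, dtype, headroom=0.20):
    """Return the smallest DRAM option that fits, or None if neither does."""
    needed = weights_gb(params_billions, dtype) * (1 + headroom)
    for capacity in MODULE_DRAM_GB:
        if needed <= capacity:
            return capacity
    return None


# Example: a model in the 7-billion-parameter class
for dtype in ("int8", "bf16"):
    print(dtype, weights_gb(7, dtype), "GB ->", smallest_module_gb(7, dtype))
```

Real memory use depends on the runtime, sequence length, and batch size, so treat the output as a starting point for sizing rather than a guarantee.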

PCIe cards’ specifications:

- Host Interface – PCIe Gen 3.0 x8

- Single-chip model

  - DRAM Memory – 16GB (2x banks of 8GB LPDDR4)

  - Peak Performance – 60 TOPS with INT8, 30 TFLOPS with BF16

  - Card Power – 10W (typical)

- Dual-chip model

  - DRAM Memory – 32GB (2x banks of 16GB LPDDR4)

  - Peak Performance – 120 TOPS with INT8, 60 TFLOPS with BF16

  - Card Power – 20W (typical)

- Form Factor – PCIe low profile, single slot

- Included accessories – Half-height and full-height brackets and active or passive heat sink

EdgeCortix is taking pre-orders for the M.2 modules and PCIe cards for delivery in H2 2024 with the following pricing:

- M.2 8GB – $249

- M.2 16GB – $299

- PCIe single – $429

- PCIe dual – $749
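
For a quick comparison across the four options, the sketch below computes dollars per peak INT8 TOPS and peak TOPS per watt, using only the prices, peak performance, and typical power figures quoted in this article. Peak TOPS is not a benchmark, so this is a rough guide only.

```python
# Price and power per peak INT8 TOPS for each pre-order option.
# Inputs are the list prices and typical power figures quoted in this article.
PRODUCTS = {
    # name: (price_usd, peak_int8_tops, typical_power_w)
    "M.2 8GB": (249, 60, 10),
    "M.2 16GB": (299, 60, 10),
    "PCIe single": (429, 60, 10),
    "PCIe dual": (749, 120, 20),
}


def usd_per_tops(price_usd, tops):
    """List price divided by peak INT8 throughput."""
    return price_usd / tops


def tops_per_watt(tops, watts):
    """Peak INT8 throughput divided by typical module or card power."""
    return tops / watts


for name, (price, tops, watts) in PRODUCTS.items():
    print(f"{name:12s} ${usd_per_tops(price, tops):.2f}/TOPS  "
          f"{tops_per_watt(tops, watts):.1f} TOPS/W")
```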

We are seeing more and more M.2 and PCIe Edge AI accelerators, with the most popular (based on news coverage) being the Google Coral Edge TPU, Intel Myriad X, and Hailo-8 modules. There are others, such as the Axelera AI module, which is the most impressive on paper, but it’s always difficult to compare different accelerators due to the lack of a standardized benchmark.

With silicon vendors now integrating powerful AI accelerators into SoCs, including the new ones from Intel and AMD, it’s unclear whether this type of AI accelerator has a long life ahead of it, unless it can be combined with low-end processors. Only time will tell.

You’ll find more details about the SAKURA-II chip and module on the product page and in the press release.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


The 60 TOPS level will be old hat, especially at these prices. The XDNA2 NPU in the entire Zen 5 APU family should be hitting at least 45 TOPS, possibly up to 60 TOPS for Strix Halo. Intel’s Lunar Lake will hit 45 TOPS, as will Qualcomm’s Snapdragon X Elite. We may see 100 TOPS (NPU only) in mainstream chips a generation or two later. I think these NPUs can benefit from as much DRAM as you can throw at them, enabling large models.

For retrofitting an older computer, most people would go for something like an RTX 3060 if they aren’t planning to use it for AI all the time. These accelerators should be more power efficient than something like an A2000, with more dedicated memory too.

The question is why pair it up with an old processor when something like this can cost like a new one?

I believe that in the near future, all the major vendors will have NPUs integrated in them. ARM vendors already had a headstart and AMD/Intel are starting just now but it should be soon.

TOPS also doesn’t mean much. What happens if your network has some operations that require FP32 or FP64 (you would need to handle those on the CPU)? What happens if the operation isn’t particularly suited to the architecture? And so on.

What should really matter is model benchmarks.

Also don’t forget that you can use integrated GPUs to run models, and vendors often add the iGPU’s FLOPS/TOPS to the total. Something like a 7940HS has 8.9 TFLOPS or so (can’t really use that in games, but in AI it should be doable), which would translate into almost 36 TOPS with INT8 operations, and then you add in the integrated NPU and so on.

Maybe all resources can be leveraged at once but I think NPUs will start to take on the brunt of the work as they are being boosted massively to hit the numbers Microsoft wants. They can outclass the iGPU this year. Example:

techpowerup.com/316436/amd-ryzen-8040-series-hawk-point-mobile-processors-announced-with-a-faster-npu

Phoenix = 10 TOPS NPU + 23 TOPS CPU/GPU

Hawk Point = 16 TOPS NPU + 23 TOPS CPU/GPU

Strix Point = 45-50 TOPS NPU + ??? TOPS CPU/GPU

It would be reasonable to expect no less than 30 non-NPU TOPS in Strix Point, from CU count going from 12 to 16, and CPU cores from 8 to 12. But the NPU will likely be faster and more efficient, having been quintupled from XDNA1 in Phoenix.

If the NPU were to be doubled for an XDNA3, the iGPU is not catching up to that in the mainstream APUs at least. Strix Halo and other mega APUs could be another story.

Intel is likely to put NPUs in their mainstream desktop CPU lineup (Arrow Lake) before AMD does, but they are going to be everywhere within about 3 years. The latest consumer desktop GPUs can deliver a lot more than 120 TOPS, but at dramatically worse power efficiency as far as I can tell. Will there be a market for small discrete accelerators? We know the large accelerators are in demand, Nvidia is selling AI pickaxes and shovels as fast as they can be made, and moving to a 1-year cadence for new releases.

Well, I think your numbers are a bit wrong.

Phoenix can deliver 33.4 TOPS INT8 from the GPU alone (i.e. it can do 8.2 TFLOPS and INT8 is x4).

The CPU can probably drive something like 4~5 TOPS INT8 (it depends on how AVX clocks).

Going from 12 CUs to 16 CUs from Phoenix to Strix while keeping the same clocks means you could get something like 45 TOPS; if you add a clock bump, that is even better.
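
The CU-scaling estimate in that comment can be reproduced with a few lines. This sketch assumes identical clocks and perfectly linear scaling with CU count, as the comment supposes; the function name is hypothetical and the starting figure is the one quoted in the comment.

```python
# Linear scaling of iGPU INT8 throughput with compute-unit (CU) count.
# ASSUMPTION: same clocks and perfect scaling, as the comment supposes.
def scale_tops(base_tops: float, base_cus: int, new_cus: int) -> float:
    """Scale a throughput figure in proportion to the CU count."""
    return base_tops * new_cus / base_cus


phoenix_gpu_tops = 33.4  # Phoenix iGPU figure quoted in the comment
strix_estimate = scale_tops(phoenix_gpu_tops, base_cus=12, new_cus=16)
print(f"{strix_estimate:.1f} TOPS")
```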

For desktop GPUs, they will vary a lot, a 3080 can do 238 int8 TOPs in the tensor cores without sparsity or 476 int8 TOPs sparse. If Nvidia has dp4a or similar instructions in the vector units, they could probably get another 120 TOPs from that.

How much power is used will depend a lot, generally when GPUs run in compute mode they will actually spend significantly less power because they aren’t using all those fancy ASIC graphical blocks. If you are also doing inference once in a while it might also not matter.

Obviously, if you have a camera feed that you need to run AI models on at 30 frames per second, then power-wise, GPUs will be a very bad solution.

Well, what I really mean is that given the cost, the additional power, and so on, it’s not much of an advantage, if any.

Also don’t forget that while this says 8W, that is per SAKURA-II chip, and it isn’t counting the memory, the supporting components, or the PCIe root in your SoC.

Need to have support for OneAPI:

https://www.oneapi.io

I can’t add a whole 100W x86 APU with an NPU to a drone, but I can certainly add one of these to the M.2 port of the RK3588 or RPi 5 SBC on it.

The SAKURA-II M.2 AI accelerator module is now working on Arm platforms and has been tested on the Raspberry Pi 5 and a Rockchip RK3588 embedded PC from AETINA.

https://www.edgecortix.com/en/press-releases/edgecortixs-sakura-ii-ai-accelerator-brings-low-power-generative-ai-to-raspberry-pi-5-and-other-arm-based-platforms