Yesterday, news surfaced about a “bug” in Intel processors that can be fixed at the operating system level at the cost of decreased performance, from a typical and barely noticeable 5% hit to a more significant 30% hit for some specific tasks. As we discussed yesterday, I/O-intensive tasks are the most impacted by the changes.

While Intel (and Arm) are impacted, AMD claims not to be. The issue was reported by major news outlets and likely impacted the stock prices of the companies, with Intel stock losing 3.39% and AMD stock gaining 5.19%. So every company felt the need to respond, starting with Intel’s response to the security research findings:

Recent reports that these exploits are caused by a “bug” or a “flaw” and are unique to Intel products are incorrect. Based on the analysis to date, many types of computing devices — with many different vendors’ processors and operating systems — are susceptible to these exploits.

…

Check with your operating system vendor or system manufacturer and apply any available updates as soon as they are available.

…

Intel believes its products are the most secure in the world and that, with the support of its partners, the current solutions to this issue provide the best possible security for its customers.

This looks like damage limitation, and I guess more info will be released once all the fixes are out.

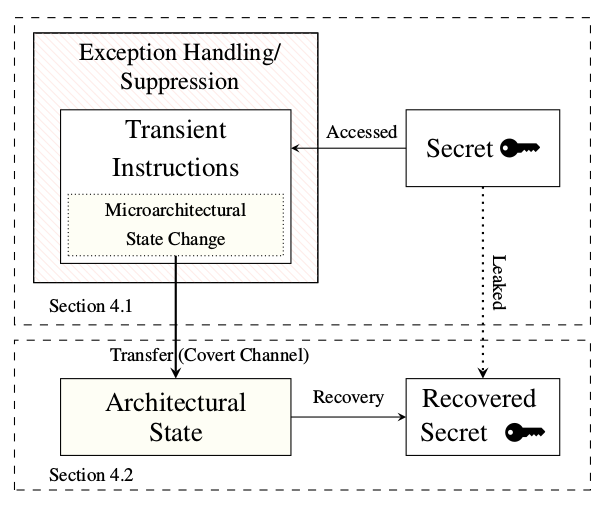

But the most detailed report is by Google, since Project Zero found three variants of two vulnerabilities – Meltdown and Spectre – related to speculative execution, a technique that predicts (and runs) likely future instructions in order to boost performance:

We have discovered that CPU data cache timing can be abused to efficiently leak information out of mis-speculated execution, leading to (at worst) arbitrary virtual memory read vulnerabilities across local security boundaries in various contexts.

Variants of this issue are known to affect many modern processors, including certain processors by Intel, AMD and ARM. For a few Intel and AMD CPU models, we have exploits that work against real software. We reported this issue to Intel, AMD and ARM on 2017-06-01.

The three variants:

- Variant 1: bounds check bypass (CVE-2017-5753)

- Variant 2: branch target injection (CVE-2017-5715)

- Variant 3: rogue data cache load (CVE-2017-5754)

Variants 1 & 2 are referred to as Spectre, and variant 3 as Meltdown, with the latter easier to exploit.
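To make the idea behind variant 1 a bit more concrete, here is a toy Python model of a bounds check bypass. It is only a sketch under loud assumptions: nothing here speculates or measures real cache timing, and `ToyCPU`, `victim_read`, `leak_byte` and the 64-byte line size are all made up for illustration. It just shows why a read that is rolled back can still leave a trace that an attacker can observe.

```python
"""Toy model of a bounds check bypass (Variant 1) leak.

Pure simulation: no real speculation or cache timing is involved.
"""

LINE = 64  # assumed cache line size, only used to space out probe slots


class ToyCPU:
    def __init__(self, memory, array_len):
        self.memory = memory        # flat "address space": public array, then secret
        self.array_len = array_len  # number of bytes the victim may legally read
        self.cached = set()         # probe-array slots currently "in cache"

    def victim_read(self, index, mispredict=False):
        """Bounds-checked read.

        With mispredict=True the body runs "speculatively" even when the
        check fails, and its result is then thrown away.
        """
        in_bounds = index < self.array_len
        if in_bounds or mispredict:
            value = self.memory[index]
            # Dependent load: touches probe[value * LINE] and pulls it into cache.
            self.cached.add(value * LINE)
            if in_bounds:
                return value  # architecturally visible result
        # Rolled back: no value is returned, but the cache state is kept.
        return None

    def probe_is_fast(self, slot):
        """Stand-in for timing a load: cached slots count as fast."""
        return slot in self.cached


def leak_byte(cpu, index):
    """Attacker: flush, trigger a mis-speculated read, then find the fast slot."""
    cpu.cached.clear()
    cpu.victim_read(index, mispredict=True)
    hits = [v for v in range(256) if cpu.probe_is_fast(v * LINE)]
    return hits[0] if len(hits) == 1 else None


public = b"hello"
secret = b"K3y!"
cpu = ToyCPU(public + secret, len(public))
leaked = bytes(leak_byte(cpu, len(public) + i) for i in range(len(secret)))
```

In this model the out-of-bounds call never returns the secret directly, yet `leaked` ends up holding it, because the only thing the attacker looks at is which probe slot became “fast”.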

Yesterday, we learned AMD was not impacted, but Google clearly mentions they could exploit AMD processors too. That’s because AMD is only subject to Spectre. So AMD responded too:

It is important to understand how the speculative execution vulnerability described in the research relates to AMD products, but please keep in mind the following:

- The research described was performed in a controlled, dedicated lab environment by a highly knowledgeable team with detailed, non-public information about the processors targeted.

- The described threat has not been seen in the public domain.

and provided a table showing how AMD processors are impacted:

| | Google Project Zero (GPZ) Research Title | Details |
| --- | --- | --- |
| Variant One | Bounds Check Bypass | Resolved by software / OS updates to be made available by system vendors and manufacturers. Negligible performance impact expected. |
| Variant Two | Branch Target Injection | Differences in AMD architecture mean there is a near zero risk of exploitation of this variant. Vulnerability to Variant 2 has not been demonstrated on AMD processors to date. |
| Variant Three | Rogue Data Cache Load | Zero AMD vulnerability due to AMD architecture differences. |

So it looks like only variant 1 is a potential issue for AMD processors, and operating systems will have to be patched.

Arm published its own response too:

Cache timing side-channels are a well-understood concept in the area of security research and therefore not a new finding. However, this side-channel mechanism could enable someone to potentially extract some information that otherwise would not be accessible to software from processors that are performing as designed and not based on a flaw or bug. This is the issue addressed here and in the Cache Speculation Side-channels whitepaper.

It is important to note that this method is dependent on malware running locally which means it’s imperative for users to practice good security hygiene by keeping their software up-to-date and avoid suspicious links or downloads.

The majority of Arm processors are not impacted by any variation of this side-channel speculation mechanism. A definitive list of the small subset of Arm-designed processors that are susceptible can be found below.

The currently popular Cortex-A7 and Cortex-A53 cores are not impacted at all, but some others are:

| Processor | Variant 1 | Variant 2 | Variant 3 | Variant 3a |
| --- | --- | --- | --- | --- |
| Cortex-R7 | Yes* | Yes* | No | No |
| Cortex-R8 | Yes* | Yes* | No | No |
| Cortex-A8 | Yes (under review) | Yes | No | No |
| Cortex-A9 | Yes | Yes | No | No |
| Cortex-A15 | Yes (under review) | Yes | No | Yes |
| Cortex-A17 | Yes | Yes | No | No |
| Cortex-A57 | Yes | Yes | No | Yes |
| Cortex-A72 | Yes | Yes | No | Yes |
| Cortex-A73 | Yes | Yes | No | No |
| Cortex-A75 | Yes | Yes | Yes | No |

Variant 3a of Meltdown is detailed in the whitepaper linked above, and Arm “does not believe that software mitigations for this issue are necessary”. In the table above, “Yes” means exploitable, but has a mitigation, and “No” means “no problem” :). So only Cortex-A75 is subject to both Meltdown and Spectre exploits, and it’s not in devices yet. Like other companies, Arm will provide a fix for future revisions of their processors.
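For readers who want to query the table rather than eyeball it, here is a small Python sketch that transcribes the rows above into a dictionary. The names `ARM_CORES`, `affected_by` and `spectre_and_meltdown` are made up for this example, and any cell starting with “Yes” (including “Yes*” and “Yes (under review)”) is treated as affected, which is my assumption rather than Arm’s wording.

```python
# Affected Arm cores, transcribed from the table above.
# Tuple order: Variant 1, Variant 2, Variant 3, Variant 3a.
ARM_CORES = {
    "Cortex-R7": ("Yes*", "Yes*", "No", "No"),
    "Cortex-R8": ("Yes*", "Yes*", "No", "No"),
    "Cortex-A8": ("Yes (under review)", "Yes", "No", "No"),
    "Cortex-A9": ("Yes", "Yes", "No", "No"),
    "Cortex-A15": ("Yes (under review)", "Yes", "No", "Yes"),
    "Cortex-A17": ("Yes", "Yes", "No", "No"),
    "Cortex-A57": ("Yes", "Yes", "No", "Yes"),
    "Cortex-A72": ("Yes", "Yes", "No", "Yes"),
    "Cortex-A73": ("Yes", "Yes", "No", "No"),
    "Cortex-A75": ("Yes", "Yes", "Yes", "No"),
}

VARIANTS = ("1", "2", "3", "3a")


def affected_by(variant):
    """Return the cores whose cell for the given variant starts with 'Yes'."""
    col = VARIANTS.index(variant)
    return [core for core, row in ARM_CORES.items() if row[col].startswith("Yes")]


def spectre_and_meltdown():
    """Cores listed for Spectre (variant 1 or 2) and for Meltdown (variant 3)."""
    spectre = set(affected_by("1")) | set(affected_by("2"))
    return sorted(spectre & set(affected_by("3")))


if __name__ == "__main__":
    print("Spectre and Meltdown:", spectre_and_meltdown())
    print("Variant 3a:", affected_by("3a"))
```

Cores that do not appear in the table, such as Cortex-A7 and Cortex-A53, are simply absent from the dictionary.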

Silicon vendors are not the only companies to respond, as operating system vendors will have to issue fixes, and cloud providers are also impacted. Patchsets have been merged into Linux 4.15 as we’ve seen yesterday, Microsoft issued a statement for their Cloud service, Red Hat, Debian and others are working on it, Google listed the products impacted, and even Chrome web browser users need to take action to protect themselves. Android phones with the latest security patch will be protected, bearing in mind that all those Cortex-A53 phones in the wild are not affected at all. It’s worth noting that while Meltdown and Spectre make the news, there are over thirty other critical or high severity vulnerabilities fixed in January that did not get much coverage if any…
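If you want to check what a patched Linux system reports, a minimal Python sketch could look like the one below. It assumes the kernel exposes one status file per issue under `/sys/devices/system/cpu/vulnerabilities`, which is not something covered in this article: older kernels and other operating systems will not have that directory, and the file names and status strings depend on the kernel version. The function name `mitigation_status` is made up for this example.

```python
from pathlib import Path

# Assumption: kernels carrying the mitigation patches expose one status file
# per issue here. The directory is missing on older kernels and non-Linux systems.
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def mitigation_status(base=VULN_DIR):
    """Return {issue name: status string}, or {} if the directory is missing."""
    base = Path(base)
    if not base.is_dir():
        return {}
    status = {}
    for entry in sorted(base.iterdir()):
        if entry.is_file():
            try:
                status[entry.name] = entry.read_text().strip()
            except OSError:
                status[entry.name] = "unreadable"
    return status


if __name__ == "__main__":
    report = mitigation_status()
    if not report:
        print("No vulnerability status exposed by this system")
    for name, state in report.items():
        print(f"{name}: {state}")
```

An empty result only means the kernel does not expose the information, not that the system is safe.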

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


“there are over thirty other critical or high severity vulnerabilities fixed in January that did not get much coverage if any…”

Please, give them some coverage then!

@Anton Fosselius

Well I’ve linked to them. It’s actually very hard to cover security news, unless you dedicate your site and all efforts to them.

Edit: and most of the time, it’s usually boring.

The main problem is generic users have little to no interest in how devices work and their interest fades when reading detail such as above. I guess maybe Security or Virus protection software may try to use the flaw coverage as a sales tool.

It is like car and machinery use: many people use them but are not trained, specialized mechanics. How you educate product users to understand the problem details as well as product designers and those who implement products do is a problem yet to be solved, I suggest.

It’s interesting that ARM claims Cortex-A53 not to be vulnerable but Cortex-A8 to be in 2 cases. Interesting to me since both are in-order dual-issue pipelines IIRC.

“Intel believes its products are the most secure in the world” LOL

Re ‘Intel believes its products.. yada-yada’, let me just leave that here: https://lkml.org/lkml/2018/1/3/797

I’m not as critical as most people seem to be on the subject. Having worked on CPU blocks at university 20 years ago, I find the level of performance that today’s CPUs manage to reach simply tremendous, given that RAM didn’t get faster at all during this time frame and programming practices have become so much worse.

The little secret there was to add caches, then fast caches to offload slow caches, and even faster caches to offload fast caches, and in parallel, in order to be able to keep all this little world busy, deep pipelining was implemented, mandating speculative processing by definition, then immediately branch prediction to limit the amount of pipeline stalls.

It’s already quite difficult to design an in-order, single-issue 5-stage pipeline without delayed slot from scratch. It certainly is even more when it has to support 4 issues, out of order, instruction translation, with 15-20 stages. To make an analogy, stopping an instruction late in the pipeline is similar to patching the binary on the fly when changing just the condition of an “if” block in source code : some would imagine that it’s just a JZ turned to JNZ but that’s wrong, deeply optimized code is completely different and spreads very wide into individual bytes.

I’m not saying all this as an excuse and Linus is right in that they certainly went a bit too far. But with today’s slow RAM (and even caches), you need to go further and further in speculative processing otherwise you end up with many idle cycles. And to add to the joke, keep in mind that today’s languages and programming methodologies encourage abuse of indirect calls everywhere, forcing your code to take a huge hit every 2-3 lines when you don’t do this.

The problem this poses is that such behaviors are not stable in time, they’re optimizations. And if they’re not stable, their variations are measurable, allowing to infer what impacts the behavior (often data). The only sane way to go back to constant execution time is to disable branch prediction and caching, and observe your 3 GHz processor being as fast as your good old 386 at 33 MHz.

So placing the limit between when to speculate and when not really becomes rocket science. Unfortunately long gone are the days when CPUs could be considered reliable (the 6501 was released with critical bugs by the way). And today it requires as complex a brain to decide if an optimization is risky as what is required to do the same in the software world. We all get caught once in a while for having done something unsafe in our code. The same crap happens in CPUs because they’re designed by humans like all software. The difference is that fixes are much harder to deploy in the field unless you possess a 10nm laser to re-etch your CPU at home.

What is sad is that I think this event will mark the end of an era. The era of trustable fast CPUs. We all want the fastest possible CPU given that security is obviously provided. Now we will have to learn to choose a CPU based on the target workload to decide if we want something very very fast (ie for a gaming machine or for a terabit router) where local abuse doesn’t matter or very very safe (for a browser, a server, a satellite or an ATM).

Einstein once said it requires a smarter brain to fix a problem than the one that created it. We seem to have used all available brains to create (and sometimes fix) trouble. Now we’ll have to accept hardware bugs and slow CPUs being a normal part of the landscape, and I would not be surprised if in 10 years we start to see disposable CPUs to deal with upgrades, just like software upgrades have become mandatory now (who still remembers buying MS-DOS on diskettes and never getting a fix since the code was reliable enough?). In 10 years we may very well say “remember when the CPU came soldered in your laptop and you couldn’t replace it to install fixes?”. This will allow half-baked CPUs to be distributed with the same quality as we know with today’s software since they will be fixed in the field. And no, this is not yet the current situation. Just imagine if your CPU hung as often as your browser, we would all be really pissed off.

It’s important to keep all this in mind. Maybe we’ll complain less next time we’re seeing that newer CPUs don’t get much faster.

Intel has posted more details about the issue and mitigations @ https://security-center.intel.com/advisory.aspx?intelid=INTEL-SA-00088

Microsoft have pushed for security updates for Windows 10, 8.1 and 7 SP1, Surface and Surface Book: https://portal.msrc.microsoft.com/en-US/security-guidance/advisory/ADV180002

Apple have made a statement that all Mac, iPhone and iPad devices are affected, but there are no known exploits.

” Intel CEO share dump ‘unrelated’ to processor flaws

An Intel spokeswoman has stated that last November’s sale of shares by Intel CEO Brian Krzanich was ‘unrelated’ to the discovery of the Spectre and Meltdown flaws in Intel processors. ”

ROFLMAO

@willy

That’s an interesting take on the matter. So next time I get a crash ticket filed I could shrug it off with the explanation that the complexity of my code has outgrown my mental capacity to fix bugs?

For most of us who have been in the industry since the 6502 days the growing complexities and associated risks in the processor designs are nothing new. What is new in this entire fiasco is the response of the affected parties: some vendors responded with ‘it’s an issue that needs fixing; here are our affected products, here are the patches’, and other vendors responded with ‘Fake news! NO PUPPET!’

If you want 🙂 I’m also managing a widely deployed opensource project which initially started as the most reliable never failing piece of software yada yada (told by users, never claimed by me). Now as its complexity has increased, we’re seeing more and more complex bugs sometimes requiring two people to understand and fix. Most of the time these bugs will affect only one user in one million and are almost impossible to reproduce. But fixing them gives us long head scratching sessions while trying not to break anything else nor affect performance. Again I’m not providing excuses for what happened. I’m just saying that it’s an unfortunate byproduct of increased products complexity, and that while we’ve known the era of (claimed) bug-free software, then the era of permanently bogus software, we’ve known the era of (claimed) bug-free hardware and we have to be prepared to see permanently bogus hardware. None of us likes this. But software adapted to frequent updates. Hardware will adapt as well. It may take 10 years but it will because there’s no other option to deal with complexity invented by unreliable brains.

BTW I continue to think that having the option to disable TSC in userland would put a strong stop to most if not all of these side-channel attacks, many of which require nanosecond precision.

@willy

I’m afraid that doing my job ‘if I want’ is not part of my job description : ) And neither would it feel right to me, as I truly love what I do, and every challenge that makes my code better is a challenge I welcome. Anyhow, I’m not in security, and the daily problems I have to solve are a tad remote to the current story of the day. Still.

Wrong design decisions are made on a daily basis, by the millions in this industry. Side-channel attacks don’t indicate ‘all is well and right, let’s kill the timers that facilitate that attack’, they indicate a serious design flaw. The normal course of action then is for engineering to backtrack a tad, look around and see where they branched off wrong and what would be the right course of advancement. Otherwise we, collectively, are bound to face plenty of dead-ends and get stuck there forever.

Tested with Windows 10 on Apollo Lake mini PC, and mostly no negative effects in benchmarks, except maybe for random reads on storage.

https://www.cnx-software.com/2018/01/06/intel-apollo-lake-windows-10-benchmarks-before-and-after-meltdown-spectre-security-update/

Oh the joy of updates

Intel’s patches for its processor bugs are themselves buggy.

It is reported that datacentres have been told to delay installing them, while Intel issues patches to patch the patches.

“We are working quickly with these customers to understand, diagnose and address this reboot issue,” said Navin Shenoy, GM of Intel’s data centre group, in the statement. “If this requires a revised firmware update from Intel, we will distribute that update through the normal channels.”

The Wall Street Journal reports that Intel is asking datacentre customers to delay installing patches because the patches have bugs of their own.